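Want to be able to write a piece of machine learning code anytime, anywhere?

Want to process a CSV table without searching the web for a tutorial?

Do you feel shaky on the theory, and even when you know a little, find you cannot write a single line of code in practice and still get by on copying and pasting from tutorials?

You have always known that data has to be cleaned, processed, and split once you have it. But can you write the split from memory in seconds?

About ML: many people say you can learn it well without mastering the math. I won't argue against that, but I find it hard to agree. Either way, it is not the focus of this post.

Let's assume our math stops at elementary school level. Can we still write clean code?

Of course!

Let's go through the ML and DL statements you must know.

To be updated over time…

Note: this post is only for beginners who have some Python programming experience and some experience handling small datasets (the ones bundled with sklearn).

Everything below should be known by heart: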

1. Importing libraries

import numpy as np

import matplotlib.pyplot as plt

# "import pylab as plt" exposes the same plotting functions, but pyplot is the recommended import

import pandas as pd

2. Splitting data

2.1 The most basic method, with no validation set.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size = 0.25, random_state = 0)

3. Data preprocessing

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

4. Importing and training models

4.1 Logistic regression:

from sklearn.linear_model import LogisticRegression

classifier = LogisticRegression()

classifier.fit(X_train, y_train)

y_pred = classifier.predict(X_test)

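---------------------------------------------divider---------------------------------------

Pause for a moment. With the code above you have just solved a binary classification problem. That is the appeal of sklearn, and it is why many people say ML is simple. Multiclass classification and regression problems follow much the same pattern. I paused here only to point out that the code above is a complete template. Now let's carry on memorizing the common statements: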
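To make the template concrete, here is a minimal end-to-end sketch. The original snippets leave X and Y undefined, so this version assumes the breast cancer dataset bundled with sklearn; the accuracy metric is also an addition for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Assumption: use the bundled breast cancer data as X, y
X, y = load_breast_cancer(return_X_y=True)

# Split, then fit the scaler on the training part only
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)
sc = StandardScaler()
X_train = sc.fit_transform(X_train)
X_test = sc.transform(X_test)

# Train and predict
classifier = LogisticRegression()
classifier.fit(X_train, y_train)
y_pred = classifier.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)
print("Test accuracy: %.3f" % accuracy)
```

The scaler is fitted on the training split only and then reused on the test split, which is the same order as in the template above.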

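0. Loading common small datasets

This subsection draws on: https://www.cnblogs.com/nolonely/p/6980160.html

Breast cancer dataset, load_breast_cancer(): a simple, classic dataset for binary classification.

Diabetes dataset, load_diabetes(): a classic dataset for regression. Note that each of its 10 features has already been mean-centered and scaled.

Boston housing dataset, load_boston(): a classic dataset for regression. Note that this loader was removed in scikit-learn 1.2, so it is only available in older versions.

Linnerud fitness dataset, load_linnerud(): a classic dataset for multivariate regression. It contains two small datasets: exercise holds 20 observations of 3 exercise variables (chin-ups, sit-ups, jumps), and physiological holds 20 observations of 3 physiological variables (weight, waist, pulse).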
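As a quick check of the loaders listed above, the sketch below loads three of them and prints their shapes. load_boston is left out because it is not available in recent scikit-learn releases.

```python
from sklearn.datasets import load_breast_cancer, load_diabetes, load_linnerud

cancer = load_breast_cancer()    # binary classification
diabetes = load_diabetes()       # regression
linnerud = load_linnerud()       # multi-output regression

print("breast cancer:", cancer.data.shape, cancer.target.shape)
print("diabetes:", diabetes.data.shape, diabetes.target.shape)

# For Linnerud, .data holds the exercise variables and .target the physiological ones
print("linnerud:", linnerud.data.shape, linnerud.target.shape)
print(linnerud.feature_names, linnerud.target_names)
```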

from sklearn.datasets import load_iris

# Load the dataset

iris = load_iris()

iris.keys() # e.g. dict_keys(['target', 'DESCR', 'data', 'target_names', 'feature_names']); newer versions return additional keys

# Number of samples and number of features

n_samples, n_features = iris.data.shape

print("Number of samples:", n_samples) # Number of samples: 150

print("Number of features:", n_features) # Number of features: 4

# The first sample

print(iris.data[0]) #[ 5.1 3.5 1.4 0.2]

print(iris.data.shape) #(150, 4)

print(iris.target.shape) #(150,)

print(iris.target)

1. Importing libraries

1.1 Fundamentals

import numpy as np

import matplotlib.pyplot as plt

# "import pylab as plt" exposes the same plotting functions, but pyplot is the recommended import

import pandas as pd

import seaborn as sns

1.2 Commonly used model_selection imports

from sklearn.model_selection import learning_curve

from sklearn.model_selection import ShuffleSplit

from sklearn.model_selection import GridSearchCV

1.3 Other commonly used imports:

import warnings

warnings.filterwarnings("ignore")

from sklearn import metrics

import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23

from sklearn.metrics import f1_score

y_true = [0, 1, 2, 0, 1, 2]

y_pred = [0, 2, 1, 0, 0, 1]

f1_score(y_true, y_pred, average='macro')

f1_score(y_true, y_pred, average='micro')

f1_score(y_true, y_pred, average='weighted')

f1_score(y_true, y_pred, average=None)
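The four average settings differ only in how the per-class F1 scores are combined. The sketch below, which reuses the same toy labels, recomputes the macro and weighted values by hand from the per-class scores so the relationship is visible; the variable names are only for illustration.

```python
import numpy as np
from sklearn.metrics import f1_score

y_true = [0, 1, 2, 0, 1, 2]
y_pred = [0, 2, 1, 0, 0, 1]

# average=None returns one F1 score per class
per_class = f1_score(y_true, y_pred, average=None)

macro = f1_score(y_true, y_pred, average='macro')        # unweighted mean of per-class F1
weighted = f1_score(y_true, y_pred, average='weighted')  # mean weighted by class support
micro = f1_score(y_true, y_pred, average='micro')        # computed from global TP/FP/FN counts

support = np.bincount(y_true)  # number of true samples per class
macro_by_hand = per_class.mean()
weighted_by_hand = np.average(per_class, weights=support)

print(per_class, macro, weighted, micro)
```

For single-label multiclass data like this, the micro-averaged F1 equals plain accuracy (2 of 6 correct here).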

2. Splitting data

2.1 The most basic method, with no validation set.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size = 0.25, random_state = 0)
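If a validation set is needed as well, one common approach is to call train_test_split twice. This is a minimal sketch on made-up data; the 60/20/20 proportions and the variable names are assumptions, not something the original post prescribes.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 100 samples, 2 features
X = np.arange(200).reshape(100, 2)
y = np.arange(100) % 2

# First split off the test set (20% of all samples)
X_trainval, X_test, y_trainval, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0)

# Then split the remainder into train and validation (25% of 80 = 20 samples)
X_train, X_val, y_train, y_val = train_test_split(
    X_trainval, y_trainval, test_size=0.25, random_state=0)

print(len(X_train), len(X_val), len(X_test))  # 60 20 20
```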

3. Data preprocessing

from sklearn.preprocessing import MinMaxScaler

from sklearn.preprocessing import StandardScaler

from sklearn.preprocessing import PolynomialFeatures

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

4. Importing and training models

4.1 Logistic regression:

from sklearn.linear_model import LogisticRegression

classifier = LogisticRegression()

classifier.fit(X_train, y_train)

y_pred = classifier.predict(X_test)

4.2 LinearRegression

from sklearn.linear_model import LinearRegression

4.3 Polynomial regression

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import PolynomialFeatures

poly_reg = PolynomialFeatures(degree=2)

X_poly = poly_reg.fit_transform(X_train)

lin_reg_2 = LinearRegression()

lin_reg_2.fit(X_poly, y_train)

print("Intercept:", lin_reg_2.intercept_)

print("Coefficients:", lin_reg_2.coef_)

# Use transform (not fit_transform) on the test set so it reuses the expansion fitted on the training set

y_pred1 = lin_reg_2.predict(poly_reg.transform(X_test))
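An alternative that avoids handling the feature expansion by hand is to chain both steps in a Pipeline. This is a sketch on synthetic data, assuming a noise-free quadratic y = 1 + 2x + 3x^2 so the recovered coefficients can be checked; the data and names are made up for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic, noise-free quadratic data (an assumption for this sketch)
rng = np.random.RandomState(0)
X = rng.uniform(-3, 3, size=(50, 1))
y = 1.0 + 2.0 * X[:, 0] + 3.0 * X[:, 0] ** 2

# include_bias=False: LinearRegression already fits the intercept
model = make_pipeline(
    PolynomialFeatures(degree=2, include_bias=False),
    LinearRegression())
model.fit(X, y)

lin = model.named_steps["linearregression"]
print("Intercept:", lin.intercept_)
print("Coefficients (x, x^2):", lin.coef_)

# predict() applies the same polynomial expansion automatically
y_new = model.predict(np.array([[2.0]]))
```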

4.4 Ridge regression

from sklearn.linear_model import Ridge

linreg = Ridge()

linreg.fit(X_train, y_train)

print("----------------------------")

print("Intercept:", linreg.intercept_)

print("Coefficients:", linreg.coef_)

y_pred1 = linreg.predict(X_test)

print("Mean relative error:", np.mean(np.abs(y_test-y_pred1)/y_test))

print("Max relative error:", np.max(np.abs(y_test-y_pred1)/y_test))

print("MAE:",metrics.mean_absolute_error(y_test, y_pred1))

print("MSE:",metrics.mean_squared_error(y_test, y_pred1))

print("RMSE:",np.sqrt(metrics.mean_squared_error(y_test, y_pred1)))

print("R2:",metrics.r2_score(y_test, y_pred1))

4.4.2 RidgeCV

https://blog.csdn.net/ssswill/article/details/86411009

from sklearn import linear_model

reg = linear_model.RidgeCV(alphas=[0.1, 1.0, 10.0], cv=3)

reg.fit([[0, 0], [0, 0], [1, 1]], [0, .1, 1])

print(reg.alpha_)

To change the cv argument:

from sklearn.model_selection import KFold, ShuffleSplit

from sklearn.linear_model import RidgeCV

kfold = KFold(n_splits=3)

shuffle_split = ShuffleSplit(test_size=.5, n_splits=10)

ridge1 = RidgeCV(alphas=[.1, 1, 10, 100], cv=kfold)

ridge2 = RidgeCV(alphas=[.1, 1, 10, 100], cv=shuffle_split)
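The snippets above only construct the estimators. As a runnable sketch of the whole flow, the example below fits RidgeCV with a KFold splitter, assuming the bundled diabetes dataset; the alpha grid and split sizes are arbitrary choices for illustration.

```python
from sklearn.datasets import load_diabetes
from sklearn.linear_model import RidgeCV
from sklearn.model_selection import KFold, train_test_split

# Assumption: use the bundled diabetes data
X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

alphas = [0.01, 0.1, 1.0, 10.0]
kfold = KFold(n_splits=5, shuffle=True, random_state=0)

# RidgeCV tries every alpha with the given splitter and keeps the best one
ridge = RidgeCV(alphas=alphas, cv=kfold)
ridge.fit(X_train, y_train)

print("Selected alpha:", ridge.alpha_)
print("Test R2: %.3f" % ridge.score(X_test, y_test))
```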

4.5 Lasso regression

from sklearn.linear_model import LassoCV

linreg = LassoCV()

linreg.fit(X_train, y_train)

print('Best alpha:', linreg.alpha_)

print("----------------------------")

print("Intercept:", linreg.intercept_)

print("Coefficients:", linreg.coef_)

y_pred1 = linreg.predict(X_test)

Plotting the Lasso coefficient weights:

import matplotlib.pyplot as plt

import pandas as pd

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LassoCV

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0, test_size=0.15)

# Keep the column names now (assumes X is a DataFrame); scaling returns a plain NumPy array

feature_names = X_train.columns

scaler2 = StandardScaler()

X_train = scaler2.fit_transform(X_train)

X_test = scaler2.transform(X_test)

print("Data after standard scaling:", X_test)

linreg = LassoCV()

linreg.fit(X_train, y_train)

print('Best alpha:', linreg.alpha_)

print("----------------------------")

print("Intercept:", linreg.intercept_)

print("Coefficients:", linreg.coef_)

y_pred4 = linreg.predict(X_test)

coef = pd.Series(linreg.coef_, index=feature_names)

imp_coef = pd.concat([coef.sort_values().head(10), coef.sort_values().tail(10)])

# Take the 10 smallest and the 10 largest coefficients; .sort_values() sorts the Series by value.

#matplotlib.rcParams['figure.figsize'] = (8.0, 10.0)

plt.rcParams['figure.figsize'] = (8.0, 10.0)

imp_coef.plot(kind = "barh")

plt.title("Coefficients in the Lasso Model")

plt.show()

4.6 SVR

from sklearn.svm import SVR

4.7 Random forest regression

from sklearn.ensemble import RandomForestRegressor

4.8 Random forest classification

from sklearn.ensemble import RandomForestClassifier

clf = RandomForestClassifier(n_estimators=100, max_depth=2,

random_state=0)

clf.fit(X, y)

print(clf.feature_importances_)

print(clf.predict([[0, 0, 0, 0]]))

5. Saving and loading models

import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23

joblib.dump(grid_search.best_estimator_, '/Users/will/Desktop/sklearn_model/k2_svm.pickle')

model = joblib.load('/Users/will/Desktop/sklearn_modle/k2modle.pickle')

y_pred_svr1 = model.predict(X_test)
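The paths above are specific to the author's machine. The following self-contained sketch does the same save and load round trip in a temporary directory; the model, dataset, and file name are placeholders chosen for illustration.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

# Placeholder model: any fitted estimator can be dumped the same way
X, y = load_iris(return_X_y=True)
clf = LogisticRegression(max_iter=1000).fit(X, y)

with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = os.path.join(tmp_dir, "iris_logreg.joblib")  # hypothetical file name
    joblib.dump(clf, model_path)        # save to disk
    restored = joblib.load(model_path)  # load it back

# The restored model should predict exactly like the original
same_predictions = np.array_equal(clf.predict(X), restored.predict(X))
print("Predictions identical after reload:", same_predictions)
```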

6. Grid search for hyperparameters

import time

from sklearn.model_selection import GridSearchCV

from sklearn.svm import SVR

para_grid = {

    'C': [0.0001, 0.001, 0.01, 0.1, 1, 10, 100],

    'gamma': [0.001, 0.01, 0.1, 1, 10, 100, 1000]

}

t0 = time.time()

# grid_search = GridSearchCV(SVR(kernel="rbf"),para_grid,cv=5,scoring="neg_mean_absolute_error")

grid_search = GridSearchCV(SVR(kernel="rbf"), para_grid, cv=5)

grid_search.fit(X_train, y_train)

print("Grid search took: %.3f seconds" % (time.time() - t0))

print("Best parameters: {}".format(grid_search.best_params_))

For more on grid search, see:

https://blog.csdn.net/ssswill/article/details/86373659
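As a runnable variation on the grid search above, this sketch wraps the scaler and the SVR in a pipeline so that scaling is refitted inside each CV fold. The diabetes dataset, the reduced grid, and the MAE scoring are assumptions made to keep the example small and fast.

```python
from sklearn.datasets import load_diabetes
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0)

# make_pipeline names each step after its lowercased class name ("svr"),
# so grid keys take the form "<step>__<parameter>"
pipe = make_pipeline(StandardScaler(), SVR(kernel="rbf"))
param_grid = {
    "svr__C": [1, 10, 100],
    "svr__gamma": [0.001, 0.01, 0.1],
}

grid_search = GridSearchCV(pipe, param_grid, cv=5,
                           scoring="neg_mean_absolute_error")
grid_search.fit(X_train, y_train)

print("Best parameters:", grid_search.best_params_)
print("Best CV score (negative MAE): %.3f" % grid_search.best_score_)
```

After fitting, grid_search.best_estimator_ is the whole pipeline refitted on the full training set, so it can be passed straight to joblib.dump.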

6. Visualization

plt.rcParams['figure.figsize'] = (8.0, 4.0) # set the figure size in inches

plt.rcParams['image.interpolation'] = 'nearest' # set the interpolation style

plt.rcParams['image.cmap'] = 'gray' # set the color map

#figsize(12.5, 4) # set figsize

plt.rcParams['savefig.dpi'] = 300 # dpi of saved images

plt.rcParams['figure.dpi'] = 300 # dpi of displayed figures

# With figsize [6.0, 4.0] and dpi=100, the image is 600*400 pixels

# With dpi=200, the image is 1200*800 pixels

# With dpi=300, the image is 1800*1200 pixels

# Changing figsize changes the aspect ratio without changing the resolution

See for details:

https://blog.csdn.net/ssswill/article/details/86411009
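The pixel sizes quoted in the comments above follow from figsize (in inches) multiplied by dpi. The sketch below checks that arithmetic; pixel_size is a hypothetical helper written for this example, and the Agg backend is selected only so the code runs without a display.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, no window needed
import matplotlib.pyplot as plt


def pixel_size(figsize, dpi):
    """Return the (width, height) in pixels of a figure with this size and dpi."""
    fig = plt.figure(figsize=figsize, dpi=dpi)
    width, height = fig.get_size_inches() * fig.dpi
    plt.close(fig)
    return int(round(width)), int(round(height))


for dpi in (100, 200, 300):
    print(dpi, pixel_size((6.0, 4.0), dpi))
```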

7. Cross-validation

7.1 The cross_val_score function

sklearn implements cross-validation through the cross_val_score function in the model_selection module.

The simplest way to use cross-validation is to call the cross_val_score helper function on the estimator and the dataset.

The following example demonstrates how to estimate the accuracy of a linear kernel support vector machine on the iris dataset by splitting the data, fitting a model and computing the score 5 consecutive times (with different splits each time):

from sklearn import svm

from sklearn.datasets import load_iris

from sklearn.model_selection import cross_val_score

iris = load_iris()

clf = svm.SVC(kernel='linear', C=1)

scores = cross_val_score(clf, iris.data, iris.target, cv=5)

scores

# array([0.96..., 1. ..., 0.96..., 0.96..., 1. ])

By default, the score computed at each CV iteration is the score method of the estimator. It is possible to change this by using the scoring parameter:

Changing the scoring function:

from sklearn import metrics

scores = cross_val_score(

clf, iris.data, iris.target, cv=5, scoring='f1_macro')

Changing cv:

from sklearn.model_selection import ShuffleSplit

n_samples = iris.data.shape[0]

cv = ShuffleSplit(n_splits=5, test_size=0.3, random_state=0)

cross_val_score(clf, iris.data, iris.target, cv=cv)

7.2 KFold

import numpy as np

from sklearn.model_selection import KFold

X = ["a", "b", "c", "d"]

kf = KFold(n_splits=2)

for train, test in kf.split(X):
    print("%s %s" % (train, test))

# Output:

# [2 3] [0 1]

# [0 1] [2 3]

7.3Leave One Out (LOO)

from sklearn.model_selection import LeaveOneOut

X = [1, 2, 3, 4]

loo = LeaveOneOut()

for train, test in loo.split(X):
    print("%s %s" % (train, test))

# Output:

# [1 2 3] [0]

# [0 2 3] [1]

# [0 1 3] [2]

# [0 1 2] [3]

7.4 Random permutations cross-validation a.k.a. Shuffle & Split

from sklearn.model_selection import ShuffleSplit

X = np.arange(10)

ss = ShuffleSplit(n_splits=5, test_size=0.25,

random_state=0)

for train_index, test_index in ss.split(X):
    print("%s %s" % (train_index, test_index))

# Output:

# [9 1 6 7 3 0 5] [2 8 4]

# [2 9 8 0 6 7 4] [3 5 1]

# [4 5 1 0 6 9 7] [2 3 8]

# [2 7 5 8 0 3 4] [6 1 9]

# [4 1 0 6 8 9 3] [5 2 7]

7.5 Stratified k-fold

from sklearn.model_selection import StratifiedKFold

X = np.ones(10)

y = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]

skf = StratifiedKFold(n_splits=3)

for train, test in skf.split(X, y):
    print("%s %s" % (train, test))

# Output as originally recorded (fold assignment may differ in newer scikit-learn versions):

# [2 3 6 7 8 9] [0 1 4 5]

# [0 1 3 4 5 8 9] [2 6 7]

# [0 1 2 4 5 6 7] [3 8 9]

7.6 Stratified Shuffle Split

from sklearn.model_selection import StratifiedShuffleSplit

X = np.array([[1, 2], [3, 4], [1, 2], [3, 4], [1, 2], [3, 4]])

y = np.array([0, 0, 0, 1, 1, 1])

sss = StratifiedShuffleSplit(n_splits=5, test_size=0.5, random_state=0)

sss.get_n_splits(X, y)

print(sss)

for train_index, test_index in sss.split(X, y):
    print("TRAIN:", train_index, "TEST:", test_index)
    X_train, X_test = X[train_index], X[test_index]
    y_train, y_test = y[train_index], y[test_index]

# Output:

# TRAIN: [5 2 3] TEST: [4 1 0]

# TRAIN: [5 1 4] TEST: [0 2 3]

# TRAIN: [5 0 2] TEST: [4 3 1]

# TRAIN: [4 1 0] TEST: [2 3 5]

# TRAIN: [0 5 1] TEST: [3 4 2]

7.7 Group k-fold

from sklearn.model_selection import GroupKFold

X = [0.1, 0.2, 2.2, 2.4, 2.3, 4.55, 5.8, 8.8, 9, 10]

y = ["a", "b", "b", "b", "c", "c", "c", "d", "d", "d"]

groups = [1, 1, 1, 2, 2, 2, 3, 3, 3, 3]

gkf = GroupKFold(n_splits=3)

for train, test in gkf.split(X, y, groups=groups):
    print("%s %s" % (train, test))

# Output:

# [0 1 2 3 4 5] [6 7 8 9]

# [0 1 2 6 7 8 9] [3 4 5]

# [3 4 5 6 7 8 9] [0 1 2]

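For classification, sklearn uses stratified k-fold CV by default.

For regression, it uses standard k-fold CV.

Commonly used CV splitters: KFold, StratifiedKFold, GroupKFold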
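The default behavior described above can be inspected with check_cv, the utility sklearn uses to turn an integer cv into a splitter. This is a small sketch on made-up labels; the variable names are only illustrative.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold, check_cv

y_class = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1, 1])  # class labels
y_reg = np.linspace(0.0, 1.0, 10)                   # continuous targets

# An integer cv becomes StratifiedKFold for classifiers and KFold otherwise
cv_for_classifier = check_cv(cv=3, y=y_class, classifier=True)
cv_for_regressor = check_cv(cv=3, y=y_reg, classifier=False)

print(type(cv_for_classifier).__name__)
print(type(cv_for_regressor).__name__)

# Stratification keeps both classes present in every test fold
folds = list(cv_for_classifier.split(np.zeros(10), y_class))
classes_per_fold = [set(y_class[test].tolist()) for _, test in folds]
print(classes_per_fold)
```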

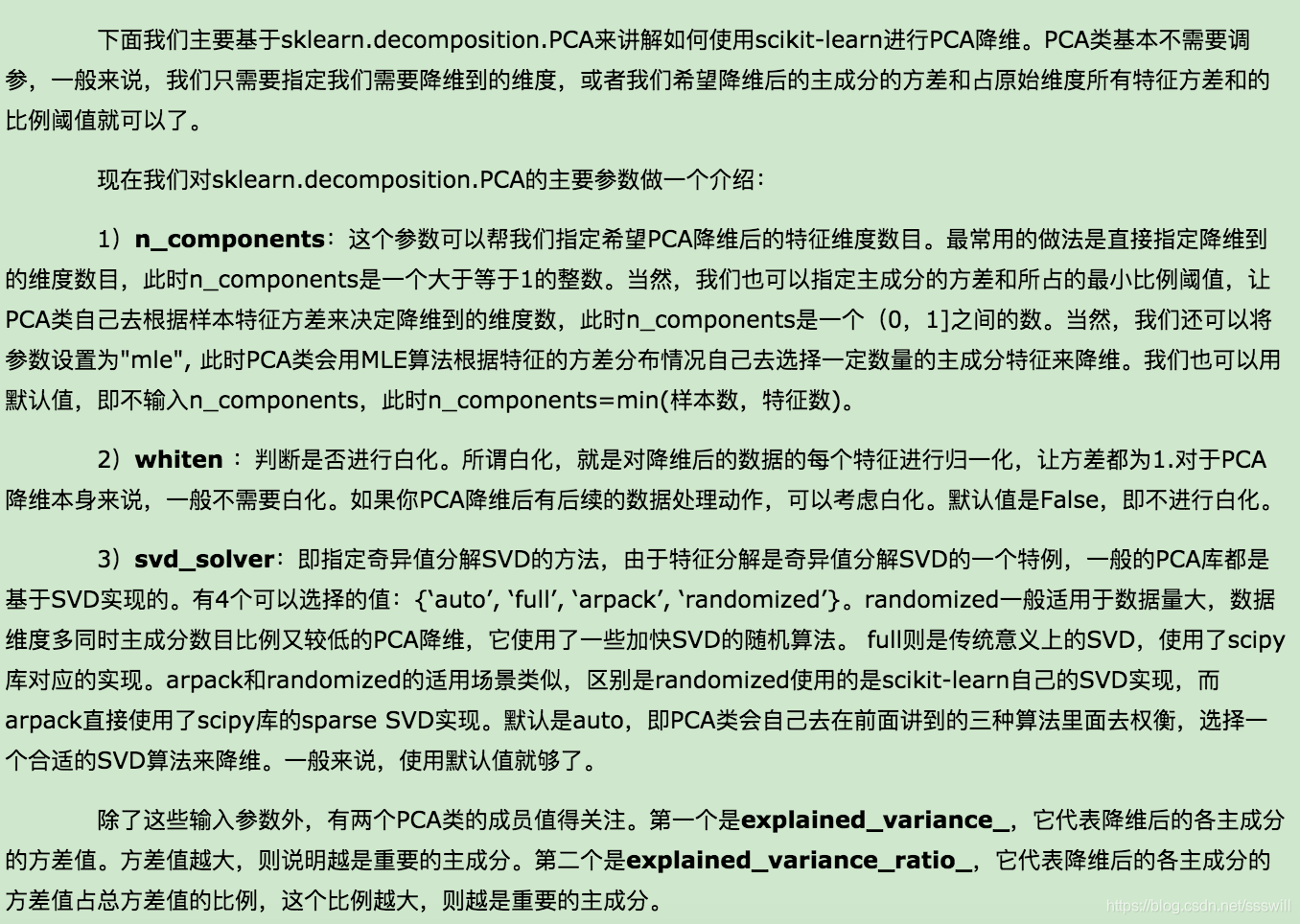

8. Dimensionality reduction

8.1 PCA

Reference: https://www.cnblogs.com/pinard/p/6243025.html

https://blog.csdn.net/u013597931/article/details/80066641

from sklearn.decomposition import PCA

pca = PCA(n_components=3)

pca.fit(X)

print(pca.explained_variance_ratio_)

print(pca.explained_variance_)

X_new = pca.transform(X)

#X_new = pca.fit_transform(X)
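The snippet above leaves X undefined. Here is a self-contained sketch, assuming the iris dataset and two components, that shows how much variance the reduced representation keeps.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Assumption: use iris (150 samples, 4 features) as X
X, _ = load_iris(return_X_y=True)

pca = PCA(n_components=2)
X_new = pca.fit_transform(X)  # fit and project in one step

ratio = pca.explained_variance_ratio_
print("Reduced shape:", X_new.shape)
print("Explained variance ratio per component:", ratio)
print("Total variance kept: %.3f" % ratio.sum())
```

If the total is too low for your purposes, increase n_components and compare the ratios again.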