Kubernetes v1.12.1

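Since v1.8, resource usage metrics (such as container CPU and memory usage) are available through the Metrics API. Note:

- The Metrics API only serves current metric values; it does not keep historical data.

- The Metrics API URI is /apis/metrics.k8s.io/, and the API is maintained in k8s.io/metrics.

- metrics-server must be deployed before the API can be used. metrics-server obtains its data by calling the Kubelet Summary API.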
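As a rough illustration of how the API is laid out, the sketch below builds the resource paths served under /apis/metrics.k8s.io/. The v1beta1 version matches the queries used later in this post; the helper name metrics_path is hypothetical and exists only for this example.

```python
from typing import Optional

# API group and version as queried later in this post.
METRICS_API_ROOT = "/apis/metrics.k8s.io/v1beta1"


def metrics_path(resource: str, namespace: Optional[str] = None,
                 name: Optional[str] = None) -> str:
    """Build a Metrics API path for "nodes" or "pods" (hypothetical helper)."""
    if resource not in ("nodes", "pods"):
        raise ValueError("the Metrics API only serves nodes and pods")
    if resource == "nodes" and namespace is not None:
        raise ValueError("nodes are not namespaced")
    if resource == "pods" and name is not None and namespace is None:
        raise ValueError("a single pod must be addressed through its namespace")
    parts = [METRICS_API_ROOT]
    if namespace is not None:
        parts += ["namespaces", namespace]
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


if __name__ == "__main__":
    print(metrics_path("nodes"))
    print(metrics_path("pods", namespace="default"))
```

Each returned path can be passed to kubectl get --raw once metrics-server is running.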

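Kubernetes monitoring architecture

The Kubernetes monitoring architecture consists of two parts:

- Core metrics pipeline (the black part of the diagram): the core metrics that Kubernetes needs in order to work properly. Metrics are collected from the Kubelet, cAdvisor and similar sources, and metrics-server then serves them to consumers such as the Dashboard and the HPA controller.

- Monitoring pipeline (the blue part of the diagram): a monitoring pipeline built on top of the core metrics. For example, Prometheus can pull core metrics from metrics-server and non-core metrics from other sources (such as Node Exporter), and a monitoring and alerting system is then built on both.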

Source: https://kubernetes.feisky.xyz/bu-shu-pei-zhi/index-1/metrics#bu-shu-metricsserver

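Certificate files

TLS certificates are needed to encrypt communication. Here we use cfssl, CloudFlare's PKI toolkit, to generate the Certificate Authority (CA) certificate and key. The CA certificate is self-signed and is used to sign the other TLS certificates created later.

Install CFSSL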

$ wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

$ chmod +x cfssl_linux-amd64

$ sudo mv cfssl_linux-amd64 /usr/k8s/bin/cfssl

$ wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

$ chmod +x cfssljson_linux-amd64

$ sudo mv cfssljson_linux-amd64 /usr/k8s/bin/cfssljson

$ wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

$ chmod +x cfssl-certinfo_linux-amd64

$ sudo mv cfssl-certinfo_linux-amd64 /usr/k8s/bin/cfssl-certinfo

These certificates are mainly used by the Metrics API aggregator.

# cat metrics-ca-csr.json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}

# cat metrics-client-csr.json
{
  "CN": "metrics-client",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}

Generate the CA certificate and private key, then the client certificate

# cfssl gencert -initca metrics-ca-csr.json | cfssljson -bare metrics-ca

# cfssl gencert -ca=metrics-ca.pem -ca-key=metrics-ca-key.pem -config=/etc/kubernetes/ssl/ca-config.json -profile=kubernetes metrics-client-csr.json | cfssljson -bare metrics-client

Copy the certificates to every node that runs kube-apiserver

scp metrics* [email protected]:/etc/kubernetes/ssl/

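kube-apiserver startup configuration

- --requestheader-client-ca-file: root certificate bundle used to verify client certificates on incoming requests before trusting the usernames in the headers named by --requestheader-username-headers

- --requestheader-allowed-names: list of client certificate common names that are allowed to supply usernames in the headers named by --requestheader-username-headers. If empty, any client certificate validated by the authorities in --requestheader-client-ca-file is allowed. The CN of the certificate passed via --proxy-client-cert-file has to be in this list; note that the unit file below sets aggregator, while the client certificate generated above uses CN metrics-client

- --requestheader-extra-headers-prefix: list of request header prefixes to inspect

- --requestheader-group-headers: list of request headers to inspect for groups

- --requestheader-username-headers: list of request headers to inspect for usernames

- --proxy-client-cert-file: client certificate used to prove the identity of the aggregator or kube-apiserver when it must call out during a request

- --proxy-client-key-file: private key of the client certificate used to prove the identity of the aggregator or kube-apiserver when it must call out during a request. This includes proxying requests to a user api-server and calling webhook admission plugins

- --enable-aggregator-routing=true: makes the aggregator route requests to endpoint IPs rather than to the cluster IP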
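To make the interplay of the --requestheader-* flags more concrete, here is a simplified sketch of the check an extension API server could perform on a request proxied by the aggregator. It is an illustration under stated assumptions, not the actual Kubernetes implementation: the function and type names are hypothetical, and it assumes the TLS layer has already verified the client certificate against --requestheader-client-ca-file.

```python
from typing import Dict, List, NamedTuple, Sequence, Tuple


class UserInfo(NamedTuple):
    username: str
    groups: List[str]
    extra: Dict[str, List[str]]


def authenticate_request_header(
    client_cert_cn: str,
    headers: Sequence[Tuple[str, str]],
    allowed_names: Sequence[str],
    username_headers: Sequence[str] = ("X-Remote-User",),
    group_headers: Sequence[str] = ("X-Remote-Group",),
    extra_prefixes: Sequence[str] = ("X-Remote-Extra-",),
) -> UserInfo:
    """Hypothetical request-header authentication check (simplified)."""
    # An empty allowed_names list means any verified client certificate is accepted.
    if allowed_names and client_cert_cn not in allowed_names:
        raise PermissionError(f"client certificate CN {client_cert_cn!r} is not allowed")

    # HTTP header names are case-insensitive, so compare in lower case.
    user_keys = {h.lower() for h in username_headers}
    group_keys = {h.lower() for h in group_headers}
    prefixes = [p.lower() for p in extra_prefixes]

    username = ""
    groups: List[str] = []
    extra: Dict[str, List[str]] = {}
    for name, value in headers:
        key = name.lower()
        if key in user_keys:
            username = username or value  # first username header wins
        elif key in group_keys:
            groups.append(value)  # the group header may repeat
        else:
            for prefix in prefixes:
                if key.startswith(prefix):
                    extra.setdefault(key[len(prefix):], []).append(value)
                    break
    if not username:
        raise PermissionError("no username header present")
    return UserInfo(username, groups, extra)


if __name__ == "__main__":
    proxied = [("X-Remote-User", "alice"), ("X-Remote-Group", "dev")]
    print(authenticate_request_header("metrics-client", proxied, ["metrics-client"]))
```

In this model, a proxy certificate whose CN is missing from a non-empty allowed-names list is rejected before any header is read, which is why the CN and --requestheader-allowed-names have to agree.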

cat /etc/systemd/system/kube-apiserver.service

[Service]

ExecStart=/usr/bin/kube-apiserver \

--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--advertise-address=10.12.51.171 \

--bind-address=0.0.0.0 \

--insecure-bind-address=127.0.0.1 \

--authorization-mode=Node,RBAC \

--kubelet-https=true \

--token-auth-file=/etc/kubernetes/token.csv \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=30000-52766 \

--tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \

--etcd-servers=https://10.12.51.171:2379,https://10.12.51.172:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--apiserver-count=2 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/lib/audit.log \

--event-ttl=1h \

--logtostderr=true \

--requestheader-client-ca-file=/etc/kubernetes/ssl/metrics-ca.pem \

--requestheader-allowed-names=aggregator \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--proxy-client-cert-file=/etc/kubernetes/ssl/metrics-client.pem \

--proxy-client-key-file=/etc/kubernetes/ssl/metrics-client-key.pem \

--enable-aggregator-routing=true \

--v=4

kube-controller-manager startup configuration

In Kubernetes 1.12.1, --horizontal-pod-autoscaler-use-rest-clients="true" is already the default, so it does not need to be set.

The code path that initializes the client is startHPAControllerWithRESTClient.

github: https://github.com/stefanprodan/k8s-prom-hpa

Deploy Prometheus

kubectl apply -f prometheus

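Alternatively, deploy it in your own way. The components are:

- kube-state-metrics: collects data about resource objects in the k8s cluster

- node_exporter: collects data from each node in the cluster

- prometheus: collects data from the apiserver, scheduler, controller-manager and kubelet components

- alertmanager: handles alerting

- grafana: handles data visualization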

1. The metrics-server approach

metrics-server collects metrics for consumers inside the k8s cluster, such as kubectl, the HPA and the scheduler.

1.1 修改文件metrics-server/metrics-server-deployment.yaml

否则出现错误:

error: You must be logged in to the server (Unauthorized) spec:

serviceAccountName: metrics-server

volumes:

# mount in tmp so we can safely use from-scratch images and/or read-only containers

- name: tmp-dir

hostPath:

path: /etc/kubernetes/ssl

containers:

- command:

- /metrics-server

- --kubelet-insecure-tls

#- --source=kubernetes.summary_api:https://kubernetes.default.svc?kubeletHttps=true&kubeletPort=10250&useServiceAccount=true&insecure=true

- --requestheader-client-ca-file=/etc/kubernetes/ssl/metrics-ca.pem

name: metrics-server

image: zhangzhonglin/metrics-server-amd64:v0.3.1

imagePullPolicy: Always

volumeMounts:

- name: tmp-dir

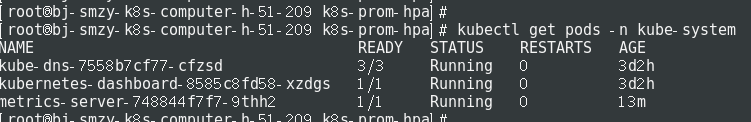

mountPath: /etc/kubernetes/ssl1.2 并部署kubectl apply -f metrics-server

1.3 Test 1

kubectl get apiservice v1beta1.metrics.k8s.io -o yaml

apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apiregistration.k8s.io/v1beta1","kind":"APIService","metadata":{"annotations":{},"name":"v1beta1.metrics.k8s.io"},"spec":{"group":"metrics.k8s.io","groupPriorityMinimum":100,"insecureSkipTLSVerify":true,"service":{"name":"metrics-server","namespace":"kube-system"},"version":"v1beta1","versionPriority":100}}
  creationTimestamp: 2019-01-10T08:36:10Z
  name: v1beta1.metrics.k8s.io
  resourceVersion: "9491888"
  selfLink: /apis/apiregistration.k8s.io/v1/apiservices/v1beta1.metrics.k8s.io
  uid: c81ed0de-14b2-11e9-a6a8-00505683f77b
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
status:
  conditions:
  - lastTransitionTime: 2019-01-11T03:32:59Z
    message: all checks passed
    reason: Passed
    status: "True"
    type: Available

1.4 Test 2

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes" | jq .

1.5 Test 3

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/pods" | jq .

{
  "kind": "PodMetricsList",
  "apiVersion": "metrics.k8s.io/v1beta1",
  "metadata": {
    "selfLink": "/apis/metrics.k8s.io/v1beta1/pods"
  },
  "items": [
    {
      "metadata": {
        "name": "podinfo-c7cb8dfdd-dq48b",
        "namespace": "default",
        "selfLink": "/apis/metrics.k8s.io/v1beta1/namespaces/default/pods/podinfo-c7cb8dfdd-dq48b",
        "creationTimestamp": "2019-01-11T03:51:26Z"
      },
      "timestamp": "2019-01-11T03:52:49Z",
      "window": "30s",
      "containers": [
        {
          "name": "podinfod",
          "usage": {
            "cpu": "1357770n",
            "memory": "12396Ki"
          }
        }
      ]
    },
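The usage values in this output are Kubernetes quantity strings: the n suffix means nanocores and Ki means kibibytes. The following is a minimal sketch of converting them to plain numbers, assuming only the suffix notation (no exponent forms); parse_quantity and pod_usage are hypothetical helper names, and the sample pod is copied from the output above.

```python
from decimal import Decimal
from typing import Dict

# Binary (power-of-two) and decimal suffixes used by Kubernetes quantities.
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
                    "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
_DECIMAL_SUFFIXES = {"n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"),
                     "k": Decimal("1e3"), "M": Decimal("1e6"), "G": Decimal("1e9"),
                     "T": Decimal("1e12"), "P": Decimal("1e15"), "E": Decimal("1e18")}


def parse_quantity(text: str) -> Decimal:
    """Convert a quantity such as "1357770n" or "12396Ki" to a plain number."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if text.endswith(suffix):
            return Decimal(text[:-len(suffix)]) * factor
    if text[-1:] in _DECIMAL_SUFFIXES:
        return Decimal(text[:-1]) * _DECIMAL_SUFFIXES[text[-1]]
    return Decimal(text)  # no suffix: already in base units


def pod_usage(pod: dict) -> Dict[str, Decimal]:
    """Sum CPU (cores) and memory (bytes) over all containers of one PodMetrics item."""
    cpu = sum((parse_quantity(c["usage"]["cpu"]) for c in pod["containers"]), Decimal(0))
    memory = sum((parse_quantity(c["usage"]["memory"]) for c in pod["containers"]), Decimal(0))
    return {"cpu_cores": cpu, "memory_bytes": memory}


if __name__ == "__main__":
    sample = {"containers": [{"name": "podinfod",
                              "usage": {"cpu": "1357770n", "memory": "12396Ki"}}]}
    print(pod_usage(sample))
```

For the podinfod container above, this works out to roughly 1.36 millicores of CPU and 12693504 bytes of memory.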

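Test

Deploy an application and make sure its resource requests are set: the HPA bases its decisions on them. This test uses CPU metrics.

Create the HPA: kubectl autoscale deploy bbbbbbbbbbb --min=1 --max=10 --cpu-percent=80

Add the stress load-testing tool to the pod's container and run ./stress -c 1

Check the CPU usage

Check how the HPA handles the pods: it has already scaled out to several pods

Kill the stress process; after a while the HPA controller scales back down to 1 replica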
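The scale-out and scale-in seen in this test follow the replica formula from the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization), with no change when the ratio is within a tolerance (0.1 by default) of 1. The sketch below is a simplified model of that rule; it ignores unready pods, missing metrics and scale-down delays, and the request and usage numbers are hypothetical, since the post does not state them.

```python
import math
from typing import Sequence


def desired_replicas(
    current_replicas: int,
    usage_millicores: Sequence[float],
    request_millicores: Sequence[float],
    target_percent: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA replica calculation for a CPU utilization target."""
    # Utilization is CPU usage relative to the requested CPU, in percent.
    utilization = 100.0 * sum(usage_millicores) / sum(request_millicores)
    ratio = utilization / target_percent
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do nothing
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the --min / --max bounds given to kubectl autoscale.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical: one pod requesting 100m while stress keeps a full core busy.
    print(desired_replicas(1, [1000], [100], 80, 1, 10))
    # Hypothetical: load gone, four pods each using 10m of their 100m request.
    print(desired_replicas(4, [10] * 4, [100] * 4, 80, 1, 10))
```

This also shows why the request must be set on the deployment: without it there is no denominator for the utilization percentage.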

References:

https://kubernetes.feisky.xyz/bu-shu-pei-zhi/index-1/metrics#bu-shu-metricsserver

https://github.com/kubernetes-incubator/custom-metrics-apiserver