Mastering scope usage in TensorFlow in two articles:

[2] The difference between tf.name_scope() and tf.variable_scope() in TensorFlow

1. tf.name_scope()

TensorFlow offers two ways to create a variable: tf.get_variable() and tf.Variable(). Using both inside tf.name_scope() gives the following result.

import tensorflow as tf

with tf.name_scope("a_name_scope"):
    initializer = tf.constant_initializer(value=1)
    var1 = tf.get_variable(name='var1', shape=[1], dtype=tf.float32, initializer=initializer)
    var2 = tf.Variable(name='var2', initial_value=[2], dtype=tf.float32)
    var21 = tf.Variable(name='var2', initial_value=[2.1], dtype=tf.float32)
    var22 = tf.Variable(name='var2', initial_value=[2.2], dtype=tf.float32)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    print(var1.name)        # var1:0
    print(sess.run(var1))   # [ 1.]
    print(var2.name)        # a_name_scope/var2:0
    print(sess.run(var2))   # [ 2.]
    print(var21.name)       # a_name_scope/var2_1:0
    print(sess.run(var21))  # [ 2.1]
    print(var22.name)       # a_name_scope/var2_2:0
    print(sess.run(var22))  # [ 2.2]

Result: the printed values match the comments above.

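Analysis:

When tf.Variable() is used inside tf.name_scope(), TensorFlow does not emit identical variable names even though the same name is passed each time: it appends a numeric suffix to keep the names unique. var2, var21, and var22 are therefore three distinct variables. A variable created with tf.get_variable(), by contrast, is not affected by the name scope; in effect, tf.name_scope() has no influence on the names produced by tf.get_variable().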
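The naming behavior described above can be sketched in plain Python. This is a toy model and not TensorFlow code: ToyGraph, variable_name, and get_variable_name are hypothetical names introduced for illustration, and TensorFlow's real bookkeeping is more involved.

```python
# Toy model (NOT TensorFlow): how names could be kept unique inside a name scope.
class ToyGraph:
    def __init__(self):
        self._used = {}  # full name -> how many times it has been requested

    def unique_name(self, name):
        """Return `name` the first time, then `name_1`, `name_2`, ..."""
        count = self._used.get(name, 0)
        self._used[name] = count + 1
        return name if count == 0 else "{}_{}".format(name, count)


def variable_name(graph, name_scope, name):
    """Mimics tf.Variable: prepend the name scope, then make the name unique."""
    return graph.unique_name(name_scope + "/" + name) + ":0"


def get_variable_name(name):
    """Mimics tf.get_variable: the enclosing name scope is ignored."""
    return name + ":0"


graph = ToyGraph()
names = [variable_name(graph, "a_name_scope", "var2") for _ in range(3)]
print(names)
# ['a_name_scope/var2:0', 'a_name_scope/var2_1:0', 'a_name_scope/var2_2:0']
print(get_variable_name("var1"))
# var1:0
```

The three requests for 'var2' yield three different names, which matches the names printed for var2, var21, and var22 in the example above.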

2. tf.variable_scope()

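To reuse variables, use tf.variable_scope() together with tf.get_variable(). Unlike tf.Variable(), which creates a new variable on every call, tf.get_variable() returns the existing variable when it is asked for a name that already exists in the scope, provided that reuse has been enabled. You must therefore call scope.reuse_variables() before requesting the same name again; otherwise TensorFlow raises an error, on the assumption that you reused an existing name by accident.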

import tensorflow as tf

with tf.variable_scope("a_variable_scope") as scope:

initializer = tf.constant_initializer(value=3)

var3 = tf.get_variable(name='var3', shape=[1], dtype=tf.float32, initializer=initializer)

scope.reuse_variables()

var3_reuse = tf.get_variable(name='var3', )

var4 = tf.Variable(name='var4', initial_value=[4], dtype=tf.float32)

var4_reuse = tf.Variable(name='var4', initial_value=[4], dtype=tf.float32)

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

print(var3.name) # a_variable_scope/var3:0

print(sess.run(var3)) # [ 3.]

print(var3_reuse.name) # a_variable_scope/var3:0

print(sess.run(var3_reuse)) # [ 3.]

print(var4.name) # a_variable_scope/var4:0

print(sess.run(var4)) # [ 4.]

print(var4_reuse.name) # a_variable_scope/var4_1:0

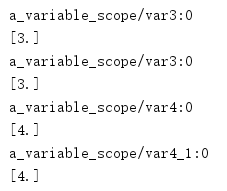

print(sess.run(var4_reuse)) # [ 4.]结果:

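Analysis:

In the result above, var3 and var3_reuse are the same variable, while var4 and var4_reuse are different variables.

Inside tf.variable_scope(), if a variable created with tf.get_variable() already exists and you want to use it directly, call scope.reuse_variables() first. Without that call, TensorFlow raises an error about the variable already existing.

In both tf.name_scope() and tf.variable_scope(), tf.Variable() always creates a new variable. If the name is already taken, a numeric suffix such as _1 or _2 is appended to tell the variables apart.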
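The reuse rule can be illustrated with a small stand-in for the bookkeeping behind tf.get_variable(). This is a hedged sketch in plain Python, not TensorFlow's implementation; ToyVariableStore and its reuse argument are assumptions made for illustration.

```python
# Toy model (NOT TensorFlow): get_variable-style lookup with a reuse flag.
class ToyVariableStore:
    def __init__(self):
        self._vars = {}  # full name -> variable record

    def get_variable(self, scope, name, reuse=False, initial_value=None):
        full_name = scope + "/" + name
        if reuse:
            # Reuse mode only returns variables that were created earlier.
            if full_name not in self._vars:
                raise ValueError("Variable %s does not exist" % full_name)
            return self._vars[full_name]
        # Creation mode refuses to silently overwrite an existing variable.
        if full_name in self._vars:
            raise ValueError("Variable %s already exists" % full_name)
        self._vars[full_name] = {"name": full_name + ":0", "value": initial_value}
        return self._vars[full_name]


store = ToyVariableStore()
var3 = store.get_variable("a_variable_scope", "var3", initial_value=[3.0])
var3_reuse = store.get_variable("a_variable_scope", "var3", reuse=True)
print(var3 is var3_reuse)  # True: both names refer to one object

try:
    store.get_variable("a_variable_scope", "var3")  # same name, reuse not enabled
    duplicate_rejected = False
except ValueError as err:
    duplicate_rejected = True
    print(err)
```

In this sketch, asking for an existing name without reuse fails loudly, which mirrors the error TensorFlow reports when scope.reuse_variables() is omitted.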

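3. RNN example

When modeling sequence data with a recurrent neural network (RNN), the training RNN and the test RNN often use different time_steps values. This changes the structure of the unrolled network, so the RNN built for training cannot simply be used as-is at test time. The two networks must nevertheless share the same weights and biases, which makes this a good case for variable reuse.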
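Why sharing works despite the different time_steps can be seen in a toy scalar RNN written in plain Python. This is only a sketch under simplifying assumptions: unroll_rnn and the parameter names w_in, w_rec, and b are hypothetical, and the real example uses an LSTM cell in TensorFlow.

```python
import math


def unroll_rnn(params, inputs):
    """Run a scalar RNN; the number of unrolled steps equals len(inputs)."""
    h = 0.0
    outputs = []
    for x in inputs:
        h = math.tanh(params["w_in"] * x + params["w_rec"] * h + params["b"])
        outputs.append(h)
    return outputs


# One parameter set, shared by both "networks".
shared_params = {"w_in": 0.5, "w_rec": 0.1, "b": 0.0}

train_inputs = [0.1 * (t + 1) for t in range(20)]  # 20 time steps
test_inputs = train_inputs[:1]                     # 1 time step

train_outputs = unroll_rnn(shared_params, train_inputs)
test_outputs = unroll_rnn(shared_params, test_inputs)

# A stand-in for a training update: the test network sees the new value too.
shared_params["w_in"] = 1.0
updated_test_outputs = unroll_rnn(shared_params, [1.0])
print(len(train_outputs), len(test_outputs))
```

The unrolled length differs between the two calls, but the parameters have no time dimension, so a single set can serve both.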

Here is an example.

First, define the different parameters for training and testing:

class TrainConfig:
    batch_size = 20
    time_steps = 20
    input_size = 10
    output_size = 2
    cell_size = 11
    learning_rate = 0.01

class TestConfig(TrainConfig):
    time_steps = 1

train_config = TrainConfig()
test_config = TestConfig()

Next, place train_rnn and test_rnn in the same tf.variable_scope('rnn') and call scope.reuse_variables(), so that all weights and biases of train_rnn are bound to test_rnn. However different their time_steps and structures are, whatever values the W and b parameters of train_rnn are updated to, test_rnn sees the same values.

with tf.variable_scope('rnn') as scope:
    sess = tf.Session()
    train_rnn = RNN(train_config)
    scope.reuse_variables()
    test_rnn = RNN(test_config)
    sess.run(tf.global_variables_initializer())

Finally, here is a complete example of an RNN that shares parameters using tf.variable_scope() and get_variable_scope():

# 22 scope (name_scope/variable_scope)
from __future__ import print_function
import tensorflow as tf

class TrainConfig:
    batch_size = 20
    time_steps = 20
    input_size = 10
    output_size = 2
    cell_size = 11
    learning_rate = 0.01

class TestConfig(TrainConfig):
    time_steps = 1

class RNN(object):

    def __init__(self, config):
        self._batch_size = config.batch_size
        self._time_steps = config.time_steps
        self._input_size = config.input_size
        self._output_size = config.output_size
        self._cell_size = config.cell_size
        self._lr = config.learning_rate
        self._built_RNN()

    def _built_RNN(self):
        with tf.variable_scope('inputs'):
            self._xs = tf.placeholder(tf.float32, [self._batch_size, self._time_steps, self._input_size], name='xs')
            self._ys = tf.placeholder(tf.float32, [self._batch_size, self._time_steps, self._output_size], name='ys')
        with tf.name_scope('RNN'):
            with tf.variable_scope('input_layer'):
                l_in_x = tf.reshape(self._xs, [-1, self._input_size], name='2_2D')  # (batch*n_step, in_size)
                # Ws (in_size, cell_size)
                Wi = self._weight_variable([self._input_size, self._cell_size])
                print(Wi.name)
                # bs (cell_size, )
                bi = self._bias_variable([self._cell_size, ])
                # l_in_y = (batch * n_steps, cell_size)
                with tf.name_scope('Wx_plus_b'):
                    l_in_y = tf.matmul(l_in_x, Wi) + bi
                l_in_y = tf.reshape(l_in_y, [-1, self._time_steps, self._cell_size], name='2_3D')

            with tf.variable_scope('cell'):
                cell = tf.contrib.rnn.BasicLSTMCell(self._cell_size)
                with tf.name_scope('initial_state'):
                    self._cell_initial_state = cell.zero_state(self._batch_size, dtype=tf.float32)

                self.cell_outputs = []
                cell_state = self._cell_initial_state
                for t in range(self._time_steps):
                    # share the cell's variables across all time steps after the first
                    if t > 0:
                        tf.get_variable_scope().reuse_variables()
                    cell_output, cell_state = cell(l_in_y[:, t, :], cell_state)
                    self.cell_outputs.append(cell_output)
                self._cell_final_state = cell_state

            with tf.variable_scope('output_layer'):
                # cell_outputs_reshaped (BATCH*TIME_STEP, CELL_SIZE)
                cell_outputs_reshaped = tf.reshape(tf.concat(self.cell_outputs, 1), [-1, self._cell_size])
                Wo = self._weight_variable((self._cell_size, self._output_size))
                bo = self._bias_variable((self._output_size,))
                product = tf.matmul(cell_outputs_reshaped, Wo) + bo
                # _pred shape (batch*time_step, output_size)
                self._pred = tf.nn.relu(product)  # for displacement

        with tf.name_scope('cost'):
            _pred = tf.reshape(self._pred, [self._batch_size, self._time_steps, self._output_size])
            mse = self.ms_error(_pred, self._ys)
            mse_ave_across_batch = tf.reduce_mean(mse, 0)
            mse_sum_across_time = tf.reduce_sum(mse_ave_across_batch, 0)
            self._cost = mse_sum_across_time
            self._cost_ave_time = self._cost / self._time_steps

        with tf.variable_scope('train'):
            self._lr = tf.convert_to_tensor(self._lr)
            self.train_op = tf.train.AdamOptimizer(self._lr).minimize(self._cost)

    @staticmethod
    def ms_error(y_target, y_pre):
        return tf.square(tf.subtract(y_target, y_pre))

    @staticmethod
    def _weight_variable(shape, name='weights'):
        initializer = tf.random_normal_initializer(mean=0., stddev=0.5)
        return tf.get_variable(shape=shape, initializer=initializer, name=name)

    @staticmethod
    def _bias_variable(shape, name='biases'):
        initializer = tf.constant_initializer(0.1)
        return tf.get_variable(name=name, shape=shape, initializer=initializer)

if __name__ == '__main__':
    train_config = TrainConfig()
    test_config = TestConfig()

    # the wrong method to reuse parameters in train rnn
    with tf.variable_scope('train_rnn'):
        train_rnn1 = RNN(train_config)
    with tf.variable_scope('test_rnn'):
        test_rnn1 = RNN(test_config)

    # the right method to reuse parameters in train rnn
    with tf.variable_scope('rnn') as scope:
        sess = tf.Session()
        train_rnn2 = RNN(train_config)
        scope.reuse_variables()
        test_rnn2 = RNN(test_config)
        # tf.initialize_all_variables() is no longer valid from
        # 2017-03-02 if using tensorflow >= 0.12
        if int((tf.__version__).split('.')[1]) < 12 and int((tf.__version__).split('.')[0]) < 1:
            init = tf.initialize_all_variables()
        else:
            init = tf.global_variables_initializer()
        sess.run(init)
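The difference between the "wrong" and the "right" method in the listing above comes down to the scope prefix in each variable's full name. The following plain-Python sketch counts the variables each approach would register. It is a toy model, not TensorFlow: build_rnn and the dictionary-based stores are hypothetical, and only the input and output layers are modeled.

```python
# Toy model (NOT TensorFlow): variables are identified by their full scoped name.
def build_rnn(store, scope, reuse=False):
    """Register (or look up) the variables of one toy RNN under `scope`."""
    names = []
    for layer in ("input_layer", "output_layer"):
        for var in ("weights", "biases"):
            full_name = "/".join([scope, layer, var])
            if reuse:
                if full_name not in store:
                    raise ValueError("Variable %s does not exist" % full_name)
            else:
                if full_name in store:
                    raise ValueError("Variable %s already exists" % full_name)
                store[full_name] = object()  # stand-in for a new variable
            names.append(full_name)
    return names


# Wrong: different scopes give different names, so nothing is shared.
wrong_store = {}
build_rnn(wrong_store, "train_rnn")
build_rnn(wrong_store, "test_rnn")
print(len(wrong_store))  # 8 separate variables

# Right: one scope plus reuse gives the same names, so everything is shared.
right_store = {}
train_names = build_rnn(right_store, "rnn")
test_names = build_rnn(right_store, "rnn", reuse=True)
print(len(right_store))  # 4 shared variables
```

With separate scopes the test network gets its own freshly initialized parameters, so nothing learned during training carries over; with a single scope and reuse, both networks resolve to the same entries.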