1. First, start Hadoop

start-all.sh

2. Create a Maven project

2.1 Edit the pom.xml file

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>com.js</groupId>
      <artifactId>ss</artifactId>
      <version>0.0.1-SNAPSHOT</version>
      <packaging>jar</packaging>

      <name>ss</name>
      <url>http://maven.apache.org</url>

      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <!-- Define a property so all Hadoop artifacts share one version -->
        <hadoop.version>3.1.1</hadoop.version>
      </properties>

      <dependencies>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>3.8.1</version>
          <scope>test</scope>
        </dependency>

        <!-- JDK dependency (tools.jar from the local JDK) -->
        <dependency>
          <groupId>jdk.tools</groupId>
          <artifactId>jdk.tools</artifactId>
          <version>1.8</version>
          <scope>system</scope>
          <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
        </dependency>

        <!-- hadoop start -->

        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-hdfs</artifactId>
          <version>${hadoop.version}</version>
        </dependency>

        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-client</artifactId>
          <version>${hadoop.version}</version>
        </dependency>

        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
          <version>${hadoop.version}</version>
        </dependency>

        <!-- hadoop end -->
      </dependencies>

    </project>

2.2 Create a new class file

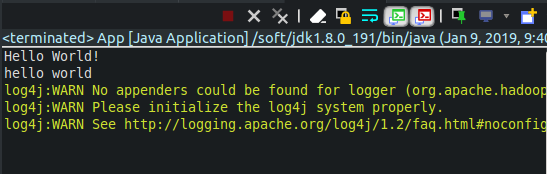

    package com.js;

    import java.io.FileOutputStream;
    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    /**
     * Copies a file from HDFS into the local working directory.
     */
    public class App
    {
        public static void main( String[] args ) throws IOException {
            System.out.println( "Hello World!" );

            Configuration conf = new Configuration();
            // Point the client at the HDFS NameNode (the default file system)
            conf.set("fs.defaultFS", "hdfs://localhost:9000");

            FileSystem fs = FileSystem.get(conf);

            // Open the source file on HDFS for reading
            Path src = new Path("/wordcount/input");
            FSDataInputStream in = fs.open(src);

            // Copy it to a local file named "out"; the last argument (true)
            // closes both streams once the copy finishes
            FileOutputStream os = new FileOutputStream("./out");
            IOUtils.copyBytes(in, os, conf, true);
        }
    }
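The value passed to `conf.set("fs.defaultFS", ...)` is an ordinary URI: the scheme (`hdfs`) tells Hadoop which file system implementation to use, and the host and port identify the NameNode. The sketch below uses only the JDK to take that string apart. The class name `DefaultFsUriSketch` and the helper `describe` are made up for illustration, and port 9000 is simply the value used in this walkthrough, so it should match whatever your own `core-site.xml` declares.

```java
import java.net.URI;

class DefaultFsUriSketch {

    // Hypothetical helper: splits an fs.defaultFS value into its parts.
    static String describe(String defaultFs) {
        URI uri = URI.create(defaultFs);
        return "scheme=" + uri.getScheme()
                + " host=" + uri.getHost()
                + " port=" + uri.getPort();
    }

    public static void main(String[] args) {
        // Same value that App passes to conf.set("fs.defaultFS", ...)
        System.out.println(describe("hdfs://localhost:9000"));
    }
}
```

If the NameNode listens on a different host or port, only the string given to `conf.set` needs to change.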

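After the program runs, a file named out appears in the current working directory. It holds a copy of /wordcount/input from HDFS, so that path needs to point to a file rather than a directory.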
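To make the `IOUtils.copyBytes(in, os, conf, true)` call less of a black box, here is a rough JDK-only sketch of the same idea: read the input stream into a buffer, write each chunk to the output stream, and close both streams when asked. It is an approximation, not Hadoop's implementation. The class `CopyBytesSketch`, the explicit `bufferSize` parameter, and the returned byte count are assumptions made for illustration (Hadoop takes the buffer size from the `Configuration` and returns nothing).

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

class CopyBytesSketch {

    // Hypothetical stand-in for IOUtils.copyBytes: copies everything from
    // in to out in bufferSize chunks and returns the number of bytes copied.
    static long copyBytes(InputStream in, OutputStream out, int bufferSize, boolean close)
            throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
            out.flush();
        } finally {
            if (close) {
                // Close both streams, even if closing the first one fails
                try {
                    in.close();
                } finally {
                    out.close();
                }
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // In-memory streams stand in for the HDFS input and the local file
        InputStream source = new ByteArrayInputStream("hello hdfs".getBytes(StandardCharsets.UTF_8));
        ByteArrayOutputStream sink = new ByteArrayOutputStream();

        long copied = copyBytes(source, sink, 4, true);
        System.out.println(copied + " bytes copied: "
                + new String(sink.toByteArray(), StandardCharsets.UTF_8));
    }
}
```

In App, the input stream comes from `fs.open(src)` and the output stream is the `FileOutputStream` for `./out`; passing `true` as the last argument is what spares the program from closing them itself.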