Topics covered in this article

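- Overview and analysis of the flash-sale (seckill) scenario

- Controlling stock with optimistic locking

- Using a Redis cache to reduce DB load

- Controlling stock with a ZooKeeper distributed lock

- Making operations asynchronous with Kafka

- API rate limiting

- Load-testing the endpoint with JMeter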

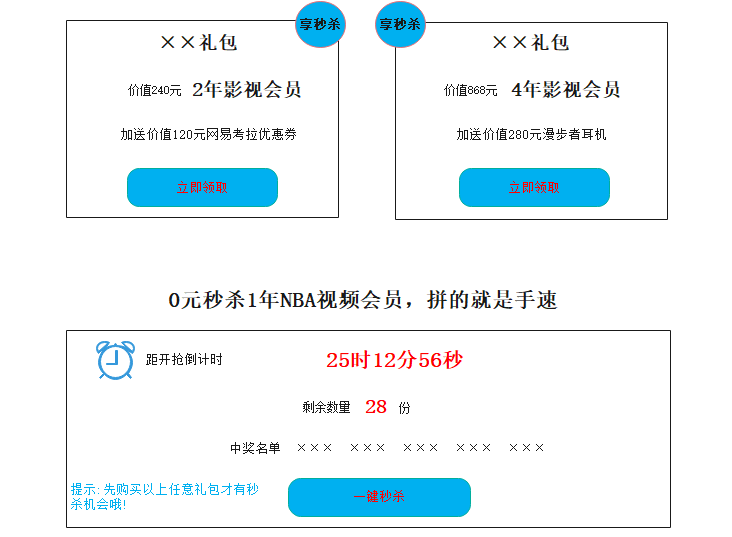

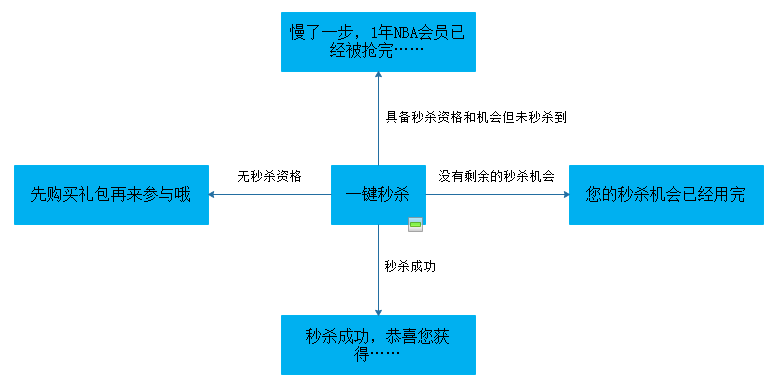

前阵子经常开发一些秒杀类型的项目,故而抽时间总结下。把我们产品的流程图大致勾勒了下:

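Flash-sale projects tend to share a few traits:

- Concurrent traffic spikes the instant the sale starts;

- Prize stock is usually small, so very few participants actually succeed;

- The business flow is relatively simple.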

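Points that deserve the most attention:

- Never oversell: with 10 items in stock, at most 10 people may succeed

- Because participants vastly outnumber the stock, intercept as many requests as possible upstream

- Use a cache to cut down on pointless requests hitting the DB

- Make some operations asynchronous to speed up the response

- Fail fast: treat "out of stock" and any exception uniformly as a failed attempt

- Decide whether to apply rate limiting so the endpoint cannot be hammered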

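Our architecture is shown below: nginx load-balances requests across 7 machines, Redis caching and Kafka processing sit in the middle, and the data ends up in MySQL with master-slave replication: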

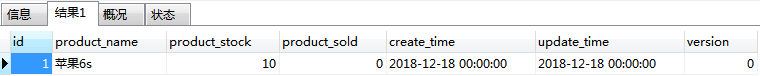

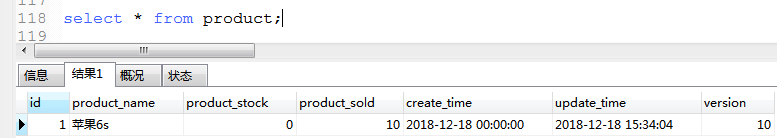

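For this article I deployed only a single application instance to simulate the setup. Below I reconstruct the endpoint step by step with code; all of it is on GitHub: https://github.com/simonsfan/SpikeDemo. Note that the code shown here has been simplified and extracted from the project. First, the table structure: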

CREATE TABLE `product` (

`id` INT NOT NULL AUTO_INCREMENT,

`product_name` VARCHAR(50) NOT NULL COMMENT 'product name',

`product_stock` INT NOT NULL COMMENT 'stock quantity',

`product_sold` INT(11) NOT NULL COMMENT 'quantity sold',

`create_time` datetime DEFAULT '1970-01-01 00:00:00' COMMENT 'participation time',

`update_time` datetime DEFAULT '1970-01-01 00:00:00' COMMENT 'update time',

`version` INT NOT NULL DEFAULT 0 COMMENT 'optimistic lock version number',

PRIMARY KEY (`id`),

INDEX idx_product_name(product_name)

) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=UTF8 COMMENT 'product stock table';

CREATE TABLE `pro_order` (

`id` INT NOT NULL AUTO_INCREMENT,

`product_id` INT NOT NULL COMMENT 'product ID',

`order_id` BIGINT NOT NULL COMMENT 'order ID',

`user_name` varchar(50) NOT NULL COMMENT 'user name',

`create_time` datetime DEFAULT '1970-01-01 00:00:00' COMMENT 'participation time',

`update_time` datetime DEFAULT '1970-01-01 00:00:00' COMMENT 'update time',

PRIMARY KEY (`id`)

) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=UTF8 COMMENT 'order table';

No synchronization control

@Slf4j

@Service

@Transactional

public class SpikeServiceImpl implements SpikeService {

@Autowired

private OrderService orderService;

@Autowired

private ProductService productService;

@Override

public String spike(Product product,String userName) {

Integer productId = Integer.valueOf(product.getId());

// Check the stock

Product restProduct = productService.checkStock(productId);

// Use the row just read from the DB; the incoming product only carries the id

if (restProduct.getProductStock() <= 0) {

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Deduct the stock

int updateStockCount = productService.updateStock(productId);

// Create the order record

Long orderId = Long.parseLong(RandomStringUtils.randomNumeric(18));

orderService.createOrder(new ProOrder(productId, orderId, userName));

return ResultUtil.success(ResultEnum.SUCCESS.getCode(),ResultEnum.SUCCESS.getMessage());

}

}

@Slf4j

@Controller

public class SpikeController {

@Autowired

private SpikeService spikeService;

@AccessLimit(time = 1, threshold = 5)

@ResponseBody

@RequestMapping(method = RequestMethod.GET, value = "/spike")

public String spike(@RequestParam(value = "id", required = false) String id,

@RequestParam(value = "username", required = false) String userName) {

log.info("/spike params:id={},username={}", id, userName);

Product product = new Product();

try {

if (StringUtils.isEmpty(id)) {

return ResultUtil.fail();

}

Integer productId = Integer.valueOf(id);

product.setId(productId);

} catch (Exception e) {

log.error("spike fail username={},e={}", userName, e.toString());

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

return spikeService.spike(product, userName);

}

}

<update id="updateStock" parameterType="java.lang.Integer">

update product set product_stock=product_stock-1,product_sold=product_sold+1,update_time=now() where id = #{id}

</update>

The basic flash-sale steps boil down to: check stock --> deduct stock --> create order. For testing I initialized the product with 10 items in stock:

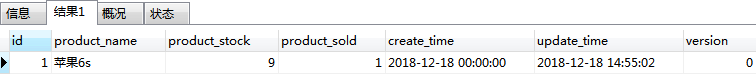

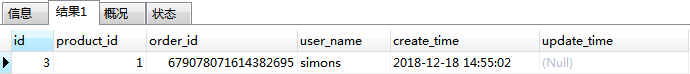

Visiting http://localhost:8080/spike?id=1&username=simons shows that the purchase succeeded: the stock dropped to 9 and an order record was created for that user.

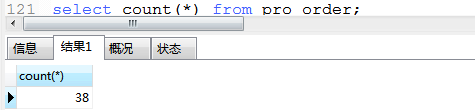

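This looks fine, but the bug is obvious: checking the stock and deducting it are not atomic, so there is a concurrency problem. I load-tested it with JMeter (see the JMeter tutorial linked at the end of this article), simulating 40 users hitting the endpoint concurrently. Part of the parameter file "秒杀模拟参数.txt" (flash-sale simulation parameters) looks like this: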

username,id

simons_1,1

simons_2,1

simons_3,1

……
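Writing this file by hand gets tedious as the user count grows. As a minimal sketch, assuming the `simons_N` naming shown above and a single product id, the rows could be generated like this (`SpikeParamFile` and `rows` are hypothetical names, not part of the project):

```java
import java.util.ArrayList;
import java.util.List;

class SpikeParamFile {
    /** Builds the CSV rows: a header followed by one "simons_N,productId" row per user. */
    static List<String> rows(int users, int productId) {
        List<String> rows = new ArrayList<>();
        rows.add("username,id");
        for (int i = 1; i <= users; i++) {
            rows.add("simons_" + i + "," + productId);
        }
        return rows;
    }
}
```

Passing the returned list to `java.nio.file.Files.write` produces a file in the same format as the sample above.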

Now look at the data in the stock table and the order table:

As expected, the product was oversold!
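To make the race concrete, here is a minimal in-memory sketch of the same check-then-deduct flow. It is not the project's code: the atomic counters stand in for the two tables, and a latch (an assumption added purely for reproducibility) forces the worst-case interleaving in which every request checks the stock before any request deducts it. `OversellDemo` is a hypothetical name.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class OversellDemo {
    /** Returns {ordersCreated, finalStock} after `users` concurrent attempts. */
    static int[] run(int initialStock, int users) {
        AtomicInteger stock = new AtomicInteger(initialStock);
        AtomicInteger orders = new AtomicInteger();
        // Nobody deducts until everybody has finished the stock check.
        CountDownLatch allChecked = new CountDownLatch(users);
        ExecutorService pool = Executors.newFixedThreadPool(users);
        for (int i = 0; i < users; i++) {
            pool.execute(() -> {
                boolean inStock = stock.get() > 0;  // step 1: check stock
                allChecked.countDown();
                try {
                    allChecked.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                if (inStock) {
                    stock.decrementAndGet();   // step 2: deduct stock
                    orders.incrementAndGet();  // step 3: create order
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] {orders.get(), stock.get()};
    }
}
```

With 10 items and 40 users, `OversellDemo.run(10, 40)` creates 40 orders and leaves the stock at -30, because every request saw a positive stock before anyone deducted.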

Adding an optimistic lock to control stock deduction

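In its simplest form, optimistic locking means adding a version column to the table and checking it on every update: the update is allowed only if the version still equals the value read by the earlier select; otherwise a concurrent modification has occurred. Here, the stock update compares the current version with the one read when the stock was checked. If they differ, someone else modified the row in the meantime and this update is discarded. (Adding product_stock > 0 to the WHERE clause of the stock update works as well.)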

@Slf4j

@Service

@Transactional

public class SpikeServiceImpl implements SpikeService {

@Autowired

private OrderService orderService;

@Autowired

private ProductService productService;

@Override

public String spike(Product pro, String userName) {

Integer productId = Integer.valueOf(pro.getId());

// Check the stock

Product product = productService.checkStock(productId);

if (product.getProductStock() <= 0) {

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Deduct the stock; this is the only difference from the first version

int updateStockCount = productService.updateStockVersion(product);

if (updateStockCount == 0) {

log.error("spike failed, username={},id={}", userName, pro.getId());

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Create the order record

Long orderId = Long.parseLong(RandomStringUtils.randomNumeric(18));

orderService.createOrder(new ProOrder(productId, orderId, userName));

return ResultUtil.success(ResultEnum.SUCCESS.getCode(),ResultEnum.SUCCESS.getMessage());

}

}

<update id="updateStockVersion" parameterType="com.spike.demo.bean.Product">

update product set product_stock=product_stock-1,product_sold=product_sold+1,update_time=now(),version=version+1 where id=#{id} and version=#{version}

</update>

For this load test I grew "秒杀模拟参数.txt" to 400 users, i.e. 400 simulated users competing for the product. The results:

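However many times I ran the test, exactly 10 users got the product when the stock was 10, so the stock was kept under control. The thing to note, though, is that over multiple runs the endpoint's TPS (transactions per second, roughly its throughput) averaged about 200: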
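The version check can be illustrated without a database. The following is a minimal sketch, assuming a single in-memory row whose synchronized methods stand in for the SELECT and for the `UPDATE ... WHERE version = ?` statement; `VersionedStock` and its method names are hypothetical.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class VersionedStock {
    private int stock;
    private int version;

    VersionedStock(int stock) {
        this.stock = stock;
    }

    /** Mimics the SELECT: returns {stock, version} as one consistent snapshot. */
    synchronized int[] read() {
        return new int[] {stock, version};
    }

    /** Mimics UPDATE ... WHERE version = expected; returns the affected row count. */
    synchronized int updateIfVersion(int expectedVersion) {
        if (version != expectedVersion) {
            return 0;  // someone else updated the row first
        }
        stock--;
        version++;
        return 1;
    }

    /** Returns {ordersCreated, finalStock} after `users` concurrent attempts. */
    static int[] simulate(int initialStock, int users) {
        VersionedStock row = new VersionedStock(initialStock);
        AtomicInteger orders = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(16);
        for (int i = 0; i < users; i++) {
            pool.execute(() -> {
                int[] snapshot = row.read();
                if (snapshot[0] <= 0) {
                    return;  // sold out: fail fast
                }
                if (row.updateIfVersion(snapshot[1]) == 1) {
                    orders.incrementAndGet();  // only the winner of this version creates an order
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] {orders.get(), row.read()[0]};
    }
}
```

A request that loses the version race simply fails and is not retried, so in this sketch the stock can never go negative and the number of orders always equals the number of units deducted.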

Using a Redis cache to reduce DB load

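As analyzed above, the stock is tiny compared with the number of participants and very few people actually succeed, so we should intercept requests as far upstream as possible. For example, out of 400 requests, once the first 10 have succeeded the remaining 390 can return immediately. So when the stock reaches 0 we set a flag in Redis marking the product as sold out; alternatively, you could use Redis's decr/incr commands to manage the stock and version directly. Either works, since Redis operates in memory and is very fast. I use the sold-out flag here.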

@Slf4j

@Service

@Transactional

public class SpikeServiceImpl implements SpikeService {

@Autowired

private OrderService orderService;

@Autowired

private ProductService productService;

@Resource(name = "redisTemplate")

private RedisTemplate<String, Object> redisTemplate;

private final String SPIKE_KEY = "spike:limit";

private final String SPIKE_VALUE = "over";

@Override

public String spike(Product pro, String userName) {

Integer productId = Integer.valueOf(pro.getId());

// Before checking the stock, see whether the cache already holds the sold-out flag

String flag = (String) redisTemplate.opsForValue().get(SPIKE_KEY);

if (StringUtils.isNotEmpty(flag)) {

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

Product product = productService.checkStock(productId);

if (product.getProductStock() <= 0) {

redisTemplate.opsForValue().set(SPIKE_KEY, SPIKE_VALUE, 12, TimeUnit.HOURS);

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Deduct the stock

int updateStockCount = productService.updateStockVersion(product);

if (updateStockCount == 0) {

log.error("spike failed, username={},id={}", userName, pro.getId());

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Create the order record

Long orderId = Long.parseLong(RandomStringUtils.randomNumeric(18));

orderService.createOrder(new ProOrder(productId, orderId, userName));

return ResultUtil.success(ResultEnum.SUCCESS.getCode(), ResultEnum.SUCCESS.getMessage());

}

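}

Again simulating 400 users, the TPS with the cache averaged about 350 over multiple runs, roughly 150 more than before (about a 75% increase), a significant gain in throughput.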
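This section mentions Redis decr/incr as an alternative to the sold-out flag without showing it. Below is a minimal in-memory sketch of that idea, with an `AtomicInteger` standing in for the Redis counter so the example runs without a Redis server (that substitution is an assumption, and `DecrStockGate` is a hypothetical name). Redis's DECR command is atomic in the same sense as `decrementAndGet`.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class DecrStockGate {
    // Stand-in for a Redis counter key preloaded with the stock
    private final AtomicInteger remaining;

    DecrStockGate(int initialStock) {
        this.remaining = new AtomicInteger(initialStock);
    }

    /** Mimics DECR: only callers that see a non-negative result hold a unit of stock. */
    boolean tryAcquire() {
        return remaining.decrementAndGet() >= 0;
    }

    /** Counts how many of `users` concurrent callers pass the gate. */
    static int countWinners(int initialStock, int users) {
        DecrStockGate gate = new DecrStockGate(initialStock);
        AtomicInteger winners = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(16);
        for (int i = 0; i < users; i++) {
            pool.execute(() -> {
                if (gate.tryAcquire()) {
                    winners.incrementAndGet();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return winners.get();
    }
}
```

Because each decrement returns a distinct value, exactly `initialStock` callers see a non-negative result, so only those requests would need to continue to the database.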

Using a ZooKeeper distributed lock to control stock

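ZooKeeper is a high-performance, stable distributed coordination service that is widely used by Hadoop, Storm, Kafka and similar products. Its ephemeral sequential nodes are a natural fit for implementing a distributed lock (see the ZooKeeper distributed lock article linked at the end).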

@Slf4j

@Service

@Transactional

public class SpikeServiceImpl implements SpikeService {

@Autowired

private OrderService orderService;

@Autowired

private ProductService productService;

@Override

public String spike(Product pro, String userName) {

DistributedLock lock = new DistributedLock("spike-act");

try {

Integer productId = Integer.valueOf(pro.getId());

if (lock.acquireLock()) {

// Check the stock

Product product = productService.checkStock(productId);

if (product.getProductStock() <= 0) {

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Deduct the stock

productService.updateStock(product.getId());

// Create the order record

Long orderId = Long.parseLong(RandomStringUtils.randomNumeric(18));

orderService.createOrder(new ProOrder(productId, orderId, userName));

return ResultUtil.success(ResultEnum.SUCCESS.getCode(), ResultEnum.SUCCESS.getMessage());

} else {

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

} finally {

lock.releaseLock();

}

}

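}

Over multiple runs, the ZooKeeper-based distributed lock also kept the product from being oversold, but the TPS was low, averaging around 10 (same 400 requests), far below both the optimistic-lock version and the optimistic-lock-plus-cache version.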

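This matters even more for us because nginx spreads our service across 7 machines. With a ZooKeeper distributed lock, those 7 machines effectively behave like one: every request queues for the lock, and throughput becomes far too low.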
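The serializing effect is easy to see in a single-process sketch. Here a `ReentrantLock` stands in for the ZooKeeper lock (an assumption for the sake of a runnable example; a real distributed lock also pays network round trips on every acquire and release), and `LockedSpike` is a hypothetical name.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class LockedSpike {
    // Stand-in for the distributed lock: one holder at a time
    private final ReentrantLock lock = new ReentrantLock();
    private int stock;
    private int orders;

    LockedSpike(int stock) {
        this.stock = stock;
    }

    /** Check, deduct and create the order while holding the lock. */
    boolean spike() {
        lock.lock();
        try {
            if (stock <= 0) {
                return false;
            }
            stock--;
            orders++;
            return true;
        } finally {
            lock.unlock();
        }
    }

    /** Reads {orders, stock} under the lock. */
    int[] snapshot() {
        lock.lock();
        try {
            return new int[] {orders, stock};
        } finally {
            lock.unlock();
        }
    }

    /** Returns {ordersCreated, finalStock} after `users` concurrent attempts. */
    static int[] simulate(int initialStock, int users) {
        LockedSpike service = new LockedSpike(initialStock);
        ExecutorService pool = Executors.newFixedThreadPool(16);
        for (int i = 0; i < users; i++) {
            pool.execute(service::spike);
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return service.snapshot();
    }
}
```

The result is always correct (10 orders, stock 0 for 10 items and 400 users), but all 16 worker threads take turns inside `spike()`, which mirrors how the 7 machines end up queuing behind one lock.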

Asynchronous processing with Kafka

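Next, make the order-creation step asynchronous by pushing it to Kafka. This is safe to defer because reaching order creation means the request has already won the flash sale.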

@Slf4j

@Service

@Transactional

public class SpikeServiceImpl implements SpikeService {

@Autowired

private OrderService orderService;

@Autowired

private ProductService productService;

@Resource(name = "redisTemplate")

private RedisTemplate<String, Object> redisTemplate;

@Autowired

private KafkaTemplate<String, String> kafkaTemplate;

private final String SPIKE_KEY = "spike:limit";

private final String SPIKE_VALUE = "over";

private final String SPIKE_TOPIC = "spike_topic";

@Override

public String spike(Product pro, String userName) {

Integer productId = Integer.valueOf(pro.getId());

// Before checking the stock, see whether the cache already holds the sold-out flag

String flag = (String) redisTemplate.opsForValue().get(SPIKE_KEY);

if (StringUtils.isNotEmpty(flag)) {

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

Product product = productService.checkStock(productId);

if (product.getProductStock() <= 0) {

redisTemplate.opsForValue().set(SPIKE_KEY, SPIKE_VALUE, 12, TimeUnit.HOURS);

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Deduct the stock

int updateStockCount = productService.updateStockVersion(product);

if (updateStockCount == 0) {

log.error("spike failed, username={},id={}", userName, pro.getId());

return ResultUtil.success(ResultEnum.SPIKEFAIL.getCode(), ResultEnum.SPIKEFAIL.getMessage());

}

// Hand order creation over to Kafka

String content = productId + ":" + userName;

kafkaTemplate.send(SPIKE_TOPIC, content);

return ResultUtil.success(ResultEnum.SUCCESS);

}

@KafkaListener(topics = SPIKE_TOPIC)

public void messageConsumerHandler(String content) {

log.info("Entering Kafka consumer, content:{}", content);

// Create the order record

Long orderId = Long.parseLong(RandomStringUtils.randomNumeric(18));

// A limit of 2 keeps a user name that itself contains ':' in one piece

String[] split = content.split(":", 2);

orderService.createOrder(new ProOrder(Integer.valueOf(split[0]), orderId, split[1]));

log.info("Order created successfully");

}

}

I won't include screenshots of the load-test results this time.
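The producer/consumer split can be sketched without a broker. In the example below a `LinkedBlockingQueue` stands in for the Kafka topic and a plain thread for the listener; both are assumptions made so the code runs on its own, and `AsyncOrderPipeline`, `STOP` and the order string format are hypothetical. Real Kafka adds persistence, partitions and delivery semantics that this sketch does not model.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;

class AsyncOrderPipeline {
    private static final String STOP = "__stop__";  // hypothetical shutdown marker
    private final BlockingQueue<String> topic = new LinkedBlockingQueue<>();
    private final List<String> orders = new CopyOnWriteArrayList<>();
    private final Thread consumer = new Thread(this::consume);

    void start() {
        consumer.start();
    }

    /** Request thread: enqueue "productId:userName" and return immediately. */
    void send(int productId, String userName) {
        topic.add(productId + ":" + userName);
    }

    /** Consumer thread: turns each message into an order record. */
    private void consume() {
        try {
            while (true) {
                String content = topic.take();
                if (content.equals(STOP)) {
                    return;
                }
                String[] parts = content.split(":", 2);
                orders.add("order[product=" + parts[0] + ",user=" + parts[1] + "]");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Drains the queue, stops the consumer and returns the created orders. */
    List<String> stopAndGetOrders() {
        topic.add(STOP);
        try {
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return orders;
    }
}
```

The point of the design is that `send` returns before the order exists, so the endpoint's response time no longer includes the order insert.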

API rate limiting

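Rate limiting protects the application by capping how many times a user can call the endpoint per second, which stops malicious users from hammering it. The code is as follows: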

/**

* @ClassName AccessLimit

* @Description Custom annotation

* @Author simonsfan

* @Date 2018/12/19

* Version 1.0

*/

@Documented

@Inherited

@Retention(value = RetentionPolicy.RUNTIME)

@Target(value = ElementType.METHOD)

public @interface AccessLimit {

/**

* By default, at most 4 requests within 1 second

* @return

*/

int threshold() default 4;

// Unit: seconds

int time() default 1;

}

/**

* @ClassName AccessLimitInterceptor

* @Description Custom interceptor

* @Author simonsfan

* @Date 2018/12/19

* Version 1.0

*/

@Component

public class AccessLimitInterceptor extends HandlerInterceptorAdapter {

@Resource(name="redisTemplate")

private RedisTemplate<String, Integer> redisTemplate;

@Override

public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) throws Exception {

if (handler instanceof HandlerMethod) {

HandlerMethod handlerMethod = (HandlerMethod) handler;

Method method = handlerMethod.getMethod();

boolean annotationPresent = method.isAnnotationPresent(AccessLimit.class);

if (!annotationPresent) {

// No @AccessLimit on this handler: let the request through unthrottled

return true;

}

AccessLimit accessLimit = method.getAnnotation(AccessLimit.class);

int threshold = accessLimit.threshold();

int time = accessLimit.time();

String ip = IpUtil.getIpAddrAdvanced(request);

Integer limitRecord = redisTemplate.opsForValue().get(ip);

if (limitRecord == null) {

redisTemplate.opsForValue().set(ip, 1, time, TimeUnit.SECONDS);

} else if (limitRecord < threshold) {

redisTemplate.opsForValue().set(ip, limitRecord+1, time, TimeUnit.SECONDS);

} else {

outPut(response, ResultEnum.FREQUENT);

return false;

}

}

return true;

}

public void outPut(HttpServletResponse response, ResultEnum resultEnum) {

try {

response.getWriter().write(ResultUtil.success(resultEnum.getCode(),resultEnum.getMessage()));

} catch (IOException e) {

e.printStackTrace();

}

}

@Override

public void postHandle(HttpServletRequest request, HttpServletResponse response, Object handler, @Nullable ModelAndView modelAndView) throws Exception {

}

@Override

public void afterCompletion(HttpServletRequest request, HttpServletResponse response, Object handler, @Nullable Exception ex) throws Exception {

}

}

/**

* @ClassName InterceptorConfig

* @Description Registers the custom interceptor

* @Author simonsfan

* @Date 2018/12/19

* Version 1.0

*/

@Configuration

public class InterceptorConfig implements WebMvcConfigurer {

@Autowired

private AccessLimitInterceptor accessLimitInterceptor;

@Override

public void addInterceptors(InterceptorRegistry registry) {

registry.addInterceptor(accessLimitInterceptor);

}

}

To use it, put the @AccessLimit annotation on the controller's spike method, for example:

@AccessLimit(threshold = 2, time = 5)

@ResponseBody

@RequestMapping(method = RequestMethod.GET, value = "/spike")

public String spike() {

// body omitted

}

For more detail on rate limiting, see this article: https://blog.csdn.net/fanrenxiang/article/details/80683378
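The counting logic can also be tried in isolation. The sketch below is a variation rather than the interceptor's exact behavior: it is a fixed-window counter that increments atomically per IP and, unlike the get-then-set calls in the interceptor, does not restart the timer on every request. A `ConcurrentHashMap` stands in for Redis and the clock is passed in explicitly; both are assumptions for testability, and `FixedWindowLimiter` is a hypothetical name.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class FixedWindowLimiter {
    /** Immutable per-IP state: when the window opened and how many hits it has seen. */
    private static final class Window {
        final long startMillis;
        final int count;

        Window(long startMillis, int count) {
            this.startMillis = startMillis;
            this.count = count;
        }
    }

    private final int threshold;
    private final long windowMillis;
    private final Map<String, Window> windows = new ConcurrentHashMap<>();

    FixedWindowLimiter(int threshold, int timeSeconds) {
        this.threshold = threshold;
        this.windowMillis = timeSeconds * 1000L;
    }

    /** Returns true if the request from `ip` at `nowMillis` is within the limit. */
    boolean allow(String ip, long nowMillis) {
        // compute() runs atomically per key, so concurrent hits cannot lose an increment
        Window w = windows.compute(ip, (key, old) -> {
            if (old == null || nowMillis - old.startMillis >= windowMillis) {
                return new Window(nowMillis, 1);  // first hit opens a new window
            }
            return new Window(old.startMillis, old.count + 1);  // keep the original expiry
        });
        return w.count <= threshold;
    }
}
```

With `new FixedWindowLimiter(5, 1)`, the first five calls from one IP inside a second are allowed, the sixth is rejected, and a call one second after the window opened starts a fresh window.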

Project on GitHub: https://github.com/simonsfan/SpikeDemo

Load-testing an endpoint with JMeter custom variables: https://blog.csdn.net/fanrenxiang/article/details/80753159

Implementing a distributed lock with ZooKeeper: https://blog.csdn.net/fanrenxiang/article/details/81704691

API rate limiting: https://blog.csdn.net/fanrenxiang/article/details/80683378

Kafka quick start: http://kafka.apache.org/quickstart

The JMeter test file and the 秒杀模拟参数.txt file used in this article: https://pan.baidu.com/s/1JMxDCp-wQVFJTpSXUhhLbA