I'm new to Scrapy, and this is a practice project. All of the work was debugged on a Mac.

Basic approach and steps:

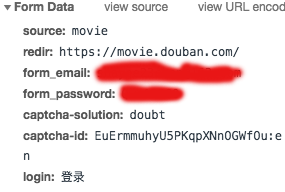

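1. Viewing all short reviews requires being logged in, so the login has to be simulated. Request the login URL and submit the form with FormRequest; the exact form parameters can be found in the browser's developer tools.

Here, captcha-solution is the text shown in the captcha image and captcha-id is the ID of the captcha image. The login page's HTML has to be parsed first to get the captcha image ID for each login attempt.

I originally planned to solve the captcha through the Baidu image recognition API, but its accuracy was too low, so I switched to recognizing it by hand. The captcha image is downloaded to the local disk and its ID is parsed out automatically, then the image is opened with the Image module and the captcha is typed in at the terminal while the spider runs.

2. After a successful login the spider lands on the Douban Movie home page, where movies can be searched by name; enter the name of the movie to crawl at the terminal. Because the search results page depends on JavaScript, Scrapy cannot parse it correctly, so from this point Selenium is used to navigate to the movie's short-review page and return its URL.

3. Start crawling: extract the username, rating, and short review, and store them in MongoDB.

4. Read the data back from MongoDB and visualize it with wordcloud.

5. Anti-crawler countermeasures: modify the Scrapy source code so that USER-AGENT matches the local browser. Cookies are passed along through Scrapy's cookiejar, which requires enabling cookie tracking in the configuration file.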
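The configuration mentioned in step 5 might look like the settings.py fragment below. This is a sketch, not the project's actual file: the User-Agent string, the pipeline path `douban.pipelines.DoubanPipeline`, and every MongoDB value are placeholder assumptions, and only the `MONGODB_*` key names are taken from the pipeline code. Setting `USER_AGENT` in the project settings is one way to get the same effect without editing Scrapy's own source.

```python
# settings.py (sketch; every value below is a placeholder assumption)

# Present the crawler as a desktop browser; copy the real string from your own browser
USER_AGENT = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_1) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0 Safari/537.36"
)

# Keep cookies between requests so the cookiejar meta key has something to carry
COOKIES_ENABLED = True
# Log the Cookie / Set-Cookie headers, which helps when debugging the login
COOKIES_DEBUG = True

# Assumed project package name "douban"
ITEM_PIPELINES = {"douban.pipelines.DoubanPipeline": 300}

# Keys read by the MongoDB pipeline
MONGODB_HOST = "127.0.0.1"
MONGODB_PORT = 27017
MONGODB_DBNAME = "douban"
MONGODB_TABLE = "comments"
```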

Code example

items.py

    import scrapy


    class DoubanItem(scrapy.Item):
        # One short review scraped from a movie's comment page
        user = scrapy.Field()     # reviewer's username
        score = scrapy.Field()    # rating; 0 when no rating is found
        comment = scrapy.Field()  # text of the short review

Core part

    import os
    import re
    import time
    import urllib.parse
    import urllib.request

    import scrapy
    from PIL import Image
    from scrapy import FormRequest, Request
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    from ..items import DoubanItem  # assumes the standard Scrapy project layout


    class DoubanSpider(scrapy.Spider):
        name = "douban"
        allowed_domains = ['douban.com']

        def start_requests(self):
            login_url = 'https://accounts.douban.com/login'
            # The cookiejar meta key keeps one cookie session across all requests
            return [Request(login_url, callback=self.login, meta={'cookiejar': 1})]

        def login(self, response):
            data = {
                "source": "movie",
                "redir": "https://movie.douban.com/",
                "form_email": "******",
                "form_password": "******",
                "login": "登录",
            }
            img_path = '/Users/shawn/Downloads/check.png'
            if os.path.exists(img_path):
                os.remove(img_path)
            img_url = response.xpath('//*[@id="captcha_image"]/@src').extract_first()
            if img_url is not None:
                # A captcha is shown: download it, display it, and ask for its text
                img_id = img_url.split("id=")[1].split('&')[0]
                urllib.request.urlretrieve(img_url, filename=img_path)
                Image.open(img_path).show()
                data["captcha-id"] = img_id
                data["captcha-solution"] = input("Input the check:")
            # The same form submission works with or without the captcha fields
            return FormRequest.from_response(
                response,
                meta={'cookiejar': response.meta['cookiejar']},
                formdata=data,
                callback=self.parse_main,
            )

        def parse_main(self, response):
            movie_name = input("Input movie name: ")
            # Percent-encode the name so non-ASCII titles form a valid URL
            search_url = response.urljoin(
                "subject_search?search_text=%s&cat=1002" % urllib.parse.quote(movie_name))
            # Passing the driver path positionally is the older Selenium style;
            # recent Selenium releases take a Service object instead
            browser = webdriver.Chrome("/Users/shawn/Downloads/chromedriver")
            browser.get(search_url)
            time.sleep(1)
            # Open the first search result
            browser.find_element(
                By.XPATH,
                '//*[@id="root"]/div/div[2]/div[1]/div[1]/div[1]/div[1]/div/div[1]/a').click()
            time.sleep(1)
            # Follow the link to the full short-review page
            browser.find_element(
                By.XPATH, '//*[@id="comments-section"]/div[1]/h2/span/a').click()
            time.sleep(1)
            content_url = browser.current_url
            browser.quit()
            return Request(content_url, callback=self.parse,
                           meta={'cookiejar': response.meta['cookiejar']})

        def parse(self, response):
            for person_comment in response.xpath('//div[contains(@class,"comment-item")]'):
                item = DoubanItem()  # a fresh item per review, so yielded items are not overwritten
                item["user"] = person_comment.xpath('./div[2]/h3/span[2]/a/text()').extract_first()
                item["comment"] = person_comment.xpath('./div[2]/p/span/text()').extract_first()
                # Digits that appear before the title attribute carry the rating
                score_html = person_comment.xpath('./div[2]/h3/span[2]/span[2]').extract_first() or ""
                score_list = re.findall(r'\d+', score_html.split("title")[0])
                item["score"] = int(score_list[0]) if score_list else 0
                yield item

            next_url = response.xpath('//*[@id="paginator"]/a[last()]/@href').extract_first()
            # Stop when there is no link left or it points back before the first page
            if next_url and "limit=-20" not in next_url:
                yield Request(url=response.urljoin(next_url), callback=self.parse,
                              meta={'cookiejar': response.meta['cookiejar']})
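The two string-parsing steps in the spider (pulling the captcha ID out of the image URL and reading the rating out of the rating span) can be isolated as small pure functions, which makes them easy to check without logging in. The sketch below is an illustration only: the function names and the sample inputs are hypothetical, and the sample HTML merely assumes the rating digits sit in the span's class attribute ahead of `title`, which is what the spider's own parsing implies.

```python
import re
from urllib.parse import parse_qs, urlparse


def captcha_id_from_url(img_url):
    """Return the value of the `id` query parameter, or None if it is missing."""
    values = parse_qs(urlparse(img_url).query).get("id")
    return values[0] if values else None


def score_from_span(span_html):
    """Return the first number found before the title attribute, or 0 if none."""
    if not span_html:
        return 0
    digits = re.findall(r"\d+", span_html.split("title")[0])
    return int(digits[0]) if digits else 0


# Hypothetical inputs shaped like what the spider would see
print(captcha_id_from_url("https://www.douban.com/misc/captcha?id=abc123:en&size=s"))  # abc123:en
print(score_from_span('<span class="allstar40 rating" title="x"></span>'))  # 40
print(score_from_span('<span class="comment-time" title="2018-12-08"></span>'))  # 0
```

Using `parse_qs` rather than chained `split` calls means a URL without an `id` parameter yields None instead of raising IndexError.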

pipelines.py

    import pymongo


    class DoubanPipeline(object):
        def __init__(self, host, port, dbname, table):
            client = pymongo.MongoClient(host=host, port=port)
            self.collection = client[dbname][table]

        @classmethod
        def from_crawler(cls, crawler):
            # scrapy.conf is deprecated; read the settings from the crawler instead
            s = crawler.settings
            return cls(s["MONGODB_HOST"], s.getint("MONGODB_PORT"),
                       s["MONGODB_DBNAME"], s["MONGODB_TABLE"])

        def process_item(self, item, spider):
            # insert_one replaces the deprecated Collection.insert
            self.collection.insert_one(dict(item))
            return item

Let's crawl the data for the movie Aquaman (《海王》) and take a look.

Word cloud of frequent terms
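A word cloud like this could be produced roughly as follows. This is a minimal sketch under stated assumptions, not the code used for the original figure: the function names, the MongoDB connection values, and the output and font paths are all placeholders, and the tokenizer is a crude stand-in that treats each unbroken run of Chinese characters as one token. A proper Chinese word segmenter would give better terms.

```python
import re
from collections import Counter


def word_frequencies(comments, min_length=2):
    """Count tokens across comments; a token is a run of CJK characters or ASCII letters."""
    counts = Counter()
    for text in comments:
        if not text:
            continue  # skip reviews with no stored text
        for token in re.findall(r"[\u4e00-\u9fff]+|[A-Za-z]+", text):
            if len(token) >= min_length:
                counts[token.lower()] += 1
    return dict(counts)


def load_comments(host="127.0.0.1", port=27017, dbname="douban", table="comments"):
    """Read the comment field of every stored review (connection values are placeholders)."""
    import pymongo  # imported lazily so the counting helper works without MongoDB

    collection = pymongo.MongoClient(host=host, port=port)[dbname][table]
    return [doc.get("comment") for doc in collection.find({}, {"comment": 1})]


def save_wordcloud(frequencies, out_path, font_path):
    """Render the frequencies to an image; font_path must point to a CJK-capable font."""
    from wordcloud import WordCloud  # imported lazily for the same reason as pymongo

    cloud = WordCloud(font_path=font_path, width=800, height=600, background_color="white")
    cloud.generate_from_frequencies(frequencies).to_file(out_path)
```

With MongoDB running and the reviews stored, calling `save_wordcloud(word_frequencies(load_comments()), out_path, font_path)` with paths of your choosing would write the image. A font path is passed explicitly because wordcloud's default font does not cover Chinese characters.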