Copyright notice: this is an original article by the blogger and may not be reposted without the blogger's permission. https://blog.csdn.net/qq_29622761/article/details/84106626

Crawl target

http://www.boc.cn/sdbapp/rwmerchant/sra32/

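Technologies used

- requests to fetch the pages

- re regular expressions to pull links and page counts out of the raw text

- XPath (via lxml) to parse the HTML of each detail page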
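As a rough illustration of how the regular-expression and XPath pieces fit together, here is a minimal sketch that runs without third-party packages. It swaps in the standard-library `xml.etree.ElementTree` for lxml, which only supports a subset of XPath and, unlike lxml's HTML parser, needs well-formed markup. The sample markup, the `clean` helper, and the `extract_fields` function are all hypothetical and only resemble the cells the crawler code looks for.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample that imitates a merchant detail table.
SAMPLE = """
<table>
  <tr><td colspan="3">Example Merchant</td></tr>
  <tr><td colspan="5">1 Example Road</td></tr>
  <tr><td colspan="5">010-12345678</td></tr>
  <tr><td class="dashlv"><p>10% off
      on weekends</p></td></tr>
</table>
"""


def clean(text):
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return re.sub(r"\s+", " ", text or "").strip()


def extract_fields(markup):
    """Pick out a few fields with ElementTree's limited XPath support."""
    root = ET.fromstring(markup.strip())
    name_cells = root.findall(".//td[@colspan='3']")
    wide_cells = root.findall(".//td[@colspan='5']")
    content = root.findall(".//td[@class='dashlv']/p")
    return {
        "name": clean("".join(td.text or "" for td in name_cells)),
        # Address and phone are assumed to be the first and second wide cells.
        "address": clean(wide_cells[0].text) if len(wide_cells) > 0 else "",
        "phone": clean(wide_cells[1].text) if len(wide_cells) > 1 else "",
        "content": clean("".join(p.text or "" for p in content)),
    }


fields = extract_fields(SAMPLE)
print(fields)
```

Note that `clean` replaces line breaks with a single space, whereas the crawler code deletes them outright; which is preferable depends on how the site wraps its text.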

Crawling approach

- Start the crawler

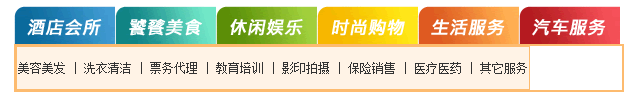

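- First find the top-level categories, for example:

- For each category, find the links to its paginated listing pages

- Parse each listing page to collect the links to the individual merchants

- Fetch and parse every merchant link

- Crawler finished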
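The steps above can be sketched as small pure functions that work on text only, which makes each step easy to check without touching the network. The regular expressions mirror the ones in the crawler code; the function names and the sample strings in the usage lines are hypothetical, and the real pages may be laid out differently.

```python
import re

BASE = "http://www.boc.cn/sdbapp/rwmerchant"


def category_paths(js_text):
    """Step 1: category paths look like /dddd/dddd/dddd/ in the JS file."""
    return re.findall(r"/\d{4}/\d{4}/\d{4}/", js_text)


def total_pages(category_html):
    """Step 2a: the page count is the second argument of the getNav call."""
    match = re.search(r'getNav\(0,(\d+),"index","html"\)', category_html)
    return int(match.group(1)) if match else 0


def page_urls(category_url, pages):
    """Step 2b: page 0 is the category URL itself, later pages are index_N.html."""
    return [
        category_url if i == 0 else "{}index_{}.html".format(category_url, i)
        for i in range(pages)
    ]


def detail_urls(listing_html):
    """Step 3: merchant links are relative paths starting with ../../.. ."""
    paths = re.findall(r"\.\./\.\./\.\.(/\d+/t\d+_\d+\.html)", listing_html)
    return [BASE + path for path in paths]


# Hypothetical sample inputs, for illustration only.
sample_js = 'var a = "/1001/2002/3003/"; var b = "/1001/2002/3004/";'
sample_listing = '<a href="../../../201811/t20181112_123456.html">shop</a>'

print(category_paths(sample_js))
print(page_urls(BASE + "/1001/2002/3003/", 3))
print(detail_urls(sample_listing))
```

Keeping the parsing separate from the downloading also means a failed request only has to be handled in one place.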

Crawler code

# -*- coding: utf-8 -*-
import os
import re
import time

import lxml
import requests
import xlrd
import xlwt
from lxml import etree
from xlutils.copy import copy


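def get_page(url):
    """Fetch url and return the response, or None if the request fails."""
    try:
        # The timeout is an added safeguard so a stalled request cannot hang the crawl.
        response = requests.get(url, timeout=10)
        if response.status_code == 200:
            return response
    except requests.RequestException:
        pass
    return None
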

def parse_detail_page(detail_html):
    """Extract the merchant fields from a parsed detail page."""
    company_name = ''.join(detail_html.xpath('//td[@colspan="3"]/text()'))
    # Address and phone are the first and second text nodes of the colspan="5" cells.
    wide_cells = detail_html.xpath('//td[@colspan="5"]/text()')
    company_address = wide_cells[0] if len(wide_cells) > 0 else ''
    company_phone = wide_cells[1] if len(wide_cells) > 1 else ''
    company_type = ''.join(detail_html.xpath('//td[@width="161"]/text()'))
    company_belongcity = ''.join(detail_html.xpath('//td[@width="141"]/text()'))
    activity_deadtime = ''.join(detail_html.xpath('//td[@width="180"]/text()')).strip().replace('\r\n', '').replace('\n', '')
    activity_content = ''.join(detail_html.xpath('//td[@class="dashlv"]/p/text()')).strip().replace('\r\n', '').replace('\n', '')
    # The field order matches the header row written by save().
    info_list = [company_name, company_phone, company_address, company_belongcity, company_type, activity_deadtime, activity_content]
    return info_list


def write_data(sheet, row, lst):
    """Write each record in lst as one sheet row, starting at row index `row`."""
    for data_infos in lst:
        for j, data in enumerate(data_infos):
            sheet.write(row, j, data)
        row += 1


def save(file_name, data):
    """Append data to file_name, creating the workbook with a header row if needed."""
    if os.path.exists(file_name):
        # Open the existing workbook
        rb = xlrd.open_workbook(file_name, formatting_info=True)
        # Use xlrd to get the number of rows already present
        rn = rb.sheets()[0].nrows
        # Make a writable copy of the workbook
        wb = copy(rb)
        # Get the first sheet of the copy
        sheet = wb.get_sheet(0)
        # Append the new rows after the existing ones
        write_data(sheet, rn, data)
        # Delete the old file
        os.remove(file_name)
        # Save the updated copy under the same name
        wb.save(file_name)
    else:
        header = ['company_name', 'company_phone', 'company_address', 'company_belongcity', 'company_type', 'activity_deadtime', 'activity_content']
        book = xlwt.Workbook(encoding='utf-8')
        sheet = book.add_sheet('BOC card merchant offers')
        # Write the header row
        for h in range(len(header)):
            sheet.write(0, h, header[h])
        # Write the data rows below the header
        write_data(sheet, 1, data)
        book.save(file_name)


def main():
    print('*' * 80)
    print('\t\t\t\tBank of China card: merchant offer data download')
    print('Author: 谢华东, 2018.11.12')
    print('--------------')
    path = input('Enter the directory to save to (for example: C:\\Users\\xhdong1\\Desktop\\); leave empty to save to the current directory:\n')
    file_name = os.path.join(path, 'boc_card_merchant_offers.xls')
    print(file_name)
    # The category paths are embedded in this JavaScript file.
    js_response = get_page('http://www.boc.cn/sdbapp/images/boc08_sra3_p.js')
    if js_response is None:
        print('Could not download the category list, stopping.')
        return
    js = js_response.content.decode('utf-8')
    pattern = r'(/[0-9]{4}/[0-9]{4}/[0-9]{4}/)'
    url_list = re.findall(pattern, js, re.I)
    print('Top-level category links to crawl:')
    print(url_list)
    for category_path in url_list:
        base_url = 'http://www.boc.cn/sdbapp/rwmerchant' + category_path
        html = get_page(base_url)
        if html is None:
            continue
        html = html.content.decode('utf-8')
        # Work out how many pages this category has.
        match = re.search(r'getNav\(0,(\d+),"index","html"\)', html, re.I)
        if match is None:
            continue
        total_page = int(match.group(1))
        print('Crawling merchant data under ' + base_url)
        for i in range(total_page):
            # The first page is the category URL itself; later pages are index_1.html, index_2.html, ...
            if i == 0:
                second_url = base_url
            else:
                second_url = base_url + 'index_{i}.html'.format(i=i)
            print('{total} pages in total, crawling page {i}/{total}'.format(total=total_page, i=i + 1))
            second_html = get_page(second_url)
            if second_html is None:
                continue
            second_html = second_html.content.decode('utf-8')
            # Extract the link to each merchant detail page.
            detail_url_list = re.findall(r'\.\./\.\./\.\.(/\d+/t\d+_\d+\.html)', second_html, re.I)
            all_info_list = []
            for detail_path in detail_url_list:
                detail_url = 'http://www.boc.cn/sdbapp/rwmerchant' + detail_path
                detail_page = get_page(detail_url)
                try:
                    detail_page = etree.HTML(detail_page.content.decode('utf-8'))
                except Exception:
                    # Skip pages that could not be downloaded, decoded, or parsed.
                    continue
                if detail_page is None:
                    continue
                all_info_list.append(parse_detail_page(detail_page))
            # Save after every listing page so an interrupted run keeps its progress.
            save(file_name, all_info_list)
            time.sleep(1)
        print('----------------')
    print('Done')


if __name__ == '__main__':
    main()
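
The append-or-create logic in `save` can also be sketched with the standard-library `csv` module. This is a swapped-in technique rather than what the script above does: it writes a CSV file instead of an `.xls` workbook, so it needs none of xlrd, xlwt, or xlutils. The function name `save_csv` is hypothetical; the header is copied from `save`.

```python
import csv
import os

HEADER = [
    "company_name", "company_phone", "company_address",
    "company_belongcity", "company_type",
    "activity_deadtime", "activity_content",
]


def save_csv(file_name, rows):
    """Append rows to file_name, writing the header first if the file is new."""
    is_new = not os.path.exists(file_name)
    # Append mode creates the file when it is missing and never rewrites
    # existing rows, so there is no copy-and-replace step.
    with open(file_name, "a", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        if is_new:
            writer.writerow(HEADER)
        writer.writerows(rows)


def read_csv(file_name):
    """Read the file back as a list of rows, for checking the result."""
    with open(file_name, newline="", encoding="utf-8") as handle:
        return list(csv.reader(handle))
```

Calling `save_csv` once per listing page, as `main` does with `save`, yields one header row followed by all merchant rows in crawl order.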

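Acknowledgments

Thanks to life, and thanks to work. I used to think everything should be done quickly: read fast, drive fast, run fast, make money fast... These days I think everything should be done more slowly: eat slowly, read slowly, live slowly...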