References:

(1) sakura小樱: https://blog.csdn.net/Sakura55/article/details/80786499

(2) Zhou Zhihua, Machine Learning, Chapter 4 (Decision Trees)

================================================================================================

Why prune?

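As the tree grows, its accuracy on the training set rises monotonically, while its accuracy measured on an independent test set first rises and then falls.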

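Pruning is the main tool that decision tree learning algorithms use against overfitting. To classify the training samples correctly, decision tree learning repeats the node-splitting process again and again, which can produce too many branches. The tree may then learn the training samples "too well", mistaking peculiarities of the training set for general properties of all data, and so overfit. Actively removing some branches therefore lowers the risk of overfitting. The two basic pruning strategies are pre-pruning and post-pruning.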

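Pre-pruning: while the tree is being generated, each node is evaluated before it is split. If splitting the current node does not improve the tree's generalization performance, splitting stops and the node becomes a leaf, labeled with the class that has the most samples. Typical stopping rules: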

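(1) Minimum number of samples per node: for example, with a minimum of 10, a node holding fewer than 10 samples is not split further;

(2) Maximum tree height or depth: for example, a maximum depth of 4;

(3) Entropy threshold: stop splitting once a node's entropy falls below a given value.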
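The three stopping rules can be checked together before each split. The sketch below assumes a classification setting (class labels and entropy, as in the rules above); the regression-tree code later in this article uses different stopping parameters (tolS and tolN). The thresholds and the depth convention (root at depth 0) are illustrative assumptions, not values from the source.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    n = len(labels)
    counts = Counter(labels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def should_stop(labels, depth, min_samples=10, max_depth=4, min_entropy=0.1):
    """Return True if the node should become a leaf under rules (1)-(3).
    Default thresholds are illustrative only; depth 0 is the root."""
    if len(labels) < min_samples:       # rule (1): too few samples
        return True
    if depth >= max_depth:              # rule (2): maximum depth reached
        return True
    if entropy(labels) < min_entropy:   # rule (3): node is already (nearly) pure
        return True
    return False

def majority_label(labels):
    """Label of a pre-pruned leaf: the class with the most samples."""
    return Counter(labels).most_common(1)[0][0]

print(should_stop(['a'] * 5, depth=1))              # True: only 5 samples
print(should_stop(['a'] * 6 + ['b'] * 6, depth=1))  # False: large, impure, shallow
print(majority_label(['a', 'b', 'b']))              # b
```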

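Post-pruning: first grow a complete decision tree from the training set, then examine the non-leaf nodes bottom-up. If replacing the subtree rooted at a node with a leaf improves the tree's generalization performance, replace that subtree with a leaf. Common methods: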

(1) REP: Reduced-Error Pruning

(2) PEP: Pessimistic Error Pruning

(3) CCP: Cost-Complexity Pruning

(4) MEP: Minimum Error Pruning
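To make one of these methods concrete: CCP (the weakest-link pruning used by CART) scores a tree by R_alpha(T) = R(T) + alpha * |T|, where R(T) is the tree's error and |T| its number of leaves. For an internal node t, collapsing t into a single leaf becomes worthwhile once alpha reaches g(t) = (R(t) - R(T_t)) / (|T_t| - 1), and the node with the smallest g(t) is pruned first. The sketch below only computes these quantities; the node names and error values are made up, and this is not code from the article.

```python
def cost_complexity(error, n_leaves, alpha):
    """R_alpha(T) = R(T) + alpha * |T|: error plus a penalty per leaf."""
    return error + alpha * n_leaves

def effective_alpha(node_error, subtree_error, subtree_leaves):
    """g(t): the alpha at which collapsing node t into one leaf
    costs exactly as much as keeping its subtree T_t."""
    return (node_error - subtree_error) / (subtree_leaves - 1)

def weakest_link(candidates):
    """candidates maps a node name to (R(t), R(T_t), |T_t|).
    Returns the node CCP would prune first (smallest g(t))."""
    return min(candidates, key=lambda k: effective_alpha(*candidates[k]))

# Hypothetical internal nodes A and B
nodes = {'A': (10.0, 4.0, 4), 'B': (6.0, 5.0, 2)}
print(effective_alpha(*nodes['A']))  # 2.0
print(effective_alpha(*nodes['B']))  # 1.0
print(weakest_link(nodes))           # B
```

Repeating this step while increasing alpha yields a sequence of ever smaller trees, from which one is usually chosen with a validation set or cross-validation.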

A simple example

Pre-pruning

1)

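```python
import numpy as np

def binSplitDataSet(dataSet, feature, value):
    '''
    Split a data set into two subsets on one feature.
    :param dataSet: data set (one sample per row)
    :param feature: index of the feature to split on
    :param value: split value for that feature
    :return:
        mat0: rows whose feature value is greater than value
        mat1: rows whose feature value is less than or equal to value
    '''
    mat0 = dataSet[np.nonzero(dataSet[:, feature] > value)[0], :]
    mat1 = dataSet[np.nonzero(dataSet[:, feature] <= value)[0], :]
    return mat0, mat1

if __name__ == '__main__':
    # np.mat was removed in NumPy 2.0; np.asmatrix works in old and new versions
    testMat = np.asmatrix(np.eye(4))
    mat0, mat1 = binSplitDataSet(testMat, 1, 0.5)
    print('Original set:\n', testMat)
    print('mat0:\n', mat0)
    print('mat1:\n', mat1)
```

binSplitDataSet splits the data matrix by a simple rule: mat0 holds the rows whose value on the chosen feature is greater than the split value, and mat1 holds the rows whose value is less than or equal to it.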
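To see what the call above produces, the same split can be run on a plain NumPy array instead of an np.matrix (an assumption made for simplicity; the row indexing behaves the same here). In np.eye(4) only row 1 has a value greater than 0.5 in column 1, so that row alone lands in mat0.

```python
import numpy as np

def binSplitDataSet(dataSet, feature, value):
    # Rows whose `feature` column is > value go to mat0, all others to mat1
    mat0 = dataSet[np.nonzero(dataSet[:, feature] > value)[0], :]
    mat1 = dataSet[np.nonzero(dataSet[:, feature] <= value)[0], :]
    return mat0, mat1

testMat = np.eye(4)
mat0, mat1 = binSplitDataSet(testMat, 1, 0.5)
print(mat0)  # [[0. 1. 0. 0.]]  (row 1 only)
print(mat1)  # rows 0, 2 and 3 of the identity matrix
```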

```python
import numpy as np
import matplotlib.pyplot as plt

def binSplitDataSet(dataSet, feature, value):
    '''
    Split a data set into two subsets on one feature.
    :param dataSet: data set (one sample per row)
    :param feature: index of the feature to split on
    :param value: split value for that feature
    :return:
        mat0: rows whose feature value is greater than value
        mat1: rows whose feature value is less than or equal to value
    '''
    mat0 = dataSet[np.nonzero(dataSet[:, feature] > value)[0], :]
    mat1 = dataSet[np.nonzero(dataSet[:, feature] <= value)[0], :]
    return mat0, mat1

def loadDataSet(fileName):
    '''
    Load a tab-separated text file into a list of rows of floats.
    '''
    dataMat = []
    with open(fileName) as fr:  # the file is closed automatically
        for line in fr:  # read line by line
            if not line.strip():  # skip blank lines
                continue
            curLine = line.strip().split('\t')  # strip whitespace, split on tabs
            fltLine = list(map(float, curLine))  # convert to float
            dataMat.append(fltLine)  # add the row
    return dataMat

def plotDataSet(fileName):
    '''
    Scatter-plot the first column (x) against the second column (y).
    '''
    dataMat = loadDataSet(fileName)
    n = len(dataMat)
    xcord = []
    ycord = []
    for i in range(n):
        xcord.append(dataMat[i][0])
        ycord.append(dataMat[i][1])
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(xcord, ycord, s=20, c='blue', alpha=.5)
    plt.title('DataSet')
    plt.xlabel('X')
    plt.show()

if __name__ == '__main__':
    # testMat = np.asmatrix(np.eye(4))
    # mat0, mat1 = binSplitDataSet(testMat, 1, 0.5)
    # print('Original set:\n', testMat)
    # print('mat0:\n', mat0)
    # print('mat1:\n', mat1)
    fileName = 'ex00.txt'
    plotDataSet(fileName)
```

Add the following code:

```python
def regLeaf(dataSet):
    '''
    Create a leaf node.
    :param dataSet: data set
    :return: mean of the target variable (last column)
    '''
    return np.mean(dataSet[:, -1])

def regErr(dataSet):
    '''
    Error estimate.
    :param dataSet: data set
    :return: total squared error of the target (variance times number of samples)
    '''
    return np.var(dataSet[:, -1]) * np.shape(dataSet)[0]

def chooseBestSplit(dataSet, leafType=regLeaf, errType=regErr, ops=(1, 4)):
    '''
    Find the best binary split of the data.
    :param dataSet: data set
    :param leafType: function that creates a leaf node
    :param errType: error estimate function
    :param ops: tuple of user-defined parameters (tolS, tolN)
    :return:
        bestIndex - best feature to split on (None if a leaf is created)
        bestValue - best split value (or the leaf value)
    '''
    tolS = ops[0]  # minimum error reduction required to accept a split
    tolN = ops[1]  # minimum number of samples allowed on each side of a split
    # If all target values are identical, stop and create a leaf
    if len(set(dataSet[:, -1].T.tolist()[0])) == 1:
        return None, leafType(dataSet)
    m, n = np.shape(dataSet)
    S = errType(dataSet)  # error before splitting
    bestS = float('inf')
    bestIndex = 0
    bestValue = 0
    for featIndex in range(n - 1):  # the last column is the target
        for splitVal in set(dataSet[:, featIndex].T.A.tolist()[0]):
            mat0, mat1 = binSplitDataSet(dataSet, featIndex, splitVal)
            # Skip splits that leave fewer than tolN samples on either side
            if (np.shape(mat0)[0] < tolN) or (np.shape(mat1)[0] < tolN):
                continue
            newS = errType(mat0) + errType(mat1)
            if newS < bestS:
                bestIndex = featIndex
                bestValue = splitVal
                bestS = newS
    # If the error reduction is smaller than tolS, do not split
    if (S - bestS) < tolS:
        return None, leafType(dataSet)
    mat0, mat1 = binSplitDataSet(dataSet, bestIndex, bestValue)
    # If either subset is too small, do not split
    if (np.shape(mat0)[0] < tolN) or (np.shape(mat1)[0] < tolN):
        return None, leafType(dataSet)
    return bestIndex, bestValue

if __name__ == '__main__':
    # testMat = np.asmatrix(np.eye(4))
    # mat0, mat1 = binSplitDataSet(testMat, 1, 0.5)
    # print('Original set:\n', testMat)
    # print('mat0:\n', mat0)
    # print('mat1:\n', mat1)
    # fileName = 'ex00.txt'
    # plotDataSet(fileName)
    myDat = loadDataSet('ex00.txt')
    myMat = np.asmatrix(myDat)
    feat, val = chooseBestSplit(myMat, regLeaf, regErr, (1, 4))
    print(feat)
    print(val)
```

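The best split feature is the first column (index 0, so feat prints 0) and the best split value is 0.48813. How is this value chosen? By the error estimate: chooseBestSplit tries every value of every feature and keeps the one that minimizes the total error of the two resulting subsets. Setting tolS and tolN is a form of pre-pruning: a split is rejected if it reduces the error by less than tolS or leaves fewer than tolN samples on either side.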
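The roles of regErr, tolS and tolN show up clearly on a toy problem. The sketch below is a simplified, single-feature re-implementation of the chooseBestSplit logic on plain NumPy arrays (an assumption made for brevity, not the original function). Here reg_err computes the same quantity as regErr, n * Var(y), which equals the sum of squared deviations from the mean. The step-shaped data are hypothetical.

```python
import numpy as np

def reg_err(y):
    # Total squared error around the mean: n * Var(y) == sum((y - mean)**2)
    return np.var(y) * len(y) if len(y) else 0.0

def best_split(X, y, tolS=1.0, tolN=4):
    """Single-feature sketch of chooseBestSplit.
    Returns the split value, or None when pre-pruning stops the split."""
    S = reg_err(y)
    best_err, best_val = np.inf, None
    for v in np.unique(X):
        left, right = y[X > v], y[X <= v]
        if len(left) < tolN or len(right) < tolN:  # tolN: too few samples
            continue
        newS = reg_err(left) + reg_err(right)
        if newS < best_err:
            best_err, best_val = newS, v
    if best_val is None or S - best_err < tolS:     # tolS: gain too small
        return None
    return best_val

# Hypothetical step data: y jumps from 0 to 1 between x = 0.45 and x = 0.55
X = np.linspace(0.05, 0.95, 10)
y = (X > 0.5).astype(float)
print(best_split(X, y))             # about 0.45: error drops from 2.5 to 0
print(best_split(X, y, tolS=3.0))   # None: a gain of 2.5 is below tolS
print(best_split(X, y, tolN=6))     # None: no split leaves 6 samples per side
```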

```python
def createTree(dataSet, leafType=regLeaf, errType=regErr, ops=(1, 4)):
    '''
    Build a regression tree recursively.
    :param dataSet: data set
    :param leafType: function that creates a leaf node
    :param errType: error function
    :param ops: tuple holding the other tree-building parameters (tolS, tolN)
    :return: the regression tree (nested dict) or a leaf value
    '''
    # Choose the best split feature and split value
    feat, val = chooseBestSplit(dataSet, leafType, errType, ops)
    if feat is None:  # no split: val is the leaf value
        return val
    retTree = {}
    retTree['spInd'] = feat
    retTree['spVal'] = val
    # Split into the left (feature > val) and right (feature <= val) data sets
    lSet, rSet = binSplitDataSet(dataSet, feat, val)
    # Build the left and right subtrees
    retTree['left'] = createTree(lSet, leafType, errType, ops)
    retTree['right'] = createTree(rSet, leafType, errType, ops)
    return retTree

if __name__ == '__main__':
    # testMat = np.asmatrix(np.eye(4))
    # mat0, mat1 = binSplitDataSet(testMat, 1, 0.5)
    # print('Original set:\n', testMat)
    # print('mat0:\n', mat0)
    # print('mat1:\n', mat1)
    # fileName = 'ex00.txt'
    # plotDataSet(fileName)
    myDat = loadDataSet('ex00.txt')
    myMat = np.asmatrix(myDat)
    # feat, val = chooseBestSplit(myMat, regLeaf, regErr, (1, 4))
    # print(feat)
    # print(val)
    print(createTree(myMat))
```

This tree has only two leaf nodes.
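The tree returned by createTree is a nested dict: spInd and spVal describe the split, and left/right hold either another dict or a leaf value. Because createTree passes mat0 (feature > spVal) as the left data set, a sample goes left when its feature value is greater than spVal. The predict function below is a hypothetical helper, not part of the original code, that walks this structure; the two-leaf tree in the example is made up.

```python
def predict(tree, x):
    """Hypothetical helper: walk a createTree-style dict down to a leaf.

    x is a sequence of feature values indexed like the data columns.
    Samples with x[spInd] > spVal go to 'left' (mat0 in binSplitDataSet),
    all others go to 'right'.
    """
    while isinstance(tree, dict):
        if x[tree['spInd']] > tree['spVal']:
            tree = tree['left']
        else:
            tree = tree['right']
    return tree  # a leaf value: the mean target of that leaf

# Made-up two-leaf tree (one split, two leaves)
tree = {'spInd': 0, 'spVal': 0.5, 'left': 1.0, 'right': 0.0}
print(predict(tree, [0.7]))  # 1.0
print(predict(tree, [0.5]))  # 0.0, ties go right because that side uses <=
```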

3) Next, switch to a somewhat more complex data set: a piecewise-constant data set. Download: https://cuijiahua.com/wp-content/themes/begin/inc/go.php?url=https://github.com/Jack-Cherish/Machine-Learning/blob/master/Regression%20Trees/ex0.txt

Modify the code:

```python
def plotDataSet(fileName):
    '''
    Scatter-plot x (column 1) against y (column 2).
    '''
    dataMat = loadDataSet(fileName)
    n = len(dataMat)
    xcord = []
    ycord = []
    for i in range(n):
        xcord.append(dataMat[i][1])  # x values are in column 1
        ycord.append(dataMat[i][2])  # y values are in column 2
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(xcord, ycord, s=20, c='blue', alpha=.5)
    plt.title('DataSet')
    plt.xlabel('X')
    plt.show()

if __name__ == '__main__':
    # testMat = np.asmatrix(np.eye(4))
    # mat0, mat1 = binSplitDataSet(testMat, 1, 0.5)
    # print('Original set:\n', testMat)
    # print('mat0:\n', mat0)
    # print('mat1:\n', mat1)
    fileName = 'ex01.txt'
    plotDataSet(fileName)
    myDat = loadDataSet('ex01.txt')
    myMat = np.asmatrix(myDat)
    # feat, val = chooseBestSplit(myMat, regLeaf, regErr, (1, 4))
    # print(feat)
    # print(val)
    print(createTree(myMat))
```

{'spInd': 1, 'spVal': 0.39435, 'left': {'spInd': 1, 'spVal': 0.582002, 'left': {'spInd': 1, 'spVal': 0.797583, 'left': 3.9871632, 'right': 2.9836209534883724}, 'right': 1.980035071428571}, 'right': {'spInd': 1, 'spVal': 0.197834, 'left': 1.0289583666666666, 'right': -0.023838155555555553}}

This tree has 5 leaf nodes.
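Leaf counts like this one can be checked without counting by hand. The two recursive helpers below are hypothetical (not in the original code): any value that is not a dict is treated as a leaf. They are applied to the tree printed above for ex01.txt.

```python
def count_leaves(tree):
    """Number of leaves in a createTree-style nested dict."""
    if not isinstance(tree, dict):
        return 1
    return count_leaves(tree['left']) + count_leaves(tree['right'])

def tree_depth(tree):
    """Number of splits on the longest root-to-leaf path."""
    if not isinstance(tree, dict):
        return 0
    return 1 + max(tree_depth(tree['left']), tree_depth(tree['right']))

# The tree printed above for ex01.txt
tree = {'spInd': 1, 'spVal': 0.39435,
        'left': {'spInd': 1, 'spVal': 0.582002,
                 'left': {'spInd': 1, 'spVal': 0.797583,
                          'left': 3.9871632, 'right': 2.9836209534883724},
                 'right': 1.980035071428571},
        'right': {'spInd': 1, 'spVal': 0.197834,
                  'left': 1.0289583666666666, 'right': -0.023838155555555553}}
print(count_leaves(tree))  # 5
print(tree_depth(tree))    # 3
```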

4) Pre-pruning has its limitations. To see this, use a new data set.

Plot the data set with the code above to take a look:

(Adapt the plotDataSet function to the new file yourself.)

{'spInd': 0, 'spVal': 0.499171, 'left': {'spInd': 0, 'spVal': 0.729397, 'left': {'spInd': 0, 'spVal': 0.952833, 'left': {'spInd': 0, 'spVal': 0.958512, 'left': 105.24862350000001, 'right': 112.42895575000001}, 'right': {'spInd': 0, 'spVal': 0.759504, 'left': {'spInd': 0, 'spVal': 0.790312, 'left': {'spInd': 0, 'spVal': 0.833026, 'left': {'spInd': 0, 'spVal': 0.944221, 'left': 87.3103875, 'right': {'spInd': 0, 'spVal': 0.85497, 'left': {'spInd': 0, 'spVal': 0.910975, 'left': 96.452867, 'right': {'spInd': 0, 'spVal': 0.892999, 'left': 104.825409, 'right': {'spInd': 0, 'spVal': 0.872883, 'left': 95.181793, 'right': 102.25234449999999}}}, 'right': 95.27584316666666}}, 'right': {'spInd': 0, 'spVal': 0.811602, 'left': 81.110152, 'right': 88.78449880000001}}, 'right': 102.35780185714285}, 'right': 78.08564325}}, 'right': {'spInd': 0, 'spVal': 0.640515, 'left': {'spInd': 0, 'spVal': 0.666452, 'left': {'spInd': 0, 'spVal': 0.706961, 'left': 114.554706, 'right': {'spInd': 0, 'spVal': 0.698472, 'left': 104.82495374999999, 'right': 108.92921799999999}}, 'right': 114.1516242857143}, 'right': {'spInd': 0, 'spVal': 0.613004, 'left': 93.67344971428572, 'right': {'spInd': 0, 'spVal': 0.582311, 'left': 123.2101316, 'right': {'spInd': 0, 'spVal': 0.553797, 'left': 97.20018024999999, 'right': {'spInd': 0, 'spVal': 0.51915, 'left': {'spInd': 0, 'spVal': 0.543843, 'left': 109.38961049999999, 'right': 110.979946}, 'right': 101.73699325000001}}}}}}, 'right': {'spInd': 0, 'spVal': 0.457563, 'left': {'spInd': 0, 'spVal': 0.467383, 'left': 12.50675925, 'right': 3.4331330000000007}, 'right': {'spInd': 0, 'spVal': 0.126833, 'left': {'spInd': 0, 'spVal': 0.373501, 'left': {'spInd': 0, 'spVal': 0.437652, 'left': -12.558604833333334, 'right': {'spInd': 0, 'spVal': 0.412516, 'left': 14.38417875, 'right': {'spInd': 0, 'spVal': 0.385021, 'left': -0.8923554999999995, 'right': 3.6584772500000016}}}, 'right': {'spInd': 0, 'spVal': 0.335182, 'left': {'spInd': 0, 'spVal': 0.350725, 'left': -15.08511175, 
'right': -22.693879600000002}, 'right': {'spInd': 0, 'spVal': 0.324274, 'left': 15.05929075, 'right': {'spInd': 0, 'spVal': 0.297107, 'left': -19.9941552, 'right': {'spInd': 0, 'spVal': 0.166765, 'left': {'spInd': 0, 'spVal': 0.202161, 'left': {'spInd': 0, 'spVal': 0.217214, 'left': {'spInd': 0, 'spVal': 0.228473, 'left': {'spInd': 0, 'spVal': 0.25807, 'left': 0.40377471428571476, 'right': -13.070501}, 'right': 6.770429}, 'right': -11.822278500000001}, 'right': 3.4496025}, 'right': {'spInd': 0, 'spVal': 0.156067, 'left': -12.1079725, 'right': -6.247900000000001}}}}}}, 'right': {'spInd': 0, 'spVal': 0.084661, 'left': 6.509843285714284, 'right': {'spInd': 0, 'spVal': 0.044737, 'left': -2.544392714285715, 'right': 4.091626}}}}}

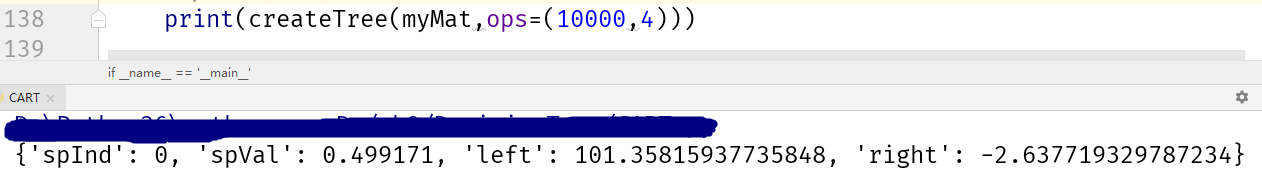

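The resulting tree has many leaf nodes (42). This happens because the stopping condition tolS is very sensitive to the order of magnitude of the error.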

After changing tolS to 10000, i.e. calling createTree(myMat, ops=(10000, 4)), the resulting tree has only two leaf nodes.
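Why is tolS so sensitive to scale? The quantity compared with tolS is a reduction in summed squared error, so it grows with the square of the target's scale. The leaf values of the ex01.txt tree above lie roughly between 0 and 4, while those of this tree span roughly -23 to 123. The sketch below uses hypothetical numbers to show that multiplying the targets by 100 multiplies the error reduction of the same split by 100**2 = 10000.

```python
import numpy as np

def reg_err(y):
    # Total squared error around the mean (the same quantity as regErr)
    return np.var(y) * len(y)

def split_gain(y_left, y_right):
    """Error reduction S - newS that chooseBestSplit compares with tolS."""
    y = np.concatenate([y_left, y_right])
    return reg_err(y) - (reg_err(y_left) + reg_err(y_right))

# Hypothetical split of a small target vector
y_left = np.array([1.0, 1.2, 0.9])
y_right = np.array([0.1, -0.1, 0.0])
g1 = split_gain(y_left, y_right)
g100 = split_gain(100 * y_left, 100 * y_right)
print(g100 / g1)  # ~10000: same split, targets scaled by 100
```

A fixed tolS therefore means very different things on differently scaled data, which is why it has to be tuned per data set.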

Post-pruning

Split the data into a training set and a test set: use ex02.txt as the training set and the new data set ex02test.txt as the test set.

Modify the code:

```python
import numpy as np
import matplotlib.pyplot as plt

def binSplitDataSet(dataSet, feature, value):
    '''
    Split a data set into two subsets on one feature.
    :param dataSet: data set (one sample per row)
    :param feature: index of the feature to split on
    :param value: split value for that feature
    :return:
        mat0: rows whose feature value is greater than value
        mat1: rows whose feature value is less than or equal to value
    '''
    mat0 = dataSet[np.nonzero(dataSet[:, feature] > value)[0], :]
    mat1 = dataSet[np.nonzero(dataSet[:, feature] <= value)[0], :]
    return mat0, mat1

def loadDataSet(fileName):
    '''
    Load a tab-separated text file into a list of rows of floats.
    '''
    dataMat = []
    with open(fileName) as fr:  # the file is closed automatically
        for line in fr:  # read line by line
            if not line.strip():  # skip blank lines
                continue
            curLine = line.strip().split('\t')  # strip whitespace, split on tabs
            fltLine = list(map(float, curLine))  # convert to float
            dataMat.append(fltLine)  # add the row
    return dataMat

def plotDataSet(fileName):
    '''
    Scatter-plot the first column (x) against the second column (y).
    '''
    dataMat = loadDataSet(fileName)
    n = len(dataMat)
    xcord = []
    ycord = []
    for i in range(n):
        xcord.append(dataMat[i][0])
        ycord.append(dataMat[i][1])
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(xcord, ycord, s=20, c='blue', alpha=.5)
    plt.title('DataSet')
    plt.xlabel('X')
    plt.show()

def regLeaf(dataSet):
    '''
    Create a leaf node.
    :param dataSet: data set
    :return: mean of the target variable (last column)
    '''
    return np.mean(dataSet[:, -1])

def regErr(dataSet):
    '''
    Error estimate.
    :param dataSet: data set
    :return: total squared error of the target (variance times number of samples)
    '''
    return np.var(dataSet[:, -1]) * np.shape(dataSet)[0]

def chooseBestSplit(dataSet, leafType=regLeaf, errType=regErr, ops=(1, 4)):
    '''
    Find the best binary split of the data.
    :param dataSet: data set
    :param leafType: function that creates a leaf node
    :param errType: error estimate function
    :param ops: tuple of user-defined parameters (tolS, tolN)
    :return:
        bestIndex - best feature to split on (None if a leaf is created)
        bestValue - best split value (or the leaf value)
    '''
    tolS = ops[0]  # minimum error reduction required to accept a split
    tolN = ops[1]  # minimum number of samples allowed on each side of a split
    # If all target values are identical, stop and create a leaf
    if len(set(dataSet[:, -1].T.tolist()[0])) == 1:
        return None, leafType(dataSet)
    m, n = np.shape(dataSet)
    S = errType(dataSet)  # error before splitting
    bestS = float('inf')
    bestIndex = 0
    bestValue = 0
    for featIndex in range(n - 1):  # the last column is the target
        for splitVal in set(dataSet[:, featIndex].T.A.tolist()[0]):
            mat0, mat1 = binSplitDataSet(dataSet, featIndex, splitVal)
            # Skip splits that leave fewer than tolN samples on either side
            if (np.shape(mat0)[0] < tolN) or (np.shape(mat1)[0] < tolN):
                continue
            newS = errType(mat0) + errType(mat1)
            if newS < bestS:
                bestIndex = featIndex
                bestValue = splitVal
                bestS = newS
    # If the error reduction is smaller than tolS, do not split
    if (S - bestS) < tolS:
        return None, leafType(dataSet)
    mat0, mat1 = binSplitDataSet(dataSet, bestIndex, bestValue)
    # If either subset is too small, do not split
    if (np.shape(mat0)[0] < tolN) or (np.shape(mat1)[0] < tolN):
        return None, leafType(dataSet)
    return bestIndex, bestValue

def createTree(dataSet, leafType=regLeaf, errType=regErr, ops=(1, 4)):
    '''
    Build a regression tree recursively.
    :param dataSet: data set
    :param leafType: function that creates a leaf node
    :param errType: error function
    :param ops: tuple holding the other tree-building parameters (tolS, tolN)
    :return: the regression tree (nested dict) or a leaf value
    '''
    # Choose the best split feature and split value
    feat, val = chooseBestSplit(dataSet, leafType, errType, ops)
    if feat is None:  # no split: val is the leaf value
        return val
    retTree = {}
    retTree['spInd'] = feat
    retTree['spVal'] = val
    # Split into the left (feature > val) and right (feature <= val) data sets
    lSet, rSet = binSplitDataSet(dataSet, feat, val)
    # Build the left and right subtrees
    retTree['left'] = createTree(lSet, leafType, errType, ops)
    retTree['right'] = createTree(rSet, leafType, errType, ops)
    return retTree

# ---------------------------- new code starts here ----------------------------

def isTree(obj):
    '''
    Check whether the input is a (sub)tree rather than a leaf value.
    :param obj: object to test
    :return: True if obj is a tree (dict)
    '''
    return isinstance(obj, dict)

def getMean(tree):
    '''
    Collapse a tree: recursively replace each subtree by the average of its two children.
    :param tree: tree
    :return: the collapsed value
    '''
    if isTree(tree['right']):
        tree['right'] = getMean(tree['right'])
    if isTree(tree['left']):
        tree['left'] = getMean(tree['left'])
    return (tree['left'] + tree['right']) / 2.0

def prune(tree, testData):
    '''
    Post-prune a tree bottom-up using a test set.
    :param tree: tree to prune (modified in place)
    :param testData: test set
    :return: the pruned tree, or a leaf value if the whole subtree was merged
    '''
    # No test data reaches this node: collapse the subtree into its mean
    if np.shape(testData)[0] == 0:
        return getMean(tree)
    # If either child is a subtree, split the test data the same way as the tree
    if isTree(tree['right']) or isTree(tree['left']):
        lSet, rSet = binSplitDataSet(testData, tree['spInd'], tree['spVal'])
    if isTree(tree['left']):
        tree['left'] = prune(tree['left'], lSet)
    if isTree(tree['right']):
        tree['right'] = prune(tree['right'], rSet)
    # If both children are now leaves, consider merging them
    if not isTree(tree['left']) and not isTree(tree['right']):
        lSet, rSet = binSplitDataSet(testData, tree['spInd'], tree['spVal'])
        # Squared error on the test data without merging
        errorNoMerge = np.sum(np.power(lSet[:, -1] - tree['left'], 2)) + \
            np.sum(np.power(rSet[:, -1] - tree['right'], 2))
        # Squared error after merging the two leaves into their average
        treeMean = (tree['left'] + tree['right']) / 2.0
        errorMerge = np.sum(np.power(testData[:, -1] - treeMean, 2))
        if errorMerge < errorNoMerge:
            return treeMean
        else:
            return tree
    else:
        return tree

if __name__ == '__main__':
    # testMat = np.asmatrix(np.eye(4))
    # mat0, mat1 = binSplitDataSet(testMat, 1, 0.5)
    # print('Original set:\n', testMat)
    # print('mat0:\n', mat0)
    # print('mat1:\n', mat1)
    # fileName = 'ex02.txt'
    # plotDataSet(fileName)
    # myDat = loadDataSet('ex02.txt')
    # myMat = np.asmatrix(myDat)
    # feat, val = chooseBestSplit(myMat, regLeaf, regErr, (1, 4))
    # print(feat)
    # print(val)
    # print(createTree(myMat, ops=(10000, 4)))
    train_filename = 'ex02.txt'
    train_Data = loadDataSet(train_filename)
    train_Mat = np.asmatrix(train_Data)
    tree = createTree(train_Mat)
    print(tree)  # printed before pruning, because prune() modifies tree in place
    test_filename = 'ex02test.txt'
    test_Data = loadDataSet(test_filename)
    test_Mat = np.asmatrix(test_Data)
    print(prune(tree, test_Mat))
```

{'spInd': 0, 'spVal': 0.499171, 'left': {'spInd': 0, 'spVal': 0.729397, 'left': {'spInd': 0, 'spVal': 0.952833, 'left': {'spInd': 0, 'spVal': 0.958512, 'left': 105.24862350000001, 'right': 112.42895575000001}, 'right': {'spInd': 0, 'spVal': 0.759504, 'left': {'spInd': 0, 'spVal': 0.790312, 'left': {'spInd': 0, 'spVal': 0.833026, 'left': {'spInd': 0, 'spVal': 0.944221, 'left': 87.3103875, 'right': {'spInd': 0, 'spVal': 0.85497, 'left': {'spInd': 0, 'spVal': 0.910975, 'left': 96.452867, 'right': {'spInd': 0, 'spVal': 0.892999, 'left': 104.825409, 'right': {'spInd': 0, 'spVal': 0.872883, 'left': 95.181793, 'right': 102.25234449999999}}}, 'right': 95.27584316666666}}, 'right': {'spInd': 0, 'spVal': 0.811602, 'left': 81.110152, 'right': 88.78449880000001}}, 'right': 102.35780185714285}, 'right': 78.08564325}}, 'right': {'spInd': 0, 'spVal': 0.640515, 'left': {'spInd': 0, 'spVal': 0.666452, 'left': {'spInd': 0, 'spVal': 0.706961, 'left': 114.554706, 'right': {'spInd': 0, 'spVal': 0.698472, 'left': 104.82495374999999, 'right': 108.92921799999999}}, 'right': 114.1516242857143}, 'right': {'spInd': 0, 'spVal': 0.613004, 'left': 93.67344971428572, 'right': {'spInd': 0, 'spVal': 0.582311, 'left': 123.2101316, 'right': {'spInd': 0, 'spVal': 0.553797, 'left': 97.20018024999999, 'right': {'spInd': 0, 'spVal': 0.51915, 'left': {'spInd': 0, 'spVal': 0.543843, 'left': 109.38961049999999, 'right': 110.979946}, 'right': 101.73699325000001}}}}}}, 'right': {'spInd': 0, 'spVal': 0.457563, 'left': {'spInd': 0, 'spVal': 0.467383, 'left': 12.50675925, 'right': 3.4331330000000007}, 'right': {'spInd': 0, 'spVal': 0.126833, 'left': {'spInd': 0, 'spVal': 0.373501, 'left': {'spInd': 0, 'spVal': 0.437652, 'left': -12.558604833333334, 'right': {'spInd': 0, 'spVal': 0.412516, 'left': 14.38417875, 'right': {'spInd': 0, 'spVal': 0.385021, 'left': -0.8923554999999995, 'right': 3.6584772500000016}}}, 'right': {'spInd': 0, 'spVal': 0.335182, 'left': {'spInd': 0, 'spVal': 0.350725, 'left': -15.08511175, 
'right': -22.693879600000002}, 'right': {'spInd': 0, 'spVal': 0.324274, 'left': 15.05929075, 'right': {'spInd': 0, 'spVal': 0.297107, 'left': -19.9941552, 'right': {'spInd': 0, 'spVal': 0.166765, 'left': {'spInd': 0, 'spVal': 0.202161, 'left': {'spInd': 0, 'spVal': 0.217214, 'left': {'spInd': 0, 'spVal': 0.228473, 'left': {'spInd': 0, 'spVal': 0.25807, 'left': 0.40377471428571476, 'right': -13.070501}, 'right': 6.770429}, 'right': -11.822278500000001}, 'right': 3.4496025}, 'right': {'spInd': 0, 'spVal': 0.156067, 'left': -12.1079725, 'right': -6.247900000000001}}}}}}, 'right': {'spInd': 0, 'spVal': 0.084661, 'left': 6.509843285714284, 'right': {'spInd': 0, 'spVal': 0.044737, 'left': -2.544392714285715, 'right': 4.091626}}}}}

{'spInd': 0, 'spVal': 0.499171, 'left': {'spInd': 0, 'spVal': 0.729397, 'left': {'spInd': 0, 'spVal': 0.952833, 'left': {'spInd': 0, 'spVal': 0.958512, 'left': 105.24862350000001, 'right': 112.42895575000001}, 'right': {'spInd': 0, 'spVal': 0.759504, 'left': {'spInd': 0, 'spVal': 0.790312, 'left': {'spInd': 0, 'spVal': 0.833026, 'left': {'spInd': 0, 'spVal': 0.944221, 'left': 87.3103875, 'right': {'spInd': 0, 'spVal': 0.85497, 'left': {'spInd': 0, 'spVal': 0.910975, 'left': 96.452867, 'right': {'spInd': 0, 'spVal': 0.892999, 'left': 104.825409, 'right': {'spInd': 0, 'spVal': 0.872883, 'left': 95.181793, 'right': 102.25234449999999}}}, 'right': 95.27584316666666}}, 'right': {'spInd': 0, 'spVal': 0.811602, 'left': 81.110152, 'right': 88.78449880000001}}, 'right': 102.35780185714285}, 'right': 78.08564325}}, 'right': {'spInd': 0, 'spVal': 0.640515, 'left': {'spInd': 0, 'spVal': 0.666452, 'left': {'spInd': 0, 'spVal': 0.706961, 'left': 114.554706, 'right': 106.87708587499999}, 'right': 114.1516242857143}, 'right': {'spInd': 0, 'spVal': 0.613004, 'left': 93.67344971428572, 'right': {'spInd': 0, 'spVal': 0.582311, 'left': 123.2101316, 'right': 101.580533}}}}, 'right': {'spInd': 0, 'spVal': 0.457563, 'left': 7.969946125, 'right': {'spInd': 0, 'spVal': 0.126833, 'left': {'spInd': 0, 'spVal': 0.373501, 'left': {'spInd': 0, 'spVal': 0.437652, 'left': -12.558604833333334, 'right': {'spInd': 0, 'spVal': 0.412516, 'left': 14.38417875, 'right': 1.383060875000001}}, 'right': {'spInd': 0, 'spVal': 0.335182, 'left': {'spInd': 0, 'spVal': 0.350725, 'left': -15.08511175, 'right': -22.693879600000002}, 'right': {'spInd': 0, 'spVal': 0.324274, 'left': 15.05929075, 'right': {'spInd': 0, 'spVal': 0.297107, 'left': -19.9941552, 'right': {'spInd': 0, 'spVal': 0.166765, 'left': {'spInd': 0, 'spVal': 0.202161, 'left': -5.801872785714286, 'right': 3.4496025}, 'right': {'spInd': 0, 'spVal': 0.156067, 'left': -12.1079725, 'right': -6.247900000000001}}}}}}, 'right': {'spInd': 0, 'spVal': 
0.084661, 'left': 6.509843285714284, 'right': {'spInd': 0, 'spVal': 0.044737, 'left': -2.544392714285715, 'right': 4.091626}}}}}

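Part of the tree has been pruned away (the number of leaves falls from 42 to 33), but the tree was not reduced to two leaves as expected. This suggests that, on this data, post-pruning may be less effective than pre-pruning. In general, both pruning techniques can be used together to find the best model.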
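The merge test at the bottom of prune may help explain this result. Two sibling leaves are merged only when replacing them by their average lowers the squared error on the test samples that reach them, and a node is only considered once both of its children are leaves. A single pair that is kept therefore blocks every merge above it. The sketch below isolates that comparison in a hypothetical helper, merge_decision, using made-up numbers. It mirrors the errorNoMerge/errorMerge logic of prune but is not the original code.

```python
import numpy as np

def merge_decision(left_leaf, right_leaf, y_left, y_right):
    """Hypothetical helper mirroring the merge test in prune().

    left_leaf/right_leaf: values of two sibling leaves.
    y_left/y_right: test-set targets that fall into each leaf.
    Returns the merged value (average of the two leaves) if merging lowers
    the squared error on the test targets, otherwise None (keep the split).
    """
    error_no_merge = (np.sum((y_left - left_leaf) ** 2)
                      + np.sum((y_right - right_leaf) ** 2))
    tree_mean = (left_leaf + right_leaf) / 2.0
    y_all = np.concatenate([y_left, y_right])
    error_merge = np.sum((y_all - tree_mean) ** 2)
    return tree_mean if error_merge < error_no_merge else None

# Leaves that overfit the training data: test targets cluster around 108
print(merge_decision(105.0, 112.0, np.array([108.0, 109.0]), np.array([107.0, 108.0])))  # 108.5
# Leaves that capture a real jump: merging would add far more error
print(merge_decision(0.0, 100.0, np.array([1.0]), np.array([99.0])))  # None
```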