2018/11/9

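My Hadoop setup was working at first. Then I configured HBase and started it, and HMaster shut down right after starting, which was painful. Since my HBase data directory lives on HDFS, I wondered whether some old data had not been cleaned up.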

So I ran hdfs dfs -ls /

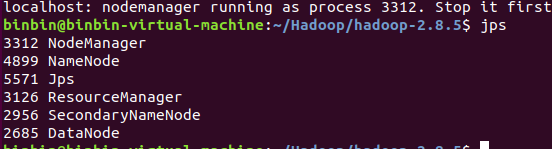

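I found a /tmp directory and assumed HBase had created it, so I rashly deleted it. Then things got more exciting: jps listed nothing but jps itself. My NameNode, DataNode, and SecondaryNameNode were all gone!!! I then tried all kinds of config changes and reformatting.

Hehehe

Pure despair, right?!!!

Then I looked through the logs, and it was nothing I could fix myself... (I forgot to take a screenshot... I will remember next time!!)

So I went straight to Baidu. An expert's blog said the files under /tmp record the IDs of those daemons (the post says port numbers, though the ps commands below return process IDs), and that I should look them up for the NameNode and the others and write them into the designated files under /tmp!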

ps -ef | grep namenode | grep -v grep

ps -ef | grep secondarynamenode | grep -v grep

ps -ef | grep resourcemanager | grep -v grep

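Damn, I had deleted everything, so how would I know the layout of those files? Still, I got the gist: my processes were still running, but jps no longer knew their IDs, so it could not see them and I could not operate on them. But /tmp is a temporary directory after all, so I gave up and fell back on the oldest trick there is: reboot. After that, most of the daemons were back.

Only my dear NameNode was missing. I then went to check whether /tmp still existed in HDFS and got connection refused. Meow meow meow?

So I ran format again, and after that everything was fine.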
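A side note on why jps went blank. As far as I know, jps finds running JVMs through per-user files, one per PID, in a directory named hsperfdata_<user> under the local temp directory, and Hadoop's start scripts also keep their .pid files in /tmp by default. Assuming the local /tmp was wiped too (not only the one in HDFS), the daemons would keep running while jps loses sight of them. A minimal sketch of that lookup; the function name `list_jvm_pids` is my own, not part of any tool:

```shell
# List the PIDs that jps would be able to see for a given user.
# Assumption: HotSpot keeps one file per JVM, named after its PID,
# in <tmpdir>/hsperfdata_<user>.
list_jvm_pids() {
    user="$1"
    tmpdir="${2:-/tmp}"
    dir="$tmpdir/hsperfdata_$user"
    if [ ! -d "$dir" ]; then
        echo "no $dir: jps will list nothing for $user" >&2
        return 1
    fi
    # ls prints one file name (one PID) per line when its output is captured
    ls "$dir"
}

# Example: list_jvm_pids "$(id -un)"
```

If the directory is missing while ps -ef still shows the Java processes, restarting the daemons recreates the files, which matches what the reboot did here.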

The HBase error log was:

2018-11-09 18:29:46,391 ERROR [main] master.HMasterCommandLine: Master exiting

java.lang.RuntimeException: Failed construction of Master: class org.apache.hadoop.hbase.master.HMaster.

at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:3115)

at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:236)

at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:140)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:149)

at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:3126)

Caused by: java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:644)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:628)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2667)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:93)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2701)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2683)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:372)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:295)

at org.apache.hadoop.hbase.util.CommonFSUtils.getRootDir(CommonFSUtils.java:362)

at org.apache.hadoop.hbase.util.CommonFSUtils.isValidWALRootDir(CommonFSUtils.java:411)

at org.apache.hadoop.hbase.util.CommonFSUtils.getWALRootDir(CommonFSUtils.java:387)

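at org.apache.hadoop.hbase.regionserver.HRegionServer.initializeFileSystem(HRegionServer

https://blog.csdn.net/woloqun/article/details/81350323

Run:

cp $HBASE_HOME/lib/client-facing-thirdparty/htrace-core-3.1.0-incubating.jar $HBASE_HOME/lib/

This fix comes from the expert's post linked above. The advice is to dig through the source and grab the jar from the Hadoop side, and then all is well. Look at the message: org/apache/htrace/SamplerBuilder

The log also has HRegionServer entries. Compare the two and you will see both involve htrace. The two projects are in a dependency relationship (HBase depends on Hadoop), and it is HBase that cannot find the class... so just give it the class.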
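To avoid copying the jar twice, the cp step can be wrapped in a small check. This is only a sketch under the same assumption as the cp command above, namely that the bundled copy sits in lib/client-facing-thirdparty; the function name `ensure_htrace_jar` is made up for illustration:

```shell
# Copy the htrace jar into <hbase_home>/lib only if no htrace-core jar is there yet.
ensure_htrace_jar() {
    hbase_home="$1"
    # An unmatched glob stays literal, so -e filters it out
    for jar in "$hbase_home"/lib/htrace-core-*.jar; do
        if [ -e "$jar" ]; then
            echo "already present: $jar"
            return 0
        fi
    done
    for jar in "$hbase_home"/lib/client-facing-thirdparty/htrace-core-*.jar; do
        if [ -e "$jar" ]; then
            cp "$jar" "$hbase_home/lib/" && echo "copied: $jar"
            return 0
        fi
    done
    echo "no htrace-core jar found under $hbase_home/lib" >&2
    return 1
}

# Example: ensure_htrace_jar "$HBASE_HOME"
```

After the copy, HMaster and the RegionServer need a restart so the new jar lands on their classpath.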

ERROR [main] regionserver.HRegionServer: Failed construction RegionServer

java.lang.NoClassDefFoundError: org/apache/htrace/SamplerBuilder

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:644)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:628)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2667)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:93)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2701)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2683)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:372)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:295)

at org.apache.hadoop.hbase.util.CommonFSUtils.getRootDir(CommonFSUtils.java:362)

at org.apache.hadoop.hbase.util.CommonFSUtils.isValidWALRootDir(CommonFSUtils.java:411)

at org.apache.hadoop.hbase.util.CommonFSUtils.getWALRootDir(CommonFSUtils.java:387)

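at

Lesson learned:

Always enable the HDFS trash (.Trash) feature!!! That way files deleted by mistake can still be recovered...

Add the following to core-site.xml: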

<!--Enable Trash -->

<property>

<name>fs.trash.interval</name>

<value>120</value>

</property>

<property>

<name>fs.trash.checkpoint.interval</name>

<value>120</value>

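</property>

The HBase Master web UI now defaults to port 16010 (take the IP from the regionservers file, append ":16010", and open it in a browser).

The Hadoop (NameNode) web UI port is 50070.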
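Back to the trash setting for a moment. fs.trash.interval is given in minutes, so with the value 120 a deleted path should stay recoverable for about two hours. Here is a small sketch of where to look for it, assuming the default trash layout under the deleting user's HDFS home directory; `trash_path` is a helper name I made up:

```shell
# Print where HDFS keeps a trashed path for a given user.
# Assumption: the default layout /user/<user>/.Trash/Current/<original path>.
trash_path() {
    user="$1"
    path="$2"
    echo "/user/$user/.Trash/Current$path"
}

# Example (needs a running HDFS; deleting with -skipTrash bypasses the trash):
#   hdfs dfs -ls "$(trash_path "$(id -un)" /tmp)"
#   hdfs dfs -mv "$(trash_path "$(id -un)" /tmp)" /tmp
```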

emmmmmmmmmmmmmm

2. I played games for a while, came back, and found HMaster gone. Sigh.

As usual, step one is to check the logs:

2018-11-09 20:01:28,899 ERROR [main-EventThread] master.HMaster: Master server abort: loaded coprocessors are: []

Emmmmm, keep scrolling back:

2018-11-09 20:01:25,720 WARN [master/binbin-virtual-machine:16000] util.Sleeper: We slept 1019849ms instead of 3000ms, this is likely due to a long garbage collecting pause and it's usually bad, see http://hbase.apache.org/book.html#trouble.rs.runtime.zkexpired

2018-11-09 20:01:28,956 INFO [master/binbin-virtual-machine:16000.splitLogManager..Chore.1] hbase.ScheduledChore: Chore: SplitLogManager Timeout Monitor was stopped

So the session simply timed out.
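The Sleeper warning carries the number that matters: the process was paused for 1019849 ms, roughly 17 minutes, instead of 3 seconds. A rough sketch for pulling that number out of a log line and comparing it with a session timeout you pass in; both function names are my own:

```shell
# Extract the actual pause, in ms, from an HBase Sleeper warning line.
slept_ms() {
    echo "$1" | sed -n 's/.*We slept \([0-9][0-9]*\)ms instead of.*/\1/p'
}

# Succeed (exit 0) if the pause in the log line is longer than the timeout in ms.
pause_exceeds_timeout() {
    ms=$(slept_ms "$1")
    [ -n "$ms" ] && [ "$ms" -gt "$2" ]
}

line='We slept 1019849ms instead of 3000ms, this is likely due to a long garbage collecting pause'
slept_ms "$line"    # prints 1019849
```

Any session timeout shorter than the pause expires while the process is frozen, so for a pause of this size a larger timeout alone may not be enough.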

Adding the following to hbase-site.xml takes care of it:

<property>

<name>zookeeper.session.timeout</name>

<value>240000</value>

<!-- Default: 180000. ZooKeeper session timeout, in milliseconds -->

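</property>

Note: this value is also bounded by ZooKeeper's own session timeout limits. If those are too short, they need to be adjusted as well, in ZooKeeper's configuration file:

minSessionTimeout, maxSessionTimeout.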
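To see why those two settings matter: the ZooKeeper server clamps whatever timeout the client asks for into the range between minSessionTimeout and maxSessionTimeout, and when they are not set they default to 2 x tickTime and 20 x tickTime. A minimal sketch of that clamping, assuming a tickTime of 2000 ms as in the sample zoo.cfg; `effective_zk_timeout` is a name I made up:

```shell
# Session timeout the ZooKeeper server would actually grant.
# Assumption: minSessionTimeout and maxSessionTimeout fall back to their
# defaults of 2 x tickTime and 20 x tickTime unless passed explicitly.
effective_zk_timeout() {
    requested="$1"
    tick="$2"
    min="${3:-$((tick * 2))}"
    max="${4:-$((tick * 20))}"
    if [ "$requested" -lt "$min" ]; then
        echo "$min"
    elif [ "$requested" -gt "$max" ]; then
        echo "$max"
    else
        echo "$requested"
    fi
}

effective_zk_timeout 240000 2000               # prints 40000
effective_zk_timeout 240000 2000 4000 300000   # prints 240000
```

So with default limits the 240000 requested in hbase-site.xml would be cut down to 40000, which is why maxSessionTimeout has to be raised on the ZooKeeper side too.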

See the ZooKeeper installation chapter for details. from: https://blog.csdn.net/qq_41665356/article/details/80271643