Reference article: https://blog.csdn.net/russle/article/details/83421904

1. Problem description

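In real projects we may create a topic without setting the right replication-factor (the default is 1 replica). As a result, even though the Kafka cluster itself is highly available, the topic can become unusable when the broker hosting it goes down. Once a topic is in use it cannot easily be deleted and recreated, so increasing the replication factor dynamically becomes the remaining option.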

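2. Solution

First, create a local JSON file named reassign.json as shown below. The comment lines are background taken from the broker configuration and do not belong in the file itself: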

/*

# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

# For anything other than development testing, a value greater than 1 is recommended for to ensure availability such as 3.

offsets.topic.replication.factor=3

*/

// In practice the configured value is 1, i.e. offsets.topic.replication.factor=1

// After the dynamic increase, the assignment looks like this:

{"version":1,

"partitions":[

{"topic":"__consumer_offsets","partition":0,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":1,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":2,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":3,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":4,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":5,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":6,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":7,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":8,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":9,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":10,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":11,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":12,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":13,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":14,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":15,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":16,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":17,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":18,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":19,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":20,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":21,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":22,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":23,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":24,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":25,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":26,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":27,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":28,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":29,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":30,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":31,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":32,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":33,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":34,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":35,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":36,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":37,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":38,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":39,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":40,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":41,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":42,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":43,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":44,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":45,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":46,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":47,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":48,"replicas":[1,2,3]},

{"topic":"__consumer_offsets","partition":49,"replicas":[1,2,3]}

]}
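
Typing fifty near-identical entries by hand is error-prone, so the same file can also be generated. The following is a minimal sketch, assuming 50 partitions (0 to 49) and broker IDs 1, 2 and 3 as in the listing above; the variable names are illustrative only.

```shell
#!/bin/sh
# Sketch: generate reassign.json for __consumer_offsets.
# Assumptions: 50 partitions (0-49) and broker IDs 1,2,3, as in the listing above.
topic="__consumer_offsets"
partitions=50
replicas="1,2,3"
out="reassign.json"

{
  printf '{"version":1,\n"partitions":[\n'
  i=0
  while [ "$i" -lt "$partitions" ]; do
    # Every entry except the last ends with a comma;
    # a comma after the last entry would make the file invalid JSON.
    if [ "$i" -lt $((partitions - 1)) ]; then sep=","; else sep=""; fi
    printf '{"topic":"%s","partition":%d,"replicas":[%s]}%s\n' \
      "$topic" "$i" "$replicas" "$sep"
    i=$((i + 1))
  done
  printf ']}\n'
} > "$out"
```

Changing `topic`, `partitions` and `replicas` adapts the sketch to another topic or another set of brokers.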

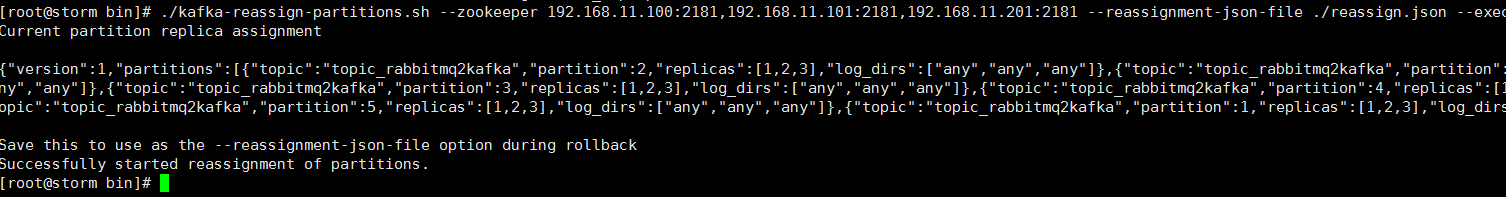

Save the JSON above as reassign.json, then run the following command in a shell from Kafka's bin directory:

kafka-reassign-partitions.sh --zookeeper localhost:2181 --reassignment-json-file reassign.json --execute

This increases the topic's replica count, so that consumption does not stop when the broker holding the topic's offsets goes down.
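
The `--execute` step only starts the reassignment. The same tool also accepts `--verify`, which reports per partition whether it has completed. The following is a sketch under the same assumptions as the command above (ZooKeeper on localhost:2181, the Kafka scripts reachable on PATH); on recent Kafka releases the tool takes `--bootstrap-server` with a broker address instead of `--zookeeper`, so adjust to your version.

```shell
#!/bin/sh
# Sketch: confirm that the reassignment described in reassign.json has finished.
# Assumptions: the Kafka scripts are on PATH and ZooKeeper listens on
# localhost:2181, as in the command above.
tool="kafka-reassign-partitions.sh"

if command -v "$tool" >/dev/null 2>&1; then
  # --verify reports, per partition, whether the reassignment completed.
  "$tool" --zookeeper localhost:2181 \
    --reassignment-json-file reassign.json --verify
  verify_status=$?
else
  echo "$tool not found; add Kafka's bin directory to PATH" >&2
  verify_status=127
fi
```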

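To specify the replica placement (that is, which brokers hold the replicas) for one of your own topics, change "topic":"__consumer_offsets" to the corresponding "topic":"topic_xxx" and list that topic's own partition numbers.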

The error I ran into was:

Partition reassignment data file is empty

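This cost me a lot of time. The cause turned out to be a trailing comma "," on the last entry of the JSON file, and it was someone else who spotted it. I am noting it here for the record.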
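
A trailing comma like this can be caught before running the tool. The following is a minimal sketch of a narrow text check, not a full JSON validation; the function name `has_trailing_comma` is hypothetical, and the check assumes no string value in the file itself contains ",]" or ",}".

```shell
#!/bin/sh
# Sketch: detect a comma directly before a closing ] or } in a reassignment file.
# This is a narrow text check, not a full JSON validation; it assumes no string
# value in the file contains ",]" or ",}" itself.
has_trailing_comma() {
  # Strip all whitespace so the comma and the closing bracket become adjacent,
  # then search for the two fixed strings.
  tr -d ' \t\r\n' < "$1" | grep -qF -e ',]' -e ',}'
}
```

Calling `has_trailing_comma reassign.json` returns success (exit status 0) when such a comma is found, so it can guard the `--execute` command.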

The original reassign.json file contained that trailing comma. After removing it, run the command again:

kafka-reassign-partitions.sh --zookeeper localhost:2181 --reassignment-json-file reassign.json --execute

The topic's replication factor was set successfully!