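This deployment uses OpenShift's advanced installation method, driven by Ansible playbooks. It needs four cloud hosts: three high-spec hosts form a single OpenShift cluster, and one standard-spec host serves as the cluster's NFS storage node.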

The hosts are planned as follows:

| IP | Hostname (FQDN, short name) | OS | Memory | Data disk |
| --- | --- | --- | --- | --- |
| 192.168.1.100 | master.xykz.com master | CentOS 7.3 | 64 GB | 1000 GB |
| 192.168.1.55 | node1.xykz.com node1 | CentOS 7.3 | 64 GB | 1000 GB |
| 192.168.1.208 | node2.xykz.com node2 | CentOS 7.3 | 64 GB | 1000 GB |
| 192.168.1.74 | nfs2.xykz.com nfs2 | CentOS 7.3 | 64 GB | 1000 GB |

1. Set the hostname on each host and add all four hosts to /etc/hosts

hostnamectl set-hostname master.xykz.com

echo "192.168.1.100 master.xykz.com master" >> /etc/hosts

echo "192.168.1.55 node1.xykz.com node1" >> /etc/hosts

echo "192.168.1.208 node2.xykz.com node2" >> /etc/hosts

echo "192.168.1.74 nfs2.xykz.com nfs2" >> /etc/hosts

# The master host is now configured

hostnamectl set-hostname node1.xykz.com

echo "192.168.1.100 master.xykz.com master" >> /etc/hosts

echo "192.168.1.55 node1.xykz.com node1" >> /etc/hosts

echo "192.168.1.208 node2.xykz.com node2" >> /etc/hosts

echo "192.168.1.74 nfs2.xykz.com nfs2" >> /etc/hosts

# The node1 host is now configured

hostnamectl set-hostname node2.xykz.com

echo "192.168.1.100 master.xykz.com master" >> /etc/hosts

echo "192.168.1.55 node1.xykz.com node1" >> /etc/hosts

echo "192.168.1.208 node2.xykz.com node2" >> /etc/hosts

echo "192.168.1.74 nfs2.xykz.com nfs2" >> /etc/hosts

# The node2 host is now configured

hostnamectl set-hostname nfs2.xykz.com

echo "192.168.1.100 master.xykz.com master" >> /etc/hosts

echo "192.168.1.55 node1.xykz.com node1" >> /etc/hosts

echo "192.168.1.208 node2.xykz.com node2" >> /etc/hosts

echo "192.168.1.74 nfs2.xykz.com nfs2" >> /etc/hosts

# The NFS server is now configured
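The same four `echo` lines are repeated on every host, and running them twice leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent variant follows. The `add_host_entry` function and the `HOSTS_FILE` variable are illustrative names, not part of the original procedure, and the sketch writes to a temporary file unless `HOSTS_FILE` is set.

```shell
#!/usr/bin/env bash
# Assumption: HOSTS_FILE is a hypothetical variable; set HOSTS_FILE=/etc/hosts on a real host.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

# Append "IP FQDN short-name" only if the FQDN is not in the file yet.
add_host_entry() {
    local ip="$1" fqdn="$2" short="$3"
    touch "$HOSTS_FILE"
    # -w matches whole words only, -F treats the name as a literal string
    if ! grep -qwF "$fqdn" "$HOSTS_FILE"; then
        echo "$ip $fqdn $short" >> "$HOSTS_FILE"
    fi
}

add_host_entry 192.168.1.100 master.xykz.com master
add_host_entry 192.168.1.55  node1.xykz.com  node1
add_host_entry 192.168.1.208 node2.xykz.com  node2
add_host_entry 192.168.1.74  nfs2.xykz.com   nfs2

cat "$HOSTS_FILE"
```

Run with `HOSTS_FILE=/etc/hosts` on each host after `hostnamectl set-hostname`; repeated runs leave the file unchanged.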

2. On all nodes, disable the idle timeout that closes SSH sessions automatically

sed -i 's/TMOUT=1800/#TMOUT=1800/g' /etc/profile

source /etc/profile

3. On all nodes, switch the yum repositories to the Aliyun mirror

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum makecache

4. On all nodes, enable SELinux (it must be enabled; the Tianyi Cloud images ship with it disabled)

sed -i 's/SELINUX=disabled/SELINUX=enforcing/g' /etc/selinux/config

reboot

sestatus
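The `sed` command above only rewrites a line that reads exactly `SELINUX=disabled`, so it can help to confirm what the config file says before rebooting. The following is a small sketch; `selinux_configured_mode` is a hypothetical helper name introduced here for illustration.

```shell
#!/usr/bin/env bash
# Print the mode assigned by the last "SELINUX=" line of a config file.
# Lines such as "SELINUXTYPE=..." and comments do not match the pattern.
selinux_configured_mode() {
    local cfg="${1:-/etc/selinux/config}"
    sed -n 's/^SELINUX=//p' "$cfg" | tail -n 1
}

cfg=/etc/selinux/config
if [ -f "$cfg" ] && [ "$(selinux_configured_mode "$cfg")" != "enforcing" ]; then
    echo "SELinux is not set to enforcing in $cfg" >&2
fi
```

After the reboot, `sestatus` remains the check for the mode that is actually in effect.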

5. Install the dependency packages on all nodes

yum install wget git yum-utils net-tools bind-utils iptables-services bridge-utils bash-completion kexec-tools sos psacct bash-completion.noarch bash-completion-extras.noarch java-1.8.0-openjdk-headless python-passlib NetworkManager -y

Once the packages are installed, update the whole system as well, then reboot the machine

yum update -y

reboot

6. On the master node, generate an RSA key pair and copy the public key to every node, including the master itself

ssh-keygen -t rsa

# Press Enter three times to accept the defaults

ssh-copy-id master.xykz.com

ssh-copy-id node1.xykz.com

ssh-copy-id node2.xykz.com

ssh-copy-id nfs2.xykz.com

7. Install the specified Docker version on every host except the NFS node (the NFS node does not need Docker)

yum install docker-1.13.1 -y

OpenShift 3 depends on Docker 1.13.1

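8. Reset the data disk on every host that runs Docker

# Remove the existing DOCKER volume group

vgremove DOCKER -y

# Put the fdisk commands into a file: d deletes the partition, w writes the change and exits

printf 'd\nw\n' > fdisk.txt

# Feed the commands to fdisk non-interactively

fdisk /dev/xvde < fdisk.txt

# Wipe the remaining signatures from the disk

wipefs --all /dev/xvde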

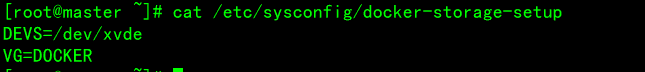

9. Configure dedicated Docker storage on each of these hosts

echo DEVS=/dev/xvde >> /etc/sysconfig/docker-storage-setup

echo VG=DOCKER >> /etc/sysconfig/docker-storage-setup

#echo SETUP_LVM_THIN_POOL=yes >> /etc/sysconfig/docker-storage-setup

# Size of the DATA thin pool to create; the default is 40% of the VG

#echo DATA_SIZE="100%FREE">> /etc/sysconfig/docker-storage-setup

# Apply the Docker storage configuration

docker-storage-setup
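Appending with `>>` adds a second `DEVS=` or `VG=` line each time the step is repeated. A sketch of a helper that keeps exactly one assignment per key is shown below. `set_kv` and `STORAGE_CFG` are illustrative names; the sketch writes to a temporary file unless `STORAGE_CFG` is set to /etc/sysconfig/docker-storage-setup.

```shell
#!/usr/bin/env bash
# Set KEY=VALUE in a config file, replacing any earlier assignment of KEY.
set_kv() {
    local file="$1" key="$2" value="$3"
    touch "$file"
    # Keep every line except earlier assignments of the key, then append the new one
    grep -v "^${key}=" "$file" > "${file}.tmp"
    echo "${key}=${value}" >> "${file}.tmp"
    # Rewrite in place so the original file keeps its owner and permissions
    cat "${file}.tmp" > "$file"
    rm -f "${file}.tmp"
}

# Assumption: STORAGE_CFG is a hypothetical variable used only in this sketch.
STORAGE_CFG="${STORAGE_CFG:-$(mktemp)}"
set_kv "$STORAGE_CFG" DEVS /dev/xvde
set_kv "$STORAGE_CFG" VG DOCKER
cat "$STORAGE_CFG"
```

The design choice here is to rewrite the file rather than patch a line with `sed`, which avoids escaping the slashes in `/dev/xvde`.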

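10. Point Docker at a registry mirror inside China to speed up image pulls

vim /etc/docker/daemon.json

{
  "registry-mirrors": [
    "https://registry.docker-cn.com"
  ]
}

# Note: this file must be valid JSON, otherwise Docker will not start. Restart the service after changing it.

# Enable and start Docker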

systemctl enable docker

systemctl start docker
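Because a malformed daemon.json prevents Docker from starting, the file can be checked before the `systemctl start docker` step. The sketch below relies on Python's standard `json.tool` module and assumes a `python3` or `python` interpreter is on the PATH; `json_is_valid` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Return 0 if the file parses as JSON, non-zero otherwise
# (2 means no Python interpreter was found).
json_is_valid() {
    local file="$1" py
    py="$(command -v python3 || command -v python)" || return 2
    "$py" -m json.tool "$file" > /dev/null 2>&1
}

cfg=/etc/docker/daemon.json
if [ -f "$cfg" ] && ! json_is_valid "$cfg"; then
    echo "$cfg is not valid JSON; fix it before starting Docker" >&2
fi
```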

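11. Install the EPEL repository, which provides Ansible

# Note: OpenShift 3.9 must be installed with Ansible 2.5

yum -y install epel-release

Disable the EPEL repository by default so that it does not cause errors in later package installs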

sed -i -e "s/^enabled=1/enabled=0/" /etc/yum.repos.d/epel.repo

12. Install Ansible

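# EPEL was disabled by default in the previous step, so enable it for this command only

yum -y --enablerepo=epel install ansible pyOpenSSL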

rpm -Uvh https://releases.ansible.com/ansible/rpm/release/epel-7-x86_64/ansible-2.6.4-1.el7.ans.noarch.rpm

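13. Download an openshift-ansible release from GitHub

# All releases are listed at

https://github.com/openshift/openshift-ansible/releases

# This guide uses 3.10.60-1

wget https://github.com/openshift/openshift-ansible/archive/openshift-ansible-3.10.60-1.zip

After the download finishes, unzip the archive under /home; the later steps expect the extracted directory at /home/openshift-ansible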

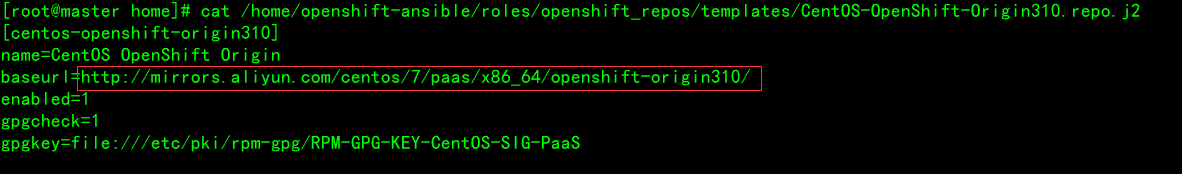

14. Change the package repository

vim /home/openshift-ansible/roles/openshift_repos/templates/CentOS-OpenShift-Origin310.repo.j2

In CentOS-OpenShift-Origin310.repo.j2, replace the repository URL with a mirror inside China, for example Aliyun:

http://mirrors.aliyun.com/centos/7/paas/x86_64/openshift-origin310/

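15. Configure the NFS node

# Partition the new disk

fdisk /dev/xvde

# Format the partition

mkfs.ext4 /dev/xvde1

# Create the export directory

mkdir -p /exports/

# Mount the partition

mount /dev/xvde1 /exports/

# Add the mount to /etc/fstab so that it is restored after a reboot

echo "/dev/xvde1 /exports ext4 defaults 1 2" >> /etc/fstab

# By default, SELinux does not allow pods to write to a remote NFS server: the NFS volume mounts correctly but is read-only.

# To allow writes, run the following on every node: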

setsebool -P virt_use_nfs 1

16. Configure the Ansible inventory on the master

[root@master home]# vim /etc/ansible/hosts

[OSEv3:children]

masters

nodes

etcd

nfs

[OSEv3:vars]

ansible_ssh_user=root

openshift_deployment_type=origin

openshift_release="3.10"

openshift_pkg_version=-3.10.0

openshift_image_tag=v3.10.0

openshift_install_examples=true

openshift_master_default_subdomain=apps.192.168.1.100.nip.io

openshift_check_min_host_disk_gb=1.5

openshift_check_min_host_memory_gb=1.9

openshift_portal_net=172.30.0.0/16

debug_level=4

openshift_docker_insecure_registries=172.30.0.0/16

# Skip the disk, Docker storage, memory and image availability checks

openshift_disable_check=disk_availability,docker_storage,memory_availability,docker_image_availability

openshift_master_identity_providers=[{'name': 'htpasswd_auth', 'login': 'true', 'challenge': 'true', 'kind': 'HTPasswdPasswordIdentityProvider'}]

openshift_clock_enabled=true

openshift_service_catalog_image_prefix=openshift/origin-

openshift_service_catalog_image_version=latest

openshift_enable_unsupported_configurations=True

openshift_hosted_registry_storage_kind=nfs

openshift_hosted_registry_storage_access_modes=['ReadWriteMany']

openshift_hosted_registry_storage_nfs_directory=/exports

openshift_hosted_registry_storage_nfs_options='*(rw,root_squash)'

openshift_hosted_registry_storage_volume_name=registry

openshift_hosted_registry_storage_volume_size=10Gi

openshift_metrics_storage_kind=nfs

openshift_metrics_storage_access_modes=['ReadWriteOnce']

openshift_metrics_storage_nfs_directory=/exports

openshift_metrics_storage_nfs_options='*(rw,root_squash)'

openshift_metrics_storage_volume_name=metrics

openshift_metrics_storage_volume_size=10Gi

openshift_metrics_storage_labels={'storage': 'metrics'}

openshift_logging_storage_kind=nfs

openshift_logging_storage_access_modes=['ReadWriteOnce']

openshift_logging_storage_nfs_directory=/exports

openshift_logging_storage_nfs_options='*(rw,root_squash)'

openshift_logging_storage_volume_name=logging

openshift_logging_storage_volume_size=10Gi

openshift_logging_storage_labels={'storage': 'logging'}

os_sdn_network_plugin_name=redhat/openshift-ovs-multitenant

openshift_ca_cert_expire_days=3650

openshift_node_cert_expire_days=3650

openshift_master_cert_expire_days=3650

etcd_ca_default_days=3650

# Enable cockpit

osm_use_cockpit=true

# Set cockpit plugins

osm_cockpit_plugins=['cockpit-kubernetes']

openshift_enable_service_catalog=false

template_service_broker_install=false

ansible_service_broker_install=false

[all:vars]

# bootstrap configs

#openshift_master_bootstrap_auto_approve=true

#openshift_master_bootstrap_auto_approver_node_selector={"node-role.kubernetes.io/master":"true"}

#osm_controller_args={"experimental-cluster-signing-duration": ["20m"]}

#osm_default_node_selector="node-role.kubernetes.io/compute=true"

[masters]

master

[etcd]

master

[nfs]

nfs2

[nodes]

master openshift_schedulable=True openshift_node_group_name="node-config-master-infra"

node1 openshift_schedulable=True openshift_node_group_name="node-config-compute"

node2 openshift_schedulable=True openshift_node_group_name="node-config-compute"
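When the same variable is assigned twice on one host line of the inventory, only one of the values can take effect, which is easy to miss in a long file. The sketch below scans an inventory for such lines. `find_duplicate_host_vars` is a hypothetical helper, and the check is deliberately simple: it splits on whitespace, so values that themselves contain spaces and `=` could produce false positives.

```shell
#!/usr/bin/env bash
# Report host lines on which the same variable name is assigned more than once.
find_duplicate_host_vars() {
    awk '
        # Skip blank lines, comments and [section] headers
        NF == 0 || $1 ~ /^#/ || $1 ~ /^\[/ { next }
        {
            split("", seen)                     # reset the set of names for this line
            for (i = 2; i <= NF; i++) {
                eq = index($i, "=")
                if (eq == 0) continue           # not a key=value token
                key = substr($i, 1, eq - 1)
                if (key in seen) {
                    print FNR ": " $1 ": duplicate variable " key
                    break
                }
                seen[key] = 1
            }
        }
    ' "$1"
}

inventory=/etc/ansible/hosts
if [ -f "$inventory" ]; then
    find_duplicate_host_vars "$inventory"
fi
```

An empty result means no host line repeats a variable name.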

17. Run the automated installation with Ansible

# Pre-installation checks and environment setup

ansible-playbook /home/openshift-ansible/playbooks/prerequisites.yml

# Run the actual installation

ansible-playbook /home/openshift-ansible/playbooks/deploy_cluster.yml

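18. Create a user after the installation completes

# On the master node, create the user dev with password dev (-c creates the file and overwrites an existing one, so omit -c when adding further users)

htpasswd -bc /etc/origin/master/htpasswd dev dev

# Check the status of all nodes

oc get nodes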

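19. On the NFS node, create the PV directories and set their permissions

for i in $(seq 1 20); do mkdir -p /exports/pv$i ; done;

chown -R nfsnobody. /exports/pv*

chmod -R 777 /exports/pv*

# Add one export entry per PV directory (the options must be inside the quotes, otherwise the shell rejects the parentheses)

for i in $(seq 1 20); do echo "/exports/pv$i *(rw,root_squash)"; done >> /etc/exports.d/openshift-ansible.exports

Restart the services so that the NFS configuration takes effect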

systemctl restart rpcbind nfs-server
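It can be useful to print the export entries and review them before they are appended to /etc/exports.d/openshift-ansible.exports. The sketch below generates one line per PV directory; `nfs_export_lines` is a hypothetical helper, and the base directory and count are parameters chosen for illustration.

```shell
#!/usr/bin/env bash
# Print one NFS export line per PV directory: <base>/pv1 ... <base>/pvN.
nfs_export_lines() {
    local base="$1" count="$2" i
    for i in $(seq 1 "$count"); do
        # The options sit inside the quoted format string, so the shell never parses the parentheses
        printf '%s/pv%s *(rw,root_squash)\n' "$base" "$i"
    done
}

# Review the output first, then redirect it with >> into the exports file
nfs_export_lines /exports 20
```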

20. On the master node, create a PV template and use it with the NFS exports to generate 20 PVs

cat >/tmp/pv.temp.yaml<<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: \$pvi
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    path: /exports/\$pvi
    server: nfs2
  persistentVolumeReclaimPolicy: Recycle
EOF

# Create the PVs

for i in $(seq 1 20); do export pvi=pv$i; cat /tmp/pv.temp.yaml|envsubst|oc create -f - ;done;
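The template approach above depends on `envsubst` and an exported variable. As an alternative sketch, a shell function can render each manifest directly from a here-document. `render_pv` and its optional server and size parameters are illustrative; the defaults mirror the values used in the template (`nfs2`, `5Gi`).

```shell
#!/usr/bin/env bash
# Print a PersistentVolume manifest for one NFS-backed PV.
# Usage: render_pv NAME [NFS_SERVER] [SIZE]
render_pv() {
    local name="$1" server="${2:-nfs2}" size="${3:-5Gi}"
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
  - ReadWriteOnce
  nfs:
    path: /exports/${name}
    server: ${server}
  persistentVolumeReclaimPolicy: Recycle
EOF
}

# Print the manifest for pv1 so it can be inspected
render_pv pv1
```

On the master, the manifests could then be created with a loop such as `for i in $(seq 1 20); do render_pv "pv$i" | oc create -f - ; done`.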