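In the previous section we wrote a simple program that decodes a video file into a series of images. We only called the APIs that FFmpeg provides, without knowing anything about how they are implemented. Starting with this post, we will analyze the internals of these APIs step by step. This section looks at how avformat_open_input is implemented.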

The avformat_open_input function is shown below:

/**

* Open an input stream and read the header. The codecs are not opened.

* The stream must be closed with avformat_close_input().

*

* @param ps Pointer to user-supplied AVFormatContext (allocated by avformat_alloc_context).

* May be a pointer to NULL, in which case an AVFormatContext is allocated by this

* function and written into ps.

* Note that a user-supplied AVFormatContext will be freed on failure.

* @param url URL of the stream to open.

* @param fmt If non-NULL, this parameter forces a specific input format.

* Otherwise the format is autodetected.

* @param options A dictionary filled with AVFormatContext and demuxer-private options.

* On return this parameter will be destroyed and replaced with a dict containing

* options that were not found. May be NULL.

*

* @return 0 on success, a negative AVERROR on failure.

*

* @note If you want to use custom IO, preallocate the format context and set its pb field.

*/

int avformat_open_input(AVFormatContext **ps, const char *filename,

AVInputFormat *fmt, AVDictionary **options)

{

AVFormatContext *s = *ps;

int i, ret = 0;

AVDictionary *tmp = NULL;

ID3v2ExtraMeta *id3v2_extra_meta = NULL;

if (!s && !(s = avformat_alloc_context()))

return AVERROR(ENOMEM);

if (!s->av_class) {

av_log(NULL, AV_LOG_ERROR, "Input context has not been properly allocated by avformat_alloc_context() and is not NULL either\n");

return AVERROR(EINVAL);

}

if (fmt)

s->iformat = fmt;

if (options)

av_dict_copy(&tmp, *options, 0);

if (s->pb) // must be before any goto fail

s->flags |= AVFMT_FLAG_CUSTOM_IO;

if ((ret = av_opt_set_dict(s, &tmp)) < 0)

goto fail;

if ((ret = init_input(s, filename, &tmp)) < 0)

goto fail;

s->probe_score = ret;

if (!s->protocol_whitelist && s->pb && s->pb->protocol_whitelist) {

s->protocol_whitelist = av_strdup(s->pb->protocol_whitelist);

if (!s->protocol_whitelist) {

ret = AVERROR(ENOMEM);

goto fail;

}

}

if (!s->protocol_blacklist && s->pb && s->pb->protocol_blacklist) {

s->protocol_blacklist = av_strdup(s->pb->protocol_blacklist);

if (!s->protocol_blacklist) {

ret = AVERROR(ENOMEM);

goto fail;

}

}

if (s->format_whitelist && av_match_list(s->iformat->name, s->format_whitelist, ',') <= 0) {

av_log(s, AV_LOG_ERROR, "Format not on whitelist \'%s\'\n", s->format_whitelist);

ret = AVERROR(EINVAL);

goto fail;

}

avio_skip(s->pb, s->skip_initial_bytes);

/* Check filename in case an image number is expected. */

if (s->iformat->flags & AVFMT_NEEDNUMBER) {

if (!av_filename_number_test(filename)) {

ret = AVERROR(EINVAL);

goto fail;

}

}

s->duration = s->start_time = AV_NOPTS_VALUE;

av_strlcpy(s->filename, filename ? filename : "", sizeof(s->filename));

/* Allocate private data. */

if (s->iformat->priv_data_size > 0) {

if (!(s->priv_data = av_mallocz(s->iformat->priv_data_size))) {

ret = AVERROR(ENOMEM);

goto fail;

}

if (s->iformat->priv_class) {

*(const AVClass **) s->priv_data = s->iformat->priv_class;

av_opt_set_defaults(s->priv_data);

if ((ret = av_opt_set_dict(s->priv_data, &tmp)) < 0)

goto fail;

}

}

/* e.g. AVFMT_NOFILE formats will not have a AVIOContext */

if (s->pb)

ff_id3v2_read(s, ID3v2_DEFAULT_MAGIC, &id3v2_extra_meta, 0);

if (!(s->flags&AVFMT_FLAG_PRIV_OPT) && s->iformat->read_header)

if ((ret = s->iformat->read_header(s)) < 0)//read header,reader mp4 box info

goto fail;

if (id3v2_extra_meta) {

if (!strcmp(s->iformat->name, "mp3") || !strcmp(s->iformat->name, "aac") ||

!strcmp(s->iformat->name, "tta")) {

if ((ret = ff_id3v2_parse_apic(s, &id3v2_extra_meta)) < 0)

goto fail;

} else

av_log(s, AV_LOG_DEBUG, "demuxer does not support additional id3 data, skipping\n");

}

ff_id3v2_free_extra_meta(&id3v2_extra_meta);

if ((ret = avformat_queue_attached_pictures(s)) < 0)

goto fail;

if (!(s->flags&AVFMT_FLAG_PRIV_OPT) && s->pb && !s->internal->data_offset)

s->internal->data_offset = avio_tell(s->pb);

s->internal->raw_packet_buffer_remaining_size = RAW_PACKET_BUFFER_SIZE;

for (i = 0; i < s->nb_streams; i++)

s->streams[i]->internal->orig_codec_id = s->streams[i]->codecpar->codec_id;

if (options) {

av_dict_free(options);

*options = tmp;

}

*ps = s;

return 0;

fail:

ff_id3v2_free_extra_meta(&id3v2_extra_meta);

av_dict_free(&tmp);

if (s->pb && !(s->flags & AVFMT_FLAG_CUSTOM_IO))

avio_closep(&s->pb);

avformat_free_context(s);

*ps = NULL;

return ret;

}

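Going by the comment, this function opens an input stream and reads its header; the codecs are not opened. Once you are done with the stream, it must be closed with avformat_close_input().

One thing to keep in mind when using it: if fmt is non-NULL, that is, the caller explicitly supplies an AVInputFormat, the function will not try to detect the format of the input. If fmt is NULL, the function probes the input and detects its format automatically.

Looking at the source, the function mainly does three things:

1. Allocates an AVFormatContext instance (only when the caller did not pass one in).

2. Calls init_input to initialize the input stream. This is where the AVInputFormat gets set.

3. Calls the read_header method of the AVInputFormat determined in the previous step to read the file header.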
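The three items above, together with the ownership rule from the doc comment (a context supplied by the caller is freed on failure and *ps is reset to NULL), can be modeled with a small self-contained sketch. ToyContext, toy_open and the two helper steps are hypothetical names invented for illustration; this is not FFmpeg code, only the shape of its control flow, and the "probe" here looks at nothing but the file extension.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for AVFormatContext. */
typedef struct ToyContext {
    const char *format_name;   /* set by the "init_input" step */
    int         header_read;   /* set by the "read_header" step */
} ToyContext;

/* Thing 2: pretend to detect the format, here from the extension only. */
static int toy_init_input(ToyContext *s, const char *filename)
{
    const char *dot = strrchr(filename, '.');
    if (!dot || strcmp(dot, ".mp4") != 0)
        return -1;             /* unknown format */
    s->format_name = "mov";
    return 0;
}

/* Thing 3: pretend to parse the container header. */
static int toy_read_header(ToyContext *s)
{
    s->header_read = 1;
    return 0;
}

/* Same calling convention as avformat_open_input: *ps may be NULL on entry. */
static int toy_open(ToyContext **ps, const char *filename)
{
    ToyContext *s = *ps;
    int ret;

    /* Thing 1: allocate the context only if the caller did not supply one. */
    if (!s && !(s = calloc(1, sizeof(*s))))
        return -2;

    if ((ret = toy_init_input(s, filename)) < 0)
        goto fail;
    if ((ret = toy_read_header(s)) < 0)
        goto fail;

    *ps = s;
    return 0;

fail:
    free(s);                   /* this also frees a caller-supplied context */
    *ps = NULL;
    return ret;
}
```

A caller would start with `ToyContext *ctx = NULL;` and pass `&ctx`. After a failed call, ctx is NULL again and there is nothing left to free, which is the same contract the real function documents.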

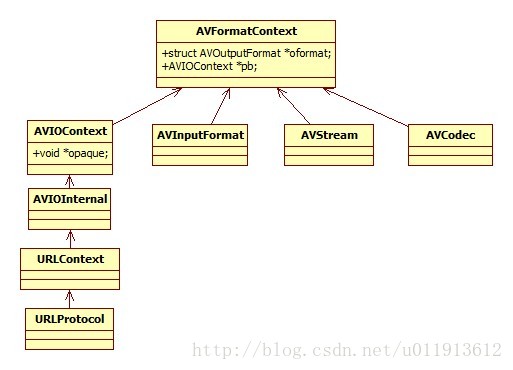

该函数不管做的事情有多么复杂,它主要还是围绕着初始化下面一些数据结构来的:

这三件事说起来简单,具体分析起来相当复杂,我们一件一件来看:

The first thing

Allocate an AVFormatContext structure. This is done by avformat_alloc_context, which is defined as follows:

AVFormatContext *avformat_alloc_context(void)

{

AVFormatContext *ic;

ic = av_malloc(sizeof(AVFormatContext));

if (!ic) return ic;

avformat_get_context_defaults(ic);

ic->internal = av_mallocz(sizeof(*ic->internal));

if (!ic->internal) {

avformat_free_context(ic);

return NULL;

}

ic->internal->offset = AV_NOPTS_VALUE;

ic->internal->raw_packet_buffer_remaining_size = RAW_PACKET_BUFFER_SIZE;

ic->internal->shortest_end = AV_NOPTS_VALUE;

return ic;

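}

This function calls av_malloc to allocate a block of memory, then uses avformat_get_context_defaults to apply the default initialization. After that it allocates memory for the internal member and initializes it.

avformat_get_context_defaults is shown below; let's see what it initializes.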

static void avformat_get_context_defaults(AVFormatContext *s)

{

memset(s, 0, sizeof(AVFormatContext));

s->av_class = &av_format_context_class;

s->io_open = io_open_default;

s->io_close = io_close_default;

av_opt_set_defaults(s);

}

The initialization done here includes:

1. The av_class member, which points to av_format_context_class. That structure is defined as follows:

static const AVClass av_format_context_class = {

.class_name = "AVFormatContext",

.item_name = format_to_name,

.option = avformat_options,

.version = LIBAVUTIL_VERSION_INT,

.child_next = format_child_next,

.child_class_next = format_child_class_next,

.category = AV_CLASS_CATEGORY_MUXER,

.get_category = get_category,

};

The option field of this structure is set to avformat_options, whose contents are:

static const AVOption avformat_options[] = {

{"avioflags", NULL, OFFSET(avio_flags), AV_OPT_TYPE_FLAGS, {.i64 = DEFAULT }, INT_MIN, INT_MAX, D|E, "avioflags"},

{"direct", "reduce buffering", 0, AV_OPT_TYPE_CONST, {.i64 = AVIO_FLAG_DIRECT }, INT_MIN, INT_MAX, D|E, "avioflags"},

{"probesize", "set probing size", OFFSET(probesize), AV_OPT_TYPE_INT64, {.i64 = 5000000 }, 32, INT64_MAX, D},

{"formatprobesize", "number of bytes to probe file format", OFFSET(format_probesize), AV_OPT_TYPE_INT, {.i64 = PROBE_BUF_MAX}, 0, INT_MAX-1, D},

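{"packetsize", "set packet size", OFFSET(packet_size), AV_OPT_TYPE_INT, {.i64 = DEFAULT }, 0, INT_MAX, E},

The table is very long, so only a few entries are listed here.

So what are AVClass and AVOption for?

Many FFmpeg structures have an AVClass member that describes the owning structure. An AVClass in turn has an option field that points to an array of AVOption entries. Each entry describes one configurable field of the owning structure: its name, help text, offset within the structure, type, default value and allowed range. These fields can be modified by name, and FFmpeg provides a set of functions dedicated to working with AVOption, declared in opt.h: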

int av_opt_set (void *obj, const char *name, const char *val, int search_flags);

const AVOption *av_opt_next(const void *obj, const AVOption *prev);

const AVOption *av_opt_find2(void *obj, const char *name, const char *unit,

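...

What these functions do can largely be guessed from their names, so I won't go into them here.

The av_opt_set_defaults(s) call that follows is what sets these fields: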

void av_opt_set_defaults(void *s)

{

av_opt_set_defaults2(s, 0, 0);

}

void av_opt_set_defaults2(void *s, int mask, int flags)

{

const AVOption *opt = NULL;

while ((opt = av_opt_next(s, opt))) {

void *dst = ((uint8_t*)s) + opt->offset;

if ((opt->flags & mask) != flags)

continue;

if (opt->flags & AV_OPT_FLAG_READONLY)

continue;

switch (opt->type) {

case AV_OPT_TYPE_CONST:

/* Nothing to be done here */

break;

case AV_OPT_TYPE_BOOL:

case AV_OPT_TYPE_FLAGS:

case AV_OPT_TYPE_INT:

case AV_OPT_TYPE_INT64:

case AV_OPT_TYPE_DURATION:

case AV_OPT_TYPE_CHANNEL_LAYOUT:

case AV_OPT_TYPE_PIXEL_FMT:

case AV_OPT_TYPE_SAMPLE_FMT:

write_number(s, opt, dst, 1, 1, opt->default_val.i64);

break;

case AV_OPT_TYPE_DOUBLE:

case AV_OPT_TYPE_FLOAT: {

double val;

val = opt->default_val.dbl;

write_number(s, opt, dst, val, 1, 1);

}

break;

case AV_OPT_TYPE_RATIONAL: {

AVRational val;

val = av_d2q(opt->default_val.dbl, INT_MAX);

write_number(s, opt, dst, 1, val.den, val.num);

}

break;

case AV_OPT_TYPE_COLOR:

set_string_color(s, opt, opt->default_val.str, dst);

break;

case AV_OPT_TYPE_STRING:

set_string(s, opt, opt->default_val.str, dst);

break;

case AV_OPT_TYPE_IMAGE_SIZE:

set_string_image_size(s, opt, opt->default_val.str, dst);

break;

case AV_OPT_TYPE_VIDEO_RATE:

set_string_video_rate(s, opt, opt->default_val.str, dst);

break;

case AV_OPT_TYPE_BINARY:

set_string_binary(s, opt, opt->default_val.str, dst);

break;

case AV_OPT_TYPE_DICT:

/* Cannot set defaults for these types */

break;

default:

av_log(s, AV_LOG_DEBUG, "AVOption type %d of option %s not implemented yet\n",

opt->type, opt->name);

}

}

}

This loop walks every AVOption entry and, depending on the entry's type, writes its default value into the corresponding field.
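The mechanism behind av_opt_set_defaults2 (a table of entries, each holding a field offset, a type and a default) can be reproduced in a few lines. The sketch below is a reduced model and not the FFmpeg implementation: ToyOption, ToyFormatContext and the "speed" option are made up for illustration, and only three types are handled.

```c
#include <stddef.h>
#include <stdint.h>

enum ToyOptType { TOY_OPT_INT, TOY_OPT_INT64, TOY_OPT_DOUBLE };

/* Hypothetical, reduced counterpart of AVOption. */
typedef struct ToyOption {
    const char      *name;
    size_t           offset;   /* offset of the field inside the owner struct */
    enum ToyOptType  type;
    union { int64_t i64; double dbl; } default_val;
} ToyOption;

/* Hypothetical owner struct, standing in for AVFormatContext. */
typedef struct ToyFormatContext {
    int     packet_size;
    int64_t probesize;
    double  speed;             /* invented field, only here to show a double */
} ToyFormatContext;

static const ToyOption toy_options[] = {
    { "packetsize", offsetof(ToyFormatContext, packet_size), TOY_OPT_INT,    { .i64 = 0 } },
    { "probesize",  offsetof(ToyFormatContext, probesize),   TOY_OPT_INT64,  { .i64 = 5000000 } },
    { "speed",      offsetof(ToyFormatContext, speed),       TOY_OPT_DOUBLE, { .dbl = 1.0 } },
    { NULL, 0, TOY_OPT_INT, { .i64 = 0 } }   /* a NULL name terminates the table */
};

/* Walk the table and write each default through the stored offset. */
static void toy_set_defaults(void *obj, const ToyOption *opts)
{
    for (const ToyOption *o = opts; o->name; o++) {
        void *dst = (uint8_t *)obj + o->offset;
        switch (o->type) {
        case TOY_OPT_INT:    *(int *)dst     = (int)o->default_val.i64; break;
        case TOY_OPT_INT64:  *(int64_t *)dst = o->default_val.i64;      break;
        case TOY_OPT_DOUBLE: *(double *)dst  = o->default_val.dbl;      break;
        }
    }
}
```

Because the setter only sees a `void *` and a table, the same loop works for any structure that ships its own option table, which is why FFmpeg can reuse one set of option functions for many different structs.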

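In other words, creating an AVFormatContext mostly comes down to initializing its internal member, its AVClass, and the fields described by the AVOption table in that AVClass. In addition, io_open is set to io_open_default and io_close is set to io_close_default.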
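The io_open assignment matters later, because the library never calls the opener directly; it always goes through the pointer. A minimal sketch of that pattern follows. CbContext and the cb_ functions are hypothetical names, and the opened_by marker exists only so the effect can be observed.

```c
/* Hypothetical stand-in for AVFormatContext: a callback and a marker. */
typedef struct CbContext {
    int (*io_open)(struct CbContext *s, const char *url);
    int opened_by;   /* 1 = default opener, 2 = caller-supplied opener */
} CbContext;

static int cb_io_open_default(CbContext *s, const char *url)
{
    (void)url;
    s->opened_by = 1;
    return 0;
}

/* Plays the role of avformat_get_context_defaults for the callback. */
static void cb_get_defaults(CbContext *s)
{
    s->io_open   = cb_io_open_default;
    s->opened_by = 0;
}

/* A replacement a caller might install, e.g. to read from memory. */
static int cb_io_open_custom(CbContext *s, const char *url)
{
    (void)url;
    s->opened_by = 2;
    return 0;
}

/* The library side only ever calls through the pointer. */
static int cb_init_input(CbContext *s, const char *url)
{
    return s->io_open(s, url);
}
```

With the defaults in place, cb_init_input ends up in cb_io_open_default; after assigning `s.io_open = cb_io_open_custom` the very same call reaches the caller's function instead.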

The second thing

The second thing is what init_input does. The function is defined as follows:

/* Open input file and probe the format if necessary. */

static int init_input(AVFormatContext *s, const char *filename,

AVDictionary **options)

{

int ret;

AVProbeData pd = { filename, NULL, 0 };

int score = AVPROBE_SCORE_RETRY;

if (s->pb) {

s->flags |= AVFMT_FLAG_CUSTOM_IO;

if (!s->iformat)

return av_probe_input_buffer2(s->pb, &s->iformat, filename,

s, 0, s->format_probesize);

else if (s->iformat->flags & AVFMT_NOFILE)

av_log(s, AV_LOG_WARNING, "Custom AVIOContext makes no sense and "

"will be ignored with AVFMT_NOFILE format.\n");

return 0;

}

if ((s->iformat && s->iformat->flags & AVFMT_NOFILE) ||

(!s->iformat && (s->iformat = av_probe_input_format2(&pd, 0, &score))))

return score;

if ((ret = s->io_open(s, &s->pb, filename, AVIO_FLAG_READ | s->avio_flags, options)) < 0)

return ret;

if (s->iformat)

return 0;

return av_probe_input_buffer2(s->pb, &s->iformat, filename,

s, 0, s->format_probesize);

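}

According to its comment, it opens the input file and probes its format.

So this function mainly does two things:

1. Opens the input file

2. Probes the format of the input file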
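Which of these two steps actually runs depends on what the caller has already supplied. The branches of init_input can be summarized as a pure decision function. The enum and the function below are hypothetical and exist only to make the branch structure explicit; the four flags correspond to "s->pb is set", "s->iformat is set", "the format has AVFMT_NOFILE", and "av_probe_input_format2 succeeded on the file name alone".

```c
enum InitAction {
    INIT_PROBE_CUSTOM_IO,   /* caller's AVIOContext, no format: probe its data */
    INIT_USE_CUSTOM_IO,     /* caller's AVIOContext, format forced: nothing to do */
    INIT_NO_FILE_NEEDED,    /* AVFMT_NOFILE format, or format found from the name */
    INIT_OPEN_ONLY,         /* open the file, format already forced */
    INIT_OPEN_AND_PROBE     /* open the file, then probe its contents */
};

static enum InitAction init_plan(int has_custom_io, int format_forced,
                                 int format_is_nofile, int name_probe_succeeds)
{
    if (has_custom_io)
        return format_forced ? INIT_USE_CUSTOM_IO : INIT_PROBE_CUSTOM_IO;

    if (format_forced && format_is_nofile)
        return INIT_NO_FILE_NEEDED;
    if (!format_forced && name_probe_succeeds)
        return INIT_NO_FILE_NEEDED;

    return format_forced ? INIT_OPEN_ONLY : INIT_OPEN_AND_PROBE;
}
```

For the case followed in this post (a plain xxx.mp4 path, no custom I/O, no forced format) the result is INIT_OPEN_AND_PROBE, which is why the rest of this section first traces the open path and then the probe path.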

The file is opened through s->io_open. io_open is a function pointer; as we saw in the first thing, its value is io_open_default. This function is defined in libavformat/options.c:

static int io_open_default(AVFormatContext *s, AVIOContext **pb,

const char *url, int flags, AVDictionary **options)

{

#if FF_API_OLD_OPEN_CALLBACKS

FF_DISABLE_DEPRECATION_WARNINGS

if (s->open_cb)

return s->open_cb(s, pb, url, flags, &s->interrupt_callback, options);

FF_ENABLE_DEPRECATION_WARNINGS

#endif

return ffio_open_whitelist(pb, url, flags, &s->interrupt_callback, options, s->protocol_whitelist, s->protocol_blacklist);

}

We never set open_cb, so it is NULL and the call goes to ffio_open_whitelist.

That function is defined in libavformat/aviobuf.c:

int ffio_open_whitelist(AVIOContext **s, const char *filename, int flags,

const AVIOInterruptCB *int_cb, AVDictionary **options,

const char *whitelist, const char *blacklist

)

{

URLContext *h;

int err;

err = ffurl_open_whitelist(&h, filename, flags, int_cb, options, whitelist, blacklist, NULL);

if (err < 0)

return err;

err = ffio_fdopen(s, h);

if (err < 0) {

ffurl_close(h);

return err;

}

return 0;

}

It first calls ffurl_open_whitelist, which is defined in libavformat/avio.c:

int ffurl_open_whitelist(URLContext **puc, const char *filename, int flags,

const AVIOInterruptCB *int_cb, AVDictionary **options,

const char *whitelist, const char* blacklist,

URLContext *parent)

{

AVDictionary *tmp_opts = NULL;

AVDictionaryEntry *e;

int ret = ffurl_alloc(puc, filename, flags, int_cb);

if (ret < 0)

return ret;

if (parent)

av_opt_copy(*puc, parent);

if (options &&

(ret = av_opt_set_dict(*puc, options)) < 0)

goto fail;

if (options && (*puc)->prot->priv_data_class &&

(ret = av_opt_set_dict((*puc)->priv_data, options)) < 0)

goto fail;

if (!options)

options = &tmp_opts;

av_assert0(!whitelist ||

!(e=av_dict_get(*options, "protocol_whitelist", NULL, 0)) ||

!strcmp(whitelist, e->value));

av_assert0(!blacklist ||

!(e=av_dict_get(*options, "protocol_blacklist", NULL, 0)) ||

!strcmp(blacklist, e->value));

if ((ret = av_dict_set(options, "protocol_whitelist", whitelist, 0)) < 0)

goto fail;

if ((ret = av_dict_set(options, "protocol_blacklist", blacklist, 0)) < 0)

goto fail;

if ((ret = av_opt_set_dict(*puc, options)) < 0)

goto fail;

ret = ffurl_connect(*puc, options);

if (!ret)

return 0;

fail:

ffurl_close(*puc);

*puc = NULL;

return ret;

}

This function first uses ffurl_alloc to allocate a URLContext. ffurl_alloc is also defined in avio.c:

int ffurl_alloc(URLContext **puc, const char *filename, int flags,

const AVIOInterruptCB *int_cb)

{

URLProtocol *p = NULL;

if (!first_protocol) {

av_log(NULL, AV_LOG_WARNING, "No URL Protocols are registered. "

"Missing call to av_register_all()?\n");

}

p = url_find_protocol(filename);

if (p)

return url_alloc_for_protocol(puc, p, filename, flags, int_cb);

*puc = NULL;

if (av_strstart(filename, "https:", NULL))

av_log(NULL, AV_LOG_WARNING, "https protocol not found, recompile FFmpeg with "

"openssl, gnutls,\n"

"or securetransport enabled.\n");

return AVERROR_PROTOCOL_NOT_FOUND;

}

ffurl_alloc finds the protocol that matches the given file name. It does so with url_find_protocol, which is also defined in avio.c:

static struct URLProtocol *url_find_protocol(const char *filename)

{

URLProtocol *up = NULL;

char proto_str[128], proto_nested[128], *ptr;

size_t proto_len = strspn(filename, URL_SCHEME_CHARS);

printf("proto_len=%zu\n",proto_len);

printf("name is %s\n",filename);

//hello.mp4

if (filename[proto_len] != ':' &&

(strncmp(filename, "subfile,", 8) || !strchr(filename + proto_len + 1, ':')) ||

is_dos_path(filename))

strcpy(proto_str, "file");

else

av_strlcpy(proto_str, filename,

FFMIN(proto_len + 1, sizeof(proto_str)));

//proto_str = file

printf("proto_str=%s\n",proto_str);

if ((ptr = strchr(proto_str, ',')))

*ptr = '\0';

av_strlcpy(proto_nested, proto_str, sizeof(proto_nested));

//proto_nested = proto_str = file

printf("proto_nested=%s\n",proto_nested);

if ((ptr = strchr(proto_nested, '+')))

*ptr = '\0';

while (up = ffurl_protocol_next(up)) {

printf("int while:::up->name %s\n",up->name);

if (!strcmp(proto_str, up->name))

break;

if (up->flags & URL_PROTOCOL_FLAG_NESTED_SCHEME &&

!strcmp(proto_nested, up->name))

break;

}

printf("up->name %s\n", up ? up->name : "(null)");

return up;

}
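The scheme-detection part of url_find_protocol in the listing above can be tried out in isolation. The sketch below keeps only the core rule: take the leading run of scheme characters, and if it is not followed by ':', fall back to "file". It deliberately leaves out the subfile, DOS path and nested-scheme ('+') handling of the real function. scheme_of is a hypothetical helper, and SCHEME_CHARS is an assumption about what URL_SCHEME_CHARS contains, since its definition is not shown in this post.

```c
#include <stdio.h>
#include <string.h>

/* Assumed contents of URL_SCHEME_CHARS (not shown in this post). */
#define SCHEME_CHARS "abcdefghijklmnopqrstuvwxyz" \
                     "ABCDEFGHIJKLMNOPQRSTUVWXYZ" \
                     "0123456789+-."

/* Writes the protocol name for filename into out and returns out. */
static const char *scheme_of(const char *filename, char *out, size_t out_size)
{
    /* Length of the leading run made of scheme characters only. */
    size_t len = strspn(filename, SCHEME_CHARS);

    if (filename[len] != ':') {
        /* No "scheme:" prefix, e.g. "hello.mp4": use the file protocol. */
        snprintf(out, out_size, "%s", "file");
    } else {
        /* Copy the scheme itself; snprintf truncates if out is too small. */
        snprintf(out, out_size, "%.*s", (int)len, filename);
    }
    return out;
}
```

Note that for "hello.mp4" the whole name consists of scheme characters, so the run covers the entire string and the character after it is the terminating NUL rather than ':'. That is the path that yields "file" for a bare local file name.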

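To make the url_find_protocol listing above easier to follow, I added some printf calls to it. If the argument is a local file such as xxx.mp4, the protocol this function finds is the file protocol, which is defined in libavformat/file.c: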

const URLProtocol ff_file_protocol = {

.name = "file",

.url_open = file_open,

.url_read = file_read,

.url_write = file_write,

.url_seek = file_seek,

.url_close = file_close,

.url_get_file_handle = file_get_handle,

.url_check = file_check,

.url_delete = file_delete,

.url_move = file_move,

.priv_data_size = sizeof(FileContext),

.priv_data_class = &file_class,

.url_open_dir = file_open_dir,

.url_read_dir = file_read_dir,

.url_close_dir = file_close_dir,

.default_whitelist = "file,crypto"

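};

As you can see, this protocol defines the operations on a file; from now on, opening, reading and writing the video file all go through the functions listed here. We now have a URLProtocol instance that holds the file operations. Once the protocol has been found, url_alloc_for_protocol creates the URLContext instance and does a fair amount of initialization. This function is also defined in avio.c: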

static int url_alloc_for_protocol(URLContext **puc, const URLProtocol *up,

const char *filename, int flags,

const AVIOInterruptCB *int_cb)

{

URLContext *uc;

int err;

#if CONFIG_NETWORK

if (up->flags & URL_PROTOCOL_FLAG_NETWORK && !ff_network_init())

return AVERROR(EIO);

#endif

if ((flags & AVIO_FLAG_READ) && !up->url_read) {

av_log(NULL, AV_LOG_ERROR,

"Impossible to open the '%s' protocol for reading\n", up->name);

return AVERROR(EIO);

}

if ((flags & AVIO_FLAG_WRITE) && !up->url_write) {

av_log(NULL, AV_LOG_ERROR,

"Impossible to open the '%s' protocol for writing\n", up->name);

return AVERROR(EIO);

}

uc = av_mallocz(sizeof(URLContext) + strlen(filename) + 1);

if (!uc) {

err = AVERROR(ENOMEM);

goto fail;

}

uc->av_class = &ffurl_context_class;

uc->filename = (char *)&uc[1];

strcpy(uc->filename, filename);

uc->prot = up;

uc->flags = flags;

uc->is_streamed = 0; /* default = not streamed */

uc->max_packet_size = 0; /* default: stream file */

if (up->priv_data_size) {

uc->priv_data = av_mallocz(up->priv_data_size);

if (!uc->priv_data) {

err = AVERROR(ENOMEM);

goto fail;

}

if (up->priv_data_class) {

int proto_len= strlen(up->name);

char *start = strchr(uc->filename, ',');

*(const AVClass **)uc->priv_data = up->priv_data_class;

av_opt_set_defaults(uc->priv_data);

if(!strncmp(up->name, uc->filename, proto_len) && uc->filename + proto_len == start){

int ret= 0;

char *p= start;

char sep= *++p;

char *key, *val;

p++;

if (strcmp(up->name, "subfile"))

ret = AVERROR(EINVAL);

while(ret >= 0 && (key= strchr(p, sep)) && p<key && (val = strchr(key+1, sep))){

*val= *key= 0;

if (strcmp(p, "start") && strcmp(p, "end")) {

ret = AVERROR_OPTION_NOT_FOUND;

} else

ret= av_opt_set(uc->priv_data, p, key+1, 0);

if (ret == AVERROR_OPTION_NOT_FOUND)

av_log(uc, AV_LOG_ERROR, "Key '%s' not found.\n", p);

*val= *key= sep;

p= val+1;

}

if(ret<0 || p!=key){

av_log(uc, AV_LOG_ERROR, "Error parsing options string %s\n", start);

av_freep(&uc->priv_data);

av_freep(&uc);

err = AVERROR(EINVAL);

goto fail;

}

memmove(start, key+1, strlen(key));

}

}

}

if (int_cb)

uc->interrupt_callback = *int_cb;

*puc = uc;

return 0;

fail:

*puc = NULL;

if (uc)

av_freep(&uc->priv_data);

av_freep(&uc);

#if CONFIG_NETWORK

if (up->flags & URL_PROTOCOL_FLAG_NETWORK)

ff_network_close();

#endif

return err;

}

This creates a URLContext instance and initializes many of its fields:

uc->av_class = &ffurl_context_class;

uc->filename = (char *)&uc[1];

strcpy(uc->filename, filename);

uc->prot = up;

uc->flags = flags;

uc->is_streamed = 0; /* default = not streamed */

uc->max_packet_size = 0; /* default: stream file */

Once this is done we have a URLContext instance, and with it a URLProtocol instance, which is stored in uc->prot.
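One detail in the initialization above is easy to miss: `uc->filename = (char *)&uc[1]` makes the file name live in the same allocation as the structure, directly behind it. A minimal sketch of that layout trick follows, using a hypothetical TrailURLContext and plain calloc in place of av_mallocz.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical, reduced counterpart of URLContext. */
typedef struct TrailURLContext {
    char *filename;   /* points just past the struct, into the same block */
    int   flags;
} TrailURLContext;

static TrailURLContext *trail_alloc(const char *filename, int flags)
{
    /* One zeroed block: the struct, then room for the name and its NUL. */
    TrailURLContext *uc = calloc(1, sizeof(*uc) + strlen(filename) + 1);
    if (!uc)
        return NULL;

    uc->filename = (char *)&uc[1];   /* first byte after the struct */
    strcpy(uc->filename, filename);  /* safe: the space was reserved above */
    uc->flags = flags;
    return uc;
}
```

The benefit is that a single free() releases both the structure and the string, so there is no separate cleanup path for the name.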

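Back in ffurl_open_whitelist, the next call is ffurl_connect. As the name suggests, it establishes the connection; since our input is a local file, we would expect it to open that file. It is again defined in avio.c: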

int ffurl_connect(URLContext *uc, AVDictionary **options)

{

int err;

AVDictionary *tmp_opts = NULL;

AVDictionaryEntry *e;

...

err =

uc->prot->url_open2 ? uc->prot->url_open2(uc,

uc->filename,

uc->flags,

options) :

uc->prot->url_open(uc, uc->filename, uc->flags);

av_dict_set(options, "protocol_whitelist", NULL, 0);

av_dict_set(options, "protocol_blacklist", NULL, 0);

if (err)

return err;

uc->is_connected = 1;

/* We must be careful here as ffurl_seek() could be slow,

* for example for http */

if ((uc->flags & AVIO_FLAG_WRITE) || !strcmp(uc->prot->name, "file"))

if (!uc->is_streamed && ffurl_seek(uc, 0, SEEK_SET) < 0)

uc->is_streamed = 1;

return 0;

}

Sure enough, url_open is called here, which for the file protocol means file_open. That function is defined in libavformat/file.c:

static int file_open(URLContext *h, const char *filename, int flags)

{

FileContext *c = h->priv_data;

int access;

int fd;

struct stat st;

av_strstart(filename, "file:", &filename);

if (flags & AVIO_FLAG_WRITE && flags & AVIO_FLAG_READ) {

access = O_CREAT | O_RDWR;

if (c->trunc)

access |= O_TRUNC;

} else if (flags & AVIO_FLAG_WRITE) {

access = O_CREAT | O_WRONLY;

if (c->trunc)

access |= O_TRUNC;

} else {

access = O_RDONLY;

}

#ifdef O_BINARY

access |= O_BINARY;

#endif

fd = avpriv_open(filename, access, 0666);

if (fd == -1)

return AVERROR(errno);

c->fd = fd;

h->is_streamed = !fstat(fd, &st) && S_ISFIFO(st.st_mode);

return 0;

}

After working out the access flags, it calls avpriv_open, which is defined in libavutil/file_open.c:

int avpriv_open(const char *filename, int flags, ...)

{

int fd;

unsigned int mode = 0;

va_list ap;

va_start(ap, flags);

if (flags & O_CREAT)

mode = va_arg(ap, unsigned int);

va_end(ap);

#ifdef O_CLOEXEC

flags |= O_CLOEXEC;

#endif

#ifdef O_NOINHERIT

flags |= O_NOINHERIT;

#endif

fd = open(filename, flags, mode);

#if HAVE_FCNTL

if (fd != -1) {

if (fcntl(fd, F_SETFD, FD_CLOEXEC) == -1)

av_log(NULL, AV_LOG_DEBUG, "Failed to set close on exec\n");

}

#endif

return fd;

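}

Here we finally arrive at a plain system call: open is called, it returns a file descriptor, and the job of this function is done. Back in file_open, the descriptor is stored in the fd member of the FileContext structure: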

c->fd = fd;
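The chain traced so far (find a protocol by name, call its url_open through a function pointer, keep the descriptor in the context) can be condensed into a small POSIX sketch. VtProtocol, VtURL and the vt_ functions are hypothetical; the real URLProtocol has many more callbacks and keeps the descriptor in a separate FileContext rather than in the context itself.

```c
#include <fcntl.h>
#include <stddef.h>
#include <string.h>
#include <unistd.h>

typedef struct VtURL VtURL;

/* Hypothetical, reduced counterpart of URLProtocol. */
typedef struct VtProtocol {
    const char *name;
    int (*url_open)(VtURL *h, const char *filename);
    int (*url_close)(VtURL *h);
} VtProtocol;

/* Hypothetical, reduced counterpart of URLContext plus FileContext. */
struct VtURL {
    const VtProtocol *prot;
    int fd;
    int is_connected;
};

static int vt_file_open(VtURL *h, const char *filename)
{
    int fd = open(filename, O_RDONLY);   /* read-only, as in the demuxing case */
    if (fd == -1)
        return -1;
    h->fd = fd;
    return 0;
}

static int vt_file_close(VtURL *h)
{
    int ret = close(h->fd);
    h->fd = -1;
    h->is_connected = 0;
    return ret;
}

static const VtProtocol vt_protocols[] = {
    { "file", vt_file_open, vt_file_close },
};

/* Linear search by name, like the loop in url_find_protocol. */
static const VtProtocol *vt_find_protocol(const char *name)
{
    for (size_t i = 0; i < sizeof(vt_protocols) / sizeof(vt_protocols[0]); i++)
        if (strcmp(vt_protocols[i].name, name) == 0)
            return &vt_protocols[i];
    return NULL;
}

/* Like ffurl_connect: dispatch to the protocol's url_open, then mark connected. */
static int vt_connect(VtURL *h, const char *scheme, const char *filename)
{
    h->prot = vt_find_protocol(scheme);
    if (!h->prot)
        return -1;
    if (h->prot->url_open(h, filename) < 0)
        return -1;
    h->is_connected = 1;
    return 0;
}
```

Adding another protocol to this model only means adding one more entry to the table; the connect code does not change, which is the point of routing everything through the protocol structure.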

The calls then keep returning until we are back in ffio_open_whitelist in aviobuf.c. Next it calls ffio_fdopen, which is defined in the same file, libavformat/aviobuf.c:

int ffio_fdopen(AVIOContext **s, URLContext *h)

{

AVIOInternal *internal = NULL;

uint8_t *buffer = NULL;

int buffer_size, max_packet_size;

max_packet_size = h->max_packet_size;

if (max_packet_size) {

buffer_size = max_packet_size; /* no need to bufferize more than one packet */

} else {

buffer_size = IO_BUFFER_SIZE;

}

buffer = av_malloc(buffer_size);

if (!buffer)

return AVERROR(ENOMEM);

internal = av_mallocz(sizeof(*internal));

if (!internal)

goto fail;

internal->h = h;

*s = avio_alloc_context(buffer, buffer_size, h->flags & AVIO_FLAG_WRITE,

internal, io_read_packet, io_write_packet, io_seek);

if (!*s)

goto fail;

(*s)->protocol_whitelist = av_strdup(h->protocol_whitelist);

if (!(*s)->protocol_whitelist && h->protocol_whitelist) {

avio_closep(s);

goto fail;

}

(*s)->protocol_blacklist = av_strdup(h->protocol_blacklist);

if (!(*s)->protocol_blacklist && h->protocol_blacklist) {

avio_closep(s);

goto fail;

}

(*s)->direct = h->flags & AVIO_FLAG_DIRECT;

(*s)->seekable = h->is_streamed ? 0 : AVIO_SEEKABLE_NORMAL;

(*s)->max_packet_size = max_packet_size;

if(h->prot) {

(*s)->read_pause = io_read_pause;

(*s)->read_seek = io_read_seek;

}

(*s)->av_class = &ff_avio_class;

return 0;

fail:

av_freep(&internal);

av_freep(&buffer);

return AVERROR(ENOMEM);

}

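This function does quite a lot:

1. It allocates a buffer, whose default size is defined by: #define IO_BUFFER_SIZE 32768

The buffer size would normally follow the URLContext's max_packet_size. Since url_alloc_for_protocol initialized that field to 0 when it set up the URLContext, the buffer allocated here is 32768 bytes.

2. It allocates an AVIOContext structure, which is also an important one. From this point on we have an AVIOContext instance, and some of its fields are initialized right after allocation: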

(*s)->direct = h->flags & AVIO_FLAG_DIRECT;

(*s)->seekable = h->is_streamed ? 0 : AVIO_SEEKABLE_NORMAL;

(*s)->max_packet_size = max_packet_size;

(*s)->av_class = &ff_avio_class;

Note that seekable is AVIO_SEEKABLE_NORMAL here because is_streamed is 0: url_alloc_for_protocol initialized it to 0, and file_open only sets it to 1 when the opened file is a FIFO.
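The is_streamed test in file_open is an ordinary fstat check and can be exercised on its own. The sketch below repeats that expression in a hypothetical helper, fd_is_streamed, and adds a stand-in for the seekable assignment in which 1 plays the role of AVIO_SEEKABLE_NORMAL. It assumes a POSIX system.

```c
#include <fcntl.h>
#include <sys/stat.h>
#include <unistd.h>

/* Same expression as in file_open: streamed means "the descriptor is a FIFO". */
static int fd_is_streamed(int fd)
{
    struct stat st;
    return !fstat(fd, &st) && S_ISFIFO(st.st_mode);
}

/* Stand-in for the seekable line in ffio_fdopen; 1 means "normally seekable". */
static int fd_seekable(int fd)
{
    return fd_is_streamed(fd) ? 0 : 1;
}
```

Calling fd_is_streamed on the read end of a pipe() yields 1, while a descriptor obtained by opening an ordinary path yields 0. This is why piping a file into a program and opening the same file by name can behave differently when the demuxer needs to seek.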

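With both of these done, the calls return all the way back to init_input. In case you have lost track: this is still the second thing that avformat_open_input, the subject of this post, does.

init_input then calls av_probe_input_buffer2, which probes the buffered input to determine the file format. This is where the very important AVInputFormat is selected. The function is defined in libavformat/format.c: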

int av_probe_input_buffer2(AVIOContext *pb, AVInputFormat **fmt,

const char *filename, void *logctx,

unsigned int offset, unsigned int max_probe_size)

{

AVProbeData pd = { filename ? filename : "" };

uint8_t *buf = NULL;

int ret = 0, probe_size, buf_offset = 0;

int score = 0;

int ret2;

if (!max_probe_size)

max_probe_size = PROBE_BUF_MAX;

else if (max_probe_size < PROBE_BUF_MIN) {

av_log(logctx, AV_LOG_ERROR,

"Specified probe size value %u cannot be < %u\n", max_probe_size, PROBE_BUF_MIN);

return AVERROR(EINVAL);

}

if (offset >= max_probe_size)

return AVERROR(EINVAL);

if (pb->av_class) {

uint8_t *mime_type_opt = NULL;

av_opt_get(pb, "mime_type", AV_OPT_SEARCH_CHILDREN, &mime_type_opt);

pd.mime_type = (const char *)mime_type_opt;

}

#if 0

if (!*fmt && pb->av_class && av_opt_get(pb, "mime_type", AV_OPT_SEARCH_CHILDREN, &mime_type) >= 0 && mime_type) {

if (!av_strcasecmp(mime_type, "audio/aacp")) {

*fmt = av_find_input_format("aac");

}

av_freep(&mime_type);

}

#endif

for (probe_size = PROBE_BUF_MIN; probe_size <= max_probe_size && !*fmt;

probe_size = FFMIN(probe_size << 1,

FFMAX(max_probe_size, probe_size + 1))) {

score = probe_size < max_probe_size ? AVPROBE_SCORE_RETRY : 0;

/* Read probe data. */

if ((ret = av_reallocp(&buf, probe_size + AVPROBE_PADDING_SIZE)) < 0)

goto fail;

if ((ret = avio_read(pb, buf + buf_offset,

probe_size - buf_offset)) < 0) {

/* Fail if error was not end of file, otherwise, lower score. */

if (ret != AVERROR_EOF)

goto fail;

score = 0;

ret = 0; /* error was end of file, nothing read */

}

buf_offset += ret;

if (buf_offset < offset)

continue;

pd.buf_size = buf_offset - offset;

pd.buf = &buf[offset];

memset(pd.buf + pd.buf_size, 0, AVPROBE_PADDING_SIZE);

/* Guess file format. */

*fmt = av_probe_input_format2(&pd, 1, &score);

if (*fmt) {

/* This can only be true in the last iteration. */

if (score <= AVPROBE_SCORE_RETRY) {

av_log(logctx, AV_LOG_WARNING,

"Format %s detected only with low score of %d, "

"misdetection possible!\n", (*fmt)->name, score);

} else

av_log(logctx, AV_LOG_DEBUG,

"Format %s probed with size=%d and score=%d\n",

(*fmt)->name, probe_size, score);

#if 0

FILE *f = fopen("probestat.tmp", "ab");

fprintf(f, "probe_size:%d format:%s score:%d filename:%s\n", probe_size, (*fmt)->name, score, filename);

fclose(f);

#endif

}

}

if (!*fmt)

ret = AVERROR_INVALIDDATA;

fail:

/* Rewind. Reuse probe buffer to avoid seeking. */

ret2 = ffio_rewind_with_probe_data(pb, &buf, buf_offset);

if (ret >= 0)

ret = ret2;

av_freep(&pd.mime_type);

return ret < 0 ? ret : score;

}

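What this function does is probe the file format. It keeps reading from the file and analyzing what it has read until the format has been determined.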
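How much data is examined on each pass follows from the update expression of the for loop in av_probe_input_buffer2. The sketch below reproduces just that expression and records the sequence of probe sizes. probe_sizes is a hypothetical helper, PROBE_MIN is set to the 2048 that PROBE_BUF_MIN stands for, and the assert is an extra guard of this sketch, not something the original does.

```c
#include <assert.h>
#include <stddef.h>

#define PROBE_MIN 2048u   /* value of PROBE_BUF_MIN */
#define P_MIN(a, b) ((a) < (b) ? (a) : (b))
#define P_MAX(a, b) ((a) > (b) ? (a) : (b))

/*
 * Stores up to out_cap probe sizes in out and returns how many iterations
 * the loop would run if no format were ever detected.
 */
static size_t probe_sizes(unsigned max_probe_size, unsigned *out, size_t out_cap)
{
    size_t n = 0;

    /* Guard for this sketch: keeps probe_size << 1 from overflowing. */
    assert(max_probe_size <= (1u << 30));

    for (unsigned probe_size = PROBE_MIN; probe_size <= max_probe_size;
         probe_size = P_MIN(probe_size << 1,
                            P_MAX(max_probe_size, probe_size + 1))) {
        if (n < out_cap)
            out[n] = probe_size;
        n++;
    }
    return n;
}
```

With max_probe_size set to 5000 the sizes are 2048, 4096 and 5000: the window doubles until doubling would overshoot, the last pass uses exactly the maximum, and the `probe_size + 1` term then pushes the counter past the limit so the loop ends.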

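The for loop starts with a probe size of PROBE_BUF_MIN bytes, which is 2048, and roughly doubles it on every iteration (capped at max_probe_size) for as long as no format has been found. After each read it calls av_probe_input_format2 to guess the file format.

That function is also defined in format.c: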

AVInputFormat *av_probe_input_format2(AVProbeData *pd, int is_opened, int *score_max)

{

int score_ret;

AVInputFormat *fmt = av_probe_input_format3(pd, is_opened, &score_ret);

if (score_ret > *score_max) {

*score_max = score_ret;

return fmt;

} else

return NULL;

}

This hands the work over to av_probe_input_format3, which is also in format.c:

AVInputFormat *av_probe_input_format3(AVProbeData *pd, int is_opened,

int *score_ret)

{

AVProbeData lpd = *pd;

AVInputFormat *fmt1 = NULL, *fmt;

int score, nodat = 0, score_max = 0;

const static uint8_t zerobuffer[AVPROBE_PADDING_SIZE];

if (!lpd.buf)

lpd.buf = zerobuffer;

if (lpd.buf_size > 10 && ff_id3v2_match(lpd.buf, ID3v2_DEFAULT_MAGIC)) {

int id3len = ff_id3v2_tag_len(lpd.buf);

if (lpd.buf_size > id3len + 16) {

lpd.buf += id3len;

lpd.buf_size -= id3len;

} else if (id3len >= PROBE_BUF_MAX) {

nodat = 2;

} else

nodat = 1;

}

fmt = NULL;

while ((fmt1 = av_iformat_next(fmt1))) {

if (!is_opened == !(fmt1->flags & AVFMT_NOFILE) && strcmp(fmt1->name, "image2"))

continue;

score = 0;

if (fmt1->read_probe) {

score = fmt1->read_probe(&lpd);

if (score)

av_log(NULL, AV_LOG_TRACE, "Probing %s score:%d size:%d\n", fmt1->name, score, lpd.buf_size);

if (fmt1->extensions && av_match_ext(lpd.filename, fmt1->extensions)) {

if (nodat == 0) score = FFMAX(score, 1);

else if (nodat == 1) score = FFMAX(score, AVPROBE_SCORE_EXTENSION / 2 - 1);

else score = FFMAX(score, AVPROBE_SCORE_EXTENSION);

}

} else if (fmt1->extensions) {

if (av_match_ext(lpd.filename, fmt1->extensions))

score = AVPROBE_SCORE_EXTENSION;

}

if (av_match_name(lpd.mime_type, fmt1->mime_type))

score = FFMAX(score, AVPROBE_SCORE_MIME);

if (score > score_max) {

score_max = score;

fmt = fmt1;

} else if (score == score_max)

fmt = NULL;

}

if (nodat == 1)

score_max = FFMIN(AVPROBE_SCORE_EXTENSION / 2 - 1, score_max);

*score_ret = score_max;

av_log(NULL, AV_LOG_TRACE, "format----- %s score:%d size:%d\n", fmt ? fmt->name : "(none)", score_max, lpd.buf_size);

return fmt;

}
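The selection rule in av_probe_input_format3 (highest score wins, a tie invalidates the result, an extension match alone counts for less than a content match) can be modeled with two toy formats. Everything below is hypothetical: the structures, the format table and especially score_mov_probe, which only checks for "ftyp" at offset 4 and is far cruder than the real mov_probe. The two score constants are assumed to match AVPROBE_SCORE_EXTENSION and AVPROBE_SCORE_MAX. ID3 handling and MIME types are left out.

```c
#include <stddef.h>
#include <string.h>

#define SCORE_EXTENSION 50    /* assumed value of AVPROBE_SCORE_EXTENSION */
#define SCORE_MAX       100   /* assumed value of AVPROBE_SCORE_MAX */

typedef struct ScoreProbeData {
    const char          *filename;
    const unsigned char *buf;
    size_t               buf_size;
} ScoreProbeData;

typedef struct ScoreFormat {
    const char *name;
    const char *extension;                        /* one extension, no dot */
    int (*read_probe)(const ScoreProbeData *pd);  /* may be NULL */
} ScoreFormat;

/* Crude content check for this sketch: "ftyp" at byte offset 4. */
static int score_mov_probe(const ScoreProbeData *pd)
{
    if (pd->buf_size >= 8 && memcmp(pd->buf + 4, "ftyp", 4) == 0)
        return SCORE_MAX;
    return 0;
}

static const ScoreFormat score_formats[] = {
    { "mov", "mp4", score_mov_probe },
    { "txt", "txt", NULL },            /* no read_probe: extension only */
};

static int score_match_ext(const char *filename, const char *ext)
{
    const char *dot = filename ? strrchr(filename, '.') : NULL;
    return dot && strcmp(dot + 1, ext) == 0;
}

/* Returns the best format, or NULL if nothing matched or the best score is tied. */
static const ScoreFormat *score_probe(const ScoreProbeData *pd, int *score_ret)
{
    const ScoreFormat *best = NULL;
    int score_max = 0;

    for (size_t i = 0; i < sizeof(score_formats) / sizeof(score_formats[0]); i++) {
        const ScoreFormat *f = &score_formats[i];
        int score = 0;

        if (f->read_probe) {
            score = f->read_probe(pd);
            /* With a probe function, the extension only lifts the score to 1. */
            if (score_match_ext(pd->filename, f->extension) && score < 1)
                score = 1;
        } else if (score_match_ext(pd->filename, f->extension)) {
            score = SCORE_EXTENSION;
        }

        if (score > score_max) {
            score_max = score;
            best = f;
        } else if (score == score_max) {
            best = NULL;               /* ambiguous: two formats tie */
        }
    }
    *score_ret = score_max;
    return best;
}
```

In this model a file named hello.mp4 whose data does not look like MP4 still selects "mov", but only with a score of 1. In the real code av_probe_input_format2 would reject such a low score while larger probe sizes are still available, which is what drives the read-more-and-retry loop in av_probe_input_buffer2.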

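av_probe_input_format3 walks the global list of AVInputFormat entries and scores each one: through its read_probe callback, which inspects the buffered data, and through a match on the file name extension and the MIME type. The format with the highest score wins. For an input such as xxx.mp4, the match is ff_mov_demuxer. This AVInputFormat instance is defined as follows:

The demuxer for the mp4 file format: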

AVInputFormat ff_mov_demuxer = {

.name = "mov,mp4,m4a,3gp,3g2,mj2",

.long_name = NULL_IF_CONFIG_SMALL("QuickTime / MOV"),

.priv_class = &mov_class,

.priv_data_size = sizeof(MOVContext),

.extensions = "mov,mp4,m4a,3gp,3g2,mj2",

.read_probe = mov_probe,

.read_header = mov_read_header,

.read_packet = mov_read_packet,

.read_close = mov_read_close,

.read_seek = mov_read_seek,

.flags = AVFMT_NO_BYTE_SEEK,

};

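Once the matching AVInputFormat has been found, the iformat member of our AVFormatContext is populated. At this point we know the file format, and it is time for the third thing.

Let's recap briefly. After the second thing is done, and after avformat_open_input has allocated priv_data, the following members of our AVFormatContext are initialized: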

const AVClass *av_class;

struct AVInputFormat *iformat;

void *priv_data;

AVIOContext *pb;

The third thing

The third thing is to call the read_header method of the AVInputFormat to parse the file. The AVInputFormat we found is ff_mov_demuxer, whose .read_header is set to mov_read_header. This function is defined in libavformat/mov.c:

static int mov_read_header(AVFormatContext *s)

{

MOVContext *mov = s->priv_data;

AVIOContext *pb = s->pb;

int j, err;

MOVAtom atom = { AV_RL32("root") }; // AV_RL32 reads the 4 bytes of the string as a little-endian 32-bit integer

int i;

if (mov->decryption_key_len != 0 && mov->decryption_key_len != AES_CTR_KEY_SIZE) {

av_log(s, AV_LOG_ERROR, "Invalid decryption key len %d expected %d\n",

mov->decryption_key_len, AES_CTR_KEY_SIZE);

return AVERROR(EINVAL);

}

mov->fc = s;

mov->trak_index = -1;

/* .mov and .mp4 aren't streamable anyway (only progressive download if moov is before mdat) */

if (pb->seekable)

atom.size = avio_size(pb);

else

atom.size = INT64_MAX;

/* check MOV header */

do {

if (mov->moov_retry)

avio_seek(pb, 0, SEEK_SET);

if ((err = mov_read_default(mov, pb, atom)) < 0) {

av_log(s, AV_LOG_ERROR, "error reading header\n");

mov_read_close(s);

return err;

}

} while (pb->seekable && !mov->found_moov && !mov->moov_retry++);

if (!mov->found_moov) {

av_log(s, AV_LOG_ERROR, "moov atom not found\n");

mov_read_close(s);

return AVERROR_INVALIDDATA;

}

av_log(mov->fc, AV_LOG_TRACE, "on_parse_exit_offset=%"PRId64"\n", avio_tell(pb));

if (pb->seekable) {

if (mov->nb_chapter_tracks > 0 && !mov->ignore_chapters)

mov_read_chapters(s);

for (i = 0; i < s->nb_streams; i++)

if (s->streams[i]->codecpar->codec_tag == AV_RL32("tmcd")) {

mov_read_timecode_track(s, s->streams[i]);

} else if (s->streams[i]->codecpar->codec_tag == AV_RL32("rtmd")) {

mov_read_rtmd_track(s, s->streams[i]);

}

}

/* copy timecode metadata from tmcd tracks to the related video streams */

for (i = 0; i < s->nb_streams; i++) {

AVStream *st = s->streams[i];

MOVStreamContext *sc = st->priv_data;

if (sc->timecode_track > 0) {

AVDictionaryEntry *tcr;

int tmcd_st_id = -1;

for (j = 0; j < s->nb_streams; j++)

if (s->streams[j]->id == sc->timecode_track)

tmcd_st_id = j;

if (tmcd_st_id < 0 || tmcd_st_id == i)

continue;

tcr = av_dict_get(s->streams[tmcd_st_id]->metadata, "timecode", NULL, 0);

if (tcr)

av_dict_set(&st->metadata, "timecode", tcr->value, 0);

}

}

export_orphan_timecode(s);

for (i = 0; i < s->nb_streams; i++) {

AVStream *st = s->streams[i];

MOVStreamContext *sc = st->priv_data;

fix_timescale(mov, sc);

if(st->codecpar->codec_type == AVMEDIA_TYPE_AUDIO && st->codecpar->codec_id == AV_CODEC_ID_AAC) {

st->skip_samples = sc->start_pad;

}

if (st->codecpar->codec_type == AVMEDIA_TYPE_VIDEO && sc->nb_frames_for_fps > 0 && sc->duration_for_fps > 0)

av_reduce(&st->avg_frame_rate.num, &st->avg_frame_rate.den,

sc->time_scale*(int64_t)sc->nb_frames_for_fps, sc->duration_for_fps, INT_MAX);

if (st->codecpar->codec_type == AVMEDIA_TYPE_SUBTITLE) {

if (st->codecpar->width <= 0 || st->codecpar->height <= 0) {

st->codecpar->width = sc->width;

st->codecpar->height = sc->height;

}

if (st->codecpar->codec_id == AV_CODEC_ID_DVD_SUBTITLE) {

if ((err = mov_rewrite_dvd_sub_extradata(st)) < 0)

return err;

}

}

if (mov->handbrake_version &&

mov->handbrake_version <= 1000000*0 + 1000*10 + 2 && // 0.10.2

st->codecpar->codec_id == AV_CODEC_ID_MP3

) {

av_log(s, AV_LOG_VERBOSE, "Forcing full parsing for mp3 stream\n");

st->need_parsing = AVSTREAM_PARSE_FULL;

}

}

if (mov->trex_data) {

for (i = 0; i < s->nb_streams; i++) {

AVStream *st = s->streams[i];

MOVStreamContext *sc = st->priv_data;

if (st->duration > 0)

st->codecpar->bit_rate = sc->data_size * 8 * sc->time_scale / st->duration;

}

}

if (mov->use_mfra_for > 0) {

for (i = 0; i < s->nb_streams; i++) {

AVStream *st = s->streams[i];

MOVStreamContext *sc = st->priv_data;

if (sc->duration_for_fps > 0) {

st->codecpar->bit_rate = sc->data_size * 8 * sc->time_scale /

sc->duration_for_fps;

}

}

}

for (i = 0; i < mov->bitrates_count && i < s->nb_streams; i++) {

if (mov->bitrates[i]) {

s->streams[i]->codecpar->bit_rate = mov->bitrates[i];

}

}

ff_rfps_calculate(s);

for (i = 0; i < s->nb_streams; i++) {

AVStream *st = s->streams[i];

MOVStreamContext *sc = st->priv_data;

switch (st->codecpar->codec_type) {

case AVMEDIA_TYPE_AUDIO:

err = ff_replaygain_export(st, s->metadata);

if (err < 0) {

mov_read_close(s);

return err;

}

break;

case AVMEDIA_TYPE_VIDEO:

if (sc->display_matrix) {

AVPacketSideData *sd, *tmp;

tmp = av_realloc_array(st->side_data,

st->nb_side_data + 1, sizeof(*tmp));

if (!tmp)

return AVERROR(ENOMEM);

st->side_data = tmp;

st->nb_side_data++;

sd = &st->side_data[st->nb_side_data - 1];

sd->type = AV_PKT_DATA_DISPLAYMATRIX;

sd->size = sizeof(int32_t) * 9;

sd->data = (uint8_t*)sc->display_matrix;

sc->display_matrix = NULL;

}

break;

}

}

ff_configure_buffers_for_index(s, AV_TIME_BASE);

return 0;

}

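To understand this function, you first need some familiarity with the MP4 file format (I use an MP4 file as the example here). I won't go through the MP4 format in detail; please read up on it yourself.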

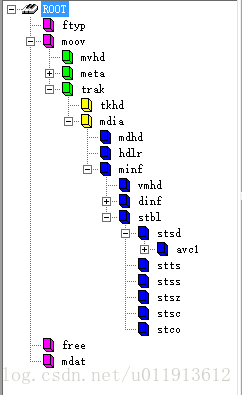

mp4文件由一系列的box组成,如下图:

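The figure shows that the boxes are hierarchical. Every box starts with a fixed 8-byte header: the first four bytes are the size of the box (stored big-endian, which is why the code further down reads it with avio_rb32) and the next four bytes are the type of the box, i.e. the ftyp, moov and so on in the figure. FFmpeg represents this information with MOVAtom: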

typedef struct MOVAtom {

uint32_t type;

int64_t size; /* total size (excluding the size and type fields) */

} MOVAtom;
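To make the 8-byte header layout concrete, here is a minimal sketch that parses a box header from an in-memory buffer. It does not use FFmpeg at all: `BoxHeader`, `read_be` and `parse_box_header` are hypothetical names made up for this illustration. Unlike `MOVAtom.size`, the `size` field here is the total size as stored in the file, header included. The sketch also handles the case where the 32-bit size is 1 and a 64-bit size follows, which the mov_read_default listing further down handles as well.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper type for this sketch (not an FFmpeg struct). */
typedef struct BoxHeader {
    char     type[5];     /* four-character type plus '\0' */
    uint64_t size;        /* total box size in bytes, header included */
    size_t   header_len;  /* 8, or 16 when the 64-bit size field is used */
} BoxHeader;

/* Read a big-endian unsigned integer of n bytes (n <= 8). */
static uint64_t read_be(const uint8_t *p, size_t n)
{
    uint64_t v = 0;
    for (size_t i = 0; i < n; i++)
        v = (v << 8) | p[i];
    return v;
}

/* Parse one box header from buf; returns 0 on success, -1 if truncated. */
static int parse_box_header(const uint8_t *buf, size_t len, BoxHeader *h)
{
    if (len < 8)
        return -1;
    h->size = read_be(buf, 4);            /* bytes 0..3: size */
    for (int i = 0; i < 4; i++)           /* bytes 4..7: type */
        h->type[i] = (char)buf[4 + i];
    h->type[4] = '\0';
    h->header_len = 8;
    if (h->size == 1) {                   /* 64-bit extended size follows */
        if (len < 16)
            return -1;
        h->size = read_be(buf + 8, 8);
        h->header_len = 16;
    }
    return 0;
}
```

For the eight bytes `00 00 00 18 66 74 79 70`, this yields a size of 24 and the type "ftyp".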

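At its very start, this function constructs a MOVAtom named root and walks the boxes starting from it. How does it walk them? It calls mov_read_default, which in turn calls mov_read_default recursively to parse the child boxes, and so on, until every box has been parsed.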
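The recursive traversal can be sketched without FFmpeg as follows. This is only an illustration under several assumptions: it walks a buffer in memory instead of an AVIOContext, `walk_boxes` and `is_container` are hypothetical names, the list of container types is a small hand-picked subset, and boxes with a size field of 0 or 1 are simply rejected. The depth limit mirrors the `atom_depth > 10` check in mov_read_default.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

#define MAX_DEPTH 10  /* mirrors the nesting limit of 10 in mov_read_default */

/* Types whose payload is itself a sequence of boxes
 * (a small illustrative subset). */
static int is_container(const uint8_t *type)
{
    static const char *const names[] = { "moov", "trak", "mdia", "minf", "stbl" };
    for (size_t i = 0; i < sizeof(names) / sizeof(names[0]); i++)
        if (memcmp(type, names[i], 4) == 0)
            return 1;
    return 0;
}

/* Walk the boxes in buf[0..len), recursing into containers.
 * Returns the number of boxes visited, or -1 on malformed input. */
static int walk_boxes(const uint8_t *buf, size_t len, int depth)
{
    int count = 0;
    size_t pos = 0;

    if (depth > MAX_DEPTH)
        return -1;
    while (len - pos >= 8) {
        const uint8_t *p = buf + pos;
        uint32_t size = ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
                        ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
        if (size < 8 || size > len - pos)
            return -1;              /* this sketch rejects size 0 and 1 */
        printf("%*s%.4s (%u bytes)\n", depth * 2, "",
               (const char *)(p + 4), (unsigned)size);
        count++;
        if (is_container(p + 4)) {
            /* The payload is a list of child boxes: recurse into it. */
            int n = walk_boxes(p + 8, size - 8, depth + 1);
            if (n < 0)
                return -1;
            count += n;
        }
        pos += size;                /* jump to the next sibling box */
    }
    return count;
}
```

Calling `walk_boxes(data, data_len, 0)` on the bytes of a file prints one indented line per box, with children indented below their parent.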

Next, let's look at mov_read_default:

static int mov_read_default(MOVContext *c, AVIOContext *pb, MOVAtom atom)

{

int64_t total_size = 0;

MOVAtom a;

int i;

char mmmss[5];

if (c->atom_depth > 10) {

av_log(c->fc, AV_LOG_ERROR, "Atoms too deeply nested\n");

return AVERROR_INVALIDDATA;

}

c->atom_depth ++;

printf("mov_read_default\n");

printf("c->atom_depth=%d\n",c->atom_depth);

if (atom.size < 0)

atom.size = INT64_MAX;

printf("total_size=%"PRId64"\n", total_size); /* int64_t needs PRId64, not %ld */

printf("atom.size=%"PRId64"\n", atom.size);

printf("avio_feof(pb)=%d\n",avio_feof(pb));

while (total_size + 8 <= atom.size && !avio_feof(pb)) {

int (*parse)(MOVContext*, AVIOContext*, MOVAtom) = NULL;

a.size = atom.size;

a.type=0;

if (atom.size >= 8) {

a.size = avio_rb32(pb);

a.type = avio_rl32(pb);

if (a.type == MKTAG('f','r','e','e') &&

a.size >= 8 &&

c->moov_retry) {

uint8_t buf[8];

uint32_t *type = (uint32_t *)buf + 1;

if (avio_read(pb, buf, 8) != 8)

return AVERROR_INVALIDDATA;

avio_seek(pb, -8, SEEK_CUR);

if (*type == MKTAG('m','v','h','d') ||

*type == MKTAG('c','m','o','v')) {

av_log(c->fc, AV_LOG_ERROR, "Detected moov in a free atom.\n");

a.type = MKTAG('m','o','o','v');

}

}

if (atom.type != MKTAG('r','o','o','t') &&

atom.type != MKTAG('m','o','o','v'))

{

if (a.type == MKTAG('t','r','a','k') || a.type == MKTAG('m','d','a','t'))

{

av_log(c->fc, AV_LOG_ERROR, "Broken file, trak/mdat not at top-level\n");

avio_skip(pb, -8);

c->atom_depth --;

return 0;

}

}

total_size += 8;

if (a.size == 1 && total_size + 8 <= atom.size) { /* 64 bit extended size */

a.size = avio_rb64(pb) - 8;

total_size += 8;

}

}

av_log(c->fc, AV_LOG_TRACE, "type: %08x '%.4s' parent:'%.4s' sz: %"PRId64" %"PRId64" %"PRId64"\n",

a.type, (char*)&a.type, (char*)&atom.type, a.size, total_size, atom.size);

if (a.size == 0) {

a.size = atom.size - total_size + 8;

}

a.size -= 8;

if (a.size < 0)

break;

a.size = FFMIN(a.size, atom.size - total_size);

for (i = 0; mov_default_parse_table[i].type; i++)

if (mov_default_parse_table[i].type == a.type) {

parse = mov_default_parse_table[i].parse;

break;

}

// container is user data

if (!parse && (atom.type == MKTAG('u','d','t','a') ||

atom.type == MKTAG('i','l','s','t')))

parse = mov_read_udta_string;

if (!parse) { /* skip leaf atoms data */

avio_skip(pb, a.size);

} else {

int64_t start_pos = avio_tell(pb);

int64_t left;

int err = parse(c, pb, a);

if (err < 0) {

c->atom_depth --;

return err;

}

if (c->found_moov && c->found_mdat &&

((!pb->seekable || c->fc->flags & AVFMT_FLAG_IGNIDX) ||

start_pos + a.size == avio_size(pb))) {

if (!pb->seekable || c->fc->flags & AVFMT_FLAG_IGNIDX)

c->next_root_atom = start_pos + a.size;

c->atom_depth --;

return 0;

}

left = a.size - avio_tell(pb) + start_pos;

if (left > 0) /* skip garbage at atom end */

avio_skip(pb, left);

else if (left < 0) {

av_log(c->fc, AV_LOG_WARNING,

"overread end of atom '%.4s' by %"PRId64" bytes\n",

(char*)&a.type, -left);

avio_seek(pb, left, SEEK_CUR);

}

}

total_size += a.size;

mmmss[4]='\0';

printf("-------------while----------\n");

printf("total_size=%"PRId64"\n", total_size);

printf("a.size=%"PRId64"\n", a.size);

AV_WL32(mmmss,a.type);

printf("a.type=%s\n",mmmss);

}
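The lookup loop in the listing above searches mov_default_parse_table for an entry whose type equals a.type. The sketch below shows the same pattern in isolation: a sentinel-terminated table of (type, function pointer) pairs and a linear search. It also shows why the listing reads the size with avio_rb32 but the type with avio_rl32: MKTAG puts the first character in the lowest byte, so reading the four type bytes as a little-endian integer gives a value that compares directly against a MKTAG constant. `TAG`, `ParseFn`, `ParseEntry`, `find_parser` and the two dummy parsers are hypothetical names for this illustration, and the parser signature is deliberately simplified.

```c
#include <stddef.h>
#include <stdint.h>

/* Same packing as FFmpeg's MKTAG: first character in the lowest byte. */
#define TAG(a, b, c, d) \
    ((uint32_t)(a) | ((uint32_t)(b) << 8) | \
     ((uint32_t)(c) << 16) | ((uint32_t)(d) << 24))

typedef int (*ParseFn)(int64_t size);   /* hypothetical, simplified signature */

typedef struct ParseEntry {
    uint32_t type;
    ParseFn  parse;
} ParseEntry;

/* Dummy parsers that only return a distinguishable value. */
static int parse_ftyp(int64_t size) { (void)size; return 1; }
static int parse_moov(int64_t size) { (void)size; return 2; }

/* The table ends with a { 0, NULL } sentinel, like mov_default_parse_table. */
static const ParseEntry parse_table[] = {
    { TAG('f','t','y','p'), parse_ftyp },
    { TAG('m','o','o','v'), parse_moov },
    { 0, NULL }
};

/* Linear search; NULL means "unknown type, skip the payload". */
static ParseFn find_parser(uint32_t type)
{
    for (size_t i = 0; parse_table[i].type; i++)
        if (parse_table[i].type == type)
            return parse_table[i].parse;
    return NULL;
}

/* Read four bytes as a little-endian integer, as avio_rl32 does. */
static uint32_t read_le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}
```

A linear search is cheap here because the table is small and fixed, and a NULL result maps naturally onto the "skip leaf atoms data" branch of the listing.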

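To make it easier to follow, I added some printf calls. (The listing stops at the end of the while loop; the rest of the function is omitted.) In this function, each time a box is found and its type has been read, the corresponding parse function is called. Types are mapped to parse functions by the following array: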

static const MOVParseTableEntry mov_default_parse_table[] = {

{ MKTAG('A','C','L','R'), mov_read_aclr },

{ MKTAG('A','P','R','G'), mov_read_avid },

{ MKTAG('A','A','L','P'), mov_read_avid },

{ MKTAG('A','R','E','S'), mov_read_ares },

{ MKTAG('a','v','s','s'), mov_read_avss },

{ MKTAG('c','h','p','l'), mov_read_chpl },

{ MKTAG('c','o','6','4'), mov_read_stco },

{ MKTAG('c','o','l','r'), mov_read_colr },

{ MKTAG('c','t','t','s'), mov_read_ctts }, /* composition time to sample */

{ MKTAG('d','i','n','f'), mov_read_default },

{ MKTAG('D','p','x','E'), mov_read_dpxe },

{ MKTAG('d','r','e','f'), mov_read_dref },

{ MKTAG('e','d','t','s'), mov_read_default },

{ MKTAG('e','l','s','t'), mov_read_elst },

{ MKTAG('e','n','d','a'), mov_read_enda },

{ MKTAG('f','i','e','l'), mov_read_fiel },

{ MKTAG('f','t','y','p'), mov_read_ftyp },

{ MKTAG('g','l','b','l'), mov_read_glbl },

{ MKTAG('h','d','l','r'), mov_read_hdlr },

{ MKTAG('i','l','s','t'), mov_read_ilst },

{ MKTAG('j','p','2','h'), mov_read_jp2h },

{ MKTAG('m','d','a','t'), mov_read_mdat },

{ MKTAG('m','d','h','d'), mov_read_mdhd },

{ MKTAG('m','d','i','a'), mov_read_default },

{ MKTAG('m','e','t','a'), mov_read_meta },

{ MKTAG('m','i','n','f'), mov_read_default },

{ MKTAG('m','o','o','f'), mov_read_moof },

{ MKTAG('m','o','o','v'), mov_read_moov },

{ MKTAG('m','v','e','x'), mov_read_default },

{ MKTAG('m','v','h','d'), mov_read_mvhd },

{ MKTAG('S','M','I',' '), mov_read_svq3 },

{ MKTAG('a','l','a','c'), mov_read_alac }, /* alac specific atom */

{ MKTAG('a','v','c','C'), mov_read_glbl },

{ MKTAG('p','a','s','p'), mov_read_pasp },

{ MKTAG('s','t','b','l'), mov_read_default },

{ MKTAG('s','t','c','o'), mov_read_stco },

{ MKTAG('s','t','p','s'), mov_read_stps },

{ MKTAG('s','t','r','f'), mov_read_strf },

{ MKTAG('s','t','s','c'), mov_read_stsc },

{ MKTAG('s','t','s','d'), mov_read_stsd }, /* sample description */

{ MKTAG('s','t','s','s'), mov_read_stss }, /* sync sample */

{ MKTAG('s','t','s','z'), mov_read_stsz }, /* sample size */

{ MKTAG('s','t','t','s'), mov_read_stts },

{ MKTAG('s','t','z','2'), mov_read_stsz }, /* compact sample size */

{ MKTAG('t','k','h','d'), mov_read_tkhd }, /* track header */

{ MKTAG('t','f','d','t'), mov_read_tfdt },

{ MKTAG('t','f','h','d'), mov_read_tfhd }, /* track fragment header */

{ MKTAG('t','r','a','k'), mov_read_trak },

{ MKTAG('t','r','a','f'), mov_read_default },

{ MKTAG('t','r','e','f'), mov_read_default },

{ MKTAG('t','m','c','d'), mov_read_tmcd },

{ MKTAG('c','h','a','p'), mov_read_chap },

{ MKTAG('t','r','e','x'), mov_read_trex },

{ MKTAG('t','r','u','n'), mov_read_trun },

{ MKTAG('u','d','t','a'), mov_read_default },

{ MKTAG('w','a','v','e'), mov_read_wave },

{ MKTAG('e','s','d','s'), mov_read_esds },

{ MKTAG('d','a','c','3'), mov_read_dac3 }, /* AC-3 info */

{ MKTAG('d','e','c','3'), mov_read_dec3 }, /* EAC-3 info */

{ MKTAG('w','i','d','e'), mov_read_wide }, /* place holder */

{ MKTAG('w','f','e','x'), mov_read_wfex },

{ MKTAG('c','m','o','v'), mov_read_cmov },

{ MKTAG('c','h','a','n'), mov_read_chan }, /* channel layout */

{ MKTAG('d','v','c','1'), mov_read_dvc1 },

{ MKTAG('s','b','g','p'), mov_read_sbgp },

{ MKTAG('h','v','c','C'), mov_read_glbl },

{ MKTAG('u','u','i','d'), mov_read_uuid },

{ MKTAG('C','i','n', 0x8e), mov_read_targa_y216 },

{ MKTAG('f','r','e','e'), mov_read_free },

{ MKTAG('-','-','-','-'), mov_read_custom },

{ 0, NULL }

};

Taking trak as an example, its parse function is as follows:

static int mov_read_trak(MOVContext *c, AVIOContext *pb, MOVAtom atom)

{

AVStream *st;

MOVStreamContext *sc;

int ret;

st = avformat_new_stream(c->fc, NULL);

if (!st) return AVERROR(ENOMEM);

st->id = c->fc->nb_streams;

sc = av_mallocz(sizeof(MOVStreamContext));

if (!sc) return AVERROR(ENOMEM);

st->priv_data = sc;

st->codec->codec_type = AVMEDIA_TYPE_DATA;

sc->ffindex = st->index;

if ((ret = mov_read_default(c, pb, atom)) < 0)

return ret;

/* sanity checks */

if (sc->chunk_count && (!sc->stts_count || !sc->stsc_count ||

(!sc->sample_size && !sc->sample_count))) {

av_log(c->fc, AV_LOG_ERROR, "stream %d, missing mandatory atoms, broken header\n",

st->index);

return 0;

}

fix_timescale(c, sc);

avpriv_set_pts_info(st, 64, 1, sc->time_scale);

mov_build_index(c, st);

if (sc->dref_id-1 < sc->drefs_count && sc->drefs[sc->dref_id-1].path) {

MOVDref *dref = &sc->drefs[sc->dref_id - 1];

if (c->enable_drefs) {

if (mov_open_dref(c, &sc->pb, c->fc->filename, dref,

&c->fc->interrupt_callback) < 0)

av_log(c->fc, AV_LOG_ERROR,

"stream %d, error opening alias: path='%s', dir='%s', "

"filename='%s', volume='%s', nlvl_from=%d, nlvl_to=%d\n",

st->index, dref->path, dref->dir, dref->filename,

dref->volume, dref->nlvl_from, dref->nlvl_to);

} else {

av_log(c->fc, AV_LOG_WARNING,

"Skipped opening external track: "

"stream %d, alias: path='%s', dir='%s', "

"filename='%s', volume='%s', nlvl_from=%d, nlvl_to=%d."

"Set enable_drefs to allow this.\n",

st->index, dref->path, dref->dir, dref->filename,

dref->volume, dref->nlvl_from, dref->nlvl_to);

}

} else {

sc->pb = c->fc->pb;

sc->pb_is_copied = 1;

}

if (st->codec->codec_type == AVMEDIA_TYPE_VIDEO) {

if (!st->sample_aspect_ratio.num && st->codec->width && st->codec->height &&

sc->height && sc->width &&

(st->codec->width != sc->width || st->codec->height != sc->height)) {

st->sample_aspect_ratio = av_d2q(((double)st->codec->height * sc->width) /

((double)st->codec->width * sc->height), INT_MAX);

}

#if FF_API_R_FRAME_RATE

if (sc->stts_count == 1 || (sc->stts_count == 2 && sc->stts_data[1].count == 1))

av_reduce(&st->r_frame_rate.num, &st->r_frame_rate.den,

sc->time_scale, sc->stts_data[0].duration, INT_MAX);

#endif

}

// done for ai5q, ai52, ai55, ai1q, ai12 and ai15.

if (!st->codec->extradata_size && st->codec->codec_id == AV_CODEC_ID_H264 &&

TAG_IS_AVCI(st->codec->codec_tag)) {

ret = ff_generate_avci_extradata(st);

if (ret < 0)

return ret;

}

switch (st->codec->codec_id) {

#if CONFIG_H261_DECODER

case AV_CODEC_ID_H261:

#endif

#if CONFIG_H263_DECODER

case AV_CODEC_ID_H263:

#endif

#if CONFIG_MPEG4_DECODER

case AV_CODEC_ID_MPEG4:

#endif

st->codec->width = 0; /* let decoder init width/height */

st->codec->height= 0;

break;

}

/* Do not need those anymore. */

av_freep(&sc->chunk_offsets);

av_freep(&sc->stsc_data);

av_freep(&sc->sample_sizes);

av_freep(&sc->keyframes);

av_freep(&sc->stts_data);

av_freep(&sc->stps_data);

av_freep(&sc->elst_data);

av_freep(&sc->rap_group);

return 0;

}

At the start, it creates a new stream:

AVStream *avformat_new_stream(AVFormatContext *s, const AVCodec *c)

{

AVStream *st;

int i;

AVStream **streams;

if (s->nb_streams >= INT_MAX/sizeof(*streams))

return NULL;

streams = av_realloc_array(s->streams, s->nb_streams + 1, sizeof(*streams));

if (!streams)

return NULL;

s->streams = streams;

st = av_mallocz(sizeof(AVStream));

if (!st)

return NULL;

if (!(st->info = av_mallocz(sizeof(*st->info)))) {

av_free(st);

return NULL;

}

st->info->last_dts = AV_NOPTS_VALUE;

#if FF_API_LAVF_AVCTX

FF_DISABLE_DEPRECATION_WARNINGS

st->codec = avcodec_alloc_context3(c);

if (!st->codec) {

av_free(st->info);

av_free(st);

return NULL;

}

FF_ENABLE_DEPRECATION_WARNINGS

#endif

st->internal = av_mallocz(sizeof(*st->internal));

if (!st->internal)

goto fail;

st->codecpar = avcodec_parameters_alloc();

if (!st->codecpar)

goto fail;

st->internal->avctx = avcodec_alloc_context3(NULL);

if (!st->internal->avctx)

goto fail;

if (s->iformat) {

#if FF_API_LAVF_AVCTX

FF_DISABLE_DEPRECATION_WARNINGS

/* no default bitrate if decoding */

st->codec->bit_rate = 0;

FF_ENABLE_DEPRECATION_WARNINGS

#endif

/* default pts setting is MPEG-like */

avpriv_set_pts_info(st, 33, 1, 90000);

/* we set the current DTS to 0 so that formats without any timestamps

* but durations get some timestamps, formats with some unknown

* timestamps have their first few packets buffered and the

* timestamps corrected before they are returned to the user */

st->cur_dts = RELATIVE_TS_BASE;

} else {

st->cur_dts = AV_NOPTS_VALUE;

}

st->index = s->nb_streams;

st->start_time = AV_NOPTS_VALUE;

st->duration = AV_NOPTS_VALUE;

st->first_dts = AV_NOPTS_VALUE;

st->probe_packets = MAX_PROBE_PACKETS;

st->pts_wrap_reference = AV_NOPTS_VALUE;

st->pts_wrap_behavior = AV_PTS_WRAP_IGNORE;

st->last_IP_pts = AV_NOPTS_VALUE;

st->last_dts_for_order_check = AV_NOPTS_VALUE;

for (i = 0; i < MAX_REORDER_DELAY + 1; i++)

st->pts_buffer[i] = AV_NOPTS_VALUE;

st->sample_aspect_ratio = (AVRational) { 0, 1 };

#if FF_API_R_FRAME_RATE

st->info->last_dts = AV_NOPTS_VALUE;

#endif

st->info->fps_first_dts = AV_NOPTS_VALUE;

st->info->fps_last_dts = AV_NOPTS_VALUE;

st->inject_global_side_data = s->internal->inject_global_side_data;

st->internal->need_context_update = 1;

s->streams[s->nb_streams++] = st;

return st;

fail:

free_stream(&st);

return NULL;

}
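Stripped of all the field initialization, the listing above follows a common "grow a pointer array by one and append" pattern: reallocate the array with one extra slot, allocate the new element, store it in the last slot, and increment the count. A minimal sketch of that pattern, using plain `realloc`/`calloc` instead of FFmpeg's allocators, is shown below. `Stream`, `Context`, `new_stream` and `free_streams` are hypothetical stand-ins, not FFmpeg types or functions.

```c
#include <limits.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct Stream { int index; } Stream;   /* stand-in for AVStream */

typedef struct Context {                        /* stand-in for AVFormatContext */
    Stream  **streams;
    unsigned  nb_streams;
} Context;

/* Grow the pointer array by one slot, allocate a zeroed stream, store it
 * in the last slot and bump the count. Returns NULL on failure; the count
 * is only incremented once everything has succeeded. */
static Stream *new_stream(Context *ctx)
{
    Stream **streams;
    Stream *st;

    if (ctx->nb_streams >= INT_MAX / sizeof(*streams))
        return NULL;                            /* same guard as the listing */
    streams = realloc(ctx->streams, (ctx->nb_streams + 1) * sizeof(*streams));
    if (!streams)
        return NULL;
    ctx->streams = streams;

    st = calloc(1, sizeof(*st));
    if (!st)
        return NULL;
    st->index = (int)ctx->nb_streams;           /* index = position in array */
    ctx->streams[ctx->nb_streams++] = st;
    return st;
}

/* Release every stream and the array itself. */
static void free_streams(Context *ctx)
{
    for (unsigned i = 0; i < ctx->nb_streams; i++)
        free(ctx->streams[i]);
    free(ctx->streams);
    ctx->streams = NULL;
    ctx->nb_streams = 0;
}
```

Because the count is bumped last, a failed allocation leaves the existing streams and count intact.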

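- Finally, the newly created stream is appended as the last entry of the streams member of AVFormatContext, and the nb_streams member is incremented.

2. Recursively parse the next level of boxes

This parsing is how the stream information is obtained.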

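trak contains many child boxes, and these boxes describe what the track does. For example, to find out whether a stream is a video stream, an audio stream or a subtitle stream, we have to parse the hdlr child box: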

if ((ret = mov_read_default(c, pb, atom)) < 0)

return ret;

Distinguishing audio streams from video streams:

static int mov_read_hdlr(MOVContext *c, AVIOContext *pb, MOVAtom atom)

{

AVStream *st;

uint32_t type;

uint32_t av_unused ctype;

int64_t title_size;

char *title_str;

int ret;

if (c->fc->nb_streams < 1) // meta before first trak

return 0;

st = c->fc->streams[c->fc->nb_streams-1];

avio_r8(pb); /* version */

avio_rb24(pb); /* flags */

/* component type */

ctype = avio_rl32(pb);

type = avio_rl32(pb); /* component subtype */

av_log(c->fc, AV_LOG_TRACE, "ctype= %.4s (0x%08x)\n", (char*)&ctype, ctype);

av_log(c->fc, AV_LOG_TRACE, "stype= %.4s\n", (char*)&type);

if (type == MKTAG('v','i','d','e'))

st->codec->codec_type = AVMEDIA_TYPE_VIDEO;

else if (type == MKTAG('s','o','u','n'))

st->codec->codec_type = AVMEDIA_TYPE_AUDIO;

else if (type == MKTAG('m','1','a',' '))

st->codec->codec_id = AV_CODEC_ID_MP2;

else if ((type == MKTAG('s','u','b','p')) || (type == MKTAG('c','l','c','p')))

st->codec->codec_type = AVMEDIA_TYPE_SUBTITLE;

avio_rb32(pb); /* component manufacture */

avio_rb32(pb); /* component flags */

avio_rb32(pb); /* component flags mask */

title_size = atom.size - 24;

if (title_size > 0) {

title_str = av_malloc(title_size + 1); /* Add null terminator */

if (!title_str)

return AVERROR(ENOMEM);

ret = ffio_read_size(pb, title_str, title_size);

if (ret < 0) {

av_freep(&title_str);

return ret;

}

title_str[title_size] = 0;

if (title_str[0]) {

int off = (!c->isom && title_str[0] == title_size - 1);

av_dict_set(&st->metadata, "handler_name", title_str + off, 0);

}

av_freep(&title_str);

}

return 0;

}

Its core code:

if (type == MKTAG('v','i','d','e'))

st->codec->codec_type = AVMEDIA_TYPE_VIDEO;

else if (type == MKTAG('s','o','u','n'))

st->codec->codec_type = AVMEDIA_TYPE_AUDIO;

else if (type == MKTAG('m','1','a',' '))

st->codec->codec_id = AV_CODEC_ID_MP2;

else if ((type == MKTAG('s','u','b','p')) || (type == MKTAG('c','l','c','p')))

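st->codec->codec_type = AVMEDIA_TYPE_SUBTITLE;

If the bytes at offsets 16 to 19 of the hdlr box (counting from the start of the box, i.e. after the 8-byte box header, the version and flags, and the component type) contain vide, the stream is a video stream; if they contain soun, it is an audio stream; if they contain subp or clcp, it is treated as a subtitle stream.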
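The same decision can be sketched on raw bytes, without FFmpeg. The sketch assumes the layout that mov_read_hdlr reads: 8-byte box header, 1 byte of version, 3 bytes of flags, 4 bytes of component type, then the 4-byte component subtype. `TrackKind` and `classify_hdlr` are hypothetical names for this illustration, and the 'm1a ' case from the listing (which sets a codec id rather than a media type) is left out.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef enum TrackKind {
    TRACK_UNKNOWN,
    TRACK_VIDEO,
    TRACK_AUDIO,
    TRACK_SUBTITLE
} TrackKind;

/* box points at a complete hdlr box, starting with its 8-byte header.
 * Assumed layout: size(4) type(4) version(1) flags(3) component type(4)
 * component subtype(4) ..., so the subtype sits at offsets 16..19. */
static TrackKind classify_hdlr(const uint8_t *box, size_t len)
{
    const uint8_t *sub;

    if (len < 20 || memcmp(box + 4, "hdlr", 4) != 0)
        return TRACK_UNKNOWN;
    sub = box + 16;
    if (memcmp(sub, "vide", 4) == 0)
        return TRACK_VIDEO;
    if (memcmp(sub, "soun", 4) == 0)
        return TRACK_AUDIO;
    if (memcmp(sub, "subp", 4) == 0 || memcmp(sub, "clcp", 4) == 0)
        return TRACK_SUBTITLE;
    return TRACK_UNKNOWN;
}
```

Comparing the four bytes with `memcmp` avoids any endianness question; FFmpeg gets the same effect by reading them with avio_rl32 and comparing against MKTAG constants.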

We can't walk through the parsing of every box, so the analysis of box parsing stops here; interested readers can analyze the rest on their own.