Copyright notice: this is the blogger's original article. Source: http://blog.csdn.net/silentwolfyh https://blog.csdn.net/silentwolfyh/article/details/83383119

目录:

1、重要事项说明

2、Notebook的配置

3、livy的配置

4、livy的版本问题

5、spark的版本问题

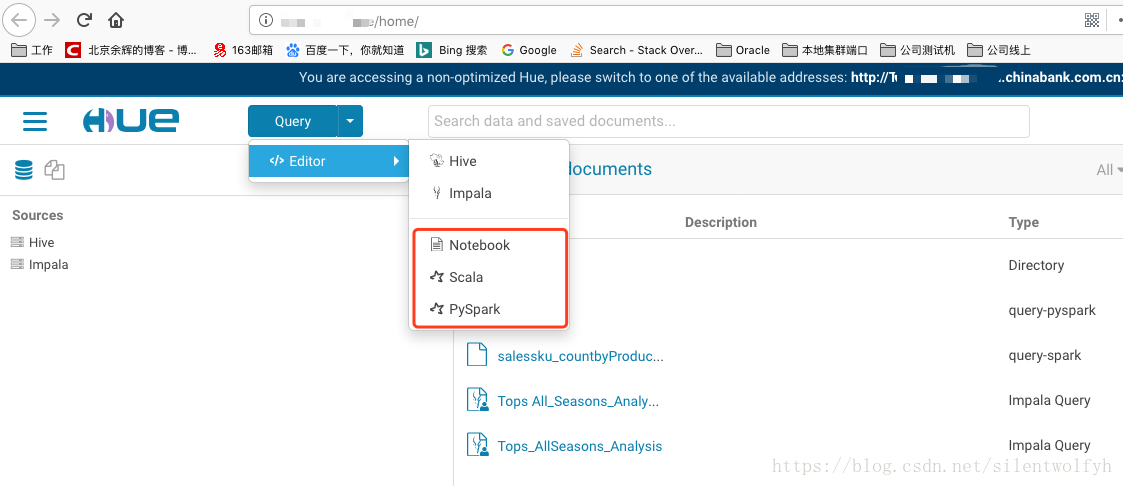

6、页面展示

7、参考文章

1. Important notes

1. The default Python version on CDH 5.14 is 2.6.6, and it must be upgraded to 2.7. For the procedure, see https://blog.csdn.net/silentwolfyh/article/details/82839713

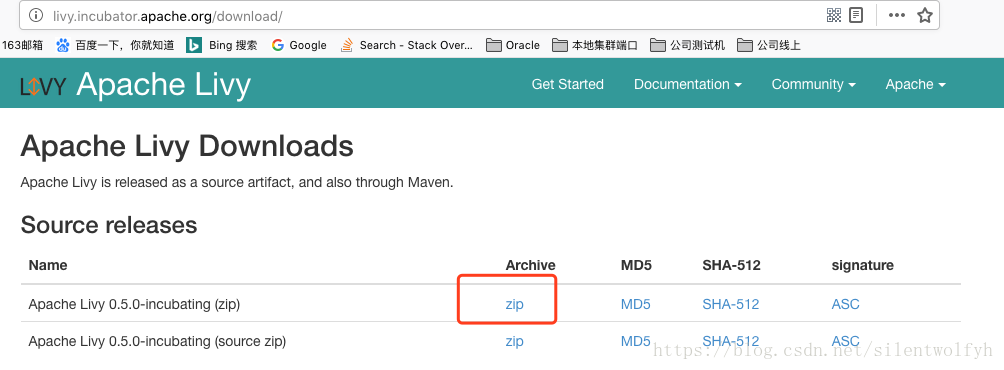

2. Livy versions livy-server-0.2.0 and livy-server-0.3.0 do not work; upgrade to livy-0.5.0-incubating-bin.

Download: http://livy.incubator.apache.org/download/

3. Spark 2.1.0 conflicts with livy-0.5.0-incubating-bin, so switch to Spark 1.6.

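4. Avoid putting environment variables in "~/.bash_profile"; put them in "/etc/profile" instead. This matters a lot: my colleague hit many problems because the variables differed between the hdfs user and the root user, and I later unified them.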

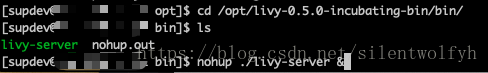

5. Livy must be started as the hdfs user, with the following command:

nohup ./livy-server &
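Once the server has been started, one way to confirm that it is listening is to query Livy's REST endpoint `GET /sessions` on port 8998 (the port used in the Hue configuration in this article). The following is only a minimal sketch written for Python 3 on a client machine; the helper names `sessions_url`, `summarize_sessions`, and `check_livy` are hypothetical, and `IP` is the same placeholder used in the configuration.

```python
import json
from urllib.request import urlopen


def sessions_url(server_url):
    """Build the URL of Livy's session-list endpoint from the server base URL."""
    return server_url.rstrip("/") + "/sessions"


def summarize_sessions(body):
    """Return (id, kind, state) for each session in a GET /sessions response body."""
    payload = json.loads(body)
    return [(s.get("id"), s.get("kind"), s.get("state"))
            for s in payload.get("sessions", [])]


def check_livy(server_url, timeout=5):
    """Fetch the session list; raises URLError if the server cannot be reached."""
    with urlopen(sessions_url(server_url), timeout=timeout) as resp:
        return summarize_sessions(resp.read().decode("utf-8"))


# Example (replace IP with the host that runs livy-server):
# print(check_livy("http://IP:8998/"))
```

An empty list means the server is up but has no sessions yet; a connection error suggests the server did not start or the port is blocked.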

2. Notebook configuration

The hue_safety_valve.ini configuration for Hue in CDH is as follows:

[desktop]

app_blacklist=

[spark]

server_url=http://IP:8998/

languages='[{"name": "Scala", "type": "scala"},{"name": "PySpark", "type": "pyspark"},{"name": "Python", "type": "python"},{"name": "Impala SQL", "type": "impala"},{"name": "Hive SQL", "type": "hive"},{"name": "Text", "type": "text"}]'

[notebook]

show_notebooks=true

enable_batch_execute=true

enable_query_builder=true

enable_query_scheduling=false

[[interpreters]]

[[[hive]]]

# The name of the snippet.

name=Hive

# The backend connection to use to communicate with the server.

interface=hiveserver2

[[[impala]]]

name=Impala

interface=hiveserver2

[[[spark]]]

name=Scala

interface=livy

[[[pyspark]]]

name=PySpark

interface=livy
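The `languages` value in the [spark] section is a JSON array wrapped in single quotes, and a stray comma or quote in it is easy to miss. As a rough sketch (the helper `language_types` is hypothetical, and the check is an assumption about what is worth validating, not something Hue itself requires), the value can be parsed before it is pasted into the safety valve:

```python
import json

# The value of `languages` from the [spark] section, without the outer single quotes.
LANGUAGES = (
    '[{"name": "Scala", "type": "scala"},'
    '{"name": "PySpark", "type": "pyspark"},'
    '{"name": "Python", "type": "python"},'
    '{"name": "Impala SQL", "type": "impala"},'
    '{"name": "Hive SQL", "type": "hive"},'
    '{"name": "Text", "type": "text"}]'
)


def language_types(raw):
    """Parse the JSON array and return the `type` of every entry, in order."""
    entries = json.loads(raw)  # raises ValueError if the JSON is malformed
    for entry in entries:
        missing = {"name", "type"} - set(entry)
        if missing:
            raise ValueError("entry %r lacks %s" % (entry, sorted(missing)))
    return [entry["type"] for entry in entries]


print(language_types(LANGUAGES))
```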

3. Livy configuration

Append the following to the end of livy-env.sh:

export SPARK_HOME=/export/servers/cloudera/parcels/CDH-5.14.4-1.cdh5.14.4.p0.3/lib/spark

export JAVA_HOME=/export/servers/jdk1.7.0_71

export PYSPARK_PYTHON=/usr/bin/python
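Since mismatched environment variables between users were a source of trouble in this setup, it can help to verify, as the user that starts Livy, that the three variables above are set and point to paths that exist. This is a small sketch under that assumption; the helper name `missing_paths` is hypothetical.

```python
import os

# The variables exported in livy-env.sh above.
REQUIRED = ("SPARK_HOME", "JAVA_HOME", "PYSPARK_PYTHON")


def missing_paths(env, names=REQUIRED):
    """Return the variables that are unset or point to a path that does not exist."""
    problems = []
    for name in names:
        value = env.get(name)
        if not value or not os.path.exists(value):
            problems.append(name)
    return problems


# Example: run as the hdfs user and expect an empty list.
# print(missing_paths(os.environ))
```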

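Append the following to the end of livy.conf (in the last line, yarn/local presumably stands for a choice between yarn and local, so set one of the two):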

livy.repl.enableHiveContext = true

livy.impersonation.enabled = true

livy.server.session.timeout = 1h

livy.server.session.factory = yarn/local
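With `livy.impersonation.enabled = true`, a client can ask Livy to run a session on behalf of another user through the `proxyUser` field of `POST /sessions`, and then submit code through `POST /sessions/{id}/statements`. The sketch below only builds those two requests so the payloads are visible; the function names are hypothetical, `IP` is the placeholder from the Hue configuration, and using `hdfs` as the proxy user is an assumption for illustration.

```python
import json
from urllib.request import Request, urlopen


def _post(url, body):
    """Build a JSON POST request without sending it."""
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def new_session_request(server_url, kind="pyspark", proxy_user=None):
    """Request that creates a session; proxyUser is honored only with impersonation on."""
    body = {"kind": kind}
    if proxy_user:
        body["proxyUser"] = proxy_user
    return _post(server_url.rstrip("/") + "/sessions", body)


def statement_request(server_url, session_id, code):
    """Request that runs one snippet of code in an existing session."""
    url = "%s/sessions/%d/statements" % (server_url.rstrip("/"), session_id)
    return _post(url, {"code": code})


# Example (sends the request; replace IP with the Livy host):
# with urlopen(new_session_request("http://IP:8998/", proxy_user="hdfs")) as resp:
#     print(json.loads(resp.read().decode("utf-8")))
```

Hue's notebook performs equivalent calls itself, so this is mainly useful for testing Livy in isolation before involving Hue.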

4. Livy version issue

Locating the error:

The following line appears in the log printed by the livy-server startup script.

ERROR repl.PythonInterpreter: Process has died with 1

Solution:

Switch to livy-0.5.0-incubating-bin.zip.
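To find such lines quickly in a long server log, a small filter can pull out every ERROR entry. This is a sketch that assumes the log4j-style layout seen in the line above (`ERROR <logger>: <message>`); the helper name `find_errors` is hypothetical, and `nohup.out` in the usage comment is only nohup's default output file, not a path confirmed by this setup.

```python
import re

# Matches "ERROR <logger>: <message>" anywhere in a log line.
ERROR_LINE = re.compile(r"\bERROR\s+(\S+):\s+(.*)")


def find_errors(lines):
    """Return (logger, message) for every ERROR line in the given log lines."""
    found = []
    for line in lines:
        match = ERROR_LINE.search(line)
        if match:
            found.append((match.group(1), match.group(2).strip()))
    return found


# Example:
# with open("nohup.out") as log:
#     for logger, message in find_errors(log):
#         print(logger, message)
```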

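5. Spark version issue

Solution:

Switch to Spark 1.6.0.

Locating the error:

Querying a Hive table from the pyspark shell of Spark 2.1.0 fails with the following log: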

18/10/24 17:28:56 WARN util.Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 2.1.0.cloudera3

/_/

Using Python version 2.7.10 (default, Oct 23 2018 13:12:58)

SparkSession available as 'spark'.

>>>

>>> from pyspark.sql import HiveContext

>>> sqlContext.sql("select * from dwd.dwd_t1_branch_lt_a limit 100").show()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/export/servers/cloudera/parcels/SPARK2-2.1.0.cloudera3-1.cdh5.13.3.p0.569822/lib/spark2/python/pyspark/sql/context.py", line 384, in sql

return self.sparkSession.sql(sqlQuery)

File "/export/servers/cloudera/parcels/SPARK2-2.1.0.cloudera3-1.cdh5.13.3.p0.569822/lib/spark2/python/pyspark/sql/session.py", line 545, in sql

return DataFrame(self._jsparkSession.sql(sqlQuery), self._wrapped)

File "/export/servers/cloudera/parcels/SPARK2-2.1.0.cloudera3-1.cdh5.13.3.p0.569822/lib/spark2/python/lib/py4j-0.10.7-src.zip/py4j/java_gateway.py", line 1257, in __call__

File "/export/servers/cloudera/parcels/SPARK2-2.1.0.cloudera3-1.cdh5.13.3.p0.569822/lib/spark2/python/pyspark/sql/utils.py", line 63, in deco

return f(*a, **kw)

File "/export/servers/cloudera/parcels/SPARK2-2.1.0.cloudera3-1.cdh5.13.3.p0.569822/lib/spark2/python/lib/py4j-0.10.7-src.zip/py4j/protocol.py", line 328, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling o47.sql.

: java.util.ServiceConfigurationError: org.apache.spark.sql.sources.DataSourceRegister: Provider org.apache.spark.sql.hive.orc.DefaultSource could not be instantiated

at java.util.ServiceLoader.fail(ServiceLoader.java:232)

at java.util.ServiceLoader.access$100(ServiceLoader.java:185)

at java.util.ServiceLoader$LazyIterator.nextService(ServiceLoader.java:384)

at java.util.ServiceLoader$LazyIterator.next(ServiceLoader.java:404)

at java.util.ServiceLoader$1.next(ServiceLoader.java:480)

at scala.collection.convert.Wrappers$JIteratorWrapper.next(Wrappers.scala:43)

at scala.collection.Iterator$class.foreach(Iterator.scala:893)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1336)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike$class.filterImpl(TraversableLike.scala:247)

at scala.collection.TraversableLike$class.filter(TraversableLike.scala:259)

at scala.collection.AbstractTraversable.filter(Traversable.scala:104)

at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:575)

at org.apache.spark.sql.execution.datasources.DataSource.providingClass$lzycompute(DataSource.scala:86)

at org.apache.spark.sql.execution.datasources.DataSource.providingClass(DataSource.scala:86)

at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:325)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$$anonfun$8$$anonfun$9.apply(HiveMetastoreCatalog.scala:293)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$$anonfun$8$$anonfun$9.apply(HiveMetastoreCatalog.scala:283)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$$anonfun$8.apply(HiveMetastoreCatalog.scala:283)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$$anonfun$8.apply(HiveMetastoreCatalog.scala:275)

at org.apache.spark.sql.hive.HiveMetastoreCatalog.withTableCreationLock(HiveMetastoreCatalog.scala:69)

at org.apache.spark.sql.hive.HiveMetastoreCatalog.org$apache$spark$sql$hive$HiveMetastoreCatalog$$convertToLogicalRelation(HiveMetastoreCatalog.scala:275)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$ParquetConversions$.org$apache$spark$sql$hive$HiveMetastoreCatalog$ParquetConversions$$convertToParquetRelation(HiveMetastoreCatalog.scala:371)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$ParquetConversions$$anonfun$apply$1.applyOrElse(HiveMetastoreCatalog.scala:388)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$ParquetConversions$$anonfun$apply$1.applyOrElse(HiveMetastoreCatalog.scala:379)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:290)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$transformUp$1.apply(TreeNode.scala:290)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:70)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:289)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$4.apply(TreeNode.scala:307)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:188)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapChildren(TreeNode.scala:305)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$4.apply(TreeNode.scala:307)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:188)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapChildren(TreeNode.scala:305)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$3.apply(TreeNode.scala:287)

at org.apache.spark.sql.catalyst.trees.TreeNode$$anonfun$4.apply(TreeNode.scala:307)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapProductIterator(TreeNode.scala:188)

at org.apache.spark.sql.catalyst.trees.TreeNode.mapChildren(TreeNode.scala:305)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformUp(TreeNode.scala:287)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$ParquetConversions$.apply(HiveMetastoreCatalog.scala:379)

at org.apache.spark.sql.hive.HiveMetastoreCatalog$ParquetConversions$.apply(HiveMetastoreCatalog.scala:358)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:85)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1$$anonfun$apply$1.apply(RuleExecutor.scala:82)

at scala.collection.LinearSeqOptimized$class.foldLeft(LinearSeqOptimized.scala:124)

at scala.collection.immutable.List.foldLeft(List.scala:84)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:82)

at org.apache.spark.sql.catalyst.rules.RuleExecutor$$anonfun$execute$1.apply(RuleExecutor.scala:74)

at scala.collection.immutable.List.foreach(List.scala:381)

at org.apache.spark.sql.catalyst.rules.RuleExecutor.execute(RuleExecutor.scala:74)

at org.apache.spark.sql.execution.QueryExecution.analyzed$lzycompute(QueryExecution.scala:64)

at org.apache.spark.sql.execution.QueryExecution.analyzed(QueryExecution.scala:62)

at org.apache.spark.sql.execution.QueryExecution.assertAnalyzed(QueryExecution.scala:48)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:63)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:600)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.VerifyError: Bad return type

Exception Details:

Location:

org/apache/spark/sql/hive/orc/DefaultSource.createRelation(Lorg/apache/spark/sql/SQLContext;[Ljava/lang/String;Lscala/Option;Lscala/Option;Lscala/collection/immutable/Map;)Lorg/apache/spark/sql/sources/HadoopFsRelation; @35: areturn

Reason:

Type 'org/apache/spark/sql/hive/orc/OrcRelation' (current frame, stack[0]) is not assignable to 'org/apache/spark/sql/sources/HadoopFsRelation' (from method signature)

Current Frame:

bci: @35

flags: { }

locals: { 'org/apache/spark/sql/hive/orc/DefaultSource', 'org/apache/spark/sql/SQLContext', '[Ljava/lang/String;', 'scala/Option', 'scala/Option', 'scala/collection/immutable/Map' }

stack: { 'org/apache/spark/sql/hive/orc/OrcRelation' }

Bytecode:

0x0000000: b200 1c2b c100 1ebb 000e 592a b700 22b6

0x0000010: 0026 bb00 2859 2c2d b200 2d19 0419 052b

0x0000020: b700 30b0

at java.lang.Class.getDeclaredConstructors0(Native Method)

at java.lang.Class.privateGetDeclaredConstructors(Class.java:2671)

at java.lang.Class.getConstructor0(Class.java:3075)

at java.lang.Class.newInstance(Class.java:412)

at java.util.ServiceLoader$LazyIterator.nextService(ServiceLoader.java:380)

... 72 more

>>> ^C

Traceback (most recent call last):

File "/export/servers/cloudera/parcels/SPARK2-2.1.0.cloudera3-1.cdh5.13.3.p0.569822/lib/spark2/python/pyspark/context.py", line 240, in signal_handler

raise KeyboardInterrupt()

KeyboardInterrupt

>>>

6. Page display

7. References

Run Hue Spark Notebook on Cloudera

https://blogs.msdn.microsoft.com/pliu/2016/06/19/run-jupyter-notebook-on-cloudera/