Official website: https://gstreamer.freedesktop.org/

Basic tutorials: https://gstreamer.freedesktop.org/documentation/tutorials/basic/concepts.html

Demo source code: git://anongit.freedesktop.org/gstreamer/gst-docs

Goal

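GStreamer handles multithreading automatically, but sometimes threads have to be managed by hand. This tutorial explains how to create threads manually and assign them to some elements of a pipeline. It also covers what Pad Availability is and how to replicate a stream.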

GStreamer handles multithreading automatically, but, under some circumstances, you might need to decouple threads manually. This tutorial shows how to do this and, in addition, completes the exposition about Pad Availability. More precisely, this document explains:

- How to create new threads of execution for some parts of the pipeline
- What Pad Availability is
- How to replicate streams

Introduction

Multithreading

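GStreamer is a multithreaded framework and creates threads internally as it needs them. More importantly, when we build a pipeline we can make part of it run on a thread of its own. This relies on the queue element, which hands data from one thread to another: data enters through the queue's sink pad on the upstream thread and leaves through its src pad on the downstream thread. The size of the queue can be set through properties.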

GStreamer is a multithreaded framework. This means that, internally, it creates and destroys threads as it needs them, for example, to decouple streaming from the application thread. Moreover, plugins are also free to create threads for their own processing, for example, a video decoder could create 4 threads to take full advantage of a CPU with 4 cores.

On top of this, when building the pipeline an application can specify explicitly that a branch (a part of the pipeline) runs on a different thread (for example, to have the audio and video decoders executing simultaneously).

This is accomplished using the queue element, which works as follows. The sink pad just enqueues data and returns control. On a different thread, data is dequeued and pushed downstream. This element is also used for buffering, as seen later in the streaming tutorials. The size of the queue can be controlled through properties.
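To make the enqueue/dequeue hand-off concrete, here is a toy model in plain C with POSIX threads. It is not GStreamer's implementation of queue. ToyQueue, QUEUE_CAPACITY and run_toy_pipeline() are hypothetical names, and QUEUE_CAPACITY only plays the role that size properties such as the real element's max-size-buffers play. The calling thread acts as the upstream element, and a second thread acts as everything downstream of the queue.

```c
/* Toy model only, not GStreamer code: a bounded FIFO that decouples two threads,
 * the way a queue element decouples its upstream and downstream threads. */
#include <assert.h>
#include <pthread.h>

#define QUEUE_CAPACITY 4 /* hypothetical; stands in for a limit such as max-size-buffers */
#define N_BUFFERS 16     /* number of "buffers" the upstream side produces */

typedef struct {
  int items[QUEUE_CAPACITY];
  int head, count;
  pthread_mutex_t lock;
  pthread_cond_t not_empty, not_full;
} ToyQueue;

static ToyQueue queue;
static long downstream_sum; /* written only by the downstream thread */

/* Sink-pad side: store the buffer and return at once; wait only while full. */
static void toy_queue_push (ToyQueue *q, int buf) {
  pthread_mutex_lock (&q->lock);
  while (q->count == QUEUE_CAPACITY)
    pthread_cond_wait (&q->not_full, &q->lock);
  q->items[(q->head + q->count) % QUEUE_CAPACITY] = buf;
  q->count++;
  assert (q->count <= QUEUE_CAPACITY);
  pthread_cond_signal (&q->not_empty);
  pthread_mutex_unlock (&q->lock);
}

/* Src-pad side: called from the downstream thread, waits while empty. */
static int toy_queue_pop (ToyQueue *q) {
  int buf;
  pthread_mutex_lock (&q->lock);
  while (q->count == 0)
    pthread_cond_wait (&q->not_empty, &q->lock);
  buf = q->items[q->head];
  q->head = (q->head + 1) % QUEUE_CAPACITY;
  q->count--;
  pthread_cond_signal (&q->not_full);
  pthread_mutex_unlock (&q->lock);
  return buf;
}

/* Downstream thread: dequeues every buffer and "renders" it by summing it. */
static void *downstream_thread (void *arg) {
  (void) arg;
  for (int i = 0; i < N_BUFFERS; i++)
    downstream_sum += toy_queue_pop (&queue);
  return NULL;
}

/* Upstream side runs on the calling thread; returns what reached downstream. */
static long run_toy_pipeline (void) {
  pthread_t consumer;

  queue.head = queue.count = 0;
  pthread_mutex_init (&queue.lock, NULL);
  pthread_cond_init (&queue.not_empty, NULL);
  pthread_cond_init (&queue.not_full, NULL);
  downstream_sum = 0;

  pthread_create (&consumer, NULL, downstream_thread, NULL);
  for (int i = 1; i <= N_BUFFERS; i++)
    toy_queue_push (&queue, i); /* returns quickly unless the FIFO is full */
  pthread_join (consumer, NULL);

  pthread_mutex_destroy (&queue.lock);
  pthread_cond_destroy (&queue.not_empty);
  pthread_cond_destroy (&queue.not_full);
  return downstream_sum;
}
```

run_toy_pipeline() returns 136, the sum of buffers 1 to 16, however the two threads interleave. Every buffer passes through the FIFO exactly once, and the upstream loop only stalls when downstream falls four buffers behind.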

The example pipeline

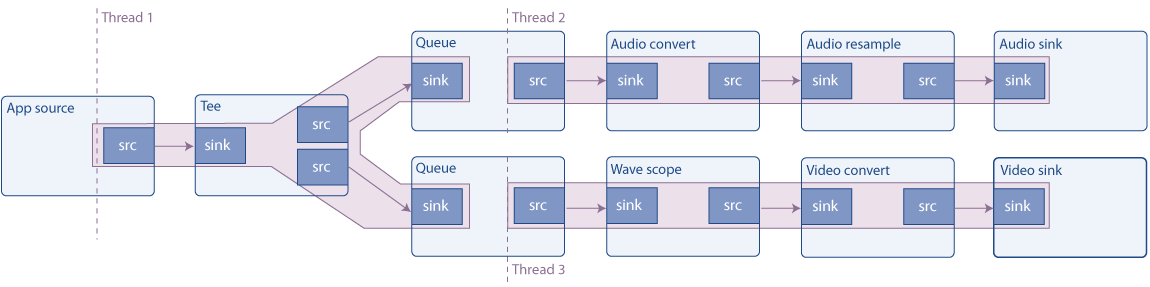

This example builds the following pipeline:

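In the figure, each gray background marks a separate thread, so the pipeline runs on three threads. Thread 1 reads the source data and replicates it into the two branches. Thread 2 converts, resamples and plays the audio. Thread 3 renders the audio waveform as video.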

The source is a synthetic audio signal (a continuous tone) which is split using a tee element (it sends through its source pads everything it receives through its sink pad). One branch then sends the signal to the audio card, and the other renders a video of the waveform and sends it to the screen.

As seen in the picture, queues create a new thread, so this pipeline runs in 3 threads. Pipelines with more than one sink usually need to be multithreaded. To stay synchronized, sinks usually block execution until all other sinks are ready, and they cannot become ready if there is only one thread and the first sink is blocking it.

Request pads

There are three kinds of pads: Sometimes Pads (which appear only at certain times), Always Pads (which always exist) and Request Pads (which are created on demand).

Request Pads are created only when they are requested. The classic example is the tee element, which starts with a sink pad and no src pads. A src pad is added each time one is requested. Request Pads cannot be linked as automatically as Always Pads, but they can be requested any number of times, so the input stream can be replicated freely. Requesting or releasing pads in the PLAYING or PAUSED state needs extra care (pad blocking), which this tutorial does not cover.

In Basic tutorial 3: Dynamic pipelines we saw an element (uridecodebin) which had no pads to begin with. Its pads appeared as data started to flow and the element learned about the media. These are called Sometimes Pads, in contrast with the regular pads, which are always available and are called Always Pads.

The third kind of pad is the Request Pad, which is created on demand. The classical example is the tee element, which has one sink pad and no initial source pads: they need to be requested and then tee adds them. In this way, an input stream can be replicated any number of times. The disadvantage is that linking elements with Request Pads is not as automatic as linking Always Pads, as the walkthrough for this example will show.

Also, to request (or release) pads in the PLAYING or PAUSED states, you need to take additional precautions (Pad blocking) which are not described in this tutorial. It is safe to request (or release) pads in the NULL or READY states, though.

Without further delay, let's see the code.

Simple multithreaded example

Copy this code into a text file named basic-tutorial-7.c (or find it in your GStreamer installation).

basic-tutorial-7.c

```c
#include <gst/gst.h>

int main (int argc, char *argv[]) {
  GstElement *pipeline, *audio_source, *tee, *audio_queue, *audio_convert, *audio_resample, *audio_sink;
  GstElement *video_queue, *visual, *video_convert, *video_sink;
  GstBus *bus;
  GstMessage *msg;
  GstPad *tee_audio_pad, *tee_video_pad;
  GstPad *queue_audio_pad, *queue_video_pad;

  /* Initialize GStreamer */
  gst_init (&argc, &argv);

  /* Create the elements */
  audio_source = gst_element_factory_make ("audiotestsrc", "audio_source");
  tee = gst_element_factory_make ("tee", "tee");
  audio_queue = gst_element_factory_make ("queue", "audio_queue");
  audio_convert = gst_element_factory_make ("audioconvert", "audio_convert");
  audio_resample = gst_element_factory_make ("audioresample", "audio_resample");
  audio_sink = gst_element_factory_make ("autoaudiosink", "audio_sink");
  video_queue = gst_element_factory_make ("queue", "video_queue");
  visual = gst_element_factory_make ("wavescope", "visual");
  video_convert = gst_element_factory_make ("videoconvert", "csp");
  video_sink = gst_element_factory_make ("autovideosink", "video_sink");

  /* Create the empty pipeline */
  pipeline = gst_pipeline_new ("test-pipeline");

  if (!pipeline || !audio_source || !tee || !audio_queue || !audio_convert || !audio_resample || !audio_sink ||
      !video_queue || !visual || !video_convert || !video_sink) {
    g_printerr ("Not all elements could be created.\n");
    return -1;
  }

  /* Configure elements */
  g_object_set (audio_source, "freq", 215.0f, NULL);
  g_object_set (visual, "shader", 0, "style", 1, NULL);

  /* Link all elements that can be automatically linked because they have "Always" pads */
  gst_bin_add_many (GST_BIN (pipeline), audio_source, tee, audio_queue, audio_convert, audio_resample, audio_sink,
      video_queue, visual, video_convert, video_sink, NULL);
  if (gst_element_link_many (audio_source, tee, NULL) != TRUE ||
      gst_element_link_many (audio_queue, audio_convert, audio_resample, audio_sink, NULL) != TRUE ||
      gst_element_link_many (video_queue, visual, video_convert, video_sink, NULL) != TRUE) {
    g_printerr ("Elements could not be linked.\n");
    gst_object_unref (pipeline);
    return -1;
  }

  /* Manually link the Tee, which has "Request" pads.
   * GST_PAD_NAME() returns the pad's own name without copying it, so, unlike
   * gst_pad_get_name(), it leaves no string to free. */
  tee_audio_pad = gst_element_get_request_pad (tee, "src_%u");
  g_print ("Obtained request pad %s for audio branch.\n", GST_PAD_NAME (tee_audio_pad));
  queue_audio_pad = gst_element_get_static_pad (audio_queue, "sink");
  tee_video_pad = gst_element_get_request_pad (tee, "src_%u");
  g_print ("Obtained request pad %s for video branch.\n", GST_PAD_NAME (tee_video_pad));
  queue_video_pad = gst_element_get_static_pad (video_queue, "sink");
  if (gst_pad_link (tee_audio_pad, queue_audio_pad) != GST_PAD_LINK_OK ||
      gst_pad_link (tee_video_pad, queue_video_pad) != GST_PAD_LINK_OK) {
    g_printerr ("Tee could not be linked.\n");
    gst_object_unref (pipeline);
    return -1;
  }
  gst_object_unref (queue_audio_pad);
  gst_object_unref (queue_video_pad);

  /* Start playing the pipeline */
  gst_element_set_state (pipeline, GST_STATE_PLAYING);

  /* Wait until error or EOS */
  bus = gst_element_get_bus (pipeline);
  msg = gst_bus_timed_pop_filtered (bus, GST_CLOCK_TIME_NONE, GST_MESSAGE_ERROR | GST_MESSAGE_EOS);

  /* Stop the pipeline first: releasing request pads is only safe in NULL or READY */
  gst_element_set_state (pipeline, GST_STATE_NULL);

  /* Release the request pads from the Tee, and unref them */
  gst_element_release_request_pad (tee, tee_audio_pad);
  gst_element_release_request_pad (tee, tee_video_pad);
  gst_object_unref (tee_audio_pad);
  gst_object_unref (tee_video_pad);

  /* Free resources */
  if (msg != NULL)
    gst_message_unref (msg);
  gst_object_unref (bus);
  gst_object_unref (pipeline);
  return 0;
}
```

This tutorial plays an audible tone through the audio card and opens a window with a waveform representation of the tone. The waveform should be a sinusoid, but it might not look like one because of the way the window is refreshed.

Required libraries:

gstreamer-1.0

Walkthrough

```c
/* Create the elements */
audio_source = gst_element_factory_make ("audiotestsrc", "audio_source");
tee = gst_element_factory_make ("tee", "tee");
audio_queue = gst_element_factory_make ("queue", "audio_queue");
audio_convert = gst_element_factory_make ("audioconvert", "audio_convert");
audio_resample = gst_element_factory_make ("audioresample", "audio_resample");
audio_sink = gst_element_factory_make ("autoaudiosink", "audio_sink");
video_queue = gst_element_factory_make ("queue", "video_queue");
visual = gst_element_factory_make ("wavescope", "visual");
video_convert = gst_element_factory_make ("videoconvert", "video_convert");
video_sink = gst_element_factory_make ("autovideosink", "video_sink");
```

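All the elements are created here. audiotestsrc is a synthetic audio source and wavescope displays the signal. autoaudiosink and autovideosink are already familiar. audioconvert, audioresample and videoconvert are added so that the pipeline links reliably. The sinks' capabilities depend on the hardware, so at design time we cannot know whether a direct link would work. If the caps already match, these elements run in pass-through mode and have almost no effect on performance. Each of the two queue elements starts a new thread.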

All the elements in the above picture are instantiated here:

audiotestsrc produces a synthetic tone. wavescope consumes an audio signal and renders a waveform as if it was an (admittedly cheap) oscilloscope. We have already worked with the autoaudiosink and autovideosink.

The conversion elements (audioconvert, audioresample and videoconvert) are necessary to guarantee that the pipeline can be linked. Indeed, the Capabilities of the audio and video sinks depend on the hardware, and you do not know at design time if they will match the Caps produced by the audiotestsrc and wavescope. If the Caps match, though, these elements act in “pass-through” mode and do not modify the signal, having negligible impact on performance.

```c
/* Configure elements */
g_object_set (audio_source, "freq", 215.0f, NULL);
g_object_set (visual, "shader", 0, "style", 1, NULL);
```

A few properties are adjusted so that the demo looks better.

Small adjustments for better demonstration: The “freq” property of audiotestsrc controls the frequency of the wave (215Hz makes the wave appear almost stationary in the window), and this style and shader for wavescope make the wave continuous. Use the gst-inspect-1.0 tool described in Basic tutorial 10: GStreamer tools to learn all the properties of these elements.

```c
/* Link all elements that can be automatically linked because they have "Always" pads */
gst_bin_add_many (GST_BIN (pipeline), audio_source, tee, audio_queue, audio_convert, audio_resample, audio_sink,
    video_queue, visual, video_convert, video_sink, NULL);
if (gst_element_link_many (audio_source, tee, NULL) != TRUE ||
    gst_element_link_many (audio_queue, audio_convert, audio_resample, audio_sink, NULL) != TRUE ||
    gst_element_link_many (video_queue, visual, video_convert, video_sink, NULL) != TRUE) {
  g_printerr ("Elements could not be linked.\n");
  gst_object_unref (pipeline);
  return -1;
}
```

This part adds the elements to the pipeline and links the ones whose pads are all Always Pads.

This code block adds all elements to the pipeline and then links the ones that can be automatically linked (the ones with Always Pads, as the comment says).

gst_element_link_many() can also link Request Pads. However, those pads must be released by hand afterwards, and when gst_element_link_many() requests them implicitly it is easy to forget to do so. That is why Request Pads are usually requested and then linked manually with gst_pad_link().

gst_element_link_many() can actually link elements with Request Pads. It internally requests the Pads so you do not have to worry about whether the elements being linked have Always or Request Pads. Strange as it might seem, this is actually inconvenient, because you still need to release the requested Pads afterwards, and, if the Pad was requested automatically by gst_element_link_many(), it is easy to forget. Stay out of trouble by always requesting Request Pads manually, as shown in the next code block.

```c
/* Manually link the Tee, which has "Request" pads.
 * GST_PAD_NAME() does not copy the name, so nothing needs to be freed here. */
tee_audio_pad = gst_element_get_request_pad (tee, "src_%u");
g_print ("Obtained request pad %s for audio branch.\n", GST_PAD_NAME (tee_audio_pad));
queue_audio_pad = gst_element_get_static_pad (audio_queue, "sink");
tee_video_pad = gst_element_get_request_pad (tee, "src_%u");
g_print ("Obtained request pad %s for video branch.\n", GST_PAD_NAME (tee_video_pad));
queue_video_pad = gst_element_get_static_pad (video_queue, "sink");
if (gst_pad_link (tee_audio_pad, queue_audio_pad) != GST_PAD_LINK_OK ||
    gst_pad_link (tee_video_pad, queue_video_pad) != GST_PAD_LINK_OK) {
  g_printerr ("Tee could not be linked.\n");
  gst_object_unref (pipeline);
  return -1;
}
gst_object_unref (queue_audio_pad);
gst_object_unref (queue_video_pad);
```

Request Pads are obtained with gst_element_get_request_pad(). For tee, the src pad template to request is named src_%u. The queue's sink pad is an ordinary Always Pad, so it is obtained with gst_element_get_static_pad(). Finally, gst_pad_link() links each pair of pads, and gst_element_link() and gst_element_link_many() also use it internally. Remember that Request Pads must be released manually.

To link Request Pads, they need to be obtained by requesting them from the element. An element might be able to produce different kinds of Request Pads, so, when requesting them, the desired Pad Template name must be provided. In the documentation for the tee element we see that it has two pad templates named “sink” (for its sink Pads) and “src_%u” (for the Request Pads). We request two Pads from the tee (for the audio and video branches) with gst_element_get_request_pad().

We then obtain the Pads from the downstream elements to which these Request Pads need to be linked. These are normal Always Pads, so we obtain them with gst_element_get_static_pad().

Finally, we link the pads with gst_pad_link(). This is the function that gst_element_link() and gst_element_link_many() use internally.

The sink Pads we have obtained need to be released with gst_object_unref(). The Request Pads will be released when we no longer need them, at the end of the program.

We then set the pipeline to playing as usual, and wait until an error message or an EOS is produced. The only thing left to do is clean up the requested Pads:

```c
/* Release the request pads from the Tee, and unref them */
gst_element_release_request_pad (tee, tee_audio_pad);
gst_element_release_request_pad (tee, tee_video_pad);
gst_object_unref (tee_audio_pad);
gst_object_unref (tee_video_pad);
```

gst_element_release_request_pad() releases the pad from the tee, but it still needs to be unreferenced (freed) with gst_object_unref().

Conclusion

This tutorial has shown:

- How to make parts of a pipeline run on a different thread by using queue elements.
- What a Request Pad is and how to link elements with Request Pads, with gst_element_get_request_pad(), gst_pad_link() and gst_element_release_request_pad().
- How to have the same stream available in different branches by using tee elements.

The next tutorial builds on top of this one to show how data can be manually injected into and extracted from a running pipeline.

It has been a pleasure having you here, and see you soon!