Application entry point: AndroidManifest.xml

Find the activity whose intent filter declares action.MAIN and category.LAUNCHER:

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

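</intent-filter>

Then find the code behind the launcher activity:

<activity android:name="com.example.android.tflitecamerademo.CameraActivity"

In addition, if the activity element is configured with

android:screenOrientation="portrait"

the activity stays in portrait when the device is rotated, so no rotation-driven adjustments (such as switching to a different layout file) take place.

CameraActivity.java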

/** Main {@code Activity} class for the Camera app. */

public class CameraActivity extends Activity {

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_camera); // layout file

if (null == savedInstanceState) {

getFragmentManager()

.beginTransaction() // a Fragment cannot exist on its own; it is hosted in a container

.replace(R.id.container, Camera2BasicFragment.newInstance())

.commit();

}

}

}

Camera2BasicFragment.java

newInstance() is declared public, so CameraActivity.java can call it:

public static Camera2BasicFragment newInstance() {

return new Camera2BasicFragment();

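} // Even without an explicit constructor, the implicit default constructor calls the base-class constructor and initializes the member fields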
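The comment on newInstance() relies on a Java rule that is easy to miss: a class that declares no constructor still gets a compiler-generated no-argument constructor, and that constructor calls super() before the subclass's field initializers run. The following is a minimal sketch in plain Java. BaseFragment and CameraFragment are hypothetical stand-ins, not the Android classes.

```java
import java.util.ArrayList;
import java.util.List;

class DefaultConstructorSketch {
    /** Hypothetical stand-in for a framework base class such as Fragment. */
    static class BaseFragment {
        final List<String> log = new ArrayList<>();

        BaseFragment() {
            log.add("BaseFragment()");
        }
    }

    /** Declares no constructor; the compiler supplies one that calls super(). */
    static class CameraFragment extends BaseFragment {
        // Field initializers run after super() returns, so the log already has one entry.
        final int entriesSeenAtInit = log.size();

        static CameraFragment newInstance() {
            return new CameraFragment();
        }
    }

    public static void main(String[] args) {
        CameraFragment fragment = CameraFragment.newInstance();
        System.out.println(fragment.log + " entriesSeenAtInit=" + fragment.entriesSeenAtInit);
    }
}
```

The static factory keeps construction in one place, which is presumably why the demo exposes newInstance() instead of having the activity call the constructor directly.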

/** Basic fragments for the Camera. */

public class Camera2BasicFragment extends Fragment

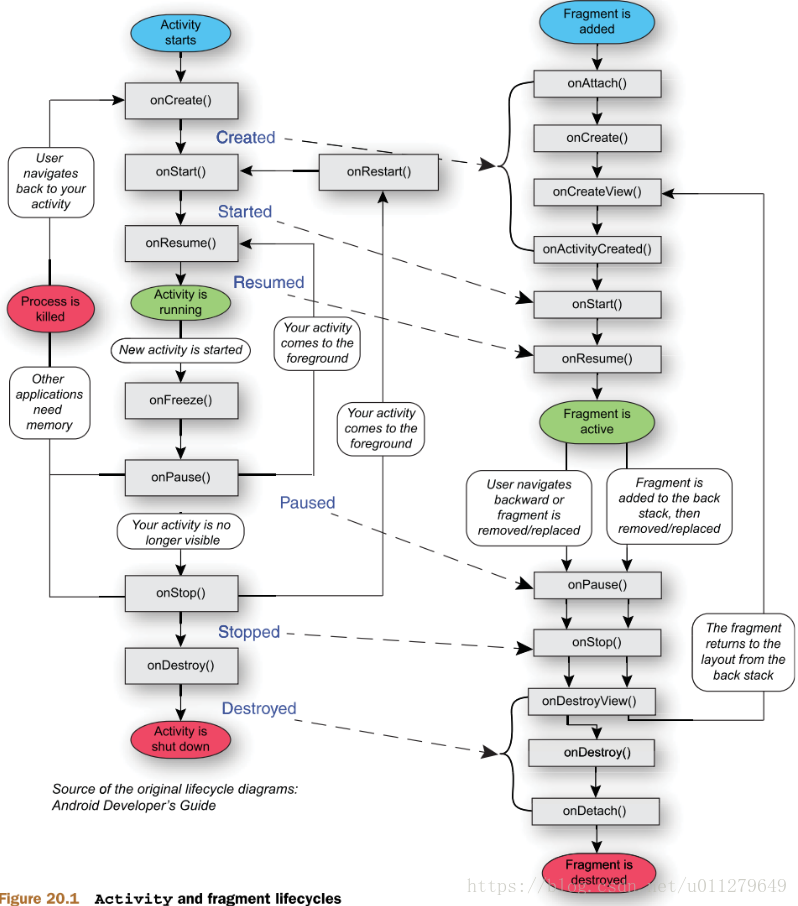

implements FragmentCompat.OnRequestPermissionsResultCallback {}Fragment的生命周期

该过程创建layout的各种view,

/* Though a Fragment's lifecycle is tied to its owning activity, it has

* its own wrinkle on the standard activity lifecycle. It includes basic

* activity lifecycle methods such as onResume, but also important

* are methods related to interactions with the activity and UI generation.

*

* The core series of lifecycle methods that are called to bring a fragment

* up to resumed state (interacting with the user) are:

*

* {onAttach} called once the fragment is associated with its activity.

* {onCreate} called to do initial creation of the fragment.

* {onCreateView} creates and returns the view hierarchy associated with the fragment.

* {onActivityCreated} tells the fragment that its activity has

* completed its own {Activity.onCreate()}.

* {onViewStateRestored} tells the fragment that all of the saved

* state of its view hierarchy has been restored.

* {onStart} makes the fragment visible to the user (based on its

* containing activity being started).

* {onResume} makes the fragment begin interacting with the user

* (based on its containing activity being resumed).

*/

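As the layout in the figure above shows, the UI consists of a textureView (the preview window), a textView (the area that displays recognition results),

a toggleButton (TFLITE/NNAPI selection), and a numberPicker (thread-count selection).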

/** Layout the preview and buttons. */

@Override // associates the fragment with its layout

public View onCreateView( // requires: import android.view.LayoutInflater;

LayoutInflater inflater, ViewGroup container, Bundle savedInstanceState) {

return inflater.inflate(R.layout.fragment_camera2_basic, container, false);

}

/** Connect the buttons to their event handler. Binds the views: the camera preview view and the text view that displays results. */

@Override

public void onViewCreated(final View view, Bundle savedInstanceState) {

textureView = (AutoFitTextureView) view.findViewById(R.id.texture); // preview

textView = (TextView) view.findViewById(R.id.text); // recognition results (top three)

toggle = (ToggleButton) view.findViewById(R.id.button); // TFLite/NNAPI selection

toggle.setOnCheckedChangeListener(new CompoundButton.OnCheckedChangeListener() {

public void onCheckedChanged(CompoundButton buttonView, boolean isChecked) {

classifier.setUseNNAPI(isChecked);

}

}); // handler for toggle changes

np = (NumberPicker) view.findViewById(R.id.np); // number picker with range [1, 10]

np.setMinValue(1);

np.setMaxValue(10);

np.setWrapSelectorWheel(true);

np.setOnValueChangedListener(new NumberPicker.OnValueChangeListener() {

@Override

public void onValueChange(NumberPicker picker, int oldVal, int newVal){

classifier.setNumThreads(newVal);

}

});

}

/** Load the model and labels. */

@Override

public void onActivityCreated(Bundle savedInstanceState) {

super.onActivityCreated(savedInstanceState);

try {

// create either a new ImageClassifierQuantizedMobileNet or an ImageClassifierFloatInception

classifier = new ImageClassifierQuantizedMobileNet(getActivity());

} catch (IOException e) {

Log.e(TAG, "Failed to initialize an image classifier.", e);

}

startBackgroundThread();

}

@Override

public void onResume() {

super.onResume();

startBackgroundThread();

// When the screen is turned off and turned back on, the SurfaceTexture is already

// available, and "onSurfaceTextureAvailable" will not be called. In that case, we can open

// a camera and start preview from here (otherwise, we wait until the surface is ready in

// the SurfaceTextureListener).

if (textureView.isAvailable()) {

openCamera(textureView.getWidth(), textureView.getHeight());

} else {

textureView.setSurfaceTextureListener(surfaceTextureListener);

}

}

new ImageClassifierQuantizedMobileNet

Two model types are provided, Quantized and Float; the parameters and operations specific to each live in the subclass.

public class ImageClassifierQuantizedMobileNet extends ImageClassifier {

/**

* An array to hold inference results, to be fed into Tensorflow Lite as outputs.

* This isn't part of the super class, because we need a primitive array here.

*/

private byte[][] labelProbArray = null;

/**

* Initializes an {@code ImageClassifier}.

*

* @param activity

*/

ImageClassifierQuantizedMobileNet(Activity activity) throws IOException {

super(activity);

labelProbArray = new byte[1][getNumLabels()];

}}
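The split between base class and subclass can be sketched in plain Java as follows. This is an illustration under stated assumptions, not the demo's code: the class names are hypothetical, and the input sizes (224 and 299) and label count are assumed values chosen for the example. It also shows why the subclass allocates labelProbArray only after super(...) returns: the base-class constructor may call the abstract getters, which is safe only as long as they do not depend on subclass fields.

```java
class ModelVariantsSketch {
    /** Shared logic; size-related parameters are deferred to subclasses. */
    abstract static class Classifier {
        final int inputBytes;

        Classifier() {
            // Calls subclass overrides. Safe here because they return constants
            // and do not read subclass fields, which are not yet initialized.
            inputBytes = getImageSizeX() * getImageSizeY() * 3 * getNumBytesPerChannel();
        }

        protected abstract int getImageSizeX();
        protected abstract int getImageSizeY();
        protected abstract int getNumBytesPerChannel();
    }

    /** Quantized variant: one byte per channel, byte-valued outputs. */
    static class QuantizedClassifier extends Classifier {
        final byte[][] labelProbArray;

        QuantizedClassifier(int numLabels) {
            super();
            labelProbArray = new byte[1][numLabels]; // allocated after the base constructor
        }

        @Override protected int getImageSizeX() { return 224; } // assumed size
        @Override protected int getImageSizeY() { return 224; } // assumed size
        @Override protected int getNumBytesPerChannel() { return 1; }
    }

    /** Float variant: four bytes per channel, float-valued outputs. */
    static class FloatClassifier extends Classifier {
        final float[][] labelProbArray;

        FloatClassifier(int numLabels) {
            super();
            labelProbArray = new float[1][numLabels];
        }

        @Override protected int getImageSizeX() { return 299; } // assumed size
        @Override protected int getImageSizeY() { return 299; } // assumed size
        @Override protected int getNumBytesPerChannel() { return 4; }
    }

    public static void main(String[] args) {
        System.out.println(new QuantizedClassifier(1001).inputBytes);
        System.out.println(new FloatClassifier(1001).inputBytes);
    }
}
```

Keeping the output array in the subclass lets each variant use a primitive array of the right element type, which matches the comment on labelProbArray above.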

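Base-class constructor

The base-class constructor creates the Interpreter from the model file, loads labelList, and allocates imgData (a ByteBuffer) that holds the input for inference.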
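The input buffer allocated by the constructor below can be tried out in isolation. The following plain-Java sketch allocates a direct ByteBuffer of batch * width * height * channels * bytesPerChannel bytes in native byte order and packs one ARGB pixel into it as three bytes. The helper names and the 224x224 input size are assumptions made for illustration; the demo's own conversion routine is not shown in these notes.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

class InputBufferSketch {
    static final int DIM_BATCH_SIZE = 1;
    static final int DIM_PIXEL_SIZE = 3;

    /** Allocates a direct buffer sized batch * width * height * channels * bytesPerChannel. */
    static ByteBuffer allocateInput(int sizeX, int sizeY, int bytesPerChannel) {
        ByteBuffer buffer = ByteBuffer.allocateDirect(
                DIM_BATCH_SIZE * sizeX * sizeY * DIM_PIXEL_SIZE * bytesPerChannel);
        buffer.order(ByteOrder.nativeOrder());
        return buffer;
    }

    /** Writes one ARGB pixel as three bytes (R, G, B); the alpha channel is dropped. */
    static void putQuantizedPixel(ByteBuffer buffer, int argb) {
        buffer.put((byte) ((argb >> 16) & 0xFF));
        buffer.put((byte) ((argb >> 8) & 0xFF));
        buffer.put((byte) (argb & 0xFF));
    }

    public static void main(String[] args) {
        ByteBuffer quantized = allocateInput(224, 224, 1); // assumed input size
        putQuantizedPixel(quantized, 0xFF102030);
        System.out.println("capacity=" + quantized.capacity()
                + " position=" + quantized.position());
    }
}
```

A direct buffer in native byte order can be handed to native code without an extra copy, which is the usual reason for choosing allocateDirect here.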

/** Initializes an {@code ImageClassifier}. */

ImageClassifier(Activity activity) throws IOException {

tflite = new Interpreter(loadModelFile(activity));

labelList = loadLabelList(activity);

imgData = ByteBuffer.allocateDirect(

DIM_BATCH_SIZE // 1

* getImageSizeX() // protected abstract; implemented in the subclass

* getImageSizeY()

* DIM_PIXEL_SIZE // 3

* getNumBytesPerChannel());

imgData.order(ByteOrder.nativeOrder());

filterLabelProbArray = new float[FILTER_STAGES][getNumLabels()];

Log.d(TAG, "Created a Tensorflow Lite Image Classifier.");

}

TextureView

/**

* A TextureView can be used to display a content stream. Such a content

* stream can for instance be a video or an OpenGL scene. The content stream

* can come from the application's process as well as a remote process.

*

* TextureView can only be used in a hardware accelerated window. When

* rendered in software, TextureView will draw nothing.[?]

*

* Unlike {SurfaceView}, TextureView does not create a separate

* window but behaves as a regular View. This key difference allows a

* TextureView to be moved, transformed, animated, etc. For instance, you

* can make a TextureView semi-translucent by calling myView.setAlpha(0.5f)

*

* Using a TextureView is simple: all you need to do is get its

* {SurfaceTexture}. The {SurfaceTexture} can then be used to render content.

*

* A TextureView's SurfaceTexture can be obtained either by invoking

* {getSurfaceTexture()} or by using a {SurfaceTextureListener}.

* It is therefore highly recommended you use a listener to

* be notified when the SurfaceTexture becomes available. [use a listener to obtain the SurfaceTexture]

*/

/**

* {@link TextureView.SurfaceTextureListener} handles several lifecycle events on a {@link

* TextureView}.

*/

private final TextureView.SurfaceTextureListener surfaceTextureListener =

new TextureView.SurfaceTextureListener() {

@Override

public void onSurfaceTextureAvailable(SurfaceTexture texture, int width, int height) {

openCamera(width, height); // the preview window size arrives here as input parameters

}

@Override

public void onSurfaceTextureSizeChanged(SurfaceTexture texture, int width, int height) {

configureTransform(width, height);

}

@Override

public boolean onSurfaceTextureDestroyed(SurfaceTexture texture) {

return true;

}

@Override

public void onSurfaceTextureUpdated(SurfaceTexture texture) {}

};

openCamera(width, height)

/** Opens the camera specified by {@link Camera2BasicFragment#cameraId}. */

private void openCamera(int width, int height) {

setUpCameraOutputs(width, height);

configureTransform(width, height);

//Configures the necessary {android.graphics.Matrix} transformation to `textureView`

Activity activity = getActivity();

CameraManager manager = (CameraManager) activity.getSystemService(Context.CAMERA_SERVICE);

try {

manager.openCamera(cameraId, stateCallback, backgroundHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

Getting the camera ID

private void setUpCameraOutputs(int width, int height) {

Activity activity = getActivity();

CameraManager manager = (CameraManager) activity.getSystemService(Context.CAMERA_SERVICE);

try {

for (String cameraId : manager.getCameraIdList()) {

CameraCharacteristics characteristics = manager.getCameraCharacteristics(cameraId);

// We don't use a front facing camera in this sample.

Integer facing = characteristics.get(CameraCharacteristics.LENS_FACING);

if (facing != null && facing == CameraCharacteristics.LENS_FACING_FRONT) {

continue;

}

StreamConfigurationMap map =

characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

if (map == null) {

continue;

} // rotation-related code is omitted here

this.cameraId = cameraId;

return;

}

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

manager.openCamera(cameraId, stateCallback, backgroundHandler)

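Getting preview images involves three steps: open the CameraDevice, submit a capture session, and receive the results (cameraDevice stateCallback -> create session -> session result). Along the way, the texture must be associated with the images returned by the camera preview.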

private final CameraDevice.StateCallback stateCallback =

new CameraDevice.StateCallback() {

@Override

public void onOpened(@NonNull CameraDevice currentCameraDevice) {

// This method is called when the camera is opened. We start camera preview here.

cameraOpenCloseLock.release();

cameraDevice = currentCameraDevice;

createCameraPreviewSession();

}

@Override

public void onDisconnected(@NonNull CameraDevice currentCameraDevice) {

cameraOpenCloseLock.release();

currentCameraDevice.close();

cameraDevice = null;

}

@Override

public void onError(@NonNull CameraDevice currentCameraDevice, int error) {

cameraOpenCloseLock.release();

currentCameraDevice.close();

cameraDevice = null;

Activity activity = getActivity();

if (null != activity) {

activity.finish();

}

}

};

/** Creates a new {@link CameraCaptureSession} for camera preview. */

private void createCameraPreviewSession() {

try {

SurfaceTexture texture = textureView.getSurfaceTexture();

assert texture != null;

// We configure the size of default buffer to be the size of camera preview we want.

texture.setDefaultBufferSize(previewSize.getWidth(), previewSize.getHeight());

// This is the output Surface we need to start preview.

Surface surface = new Surface(texture);

// We set up a CaptureRequest.Builder with the output Surface.

previewRequestBuilder = cameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

previewRequestBuilder.addTarget(surface); // the surface ties the request to the TextureView

// Here, we create a CameraCaptureSession for camera preview.

cameraDevice.createCaptureSession(

Arrays.asList(surface),

new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(@NonNull CameraCaptureSession cameraCaptureSession) {

// The camera is already closed

if (null == cameraDevice) {

return;

}

// When the session is ready, we start displaying the preview.

captureSession = cameraCaptureSession;

try {

// Auto focus should be continuous for camera preview.

previewRequestBuilder.set(

CaptureRequest.CONTROL_AF_MODE,

CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

// Finally, we start displaying the camera preview.

previewRequest = previewRequestBuilder.build();

captureSession.setRepeatingRequest(

previewRequest, captureCallback, backgroundHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onConfigureFailed(@NonNull CameraCaptureSession cameraCaptureSession) {

showToast("Failed");

}

},

null);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

/** A {@link CameraCaptureSession.CaptureCallback} that handles events related to capture. */

private CameraCaptureSession.CaptureCallback captureCallback =

new CameraCaptureSession.CaptureCallback() {

@Override

public void onCaptureProgressed(

@NonNull CameraCaptureSession session,

@NonNull CaptureRequest request,

@NonNull CaptureResult partialResult) {}

@Override

public void onCaptureCompleted(

@NonNull CameraCaptureSession session,

@NonNull CaptureRequest request,

@NonNull TotalCaptureResult result) {}

};

BackgroundThread

A HandlerThread periodically processes the captured frames; a lock makes each classifyFrame call run atomically.

/** Starts a background thread and its {@link Handler}. */

private void startBackgroundThread() {

backgroundThread = new HandlerThread(HANDLE_THREAD_NAME);

backgroundThread.start();

backgroundHandler = new Handler(backgroundThread.getLooper());

synchronized (lock) {

runClassifier = true;

}

backgroundHandler.post(periodicClassify);

}

/** Takes photos and classify them periodically. */

private Runnable periodicClassify =

new Runnable() {

@Override

public void run() {

synchronized (lock) {

if (runClassifier) {

classifyFrame();

}

}

backgroundHandler.post(periodicClassify);

}

};
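The pattern above (a background worker that classifies repeatedly while a lock-guarded flag is set) can be sketched without Android classes. This is only an approximation under stated assumptions: a ScheduledExecutorService stands in for HandlerThread plus Handler, a 1 ms fixed delay replaces the Runnable re-posting itself, and classifyFrame() just counts invocations. All names here are hypothetical.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

class PeriodicClassifySketch {
    private final Object lock = new Object();
    private boolean runClassifier = false; // guarded by lock
    private int framesClassified = 0;      // guarded by lock

    // Stand-in for HandlerThread + Handler: one worker thread running queued tasks.
    private final ScheduledExecutorService background =
            Executors.newSingleThreadScheduledExecutor();

    /** Placeholder for the real work; here it only counts invocations. */
    private void classifyFrame() {
        framesClassified++;
    }

    private void periodicClassify() {
        synchronized (lock) {
            if (runClassifier) {
                classifyFrame();
            }
        }
    }

    void start() {
        synchronized (lock) {
            runClassifier = true;
        }
        background.scheduleWithFixedDelay(this::periodicClassify, 0, 1, TimeUnit.MILLISECONDS);
    }

    /** Clears the flag, stops the worker, and returns the number of frames processed. */
    int stop() {
        synchronized (lock) {
            runClassifier = false;
        }
        background.shutdown(); // periodic tasks are cancelled on shutdown by default
        try {
            background.awaitTermination(1, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return frames();
    }

    int frames() {
        synchronized (lock) {
            return framesClassified;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        PeriodicClassifySketch sketch = new PeriodicClassifySketch();
        sketch.start();
        Thread.sleep(50);
        System.out.println("frames classified: " + sketch.stop());
    }
}
```

Because the flag is read and the frame is processed under the same lock, a caller that clears the flag knows no new classification starts afterwards.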

private void classifyFrame() {

// Get a Bitmap from textureView

Bitmap bitmap =

textureView.getBitmap(classifier.getImageSizeX(), classifier.getImageSizeY());

String textToShow = classifier.classifyFrame(bitmap);

bitmap.recycle();

showToast(textToShow);

}

/** Classifies a frame from the preview stream. */

String classifyFrame(Bitmap bitmap) {

convertBitmapToByteBuffer(bitmap); // copy the Bitmap's pixels into the ByteBuffer

// Here's where the magic happens!!!

long startTime = SystemClock.uptimeMillis();

runInference();

long endTime = SystemClock.uptimeMillis();

Log.d(TAG, "Timecost to run model inference: " + Long.toString(endTime - startTime));

// Print the results.

String textToShow = printTopKLabels();

textToShow = Long.toString(endTime - startTime) + "ms" + textToShow;

return textToShow;

}

@Override

protected void runInference() {

tflite.run(imgData, labelProbArray); // imgData is the member ByteBuffer holding the input; labelProbArray receives the output

}

/**

* Shows a {@link Toast} on the UI thread for the classification results.

*

* @param text The message to show

*/

private void showToast(final String text) {

final Activity activity = getActivity();

if (activity != null) {

activity.runOnUiThread(

new Runnable() {

@Override

public void run() {

textView.setText(text); // this is how the text ends up displayed in textView

}

});

}

}
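The text shown in textView comes from printTopKLabels(), whose body is not included in these notes. As a rough sketch of how a top-K selection over quantized outputs might look, the following plain-Java example keeps the k best scores in a min-heap and converts each byte to a value in [0, 1]. The method name topK, the labels, and the output format are assumptions for illustration, not the demo's implementation.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Locale;
import java.util.PriorityQueue;

class TopKSketch {
    /** Returns the k highest-scoring labels, best first, formatted as "label: score". */
    static List<String> topK(byte[] probs, String[] labels, int k) {
        // Min-heap on score: the weakest of the kept candidates sits on top.
        PriorityQueue<int[]> heap =
                new PriorityQueue<>(Comparator.comparingInt((int[] entry) -> entry[1]));
        for (int i = 0; i < probs.length; i++) {
            // Java bytes are signed; mask to recover the 0..255 quantized score.
            heap.add(new int[] {i, probs[i] & 0xFF});
            if (heap.size() > k) {
                heap.poll(); // drop the current weakest candidate
            }
        }
        List<String> result = new ArrayList<>();
        while (!heap.isEmpty()) {
            int[] entry = heap.poll();
            // Entries come out weakest first, so insert at the front.
            result.add(0, String.format(Locale.ROOT, "%s: %.2f",
                    labels[entry[0]], entry[1] / 255.0f));
        }
        return result;
    }

    public static void main(String[] args) {
        byte[] probs = {(byte) 10, (byte) 200, (byte) 128, (byte) 3};
        String[] labels = {"cat", "dog", "fox", "owl"};
        System.out.println(topK(probs, labels, 3));
    }
}
```

The masking step matters for quantized models: without `& 0xFF`, a score of 200 would be read as -56 and rank below every positive byte.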