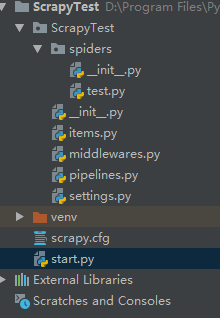

The practice code is as follows:

Main spider:

```python
# -*- coding: utf-8 -*-
import scrapy

from ScrapyTest.items import ScrapytestItem


class testSpider(scrapy.Spider):
    # Spider name, used as `scrapy crawl itcast`
    name = "itcast"
    # Domains the spider may crawl (bare domain names, not full URLs)
    allowed_domains = ["hs-bianma.com"]
    # URL(s) to start crawling from
    start_urls = (
        "http://www.hs-bianma.com/search.php?ser=%E5%8F%A3%E7%BA%A2&ai=1",
    )

    def parse(self, response):
        # First grab every entry in the lipstick result list
        rows = response.xpath("/html/body/div[2]/div")
        # Within each entry, use XPath to pull out the details,
        # strip the leftover markup with replace(),
        # and yield the result so it is filled into the item from items.py
        for row in rows:
            m = row.xpath(".//div[1]/text()").extract_first()
            n = row.xpath(".//div[2]").extract_first() or ""
            b = row.xpath(".//div[3]/a[1]/@href").extract_first()
            c = row.xpath(".//div[3]/a[2]/@href").extract_first()
            a1 = (n.replace('</div>', '').replace('<div>', '')
                   .replace('<span class="red">', '').replace('</span>', '')
                   .replace('<b>商品描述</b>', ''))

            item = ScrapytestItem()
            item['hscode'] = m
            item['商品描述'] = a1
            item['归类实例'] = b
            item['详情'] = c
            yield item

        # Check whether a next page exists; if it does, hand it to myParse
        next_page = response.xpath("//*[@id='main']/div/div[3]/a[1]/@href").extract_first()
        if next_page is not None:
            # urljoin resolves a relative href against the current page URL,
            # so no hard-coded 'http://www.hs-bianma.com' prefix is needed
            next_page = response.urljoin(next_page)
            self.logger.info(next_page)
            yield scrapy.Request(next_page, callback=self.myParse)

    def myParse(self, response):
        second_items = response.xpath(".//*[@id='main']/div")
        for second_item in second_items:
            second_hscode = second_item.xpath(".//div[1]").extract_first() or ""
            second_hscode = (second_hscode.replace('<span class="red">', '').replace('</span>', '')
                             .replace('<div>', '').replace('</div>', '')
                             .replace('<b>hscode</b>', ''))
            second_description = second_item.xpath(".//div[2]/text()").extract_first()
            second_details = second_item.xpath(".//div[3]/a/@href").extract_first()

            item = ScrapytestItem()
            item['second_hscode'] = second_hscode
            item['二级商业描述'] = second_description
            item['二级详情'] = second_details
            yield item
```
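The chains of `.replace()` calls in `parse()` and `myParse()` only remove the exact tags they list, so any extra markup the site adds (another attribute on a `<span>`, an HTML entity such as `&amp;`) would end up in the CSV. Below is a minimal sketch of a more general cleaner that uses only the standard library's `html.parser`. It assumes, as the two `<b>` labels above suggest, that field labels are always wrapped in `<b>`; `clean_cell` is a hypothetical helper name, not part of Scrapy.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collects the text nodes of an HTML fragment, skipping <b>...</b> labels."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &amp; -> &
        self.parts = []
        self._label_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag == "b":
            self._label_depth += 1

    def handle_endtag(self, tag):
        if tag == "b" and self._label_depth:
            self._label_depth -= 1

    def handle_data(self, data):
        # Keep text only when we are not inside a <b> label
        if not self._label_depth:
            self.parts.append(data)


def clean_cell(fragment):
    """Return the visible text of an HTML fragment minus its <b> label.

    Hypothetical helper; returns None unchanged so missing cells stay missing.
    """
    if fragment is None:
        return None
    parser = _TextCollector()
    parser.feed(fragment)
    parser.close()  # flush any buffered trailing text
    return "".join(parser.parts).strip()
```

In the spider, `a1 = clean_cell(n)` and `second_hscode = clean_cell(second_item.xpath(".//div[1]").extract_first())` would then replace the two `.replace()` chains.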

settings.py:

```python
# -*- coding: utf-8 -*-

# Scrapy settings for ScrapyTest project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'ScrapyTest'

SPIDER_MODULES = ['ScrapyTest.spiders']
NEWSPIDER_MODULE = 'ScrapyTest.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# Here: pretend to be a Firefox browser
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.3; WOW64; rv:45.0) Gecko/20100101 Firefox/45.0'

# Export encoding changed to gbk; utf-8 did not work
FEED_EXPORT_ENCODING = 'gbk'

# Write the scraped items to a CSV file
FEED_URI = u'file:///F://douban.csv'
FEED_FORMAT = 'CSV'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False
```
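Since Scrapy 2.1, the `FEED_URI`/`FEED_FORMAT` pair has been deprecated in favour of a single `FEEDS` dictionary. Separately, `FilePipeLine` in pipelines.py only runs once it is listed in `ITEM_PIPELINES`, which the settings above do not do. Below is a sketch of the equivalent settings, assuming Scrapy 2.1 or newer. The priority value 300 is an arbitrary choice: values range from 0 to 1000, and lower numbers run first.

```python
# settings.py sketch for Scrapy >= 2.1: FEEDS replaces FEED_URI + FEED_FORMAT
FEEDS = {
    "file:///F://douban.csv": {
        "format": "csv",
        # Same encoding as FEED_EXPORT_ENCODING above; "utf-8-sig" (UTF-8
        # with a BOM) is a common alternative when the CSV is opened in Excel
        "encoding": "gbk",
    },
}

# Enable the text-file pipeline defined in pipelines.py
ITEM_PIPELINES = {
    "ScrapyTest.pipelines.FilePipeLine": 300,
}
```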

items.py:

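```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class ScrapytestItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()

    # Fields filled by parse() (first-level search results)
    hscode = scrapy.Field()
    商品描述 = scrapy.Field()      # product description
    归类实例 = scrapy.Field()      # classification example (link)
    详情 = scrapy.Field()          # details (link)

    # Fields filled by myParse() (second-level pages)
    second_hscode = scrapy.Field()
    二级商业描述 = scrapy.Field()  # second-level description
    二级详情 = scrapy.Field()      # second-level details (link)
```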

start.py:

pipelines.py:

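```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html


class ScrapytestPipeline(object):
    def process_item(self, item, spider):
        return item


class FilePipeLine(object):
    # Columns written to the txt file, in order; these must be field
    # names declared in ScrapytestItem (items.py)
    FIELDS = ('hscode', '商品描述', '归类实例', '详情',
              'second_hscode', '二级商业描述', '二级详情')

    def __init__(self):
        # Output a tab-separated txt file
        self.file = open('f:/hjd', 'wb')

    def process_item(self, item, spider):
        # parse() and myParse() fill different fields, so missing ones are
        # written as empty strings instead of raising KeyError
        line = "\t".join(str(item.get(field) or '') for field in self.FIELDS) + "\n"
        self.file.write(line.encode("utf-8"))
        return item

    def close_spider(self, spider):
        # Flush and close the file when the crawl ends
        self.file.close()
```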
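`FilePipeLine` builds each line by joining the values by hand. The standard `csv` module can do the same job, and it also quotes values that happen to contain tabs or newlines. Below is a sketch under the assumption that the goal is a tab-separated text file with one column per field from items.py. The class name `TsvFilePipeline` and its default path are hypothetical, and `open_spider`/`close_spider` are the standard Scrapy pipeline hooks.

```python
import csv


class TsvFilePipeline:
    """Hypothetical alternative to FilePipeLine built on the csv module."""

    # Column order; must match the field names declared in items.py
    FIELDS = ["hscode", "商品描述", "归类实例", "详情",
              "second_hscode", "二级商业描述", "二级详情"]

    def __init__(self, path="f:/hjd.txt"):
        # Default path is an assumption; the original pipeline writes to f:/hjd
        self.path = path

    def open_spider(self, spider):
        # newline="" lets the csv module control line endings
        self.file = open(self.path, "w", encoding="utf-8", newline="")
        self.writer = csv.DictWriter(
            self.file,
            fieldnames=self.FIELDS,
            delimiter="\t",
            restval="",             # fields an item did not fill become empty cells
            extrasaction="ignore",  # keys not listed in FIELDS are skipped
        )
        self.writer.writeheader()

    def process_item(self, item, spider):
        # scrapy.Item behaves like a dict, so dict(item) yields its filled
        # fields; None values are written as empty cells
        self.writer.writerow(dict(item))
        return item

    def close_spider(self, spider):
        self.file.close()
```

Like `FilePipeLine`, it would need an `ITEM_PIPELINES` entry (here `"ScrapyTest.pipelines.TsvFilePipeline"`) before Scrapy calls it.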