1.10 Vanishing/Exploding Gradients

这一篇写得特别好:

详解机器学习中的梯度消失、爆炸原因及其解决方法

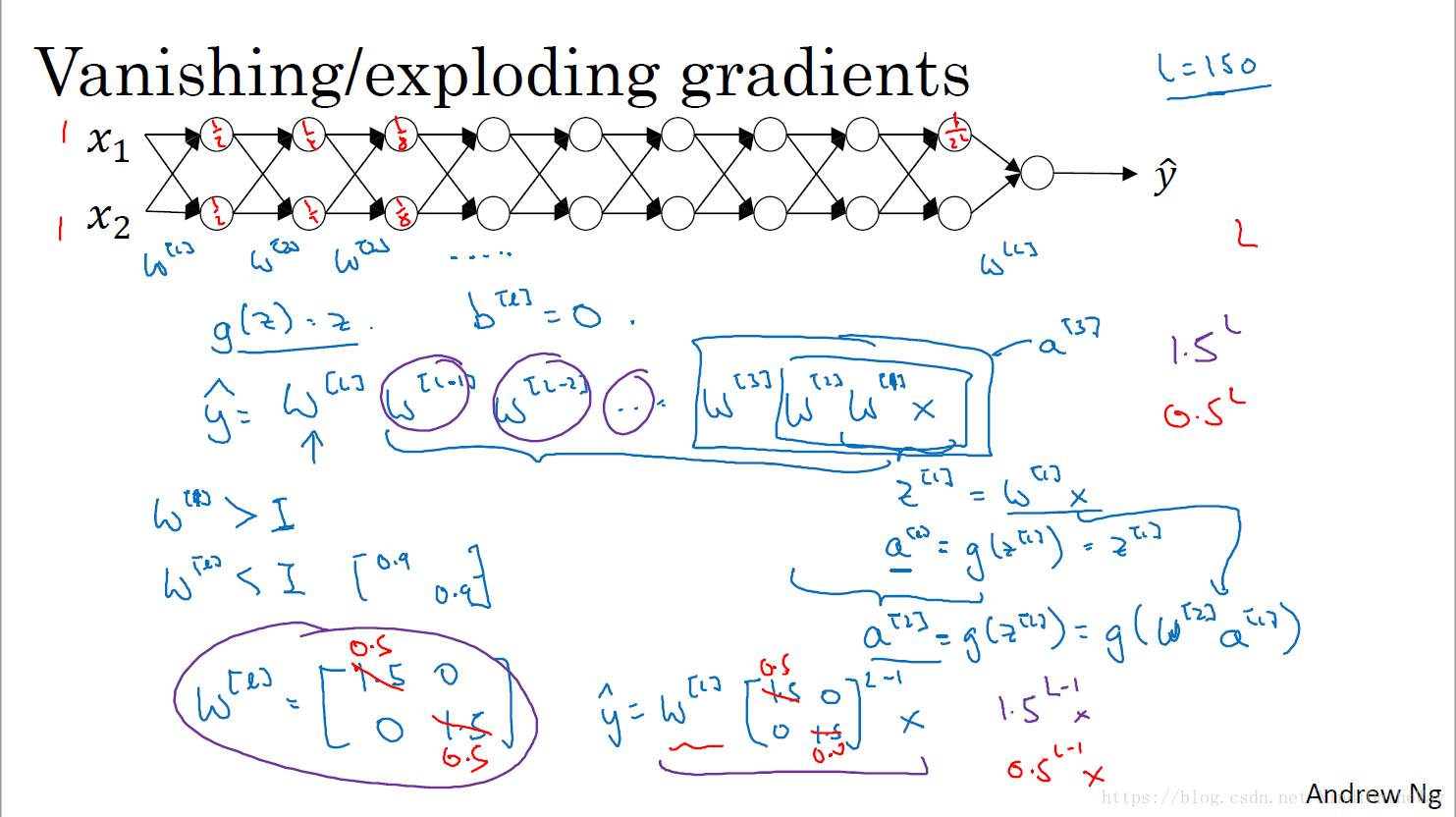

One of the problems of training neural network, especially very deep neural networks, is data vanishing and exploding gradients. What that means is that when you're training a very deep network your derivatives or your slopes can sometimes get either very, very big or very, very small, maybe even exponentially small, and this makes training difficult.

假设激活函数

,即激活函数是线性的,并且没有偏移项

:

- The weights W, if they’re all just a little bit bigger than one or just a little bit bigger than the identity matrix, then with a very deep network the activations can explode.

- And if W is just a little bit less than identity. So this maybe here’s 0.9, 0.9, then you have a very deep network, the activations will decrease exponentially.

- And even though I went through this argument in terms of activations increasing or decreasing exponentially as a function of L, a similar argument can be used to show that the derivatives or the gradients the computer is going to send will also increase exponentially or decrease exponentially as a function of the number of layers.

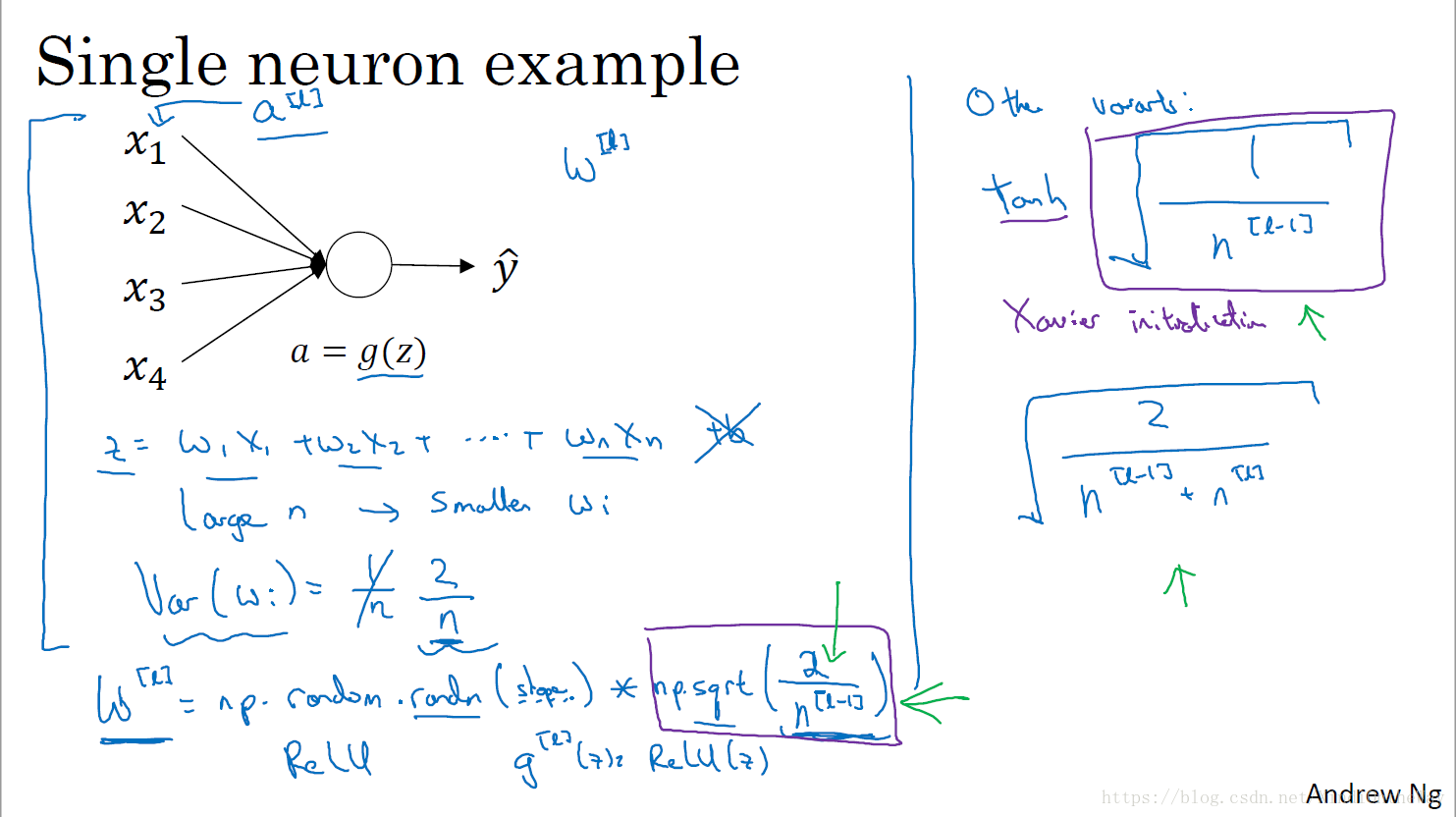

1.11 Weight initialization for deep networks

It turns out that a partial solution to vanishing/exploding gradients, doesn’t solve it entirely but helps a lot, is better or more careful choice of the random initialization for your neural network.

So in order to make

not blow up and not become too small you notice that the larger n is, the smaller you want Wi to be, right? Because z is the sum of the WiXi and so if you’re adding up a lot of these terms you want each of these terms to be smaller. One reasonable thing to do would be to set the variance of Wi to be equal to 1 over n, where n is the number of input features that’s going into a neuron.

So in practice, what you can do is set the weight matrix for a certain layer to be you know, and then whatever the shape of the matrix is for this out here, and then times square root of 1 over the number of features that I fed into each neuron and there else is going to be $because that’s the number of units that I’m feeding into each of the units and they are l.

So if the input features of activations are roughly mean 0 and standard variance and variance 1 then this would cause z to also take on a similar scale and this doesn’t solve, but it definitely helps reduce the vanishing, exploding gradients problem because it’s trying to set each of the weight matrices w you know so that it’s not too much bigger than 1 and not too much less than 1 so it doesn’t explode or vanish too quickly. I’ve just mention some other variants.