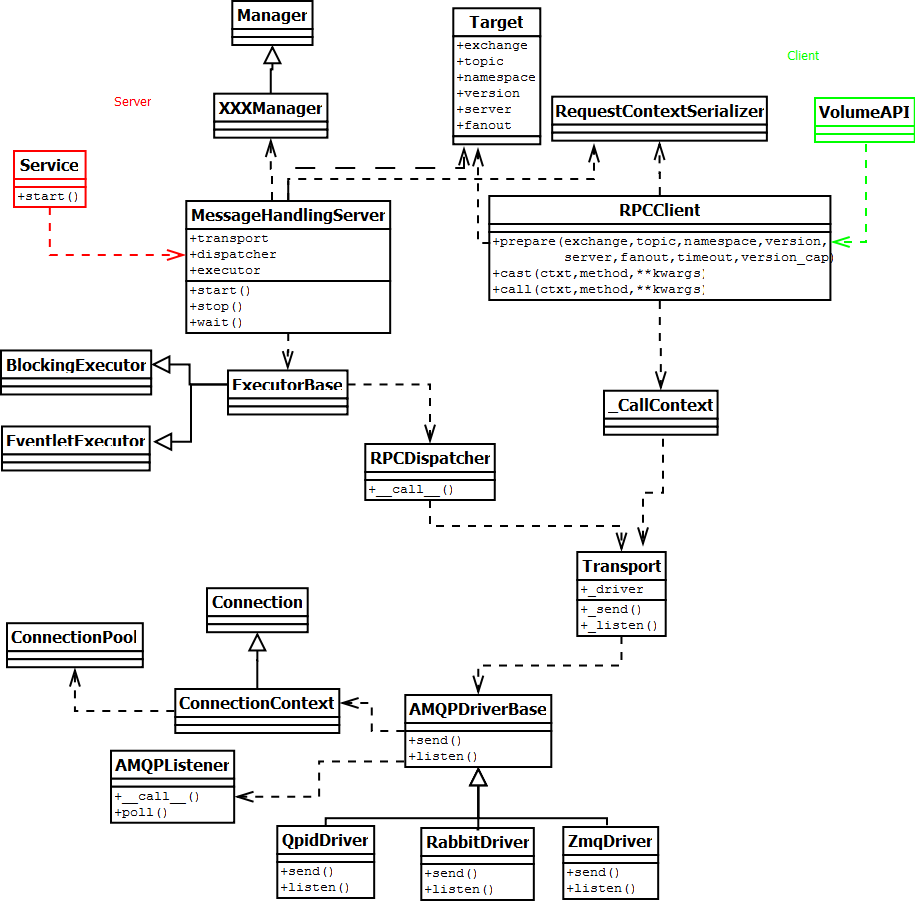

Object relationship diagram

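1. Server side:

- The message queue listener is started when the service starts. For example, when cinder-volume starts, it launches a MessageHandlingServer (described below), which listens for messages and dispatches them to the corresponding Manager method for processing.

- RPC requests are handled by the Server role. Taking cinder as an example, cinder-api initiates the request and is therefore the Client. It sends the request to cinder-scheduler through an RPC method, and cinder-scheduler, which handles the request, is the Server.

- To handle requests, the Server first creates Consumers. The cinder-scheduler server creates two Topic Consumers, cinder-scheduler and cinder-scheduler:host, plus a cinder-scheduler Fanout Consumer, each receiving a different type of message. When a Consumer is created, the corresponding Message Queue is created on the Qpid server and bound to the Exchange with a declared Routing Key. When the cinder-scheduler service starts, it registers the cinder-scheduler manager as the RPC_dispatcher, that is, the callback object, so requests sent by the Client are ultimately executed by the cinder-scheduler manager object.

- The consumer thread is then started to receive messages from the Queue. When the thread receives a message, it passes the message to the RPC_dispatcher (the callback object), which calls the matching handler function based on the message content, for example create_volume, and processes the request.

2. Client side: responsible for sending messages. The code that makes the method call lives in the specific API class, VolumeAPI in this example, and is usually kept in rpcapi.py.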
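The Server and Client roles described above can be sketched with a small in-memory model. This is only an illustration under stated assumptions: ToyBroker, ToyServer, ToySchedulerAPI and SchedulerManager are hypothetical names, the broker is a plain dictionary rather than Qpid or RabbitMQ, and the fanout queue naming is invented for the example.

```python
from collections import defaultdict


class ToyBroker:
    """Hypothetical in-memory stand-in for the message broker."""

    def __init__(self):
        self.queues = {}                  # queue name -> pending messages
        self.bindings = defaultdict(set)  # routing key -> bound queue names

    def declare_consumer(self, queue_name, routing_key):
        # Creating a consumer creates its queue and binds it by routing key.
        self.queues.setdefault(queue_name, [])
        self.bindings[routing_key].add(queue_name)

    def publish(self, routing_key, message):
        for name in self.bindings[routing_key]:
            self.queues[name].append(message)


class SchedulerManager:
    """Hypothetical manager: the callback object that does the real work."""

    def __init__(self):
        self.created = []

    def create_volume(self, volume_id, size):
        self.created.append((volume_id, size))
        return "scheduled %s (%d GB)" % (volume_id, size)


class ToyServer:
    """Declares two topic consumers and one fanout consumer, then dispatches."""

    def __init__(self, broker, topic, host, manager):
        self.broker = broker
        self.manager = manager
        host_topic = "%s:%s" % (topic, host)
        fanout_queue = "%s_fanout_%s" % (topic, host)  # invented naming
        broker.declare_consumer(topic, topic)
        broker.declare_consumer(host_topic, host_topic)
        broker.declare_consumer(fanout_queue, topic + "_fanout")
        self.queue_names = [topic, host_topic, fanout_queue]

    def consume_once(self):
        # Drain every queue and call the manager method named in each message.
        results = []
        for name in self.queue_names:
            queue = self.broker.queues[name]
            while queue:
                message = queue.pop(0)
                handler = getattr(self.manager, message["method"])
                results.append(handler(**message["args"]))
        return results


class ToySchedulerAPI:
    """Client side: plays the role of the code kept in rpcapi.py."""

    def __init__(self, broker, topic):
        self.broker = broker
        self.topic = topic

    def create_volume(self, volume_id, size):
        self.broker.publish(self.topic, {
            "method": "create_volume",
            "args": {"volume_id": volume_id, "size": size},
        })


broker = ToyBroker()
manager = SchedulerManager()
server = ToyServer(broker, "cinder-scheduler", "host1", manager)
client = ToySchedulerAPI(broker, "cinder-scheduler")

client.create_volume("vol-1", 10)
results = server.consume_once()
print(results)
```

The client only knows the topic name and the method name. Which object actually runs create_volume is decided by whatever manager the server registered when it started.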

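To explain this in detail, we first draw the overall object dependency diagram:

The serve_rpc function

The most important job of serve_rpc is to start the RpcWorker for each plugin.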

neutron/neutron/service.py

The serve_rpc() function:

    def serve_rpc():
        plugin = manager.NeutronManager.get_plugin()

        if cfg.CONF.rpc_workers < 1:
            cfg.CONF.set_override('rpc_workers', 1)

        # If 0 < rpc_workers then start_rpc_listeners would be called in a
        # subprocess and we cannot simply catch the NotImplementedError. It is
        # simpler to check this up front by testing whether the plugin supports
        # multiple RPC workers.
        if not plugin.rpc_workers_supported():
            LOG.debug("Active plugin doesn't implement start_rpc_listeners")
            if 0 < cfg.CONF.rpc_workers:
                LOG.error(_LE("'rpc_workers = %d' ignored because "
                              "start_rpc_listeners is not implemented."),
                          cfg.CONF.rpc_workers)
            raise NotImplementedError()

        try:
            rpc = RpcWorker(plugin)

            # dispose the whole pool before os.fork, otherwise there will
            # be shared DB connections in child processes which may cause
            # DB errors.
            LOG.debug('using launcher for rpc, workers=%s', cfg.CONF.rpc_workers)
            session.dispose()
            launcher = common_service.ProcessLauncher(cfg.CONF, wait_interval=1.0)
            launcher.launch_service(rpc, workers=cfg.CONF.rpc_workers)
            return launcher
        except Exception:
            with excutils.save_and_reraise_exception():
                LOG.exception(_LE('Unrecoverable error: please check log for '
                                  'details.'))

The RpcWorker class:

    class RpcWorker(worker.NeutronWorker):
        """Wraps a worker to be handled by ProcessLauncher"""
        def __init__(self, plugin):
            self._plugin = plugin
            self._servers = []

        def start(self):
            super(RpcWorker, self).start()
            self._servers = self._plugin.start_rpc_listeners()

        def wait(self):
            try:
                self._wait()
            except Exception:
                LOG.exception(_LE('done with wait'))
                raise

        def _wait(self):
            LOG.debug('calling RpcWorker wait()')
            for server in self._servers:
                if isinstance(server, rpc_server.MessageHandlingServer):
                    LOG.debug('calling wait on %s', server)
                    server.wait()
                else:
                    LOG.debug('NOT calling wait on %s', server)
            LOG.debug('returning from RpcWorker wait()')

        def stop(self):
            LOG.debug('calling RpcWorker stop()')
            for server in self._servers:
                if isinstance(server, rpc_server.MessageHandlingServer):
                    LOG.debug('calling stop on %s', server)
                    server.stop()

        @staticmethod
        def reset():
            config.reset_service()

Process analysis

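First, the plugin is loaded according to the core_plugin setting in the configuration file. An RpcWorker is then created and starts listening for RPC messages by calling _plugin.start_rpc_listeners.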
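The worker lifecycle (create the worker with a plugin, start listeners only inside start(), then stop and wait) can be sketched as follows. This is a simplified model under stated assumptions: ToyListener, ToyPlugin and ToyRpcWorker are hypothetical names, and a thread stands in for the child process that the real launcher would fork.

```python
import threading


class ToyListener:
    """Hypothetical stand-in for a message handling server."""

    def __init__(self, name):
        self.name = name
        self._stopped = threading.Event()
        self._thread = None

    def start(self):
        # The "listening loop" here just blocks until stop() is called.
        self._thread = threading.Thread(target=self._stopped.wait)
        self._thread.start()

    def stop(self):
        self._stopped.set()

    def wait(self):
        self._thread.join()


class ToyPlugin:
    """Hypothetical plugin exposing the same entry point name as the text."""

    def start_rpc_listeners(self):
        listeners = [ToyListener("q-plugin")]
        for listener in listeners:
            listener.start()
        return listeners


class ToyRpcWorker:
    """Defers listener creation to start(), as the worker above does."""

    def __init__(self, plugin):
        self._plugin = plugin
        self._servers = []

    def start(self):
        self._servers = self._plugin.start_rpc_listeners()

    def stop(self):
        for server in self._servers:
            server.stop()

    def wait(self):
        for server in self._servers:
            server.wait()


worker = ToyRpcWorker(ToyPlugin())
before_start = len(worker._servers)
worker.start()
running = [server.name for server in worker._servers]
worker.stop()
worker.wait()
print(before_start, running)
```

Nothing listens until start() runs, so the constructor stays cheap and the listeners are created in whichever process or thread actually executes the worker.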

Taking the ml2 plugin as an example, its start_rpc_listeners method creates an instance of the neutron.plugins.ml2.rpc.RpcCallbacks class and creates a dispatcher that handles the 'q-plugin' topic. The ml2 plugin is located in:

neutron/plugins/ml2/plugin.py

The _setup_rpc function, called from start_rpc_listeners, creates the instance of the neutron.plugins.ml2.rpc.RpcCallbacks class:

    def _setup_rpc(self):
        """Initialize components to support agent communication."""
        self.endpoints = [
            rpc.RpcCallbacks(self.notifier, self.type_manager),
            securitygroups_rpc.SecurityGroupServerRpcCallback(),
            dvr_rpc.DVRServerRpcCallback(),
            dhcp_rpc.DhcpRpcCallback(),
            agents_db.AgentExtRpcCallback(),
            metadata_rpc.MetadataRpcCallback(),
            resources_rpc.ResourcesPullRpcCallback()
        ]

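A dispatcher is then created for topic = 'q-plugin' (topics.PLUGIN). As a consumer, it subscribes to the messages that ml2 agents put on the message queue, so that the plugin can receive RPC requests from agents.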

    def start_rpc_listeners(self):
        """Start the RPC loop to let the plugin communicate with agents."""
        self._setup_rpc()
        self.topic = topics.PLUGIN
        self.conn = n_rpc.create_connection(new=True)
        self.conn.create_consumer(self.topic, self.endpoints, fanout=False)
        return self.conn.consume_in_threads()

When ML2Plugin initializes, it creates its own message queue toward the agents (notify, producer; topics.AGENT) so that it can send RPC requests to agents. It also subscribes to the ml2 agent message queue (consumer; topics.PLUGIN) so that it can receive RPC requests from agents.

Likewise, when an ml2 agent initializes, it creates its own message queue (notify, producer; topics.PLUGIN) to send RPC requests to the plugin, and subscribes to the ML2Plugin message queue (consumer; topics.AGENT) to receive RPC requests from the plugin.
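The two-way arrangement described above, where each side produces on one topic and consumes from the other, can be modeled in a few lines. This is a sketch under stated assumptions: Bus and Party are hypothetical names, 'q-plugin' comes from the text, and AGENT_TOPIC is a placeholder value rather than the real value of topics.AGENT.

```python
from collections import defaultdict, deque

PLUGIN_TOPIC = "q-plugin"    # named in the text (topics.PLUGIN)
AGENT_TOPIC = "agent-topic"  # placeholder, not the real topics.AGENT value


class Bus:
    """Hypothetical in-memory message bus: one FIFO queue per topic."""

    def __init__(self):
        self.queues = defaultdict(deque)

    def send(self, topic, message):
        self.queues[topic].append(message)

    def receive(self, topic):
        queue = self.queues[topic]
        return queue.popleft() if queue else None


class Party:
    """Either the plugin or an agent: producer on one topic, consumer on the other."""

    def __init__(self, bus, name, send_topic, listen_topic):
        self.bus = bus
        self.name = name
        self.send_topic = send_topic
        self.listen_topic = listen_topic

    def notify(self, method, **kwargs):
        self.bus.send(self.send_topic,
                      {"from": self.name, "method": method, "args": kwargs})

    def poll(self):
        return self.bus.receive(self.listen_topic)


bus = Bus()
plugin = Party(bus, "plugin", send_topic=AGENT_TOPIC, listen_topic=PLUGIN_TOPIC)
agent = Party(bus, "agent", send_topic=PLUGIN_TOPIC, listen_topic=AGENT_TOPIC)

agent.notify("update_device_up", device="tap0")
plugin.notify("network_delete", network_id="net-1")

to_plugin = plugin.poll()
to_agent = agent.poll()
print(to_plugin["method"], to_agent["method"])
```

The two topics are mirror images: what one side uses as its send topic is exactly what the other side listens on.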

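The producer class of a message queue (xxxxNotifyAPI) and the corresponding consumer class (xxxxRpcCallback) define interface functions with the same names. A function in the producer class mainly makes an RPC call to the same-named function in the consumer class, and the function in the consumer class performs the actual work. For example, if the xxxNotifyAPI class defines network_delete(), then the xxxRpcCallback class also defines network_delete(). xxxNotifyAPI::network_delete() calls xxxRpcCallback::network_delete() over RPC, and xxxRpcCallback::network_delete() performs the actual network deletion.

Producer class (xxxxNotifyAPI):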
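The same-name convention can be illustrated with a minimal in-process sketch. All class names here (ToyRpcClient, ToyNotifyAPI, ToyRpcCallback) are hypothetical, and the client calls the endpoint directly instead of going through a broker, so this shows only the naming and dispatch pattern, not real transport.

```python
class ToyRpcClient:
    """Hypothetical client that looks up the remote method by name."""

    def __init__(self, endpoint):
        self._endpoint = endpoint

    def call(self, context, method, **kwargs):
        # A real client would serialize this and wait for a reply message.
        handler = getattr(self._endpoint, method)
        return handler(context, **kwargs)


class ToyNotifyAPI:
    """Producer side: each method only forwards an RPC with the same name."""

    def __init__(self, client):
        self.client = client

    def network_delete(self, context, network_id):
        return self.client.call(context, "network_delete",
                                network_id=network_id)


class ToyRpcCallback:
    """Consumer side: the same-named method does the actual work."""

    def __init__(self, networks):
        self.networks = networks

    def network_delete(self, context, **kwargs):
        network_id = kwargs.get("network_id")
        # Returns True only if the network existed and was removed.
        return self.networks.pop(network_id, None) is not None


networks = {"net-1": {"name": "demo"}}
api = ToyNotifyAPI(ToyRpcClient(ToyRpcCallback(networks)))

deleted = api.network_delete({}, "net-1")
deleted_again = api.network_delete({}, "net-1")
print(deleted, deleted_again, networks)
```

Because the method name is the only contract between the two classes, a typo in the string passed to call() shows up as a lookup failure on the consumer side rather than as an error in the producer.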

/neutron/neutron/agent/rpc.py

    def update_device_up(self, context, device, agent_id, host=None):
        cctxt = self.client.prepare()
        return cctxt.call(context, 'update_device_up', device=device,
                          agent_id=agent_id, host=host)

Consumer class (xxxxRpcCallback):

/neutron/neutron/plugins/ml2/rpc.py

    def update_device_up(self, rpc_context, **kwargs):
        """Device is up on agent."""
        agent_id = kwargs.get('agent_id')
        device = kwargs.get('device')
        host = kwargs.get('host')
        LOG.debug("Device %(device)s up at agent %(agent_id)s",
                  {'device': device, 'agent_id': agent_id})
        plugin = manager.NeutronManager.get_plugin()
        port_id = plugin._device_to_port_id(rpc_context, device)
        if (host and not plugin.port_bound_to_host(rpc_context,
                                                   port_id, host)):
            LOG.debug("Device %(device)s not bound to the"
                      " agent host %(host)s",
                      {'device': device, 'host': host})
            return

        port_id = plugin.update_port_status(rpc_context, port_id,
                                            n_const.PORT_STATUS_ACTIVE,
                                            host)
        try:
            # NOTE(armax): it's best to remove all objects from the
            # session, before we try to retrieve the new port object
            rpc_context.session.expunge_all()
            port = plugin._get_port(rpc_context, port_id)
        except exceptions.PortNotFound:
            LOG.debug('Port %s not found during update', port_id)
        else:
            kwargs = {
                'context': rpc_context,
                'port': port,
                'update_device_up': True
            }
            registry.notify(
                resources.PORT, events.AFTER_UPDATE, plugin, **kwargs)

The methods of the RpcCallbacks class correspond to the methods of neutron.agent.rpc.PluginApi.
