In earlier articles we used FFmpeg to implement a range of features on Windows. In this series we port them to Android.

We use the latest versions available at the time of writing:

FFmpeg: FFmpeg 3.3

Android Studio: Android Studio 2.3

JNI build system: CMake (the newer approach)

Android developers who are less familiar with C/C++/JNI may want to read these first:

C language summary: http://blog.csdn.net/king1425/article/details/70256764

C++ summary (part 1): http://blog.csdn.net/king1425/article/details/70260091

Advanced JNI summary: http://blog.csdn.net/king1425/article/details/71405131

Now let's implement today's feature: decoding a video file to raw YUV on Android.

1. Build FFmpeg for Android and port it into Android Studio

See:

Building the latest FFmpeg 3.3 for Android on Windows and porting it into Android Studio 2.3 with CMake

http://blog.csdn.net/king1425/article/details/70338674

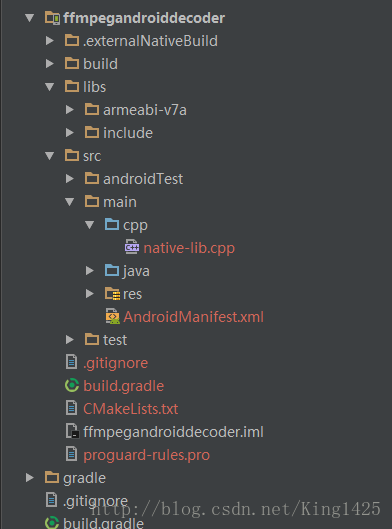

2. Make a copy of the project from step 1 (the one where the port succeeded) and name it ffmpegandroiddecoder

3. Remove the code you no longer need, confirm the project still works, then write the layout and the activity logic

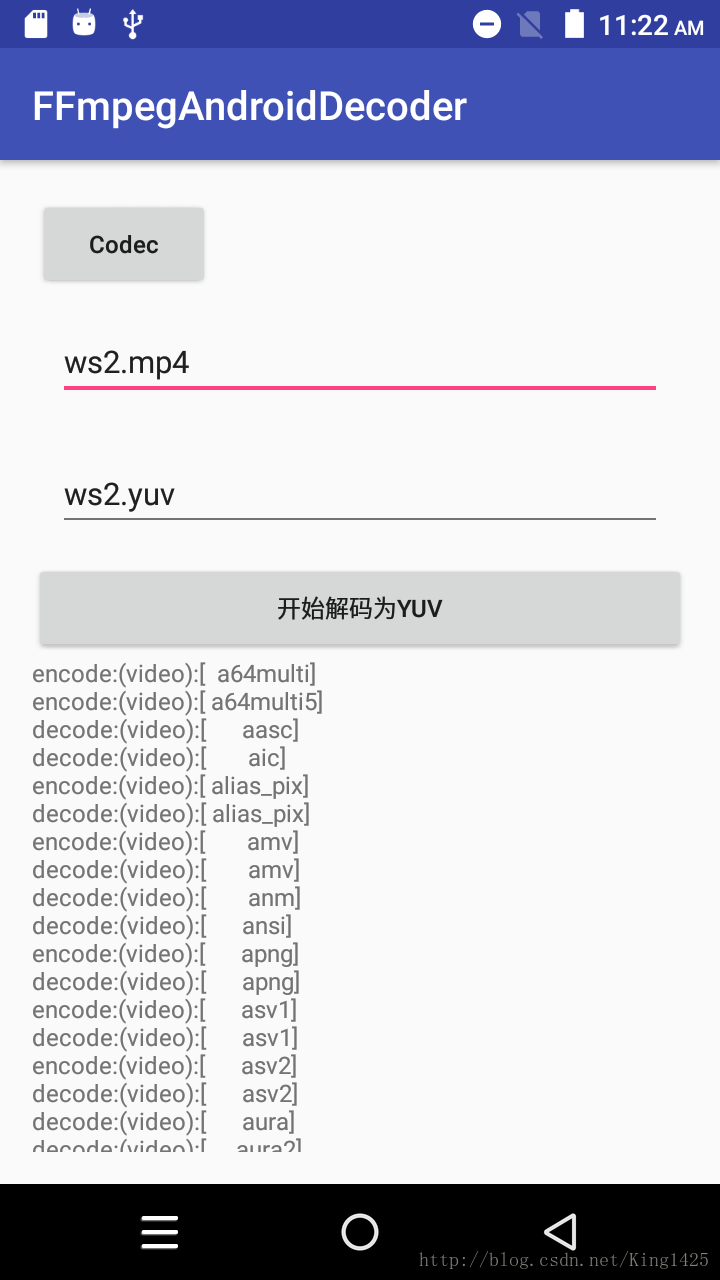

If tapping the Codec button shows a list like the one above, everything is working.

Here is the relevant code, starting with the layout (`res/layout/activity_main.xml`):

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:id="@+id/activity_main"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical"
    android:paddingBottom="@dimen/activity_vertical_margin"
    android:paddingLeft="@dimen/activity_horizontal_margin"
    android:paddingRight="@dimen/activity_horizontal_margin"
    android:paddingTop="@dimen/activity_vertical_margin">

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:orientation="horizontal">

        <!-- Lists the codecs compiled into the FFmpeg build -->
        <Button
            android:id="@+id/btn_codec"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:layout_margin="2dp"
            android:text="Codec"
            android:textAllCaps="false" />

    </LinearLayout>

    <!-- Input video file name, relative to the external storage root -->
    <EditText
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:layout_margin="12dp"
        android:text="ws2.mp4"
        android:id="@+id/editText1" />

    <!-- Output YUV file name, relative to the external storage root -->
    <EditText
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:layout_margin="12dp"
        android:text="ws2.yuv"
        android:id="@+id/editText2" />

    <Button
        android:text="Decode to YUV"
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:id="@+id/button" />

    <ScrollView
        android:layout_width="match_parent"
        android:layout_height="wrap_content">

        <TextView
            android:id="@+id/tv_info"
            android:layout_width="match_parent"
            android:layout_height="wrap_content"
            android:text="Hello World!" />

    </ScrollView>

</LinearLayout>
```

The activity (`MainActivity.java`):

```java
package com.ws.ffmpegandroiddecoder;

import android.os.Bundle;
import android.os.Environment;
import android.support.v7.app.AppCompatActivity;
import android.util.Log;
import android.view.View;
import android.widget.Button;
import android.widget.EditText;
import android.widget.TextView;

public class MainActivity extends AppCompatActivity implements View.OnClickListener {

    // Used to load the 'native-lib' library on application startup.
    static {
        System.loadLibrary("native-lib");
    }

    private Button codec;
    private TextView tv_info;
    private EditText editText1, editText2;
    private Button btnDecoder;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        init();
    }

    private void init() {
        codec = (Button) findViewById(R.id.btn_codec);
        tv_info = (TextView) findViewById(R.id.tv_info);
        editText1 = (EditText) findViewById(R.id.editText1);
        editText2 = (EditText) findViewById(R.id.editText2);
        btnDecoder = (Button) findViewById(R.id.button);
        codec.setOnClickListener(this);
        btnDecoder.setOnClickListener(this);
    }

    @Override
    public void onClick(View view) {
        switch (view.getId()) {
            case R.id.btn_codec:
                // Show every codec compiled into this FFmpeg build
                tv_info.setText(avcodecinfo());
                break;
            case R.id.button:
                startDecode();
                break;
            default:
                break;
        }
    }

    private void startDecode() {
        // Input and output files live in the root of external storage (the "SD card")
        String folderurl = Environment.getExternalStorageDirectory().getPath();
        final String inputurl = folderurl + "/" + editText1.getText().toString();
        final String outputurl = folderurl + "/" + editText2.getText().toString();
        Log.e("ws-----------inputurl", inputurl);
        Log.e("ws------------outputurl", outputurl);
        // Decoding a whole file can take a long time, so keep it off the UI thread
        new Thread(new Runnable() {
            @Override
            public void run() {
                final int ret = decode(inputurl, outputurl);
                runOnUiThread(new Runnable() {
                    @Override
                    public void run() {
                        tv_info.setText(ret == 0 ? "Decode finished" : "Decode failed: " + ret);
                    }
                });
            }
        }).start();
    }

    // Returns 0 on success and -1 on failure; must match the jint returned by the native code
    public native int decode(String inputurl, String outputurl);

    public native String avcodecinfo();
}
```
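The two `native` declarations above are bound to C functions purely by name: the JVM looks for `Java_`, then the fully qualified class name with package separators turned into underscores, then `_` and the method name. That is why the native code in step 4 exports `Java_com_ws_ffmpegandroiddecoder_MainActivity_decode`, and why renaming the package or class means renaming the C functions too. Below is a minimal C++ sketch of the JNI "short name" mangling rules, assuming ASCII-only identifiers and no overloaded native methods (overloads need the longer form with an argument signature, which this sketch omits). `mangle` and `jni_symbol` are hypothetical helpers for illustration, not part of the project.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Escape one Java identifier path using the JNI short-name rules (ASCII-only sketch):
//   '.' or '/' -> '_'      (package separators)
//   '_'        -> "_1"
//   ';'        -> "_2"
//   '['        -> "_3"
//   other      -> "_0xxxx" (4 lowercase hex digits, e.g. '$' -> "_00024")
std::string mangle(const std::string &s) {
    std::string out;
    for (char c : s) {
        unsigned char u = static_cast<unsigned char>(c);
        assert(u < 0x80 && "this sketch only handles ASCII names");
        if ((u >= 'a' && u <= 'z') || (u >= 'A' && u <= 'Z') || (u >= '0' && u <= '9')) {
            out += c;
        } else if (c == '.' || c == '/') {
            out += '_';
        } else if (c == '_') {
            out += "_1";
        } else if (c == ';') {
            out += "_2";
        } else if (c == '[') {
            out += "_3";
        } else {
            char buf[8];
            std::snprintf(buf, sizeof(buf), "_0%04x", static_cast<unsigned>(u));
            out += buf;
        }
    }
    return out;
}

// Java_<mangled class name>_<mangled method name>
std::string jni_symbol(const std::string &className, const std::string &method) {
    return "Java_" + mangle(className) + "_" + mangle(method);
}
```

For example, `jni_symbol("com.ws.ffmpegandroiddecoder.MainActivity", "decode")` yields `Java_com_ws_ffmpegandroiddecoder_MainActivity_decode`, the exact name used in the native code. An underscore in a package name becomes `_1`, so `my_pkg` would appear as `my_1pkg` in the symbol.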

4. Implement the native method `decode(String inputurl, String outputurl)`

If you are not familiar with the FFmpeg API, read this first: FFmpeg source analysis (1), architecture overview: http://blog.csdn.net/king1425/article/details/70597642
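The decoder below times itself with `clock()`. Two details matter when reading that number. First, `clock()` reports processor time used by the process, not wall-clock time. Second, its unit is `1/CLOCKS_PER_SEC` seconds, so a raw difference of two `clock()` readings is a tick count and must be scaled by `1000.0 / CLOCKS_PER_SEC` before it can be printed as milliseconds. A minimal C++ sketch showing both measurements; `cpu_ms` and `wall_ms_since` are hypothetical helper names, not part of the project.

```cpp
#include <cassert>
#include <chrono>
#include <ctime>

// Convert the difference of two clock() readings (processor time) to milliseconds.
double cpu_ms(std::clock_t start, std::clock_t finish) {
    assert(finish >= start);
    return static_cast<double>(finish - start) * 1000.0 / CLOCKS_PER_SEC;
}

// Wall-clock milliseconds elapsed since `start`, measured with a monotonic clock.
double wall_ms_since(std::chrono::steady_clock::time_point start) {
    auto elapsed = std::chrono::steady_clock::now() - start;
    return std::chrono::duration<double, std::milli>(elapsed).count();
}
```

In the decoder, `time_duration = cpu_ms(time_start, time_finish);` would give a value that really is in milliseconds. The two measurements can differ: wall time also includes time spent blocked on file I/O, while CPU time can exceed wall time if the codec decodes on several threads.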

```cpp
#include <jni.h>
#include <string>
#include <cstdarg>
#include <cstdio>
#include <cstring>
#include <ctime>

extern "C"
{
#include <libavutil/log.h>
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#include <libavutil/imgutils.h>
#include <android/log.h>

#define LOGE(format, ...) __android_log_print(ANDROID_LOG_ERROR, "(>_<)", format, ##__VA_ARGS__)
#define LOGI(format, ...) __android_log_print(ANDROID_LOG_INFO, "(^_^)", format, ##__VA_ARGS__)

//Output FFmpeg's av_log() to a file on external storage
void custom_log(void *ptr, int level, const char *fmt, va_list vl) {
    FILE *fp = fopen("/storage/emulated/0/av_log.txt", "a+");
    if (fp) {
        vfprintf(fp, fmt, vl);
        fflush(fp);
        fclose(fp);
    }
}

// Append formatted text to the end of a fixed-size buffer.
// sprintf(info, "%s...", info, ...) reads and writes the same buffer, which is undefined behavior.
static void append_info(char *buf, size_t cap, const char *fmt, ...) {
    size_t len = strlen(buf);
    if (len + 1 >= cap) return;  // buffer already full
    va_list vl;
    va_start(vl, fmt);
    vsnprintf(buf + len, cap - len, fmt, vl);
    va_end(vl);
}

// Convert one decoded frame to YUV420P, append it to fp_yuv and log its picture type
static void write_frame(struct SwsContext *img_convert_ctx, AVCodecContext *pCodecCtx,
                        AVFrame *pFrame, AVFrame *pFrameYUV, FILE *fp_yuv, int frame_cnt) {
    sws_scale(img_convert_ctx, (const uint8_t *const *) pFrame->data, pFrame->linesize, 0,
              pCodecCtx->height, pFrameYUV->data, pFrameYUV->linesize);
    // pFrameYUV was set up with align = 1, so its planes have no row padding.
    // Chroma planes are half width and half height, rounded up.
    int y_size = pCodecCtx->width * pCodecCtx->height;
    int uv_size = ((pCodecCtx->width + 1) / 2) * ((pCodecCtx->height + 1) / 2);
    fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);  //Y
    fwrite(pFrameYUV->data[1], 1, uv_size, fp_yuv); //U
    fwrite(pFrameYUV->data[2], 1, uv_size, fp_yuv); //V

    const char *pictype_str;
    switch (pFrame->pict_type) {
        case AV_PICTURE_TYPE_I: pictype_str = "I"; break;
        case AV_PICTURE_TYPE_P: pictype_str = "P"; break;
        case AV_PICTURE_TYPE_B: pictype_str = "B"; break;
        default: pictype_str = "Other"; break;
    }
    LOGI("Frame Index: %5d. Type:%s", frame_cnt, pictype_str);
}

JNIEXPORT jint JNICALL
Java_com_ws_ffmpegandroiddecoder_MainActivity_decode(
        JNIEnv *env,
        jobject, jstring input_jstr, jstring output_jstr) {
    AVFormatContext *pFormatCtx;
    int i, videoindex;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame, *pFrameYUV;
    uint8_t *out_buffer;
    AVPacket *packet;
    int ret, got_picture;
    int result = 0;
    struct SwsContext *img_convert_ctx;
    FILE *fp_yuv;
    int frame_cnt;
    clock_t time_start, time_finish;
    double time_duration = 0.0;

    char input_str[500] = {0};
    char output_str[500] = {0};
    char info[1000] = {0};

    // Copy the Java strings into bounded C buffers and release the JNI copies
    const char *input_utf = env->GetStringUTFChars(input_jstr, NULL);
    const char *output_utf = env->GetStringUTFChars(output_jstr, NULL);
    snprintf(input_str, sizeof(input_str), "%s", input_utf);
    snprintf(output_str, sizeof(output_str), "%s", output_utf);
    env->ReleaseStringUTFChars(input_jstr, input_utf);
    env->ReleaseStringUTFChars(output_jstr, output_utf);

    //FFmpeg av_log() callback
    av_log_set_callback(custom_log);

    av_register_all();
    avformat_network_init();
    pFormatCtx = avformat_alloc_context();

    if (avformat_open_input(&pFormatCtx, input_str, NULL, NULL) != 0) {
        LOGE("Couldn't open input stream.\n");
        return -1;
    }
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("Couldn't find stream information.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    videoindex = -1;
    for (i = 0; i < (int) pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoindex = i;
            break;
        }
    }
    if (videoindex == -1) {
        LOGE("Couldn't find a video stream.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    // AVStream::codec is deprecated in FFmpeg 3.x but still available in 3.3
    pCodecCtx = pFormatCtx->streams[videoindex]->codec;
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        LOGE("Couldn't find Codec.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        LOGE("Couldn't open codec.\n");
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    fp_yuv = fopen(output_str, "wb+");
    if (fp_yuv == NULL) {
        LOGE("Cannot open output file.\n");
        avcodec_close(pCodecCtx);
        avformat_close_input(&pFormatCtx);
        return -1;
    }

    pFrame = av_frame_alloc();
    pFrameYUV = av_frame_alloc();
    // One contiguous YUV420P buffer (align = 1, no row padding) backing pFrameYUV
    out_buffer = (uint8_t *) av_malloc(av_image_get_buffer_size(AV_PIX_FMT_YUV420P,
                                                                pCodecCtx->width, pCodecCtx->height, 1));
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer,
                         AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);
    // av_packet_alloc() returns a packet with all fields initialized
    packet = av_packet_alloc();
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                                     pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P,
                                     SWS_BICUBIC, NULL, NULL, NULL);

    append_info(info, sizeof(info), "[Input ]%s\n", input_str);
    append_info(info, sizeof(info), "[Output ]%s\n", output_str);
    append_info(info, sizeof(info), "[Format ]%s\n", pFormatCtx->iformat->name);
    append_info(info, sizeof(info), "[Codec ]%s\n", pCodecCtx->codec->name);
    append_info(info, sizeof(info), "[Resolution]%dx%d\n", pCodecCtx->width, pCodecCtx->height);

    frame_cnt = 0;
    time_start = clock();
    while (av_read_frame(pFormatCtx, packet) >= 0) {
        if (packet->stream_index == videoindex) {
            ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
            if (ret < 0) {
                LOGE("Decode Error.\n");
                av_packet_unref(packet);
                result = -1;
                break;
            }
            if (got_picture) {
                write_frame(img_convert_ctx, pCodecCtx, pFrame, pFrameYUV, fp_yuv, frame_cnt);
                frame_cnt++;
            }
        }
        av_packet_unref(packet);
    }

    // Flush: an empty packet (data = NULL, size = 0) makes the decoder return the frames it still holds
    packet->data = NULL;
    packet->size = 0;
    while (result == 0) {
        ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);
        if (ret < 0 || !got_picture)
            break;
        write_frame(img_convert_ctx, pCodecCtx, pFrame, pFrameYUV, fp_yuv, frame_cnt);
        frame_cnt++;
    }

    time_finish = clock();
    // clock() counts processor time in units of 1/CLOCKS_PER_SEC seconds; convert to milliseconds
    time_duration = (double) (time_finish - time_start) * 1000.0 / CLOCKS_PER_SEC;
    append_info(info, sizeof(info), "[Time ]%fms\n", time_duration);
    append_info(info, sizeof(info), "[Count ]%d\n", frame_cnt);
    LOGI("%s", info);

    sws_freeContext(img_convert_ctx);
    fclose(fp_yuv);
    av_packet_free(&packet);
    av_free(out_buffer);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avformat_close_input(&pFormatCtx);
    return result;
}

JNIEXPORT jstring JNICALL
Java_com_ws_ffmpegandroiddecoder_MainActivity_avcodecinfo(
        JNIEnv *env, jobject) {
    char info[40000] = {0};
    av_register_all();
    // Walk the list of registered codecs (av_codec_next() is the FFmpeg 3.x iteration API)
    AVCodec *c_temp = av_codec_next(NULL);
    while (c_temp != NULL) {
        append_info(info, sizeof(info), "%s", av_codec_is_decoder(c_temp) ? "decode:" : "encode:");
        switch (c_temp->type) {
            case AVMEDIA_TYPE_VIDEO:
                append_info(info, sizeof(info), "(video):");
                break;
            case AVMEDIA_TYPE_AUDIO:
                append_info(info, sizeof(info), "(audio):");
                break;
            default:
                append_info(info, sizeof(info), "(other):");
                break;
        }
        append_info(info, sizeof(info), "[%10s]\n", c_temp->name);
        c_temp = av_codec_next(c_temp);
    }
    return env->NewStringUTF(info);
}

}
```
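The decoder writes each frame with three `fwrite` calls whose sizes come from the frame's width and height rather than from `linesize`. That is safe only because `pFrameYUV` is backed by a buffer laid out by `av_image_fill_arrays(..., 1)`: with an alignment of 1, every plane's `linesize` equals its width. Frames straight out of the decoder (`pFrame`) often have padded rows (`linesize[i]` larger than the plane width), and writing those directly requires copying row by row. A minimal C++ sketch of such a writer, assuming a planar YUV420P frame; the `Yuv420pFrame` struct and `write_yuv420p` are hypothetical stand-ins for `AVFrame::data` / `AVFrame::linesize`, and the output goes to a `std::vector` instead of a `FILE *` so it is easy to inspect.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the parts of an AVFrame this sketch needs.
struct Yuv420pFrame {
    const uint8_t *data[3]; // Y, U, V plane pointers
    int linesize[3];        // bytes per row in each plane, padding included
    int width;
    int height;
};

// Append the visible pixels of one frame to `out` as raw YUV420P
// (all Y rows, then all U rows, then all V rows), skipping row padding.
void write_yuv420p(const Yuv420pFrame &f, std::vector<uint8_t> &out) {
    for (int plane = 0; plane < 3; ++plane) {
        // Chroma planes are half width and half height, rounded up
        int w = plane == 0 ? f.width : (f.width + 1) / 2;
        int h = plane == 0 ? f.height : (f.height + 1) / 2;
        assert(f.linesize[plane] >= w);
        for (int row = 0; row < h; ++row) {
            const uint8_t *src = f.data[plane] + static_cast<std::size_t>(row) * f.linesize[plane];
            out.insert(out.end(), src, src + w);
        }
    }
}
```

With a `FILE *`, the inner `insert` would become `fwrite(src, 1, w, fp_yuv)`. For a 4×2 frame whose Y rows are padded to 8 bytes, the writer emits 4×2 + 2×1 + 2×1 = 12 bytes and drops the padding.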

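5. Copy the test video to the root of the SD card (external storage), then build and run. The file name must match the first input box, which defaults to ws2.mp4. The app also needs the `READ_EXTERNAL_STORAGE`/`WRITE_EXTERNAL_STORAGE` permissions to read the input and write the output there; on Android 6.0 and later they must also be granted at runtime.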

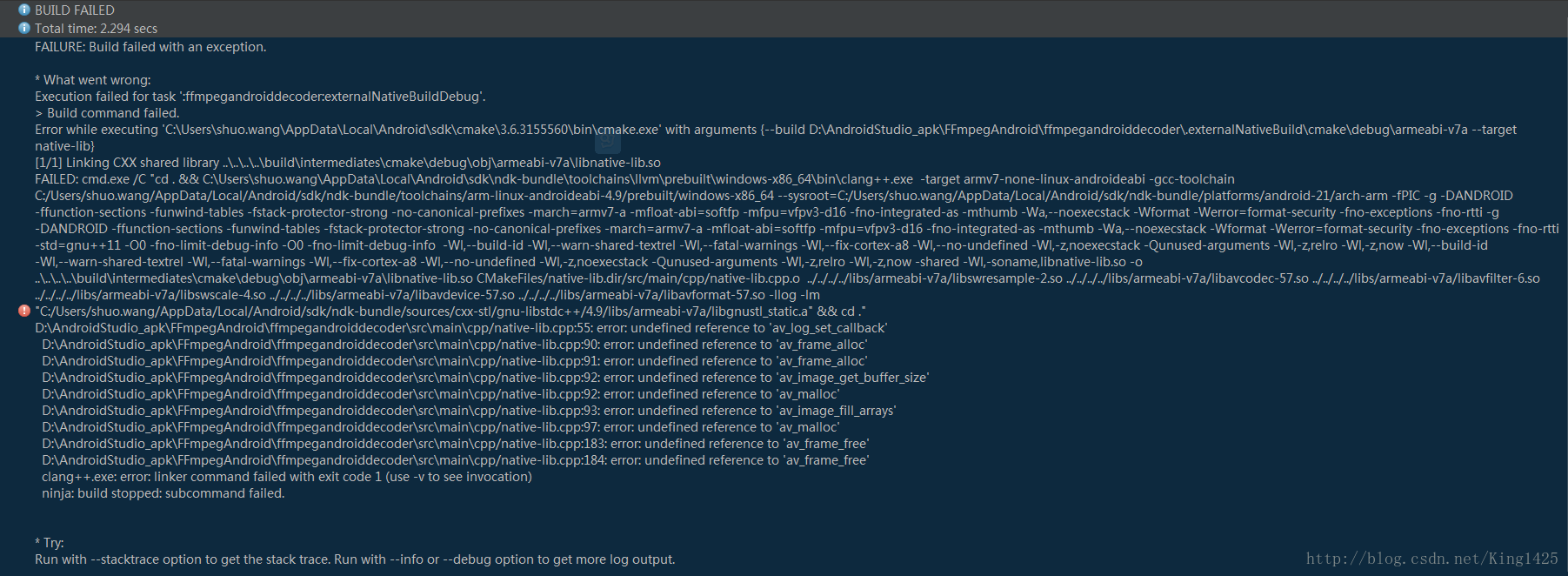

Game over: the build fails (⊙o⊙). Don't panic. These are linker errors, so the problem is in the CMakeLists.txt configuration.

Starting from the target_link_libraries set up in the previous article, change it as follows:

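```cmake
# Link native-lib against the FFmpeg 3.3 shared libraries and the Android log library
target_link_libraries( native-lib
                       avutil-55
                       swresample-2
                       avcodec-57
                       avfilter-6
                       swscale-4
                       avdevice-57
                       avformat-57
                       ${log-lib} )
```

6. Tap the decode button, then check that a new ws2.yuv file (the name in the second input box) has appeared in the SD card root.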
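A `.yuv` file written this way is raw YUV420P with no header, so its size should be an exact multiple of one frame: `w*h + 2*ceil(w/2)*ceil(h/2)` bytes, which is `w*h*3/2` for even dimensions. Dividing the file size by that gives a quick sanity check against the number of `Frame Index` lines the decoder logs. A minimal C++ sketch, assuming you know the video's resolution; `yuv420p_frame_size` and `frames_in_file` are hypothetical helper names.

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>
#include <string>

// Bytes in one YUV420P frame: a full-size Y plane plus two chroma planes
// of half width and half height (rounded up for odd dimensions).
int64_t yuv420p_frame_size(int width, int height) {
    int64_t cw = (width + 1) / 2, ch = (height + 1) / 2;
    return static_cast<int64_t>(width) * height + 2 * cw * ch;
}

// Number of whole frames in a raw YUV420P file, or -1 if the file can't be opened
// or its size is not an exact multiple of the frame size.
int64_t frames_in_file(const std::string &path, int width, int height) {
    std::ifstream in(path, std::ios::binary | std::ios::ate); // open positioned at the end
    if (!in) return -1;
    int64_t size = static_cast<int64_t>(in.tellg());
    int64_t frame = yuv420p_frame_size(width, height);
    assert(frame > 0);
    if (size < 0 || size % frame != 0) return -1;
    return size / frame;
}
```

For example, a 1280×720 clip gives 1,382,400 bytes per frame, so a 10-frame output should be exactly 13,824,000 bytes. A size that is not a whole multiple usually means the resolution assumed for the check is wrong.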

OK, it works!

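Please read this post side by side with the source code; otherwise it's like the blind men trying to describe an elephant (⊙o⊙)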

Source code: https://github.com/WangShuo1143368701/FFmpegAndroid/tree/master/ffmpegandroiddecoder