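It has been a long time since I wrote anything, and I feel like I have fallen behind the times. Luckily my senior and junior labmates keep pulling me along.

Today I read a few papers on entity relation extraction. Brief notes below.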

(1)

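Paper: Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification

Authors: Institute of Automation, Chinese Academy of Sciences; Zhou et al.

Model: Att+BLSTM

Summary: Each word is embedded and fed through a bidirectional LSTM. An attention layer learns a weight for each word, and the weighted sum gives a single sentence vector, which is then classified. One sentence corresponds to one relation.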
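To make the word-level attention concrete, here is a minimal NumPy sketch of my reading of the attention layer: `M = tanh(H)`, `alpha = softmax(w^T M)`, `r = H alpha^T`, `h* = tanh(r)`. The function names, the shapes, and the random inputs standing in for BLSTM outputs are my own assumptions, not the authors' code.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def word_attention(H, w):
    """Pool word-level hidden states into one sentence vector.

    H: (T, d) array, one hidden state per word (stand-in for BLSTM outputs).
    w: (d,) attention parameter vector (learned in a real model).
    Returns the sentence vector h_star (d,) and the word weights alpha (T,).
    """
    M = np.tanh(H)             # (T, d)
    alpha = softmax(M @ w)     # (T,) one weight per word, sums to 1
    r = alpha @ H              # (d,) weighted sum of hidden states
    return np.tanh(r), alpha

# Toy usage: 5 words, hidden size 4, random values instead of a trained model.
rng = np.random.default_rng(0)
H = rng.normal(size=(5, 4))
w = rng.normal(size=4)
h_star, alpha = word_attention(H, w)
print(alpha.round(3), h_star.shape)
```

In the full model, `h_star` would go into a softmax classifier over the relation labels.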

(2)

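Paper: Neural Relation Extraction with Selective Attention over Instances

Authors: Tsinghua University; Lin et al. 2016

Model: CNN+ATT / PCNN+ATT

Summary: Sentence-level attention. Each sentence is first encoded into a vector, then the sentence vectors are combined into a relation representation. Attention learns the weight of each sentence, so the final output is one relation representation for a whole set of sentences (all mentioning the same entity pair).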
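A rough NumPy sketch of the sentence-level step, as I understand it: each sentence vector `x_i` gets a score `e_i = x_i A r` (with `A` a diagonal weight matrix and `r` a relation query vector), the scores are softmax-normalized, and the bag representation is the weighted sum `s`. The function names and the random sentence vectors (standing in for CNN/PCNN encoder outputs) are assumptions for illustration only.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def selective_attention(X, a_diag, r):
    """Combine a bag of sentence vectors into one representation.

    X: (n, d) array, one encoded sentence per row (stand-in for CNN/PCNN output).
    a_diag: (d,) diagonal of the weight matrix A.
    r: (d,) query vector for the relation.
    Returns the bag vector s (d,) and the sentence weights alpha (n,).
    """
    scores = (X * a_diag) @ r   # e_i = x_i A r, with A diagonal
    alpha = softmax(scores)     # (n,) one weight per sentence
    s = alpha @ X               # (d,) weighted sum over the bag
    return s, alpha

# Toy usage: a bag of 3 sentences with encoder size 4.
rng = np.random.default_rng(0)
X = rng.normal(size=(3, 4))
s, alpha = selective_attention(X, np.ones(4), rng.normal(size=4))
print(alpha.round(3), s.shape)
```

Noisy sentences in the bag should end up with small `alpha`, which is the whole point of the selective attention. The bag vector `s` then goes through a linear layer and a softmax over relations.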

https://github.com/thunlp/TensorFlow-NRE

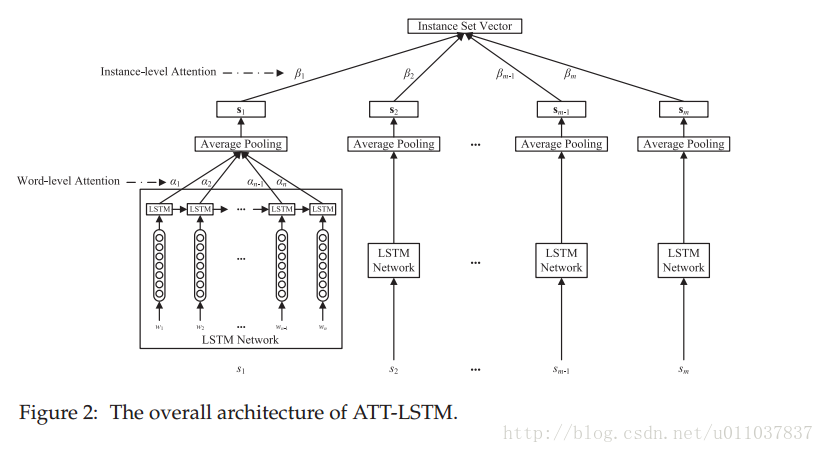

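(4) A Customized Attention-Based Long Short-Term Memory Network for Distant Supervised Relation Extraction

Uses two levels of attention: one from the words to the related entities, and one from the related entities to the sentences (the set of sentences for that entity pair). The attention formula follows Lin et al.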
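A loose sketch of how the two levels might stack, assuming both use the same bilinear scoring form as Lin et al. (`score = x A q`, with `A` diagonal). I have not checked the exact queries the paper uses, so `entity_query`, `bag_query`, and all function names here are hypothetical placeholders.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def bilinear_attention(items, a_diag, query):
    """Score each row of items with x A q (A diagonal), then take the weighted sum."""
    alpha = softmax((items * a_diag) @ query)
    return alpha @ items, alpha

def two_level_attention(bag, entity_query, bag_query, a_word, a_sent):
    """bag: list of (T_i, d) arrays of word-level hidden states, one per sentence.

    Level 1 (hypothetical query): words attend with respect to the entity pair.
    Level 2 (hypothetical query): sentences attend with respect to the bag.
    """
    sent_vecs = np.stack(
        [bilinear_attention(H, a_word, entity_query)[0] for H in bag]
    )                                                    # (n, d)
    return bilinear_attention(sent_vecs, a_sent, bag_query)

# Toy usage: 3 sentences of different lengths, hidden size 4.
rng = np.random.default_rng(0)
bag = [rng.normal(size=(T, 4)) for T in (5, 7, 3)]
bag_vec, sent_alpha = two_level_attention(
    bag, rng.normal(size=4), rng.normal(size=4), np.ones(4), np.ones(4)
)
print(sent_alpha.round(3), bag_vec.shape)
```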