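Introduction to ELK

The three core components and what they do

Elasticsearch is an open-source distributed search engine. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replication, a RESTful interface, multiple data sources, and automatic search load balancing.

Logstash is a fully open-source tool that collects and filters your logs and stores them for later use.

Kibana is also a free, open-source tool. It provides a friendly web interface for analyzing the logs held by Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.

Extension:

Beats is a family of lightweight data shippers. The Beats platform bundles several single-purpose shippers. Once installed, they act as lightweight agents that send data from hundreds or thousands of machines to Logstash or Elasticsearch.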

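Workflow

Deploy Logstash on every server whose logs need to be collected. There it runs as a Logstash agent (Logstash shipper) that monitors, filters, and collects the logs, then sends the filtered content to Redis. A Logstash indexer gathers the logs from Redis and hands them to the full-text search service Elasticsearch. You can run custom searches in Elasticsearch and use Kibana to present the results of those searches on web pages.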
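
The flow above can be pictured with a toy in-memory model. This is only a sketch under loud assumptions: a `deque` stands in for Redis, a plain dict stands in for Elasticsearch, and the names `shipper`, `indexer`, and the "drop blank lines" filter rule are hypothetical choices for illustration, not Logstash APIs.

```python
from collections import deque


def shipper(lines, broker):
    """Filter raw log lines and push the survivors onto the broker queue."""
    for line in lines:
        line = line.strip()
        if line:  # hypothetical filter rule: drop blank lines
            broker.append(line)


def indexer(broker, store):
    """Drain the broker and store each event under an incrementing id."""
    while broker:
        store[len(store) + 1] = broker.popleft()


broker = deque()  # stands in for Redis
store = {}        # stands in for Elasticsearch

shipper(["boot ok\n", "\n", "disk warning\n"], broker)
indexer(broker, store)
print(store)
```

The queue in the middle is the point of the design: shippers and the indexer never talk to each other directly, so either side can be restarted without the other noticing.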

Building the ELK log analysis platform

Lab environment: Red Hat 6.5, with the firewall and SELinux disabled

server2 172.25.60.2 elasticsearch-master

server1 172.25.60.1 logstash-slave

server3 172.25.60.3 Kibana-slave

On server2:

[root@server2 ~]# ls

elasticsearch-2.3.3.rpm jdk-8u121-linux-x64.rpm

elasticsearch-head-master.zip

[root@server2 ~]# rpm -ivh elasticsearch-2.3.3.rpm

Elasticsearch depends on a Java runtime:

[root@server2 ~]# /etc/init.d/elasticsearch start

which: no java in (/sbin:/usr/sbin:/bin:/usr/bin)

Could not find any executable java binary. Please install java in your PATH or set JAVA_HOME

Install Java:

[root@server2 ~]# rpm -ivh jdk-8u121-linux-x64.rpm

[root@server2 ~]# cd /etc/elasticsearch/

[root@server2 elasticsearch]# ls

elasticsearch.yml logging.yml scripts

[root@server2 elasticsearch]# vim elasticsearch.yml

cluster.name: my-westos ## name of the cluster

node.name: server2 ## name of this node (the local hostname)

path.data: /var/lib/elasticsearch/ ## Elasticsearch data directory

path.logs: /var/log/elasticsearch ## Elasticsearch log directory

bootstrap.mlockall: true ## lock the process memory in RAM so that it is not swapped out

network.host: 172.25.60.2

http.port: 9200 ## HTTP port of Elasticsearch

[root@server2 elasticsearch]# /etc/init.d/elasticsearch start

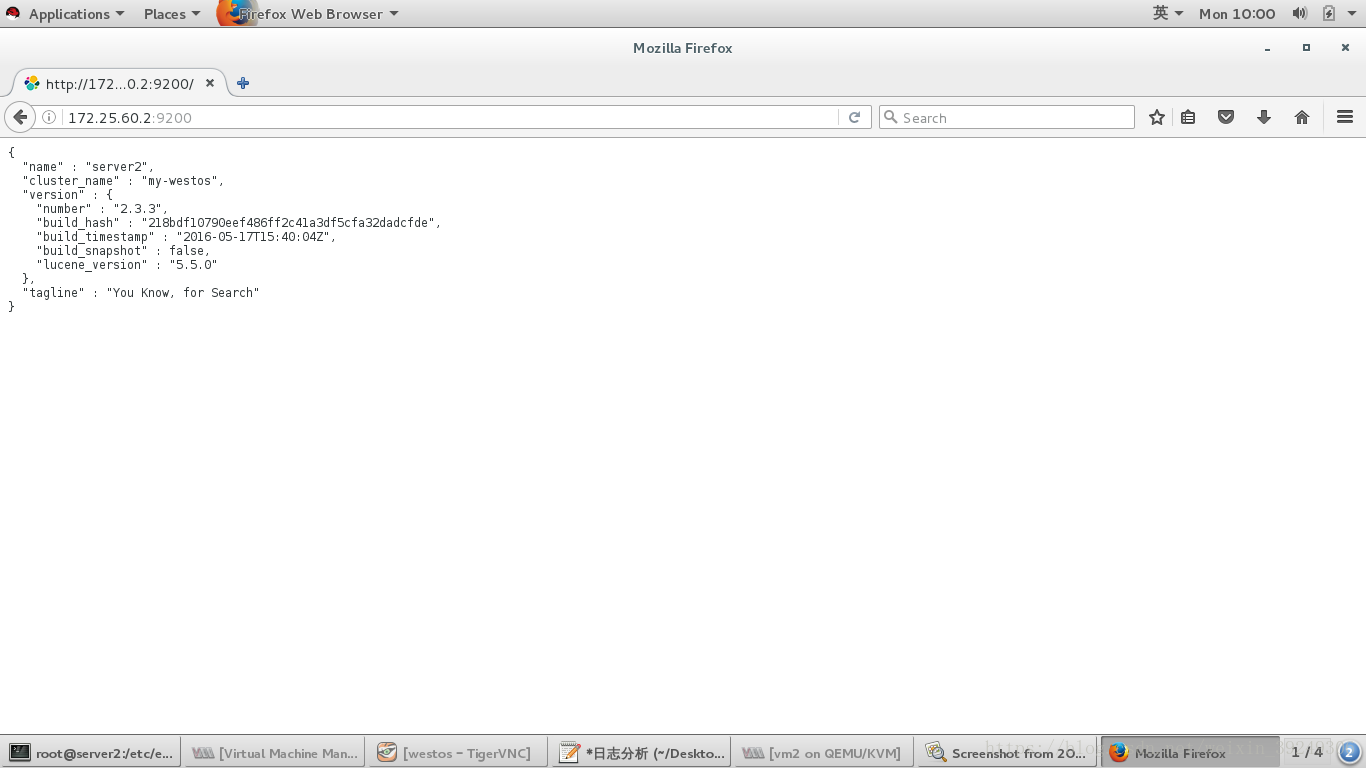

Starting elasticsearch: [ OK ]

Test in a browser: 172.25.60.2:9200

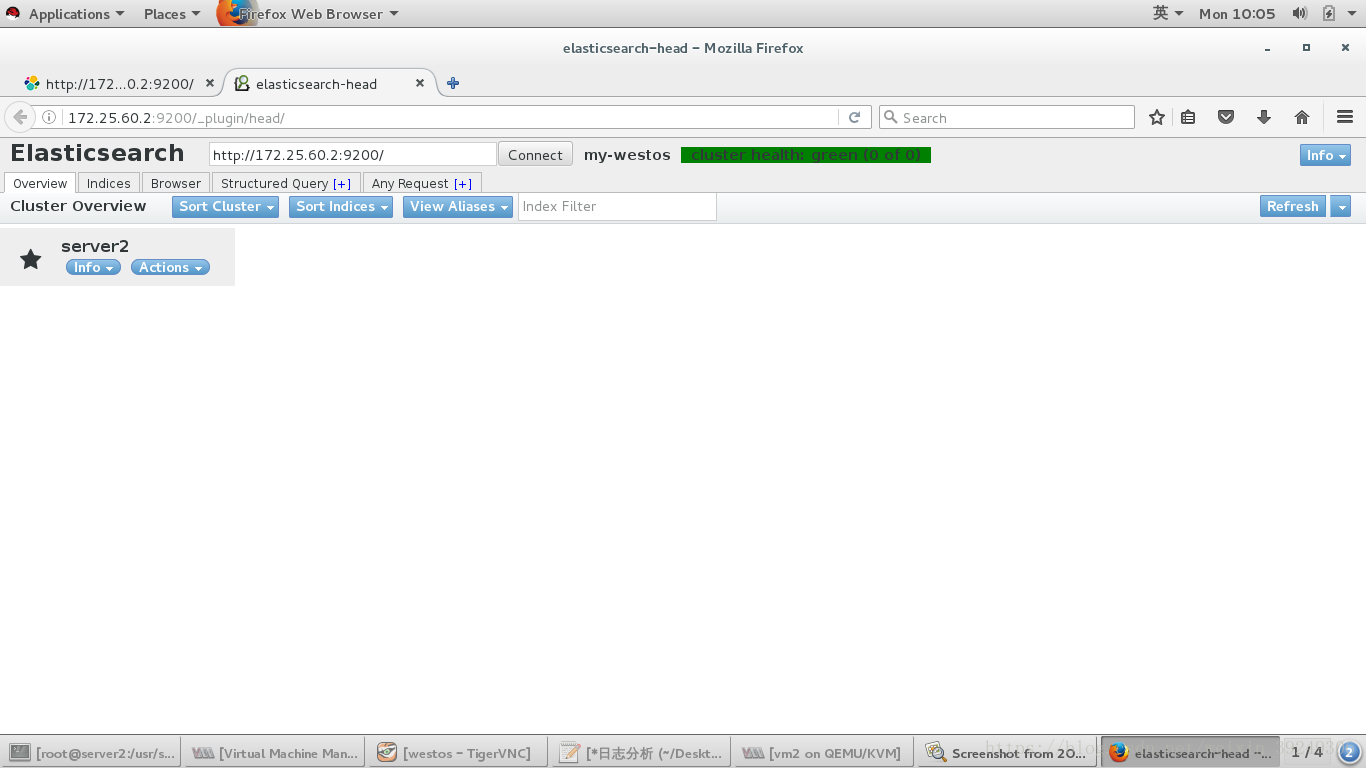

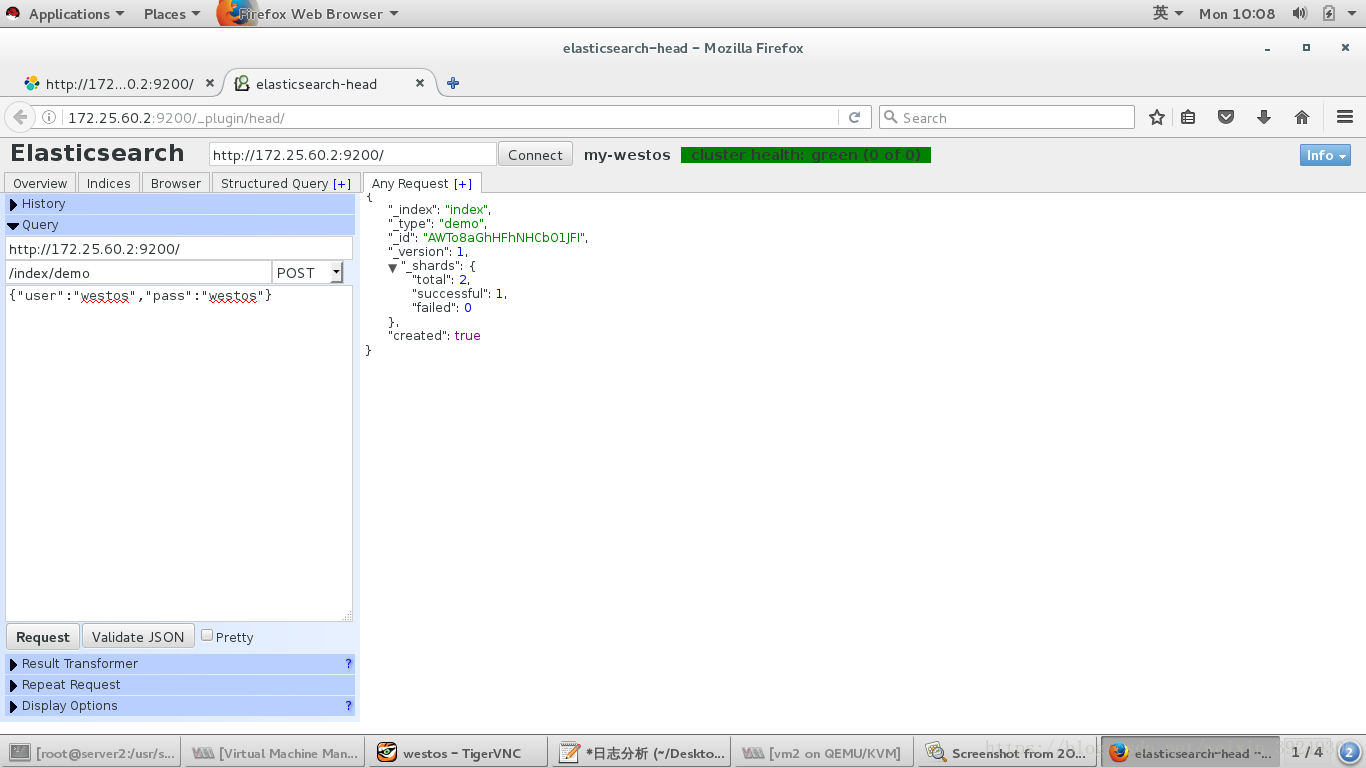
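
The same check can be scripted instead of done in a browser. The sketch below is a minimal example using only the Python standard library. The helper names `es_url` and `fetch_json` are hypothetical, and the address is the one used in this lab.

```python
import json
from urllib.request import urlopen


def es_url(host, port=9200, path="/"):
    """Build an HTTP URL for an Elasticsearch endpoint."""
    return "http://{}:{}/{}".format(host, port, path.lstrip("/"))


def fetch_json(url, timeout=5):
    """GET a URL and decode the JSON body (needs a reachable node)."""
    with urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Only build and print the URLs here; no network access happens.
print(es_url("172.25.60.2"))
print(es_url("172.25.60.2", path="/_cluster/health"))
```

With the node running, `fetch_json(es_url("172.25.60.2"))` should return the same JSON document that the browser displays.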

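The head plugin lets you interact with an ES cluster from a browser: you can view the cluster state and the documents it holds, run searches, and send ordinary REST requests. Once it is installed, open 172.25.60.2:9200/_plugin/head to view the state of the ES cluster: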

[root@server2 plugins]# file /usr/share/elasticsearch/bin/plugin

/usr/share/elasticsearch/bin/plugin: POSIX shell script text executable

This article uses the offline installation:

[root@server2 ~]# /usr/share/elasticsearch/bin/plugin install file:///root/elasticsearch-head-master.zip

Online installation (the alternative):

[root@server2 ~]# /usr/share/elasticsearch/bin/plugin install mobz/elasticsearch-head

Inspect the installed files:

[root@server2 ~]# cd /usr/share/elasticsearch/plugins/head/

[root@server2 head]# ls

elasticsearch-head.sublime-project LICENCE _site

Gruntfile.js package.json src

grunt_fileSets.js plugin-descriptor.properties test

index.html README.textile

Test in a browser. If the page does not appear, refresh a few times.

Install Logstash and set up the cluster:

On server1:

[root@server1 ~]# rpm -ivh logstash-2.3.3-1.noarch.rpm

[root@server1 ~]# rpm -ivh jdk-8u121-linux-x64.rpm

Edit the configuration file on server2 to list the cluster members:

[root@server2 ~]# vim /etc/elasticsearch/elasticsearch.ym

discovery.zen.ping.unicast.hosts: ["server1", "server2", "server3"]

[root@server2 ~]# /etc/init.d/elasticsearch reload

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

Once the file is edited, copy it to the other nodes, server1 and server3:

[root@server2 ~]# scp /etc/elasticsearch/elasticsearch.yml 172.25.60.1:/etc/elasticsearch/

root@172.25.60.1's password:

elasticsearch.yml 100% 3203 3.1KB/s 00:00

[root@server2 ~]# scp /etc/elasticsearch/elasticsearch.yml 172.25.60.3:/etc/elasticsearch/

On server1 and server3, change the node name and IP address in the configuration file to those of the local host:

node.name: server2 ## change to server1 or server3

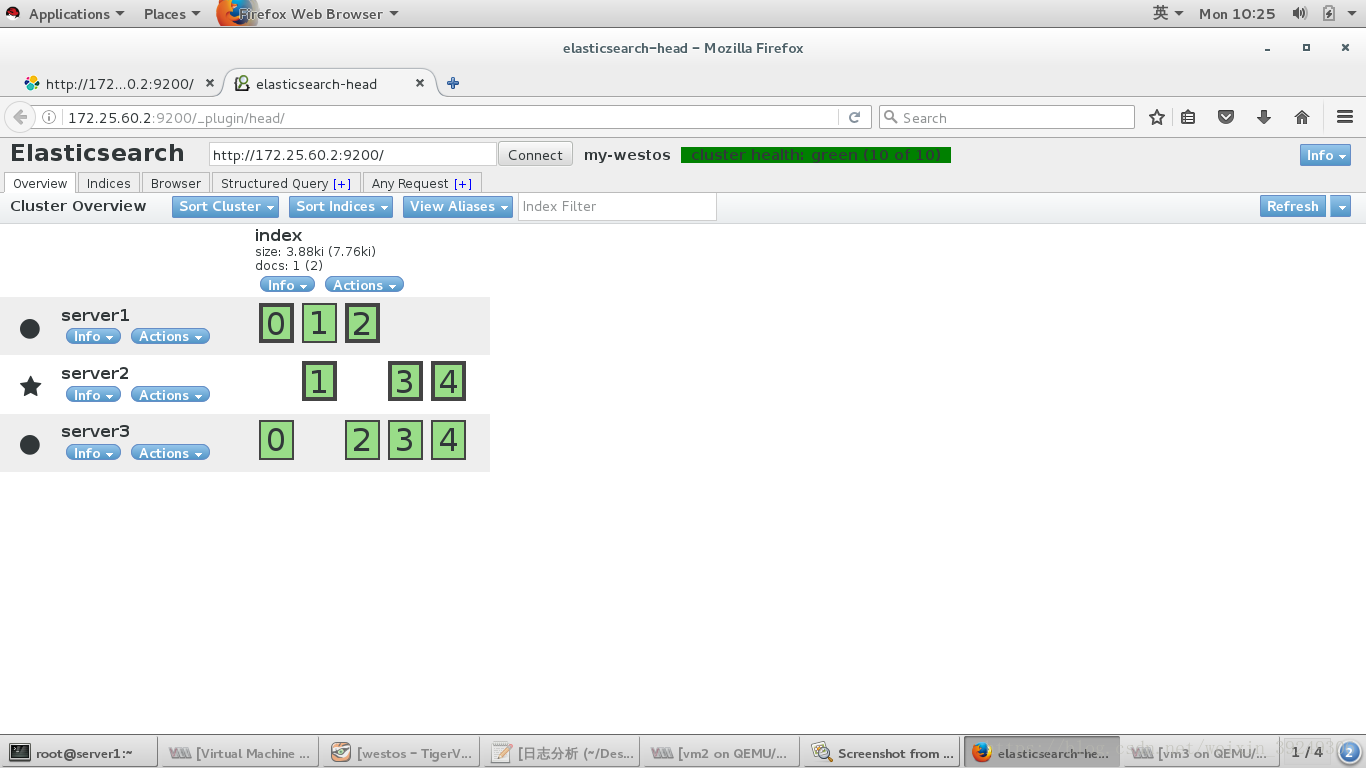

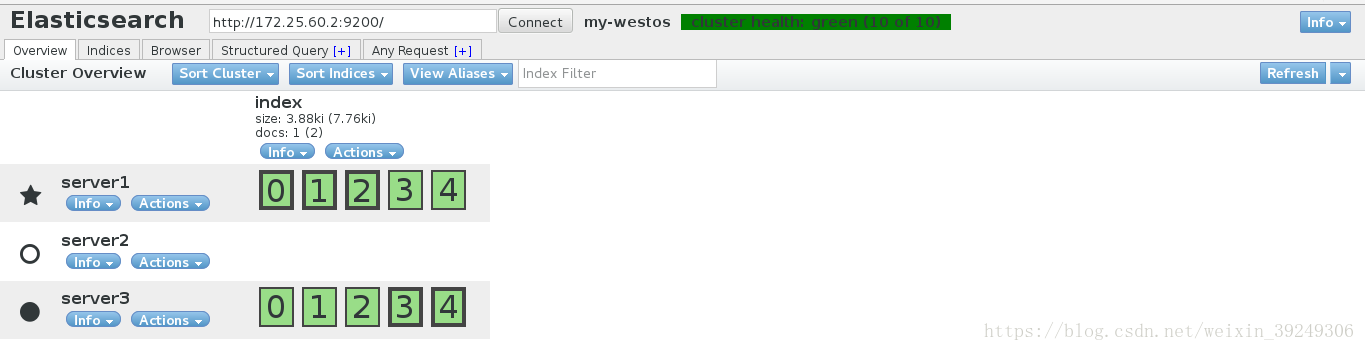

network.host: 172.25.60.2 ## change to 172.25.60.1 or 172.25.60.3

Check the nodes in a browser: a green box indicates stored data.

The cluster needs a master node. We make server2 the master and the other nodes slaves, and the master does not store data:

On the master:

[root@server2 ~]# vim /etc/elasticsearch/elasticsearch.yml

node.master: true

node.data: false

On the slaves:

[root@server1 ~]# vim /etc/elasticsearch/elasticsearch.yml

node.master: false

node.data: true

[root@server3 ~]# vim /etc/elasticsearch/elasticsearch.yml

node.master: false

node.data: true

Restart the service and test in a browser: the hollow circle marks the master, and server2 stores no data.
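
To keep the per-node differences straight, the settings that vary between the three hosts can be generated from one table. This is a sketch only: `NODES` and `node_settings` are hypothetical names, and the values are the ones used in this lab (server2 as master without data, the others as data nodes).

```python
NODES = [
    # (node.name, network.host, node.master, node.data)
    ("server1", "172.25.60.1", False, True),
    ("server2", "172.25.60.2", True, False),
    ("server3", "172.25.60.3", False, True),
]


def node_settings(name, host, master, data):
    """Render the per-node lines of elasticsearch.yml as text."""
    def yes_no(flag):
        return "true" if flag else "false"

    return "\n".join([
        "node.name: {}".format(name),
        "network.host: {}".format(host),
        "node.master: {}".format(yes_no(master)),
        "node.data: {}".format(yes_no(data)),
    ])


for node in NODES:
    print(node_settings(*node))
    print()
```

Everything else in the file (cluster name, paths, the unicast host list) stays identical on all three nodes, which is why copying the file with scp and editing these few lines is enough.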

Collecting data with Logstash

input: data comes in; filter: data is filtered; output: data goes out

Interactive data collection and output:

[root@server2 ~]# rpm -ivh logstash-2.3.3-1.noarch.rpm

[root@server2 ~]# cd /opt/logstash/

[root@server2 logstash]# bin/logstash -e 'input { stdin { } } output { stdout { } }'

Settings: Default pipeline workers: 1

Pipeline main started

hello

2018-07-30T03:13:49.843Z server2 hello

westos

2018-07-30T03:14:02.809Z server2 westos

[root@server2 logstash]# bin/logstash -e 'input { stdin { } } output { stdout { codec => rubydebug } }'

Settings: Default pipeline workers: 1

Pipeline main started

nihao

{

"message" => "nihao",

"@version" => "1",

"@timestamp" => "2018-07-30T03:19:20.576Z",

"host" => "server2"

}

hello,world

{

"message" => "hello,world",

"@version" => "1",

"@timestamp" => "2018-07-30T03:19:40.978Z",

"host" => "server2"

} ### press Ctrl+C to leave interactive mode
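
The rubydebug output shows that each typed line is wrapped in an event with four fields. The sketch below imitates that wrapping to make the structure explicit. `make_event` is a hypothetical helper, not part of Logstash, and it only reproduces the field names and timestamp layout seen in the output above.

```python
import socket
from datetime import datetime, timezone


def make_event(line, host=None, now=None):
    """Wrap one input line in the fields shown in the rubydebug output."""
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    millis = now.microsecond // 1000
    # ISO 8601 in UTC with millisecond precision
    stamp = now.strftime("%Y-%m-%dT%H:%M:%S") + ".{:03d}Z".format(millis)
    return {
        "message": line.rstrip("\n"),
        "@version": "1",
        "@timestamp": stamp,
        "host": host or socket.gethostname(),
    }


print(make_event("nihao\n", host="server2"))
```

Note that `@timestamp` is always in UTC, which is why it differs from the local time printed by the shell.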

[root@server2 logstash]# cd /etc/logstash/conf.d/

[root@server2 conf.d]# ls

[root@server2 conf.d]# vim es.conf

[root@server2 conf.d]# cat es.conf

input {

stdin {}

}

output {

stdout {

codec => rubydebug

}

elasticsearch {

hosts => ["172.25.60.2"]

}

}

[root@server2 conf.d]# /opt/logstash/bin/logstash -f es.conf

Settings: Default pipeline workers: 1

Pipeline main started

dwifiwh

{

"message" => "dwifiwh",

"@version" => "1",

"@timestamp" => "2018-07-30T03:26:11.873Z",

"host" => "server2"

}

uugfeif

{

"message" => "uugfeif",

"@version" => "1",

"@timestamp" => "2018-07-30T03:26:20.787Z",

"host" => "server2"

}

wiuiduw

{

"message" => "wiuiduw",

"@version" => "1",

"@timestamp" => "2018-07-30T03:26:26.595Z",

"host" => "server2"

} ### press Ctrl+C to leave interactive mode

^CSIGINT received. Shutting down the agent. {:level=>:warn}

stopping pipeline {:id=>"main"}

Received shutdown signal, but pipeline is still waiting for in-flight events

to be processed. Sending another ^C will force quit Logstash, but this may cause

data loss. {:level=>:warn}

Pipeline main has been shutdown

Make Logstash act as a syslog server to collect log data:

[root@server2 conf.d]# vim es.conf

[root@server2 conf.d]# cat es.conf

input {

#file {

# path => "/var/log/messages"

# start_position => "beginning"

#}

syslog {

port => 514

}

}

output {

stdout {

codec => rubydebug

}

elasticsearch {

hosts => ["172.25.60.2"]

index => "message-%{+YYYY.MM.dd}"

}

}
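
The `%{+YYYY.MM.dd}` part of the index setting is filled in from each event's `@timestamp`, which is in UTC, so events are grouped into one index per UTC day. The sketch below reproduces that naming in Python. `daily_index` is a hypothetical helper, and the `message-` prefix is the one from the config above.

```python
from datetime import datetime, timezone


def daily_index(timestamp, prefix="message-"):
    """Return the daily index name for an ISO 8601 UTC timestamp string."""
    parsed = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    return prefix + parsed.replace(tzinfo=timezone.utc).strftime("%Y.%m.%d")


print(daily_index("2018-07-30T04:44:39.000Z"))  # message-2018.07.30
```

Daily indices make it easy to drop old logs later: removing a day of data means deleting one index rather than individual documents.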

[root@server2 conf.d]# /opt/logstash/bin/logstash -f es.conf

Settings: Default pipeline workers: 1

Pipeline main started

Wait for output:

Open a new remote session to the master and check whether port 514 is open:

[root@server2 conf.d]# netstat -antplue | grep :514

tcp 0 0 ::ffff:172.25.60.2:514 ::ffff:172.25.60.1:60986 FIN_WAIT2 0 0 -

On the slave, set the destination that logs are forwarded to:

[root@server1 ~]# vi /etc/rsyslog.conf

#*.* @@remote-host:514

# ### end of the forwarding rule ###

*.* @@172.25.60.2:514

[root@server1 ~]# /etc/init.d/rsyslog restart

Shutting down system logger: [ OK ]

Starting system logger: [ OK ]
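
In an rsyslog forwarding rule, `@@` sends over TCP and a single `@` sends over UDP, and `*.*` selects every facility and severity. The sketch below builds such a rule so the parts are easy to see. `forward_rule` is a hypothetical helper name, and the target is the Logstash host from this lab.

```python
def forward_rule(host, port=514, tcp=True, selector="*.*"):
    """Build an rsyslog forwarding rule; '@@' means TCP, '@' means UDP."""
    prefix = "@@" if tcp else "@"
    return "{} {}{}:{}".format(selector, prefix, host, port)


print(forward_rule("172.25.60.2"))             # TCP, as used in this article
print(forward_rule("172.25.60.2", tcp=False))  # UDP variant
```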

Run a test command on the slave:

[root@server1 ~]# logger hello

Check the slave's log:

[root@server1 ~]# tail -f /var/log/messages

Jul 30 12:44:39 server1 root: hello

Check on the master: server2 prints the event produced by the command:

{

"message" => "hello\n",

"@version" => "1",

"@timestamp" => "2018-07-30T04:44:39.000Z",

"host" => "172.25.60.1",

"priority" => 13,

"timestamp" => "Jul 30 12:44:39",

"logsource" => "server1",

"program" => "root",

"severity" => 5,

"facility" => 1,

"facility_label" => "user-level",

"severity_label" => "Notice"

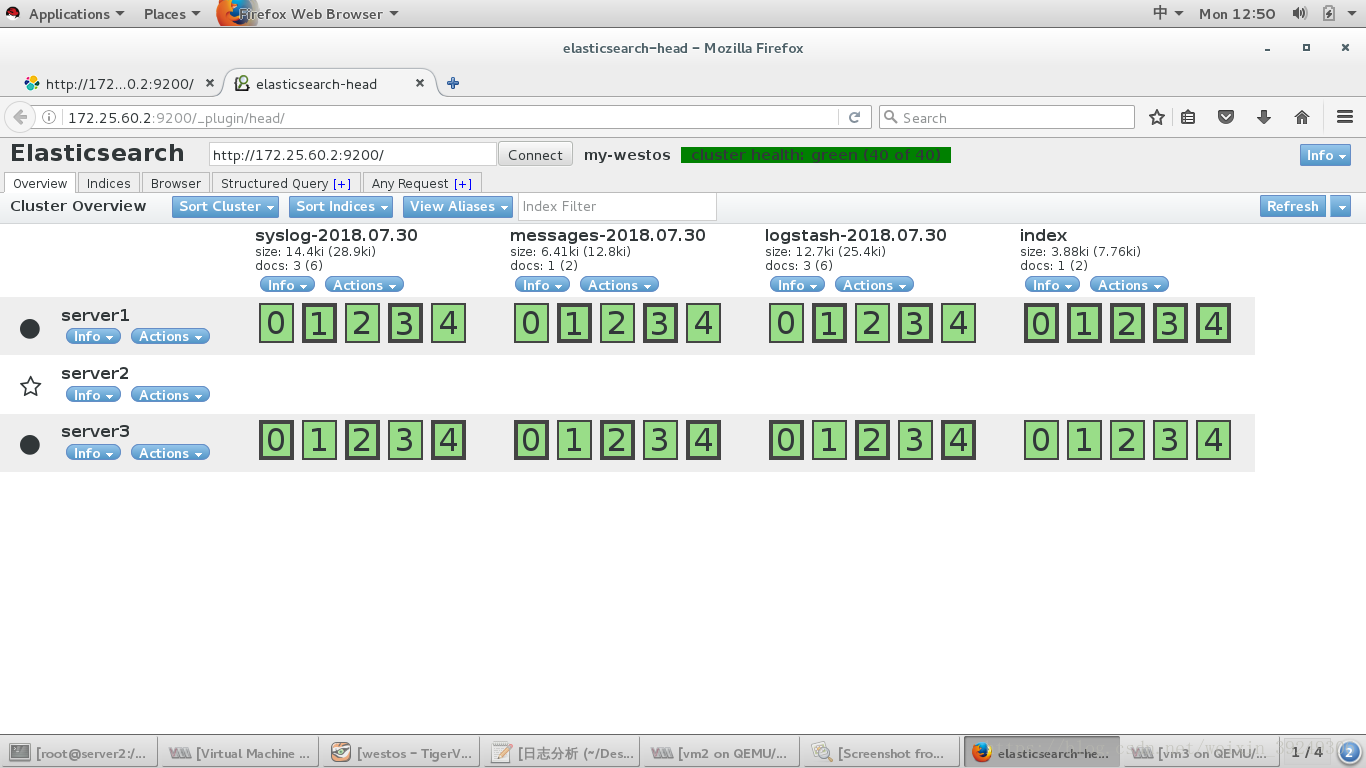

}浏览器查看多处采集数据项:messages
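
The `priority`, `facility`, and `severity` fields in the event are related by the standard syslog encoding: priority = facility * 8 + severity. The sketch below decodes the value 13 seen above. `decode_priority` is a hypothetical helper, and the label list follows the standard syslog severity names.

```python
SEVERITY_LABELS = [
    "Emergency", "Alert", "Critical", "Error",
    "Warning", "Notice", "Informational", "Debug",
]


def decode_priority(priority):
    """Split a syslog priority value into (facility, severity)."""
    return divmod(priority, 8)


facility, severity = decode_priority(13)
print(facility, severity, SEVERITY_LABELS[severity])  # 1 5 Notice
```

This matches the event: facility 1 is the user-level facility that `logger` uses by default, and severity 5 is Notice.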