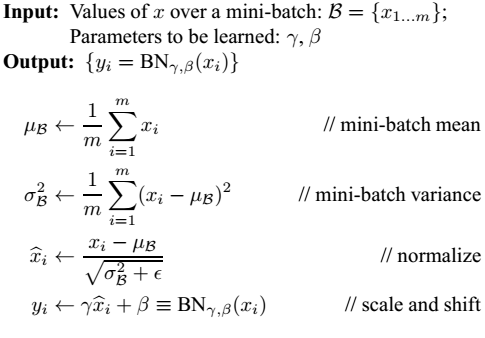

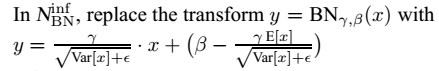

Related formulas
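For reference, here is the inference-time BN transform for a single channel. It uses the paper's symbols, mapped onto the ncnn parameter names (slope → γ, bias → β, mean → μ, var → σ²), and it reduces to the single multiply-add that the code applies. Here ε is the small constant added to the variance for numerical stability. The comments in the ncnn code abbreviate the denominator as sqrt(var).

$$
y = \gamma \, \frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta
  = b\,x + a,
\qquad
b = \frac{\gamma}{\sqrt{\sigma^2 + \epsilon}},
\qquad
a = \beta - \frac{\gamma\,\mu}{\sqrt{\sigma^2 + \epsilon}}
$$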

NCNN code
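The forward pass relies on a per-channel precomputation, which can be sketched as follows. This is a minimal illustration, not ncnn's own code. The names `BnCoeffs`, `fold_batchnorm` and `apply_batchnorm` are hypothetical. The per-channel parameters are assumed to be plain `std::vector<float>` arrays, and the feature map is assumed to be a contiguous planar (CHW) buffer with `size = w * h` elements per channel.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helpers for illustration; not part of the ncnn API.
// Per-channel coefficients such that y = b * x + a.
struct BnCoeffs {
    std::vector<float> a;  // bias - slope * mean / sqrt(var + eps)
    std::vector<float> b;  // slope / sqrt(var + eps)
};

// Fold BN statistics (slope = gamma, bias = beta) into a and b once, before inference.
BnCoeffs fold_batchnorm(const std::vector<float>& slope,
                        const std::vector<float>& mean,
                        const std::vector<float>& var,
                        const std::vector<float>& bias,
                        float eps)
{
    const std::size_t channels = slope.size();
    BnCoeffs c;
    c.a.resize(channels);
    c.b.resize(channels);
    for (std::size_t q = 0; q < channels; q++)
    {
        const float sqrt_var = std::sqrt(var[q] + eps);
        c.b[q] = slope[q] / sqrt_var;
        c.a[q] = bias[q] - slope[q] * mean[q] / sqrt_var;
    }
    return c;
}

// Apply y = b * x + a in place to a planar (CHW) buffer with `size` elements per channel.
void apply_batchnorm(std::vector<float>& data, const BnCoeffs& c, std::size_t size)
{
    assert(data.size() >= c.a.size() * size);
    for (std::size_t q = 0; q < c.a.size(); q++)
    {
        float* ptr = data.data() + q * size;
        for (std::size_t i = 0; i < size; i++)
            ptr[i] = c.b[q] * ptr[i] + c.a[q];
    }
}
```

Folding once moves the square root and division out of the per-element loop. In the ncnn code, `forward_inplace` likewise only reads the precomputed `a_data` and `b_data`.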

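```cpp
int BatchNorm::forward_inplace(Mat& bottom_top_blob) const
{
    // a = bias - slope * mean / sqrt(var)
    // b = slope / sqrt(var)
    // value = b * value + a
    // a_data and b_data hold these per-channel coefficients, computed ahead of time;
    // this function only reads them

    int w = bottom_top_blob.w;
    int h = bottom_top_blob.h;
    int size = w * h;  // number of elements in one channel

    const float* a_data_ptr = a_data;
    const float* b_data_ptr = b_data;

    // channels are independent, so they are processed in parallel
    #pragma omp parallel for
    for (int q=0; q<channels; q++)
    {
        float* ptr = bottom_top_blob.channel(q);
        float a = a_data_ptr[q];
        float b = b_data_ptr[q];

        // one multiply-add per element instead of evaluating the full BN formula
        for (int i=0; i<size; i++)
        {
            ptr[i] = b * ptr[i] + a;
        }
    }

    return 0;
}
```

References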

[1] S. Ioffe, C. Szegedy. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. ICML 2015.

[2] Tencent. ncnn. https://github.com/Tencent/ncnn