Running a select count statement in a Hive on Spark environment fails with this error:

Failed to execute spark task, with exception 'org.apache.hadoop.hive.ql.metadata.HiveException(Failed to create spark client.)'

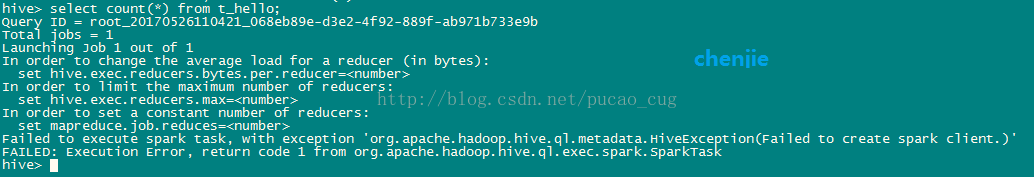

The screenshot above shows the failure.

The full error output is:

hive> select count(*) from t_hello;

Query ID = root_20170526110421_068eb89e-d3e2-4f92-889f-ab971b733e9b

Total jobs = 1

Launching Job 1 out of 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Failed to execute spark task, with exception 'org.apache.hadoop.hive.ql.metadata.HiveException(Failed to create spark client.)'

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

hive>

Cause of the error

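When a Hive on Spark query runs, Hive has to start a client process that connects to the Spark cluster. If the Hive and Spark versions are incompatible, or the configuration is wrong, Hive cannot reach the Spark cluster and cannot submit the Spark job. Either case produces this error.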
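Before digging into versions or configuration, it can help to rule out plain network reachability. The sketch below is only an assumption-laden helper, not part of Hive or Spark: `port_open` is a hypothetical function name, and it relies on `bash` and the coreutils `timeout` command being installed. It simply tries to open a TCP connection to a host and port.

```shell
# Hypothetical helper: succeeds if a TCP connection to host:port can be opened.
# Assumes bash (for /dev/tcp) and coreutils timeout are available on this machine.
port_open() {
    host="$1"
    port="$2"
    # Give up after 3 seconds so an unreachable host does not hang the check.
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For a standalone master, a call such as `port_open master 7077 && echo reachable || echo unreachable` would test the default standalone master port; replace `master` and `7077` with your own host name and port. A successful connection only shows that something is listening there, not that the versions are compatible.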

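Solution

First check whether the Hive and Spark versions you are using support Hive on Spark together. If the problem is configuration, the most likely culprit is a wrong spark.master value. When it is set in hive-site.xml, it should have the form spark://<IP address or host name>:<port> for a standalone Spark master. It can also be set to yarn-client, which submits the job to YARN instead of a standalone master. Do not set it to arbitrary values such as client, cluster, or yarn. In my working Hive on Spark environment the setting is as follows (master is my machine's host name, mapped to its IP address in /etc/hosts):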

<property>

<name>spark.master</name>

<value>spark://master:7077</value>

</property>

Note that if this is set to yarn-client, the error looks somewhat different. Hive may print a series of state=SENT lines and then report:

Job hasn't been submitted after 20s. Aborting it.

Possible reasons include network issues, errors in remote driver or the cluster has no available resources, etc.

Please check YARN or Spark driver's logs for further information.

Status: SENT

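FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

If changing the configuration does not help and the error is still unclear, run the select count with the command below. It makes Hive on Spark print detailed DEBUG logs to the console while the query executes, which makes the failure easier to inspect: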

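hive --hiveconf hive.root.logger=DEBUG,console -e "select count(1) from your_hive_table_name"

Note: if the error is caused by a version mismatch rather than by configuration, it is harder to fix. If you still cannot get it working after several attempts, follow this blog series to build a Hive on Spark environment that runs correctly: http://blog.csdn.net/pucao_cug/article/category/6941532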
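The DEBUG run described above produces a lot of console output, so it may be easier to save it and look only at the lines that matter. The following is a minimal sketch, not an official Hive tool: `filter_spark_errors` is a hypothetical function name, and the patterns are just the messages quoted in this post plus the generic words ERROR and Exception.

```shell
# Hypothetical helper: keep only log lines that look like failures.
# Reads log text on stdin and prints the matching lines.
filter_spark_errors() {
    grep -E "ERROR|Exception|Failed to create spark client|Job hasn't been submitted"
}
```

One way to use it is `hive --hiveconf hive.root.logger=DEBUG,console -e "select count(1) from t_hello" 2>&1 | tee hive_debug.log | filter_spark_errors`, where hive_debug.log is an arbitrary file name that keeps the full log for later reading and t_hello is the table from the example above.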