server1 172.25.28.1 master & minion haproxy keepalived

server2 172.25.28.2 minion httpd

server3 172.25.28.3 minion nginx

server4 172.25.28.4 minion haproxy keepalived

First configure server4 as a salt-minion.

I. High availability of the VIP

Approach: test pushing keepalived to server4 first, then push to all hosts.

1. Create the keepalived state directory and download the source package to be pushed

[root@server1 ~]# cd /srv/salt/

[root@server1 salt]# mkdir keepalived

[root@server1 salt]# cd keepalived/

[root@server1 keepalived]# mkdir files

[root@server1 keepalived]# cd files/

lftp 172.25.28.250:/pub> get keepalived-2.0.6.tar.gz

[root@server1 files]# pwd

/srv/salt/keepalived/files

2. Write install.sls to push the package, and test the push

[root@server1 keepalived]# vim install.sls

kp-install:
  file.managed:
    - name: /mnt/keepalived-2.0.6.tar.gz
    - source: salt://keepalived/files/keepalived-2.0.6.tar.gz

[root@server1 keepalived]# salt server4 state.sls keepalived.install

3. Check on server4

[root@server4 ~]# cd /mnt/

[root@server4 mnt]# ls

keepalived-2.0.6.tar.gz

4. Build by hand on server4, to work out what install.sls needs to do

tar zxf keepalived-2.0.6.tar.gz

cd keepalived-2.0.6

./configure --help

./configure --prefix=/usr/local/keepalived --with-init=SYSV

yum install -y gcc

./configure --prefix=/usr/local/keepalived --with-init=SYSV

yum install -y openssl-devel

./configure --prefix=/usr/local/keepalived --with-init=SYSV

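This time configure succeeds!

Note: RHEL 6 uses SysV init, hence --with-init=SYSV; RHEL 7 uses systemd, so use --with-init=systemd there.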

5. Update install.sls based on the build on server4

[root@server1 keepalived]# vim install.sls

[root@server1 keepalived]# cat install.sls

include:
  - pkgs.make    # the build dependencies (shared with the haproxy build) live in this state; include it directly

kp-install:
  file.managed:
    - name: /mnt/keepalived-2.0.6.tar.gz
    - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make &> /dev/null && make install &> /dev/null
    - creates: /usr/local/keepalived

[root@server1 keepalived]# cat ../pkgs/make.sls

make-gcc:
  pkg.installed:
    - pkgs:
      - pcre-devel
      - openssl-devel
      - gcc

[root@server1 keepalived]# salt server4 state.sls keepalived.install
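The `creates:` argument is what keeps this state idempotent: cmd.run is skipped once /usr/local/keepalived exists, so running the state again does not rebuild keepalived. A minimal Python sketch of that guard (the function name `run_unless_created` is hypothetical, not part of Salt):

```python
import os
import shlex
import subprocess
import tempfile


def run_unless_created(command, creates):
    """Run `command` in a shell unless the path `creates` already exists.

    Sketch of the idea behind cmd.run's `creates:` argument: once
    /usr/local/keepalived exists, the build command is skipped.
    Returns True if the command ran, False if it was skipped.
    """
    if os.path.exists(creates):
        return False
    subprocess.run(command, shell=True, check=True)
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        # Hypothetical stand-in for the prefix that `make install` creates
        prefix = os.path.join(workdir, "keepalived")
        build = "mkdir " + shlex.quote(prefix)
        print(run_unless_created(build, prefix))  # first run: executes the command
        print(run_unless_created(build, prefix))  # second run: skipped
```

Without `creates:`, the whole configure/make chain would run on every state.sls or highstate call.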

6. Copy the init script and the configuration file from server4 back to the master, so they can be pushed

[root@server4 init.d]# scp keepalived server1:/srv/salt/keepalived/files

root@server1's password:

keepalived 100% 1308 1.3KB/s 00:00

[root@server4 keepalived]# scp keepalived.conf server1:/srv/salt/keepalived/files

root@server1's password:

keepalived.conf 100% 3550 3.5KB/s 00:00

7. Update install.sls to create the directory and the symlinks

[root@server1 keepalived]# vim install.sls

[root@server1 keepalived]# cat install.sls

include:
  - pkgs.make

kp-install:
  file.managed:
    - name: /mnt/keepalived-2.0.6.tar.gz
    - source: salt://keepalived/files/keepalived-2.0.6.tar.gz
  cmd.run:
    - name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6 && ./configure --prefix=/usr/local/keepalived --with-init=SYSV &> /dev/null && make &> /dev/null && make install &> /dev/null
    - creates: /usr/local/keepalived

/etc/keepalived:
  file.directory:    # create the directory
    - mode: 755

/etc/sysconfig/keepalived:
  file.symlink:      # create a symlink
    - target: /usr/local/keepalived/etc/sysconfig/keepalived

/sbin/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/sbin/keepalived

[root@server1 keepalived]# salt server4 state.sls keepalived.install

8. Create a pillar directory for keepalived (dynamic per-host parameters) and write its pillar file, modeled on pillar/web/install.sls

[root@server1 keepalived]# ls

files install.sls

[root@server1 keepalived]# vim service.sls

[root@server1 keepalived]# ls files/

keepalived keepalived-2.0.6.tar.gz keepalived.conf

[root@server1 srv]# cd pillar/

[root@server1 pillar]# mkdir keepalived

[root@server1 pillar]# cd keepalived/

[root@server1 keepalived]# cp ../web/install.sls .

[root@server1 keepalived]# vim install.sls

[root@server1 keepalived]# cat install.sls

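{% if grains['fqdn'] == 'server1' %}
state: MASTER        # server1 is the master
vrid: 28
priority: 100        # master and backup need different priorities
{% elif grains['fqdn'] == 'server4' %}
state: BACKUP        # server4 is the backup
vrid: 28
priority: 50
{% endif %}

Note: pillar is used here for the parameters that differ between hosts. Values that are the same everywhere, such as vrid, could instead be written directly into the templated configuration file.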
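The if/elif in this pillar file amounts to a per-host lookup keyed by the fqdn grain. A small Python sketch of that lookup (the names are hypothetical) makes one consequence visible: a minion matching neither branch gets no keepalived keys at all, which is why the keepalived states should only be applied to server1 and server4.

```python
# Hypothetical Python stand-in for pillar/keepalived/install.sls:
# per-host keepalived parameters keyed by the minion's fqdn grain.
KEEPALIVED_PILLAR = {
    "server1": {"state": "MASTER", "vrid": 28, "priority": 100},
    "server4": {"state": "BACKUP", "vrid": 28, "priority": 50},
}


def keepalived_pillar(fqdn):
    """Return the keepalived pillar keys a minion would see ({} if no branch matches)."""
    return dict(KEEPALIVED_PILLAR.get(fqdn, {}))


if __name__ == "__main__":
    for host in ("server1", "server4", "server2"):
        print(host, keepalived_pillar(host))
```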

9. Edit the pillar top.sls to assign the pillar files in the base environment

[root@server1 pillar]# vim top.sls

[root@server1 pillar]# cat top.sls

base:
  '*':
    - web.install
    - keepalived.install

10. Create the service state service.sls, rendering the config file as a Jinja template

[root@server1 pillar]# cd /srv/salt/keepalived/

[root@server1 keepalived]# ls

files install.sls service.sls

[root@server1 keepalived]# vim service.sls

[root@server1 keepalived]# cat service.sls

include:
  - keepalived.install

/etc/keepalived/keepalived.conf:
  file.managed:
    - source: salt://keepalived/files/keepalived.conf
    - template: jinja
    - context:
        STATE: {{ pillar['state'] }}        # template variables, filled from pillar
        VRID: {{ pillar['vrid'] }}
        PRIORITY: {{ pillar['priority'] }}

kp-service:
  file.managed:
    - name: /etc/init.d/keepalived
    - source: salt://keepalived/files/keepalived
    - mode: 755                             # this is a script, so it must be executable
  service.running:
    - name: keepalived
    - reload: True
    - watch:
      - file: /etc/keepalived/keepalived.conf

11. Edit the configuration file to be pushed

[root@server1 keepalived]# vim files/keepalived.conf

[root@server1 keepalived]# cat files/keepalived.conf

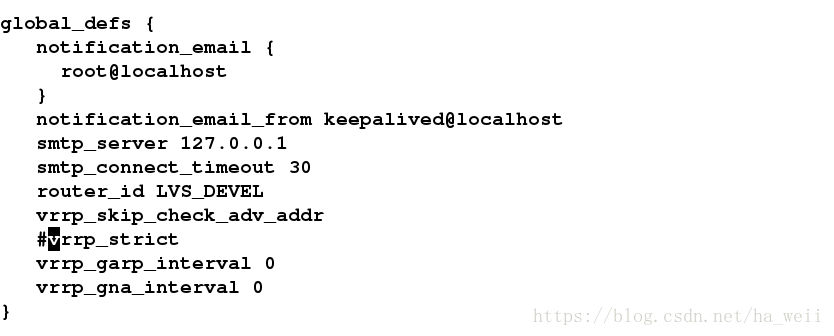

! Configuration File for keepalived

global_defs {
   notification_email {
     root@localhost
   }
   notification_email_from keepalived@localhost
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   #vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

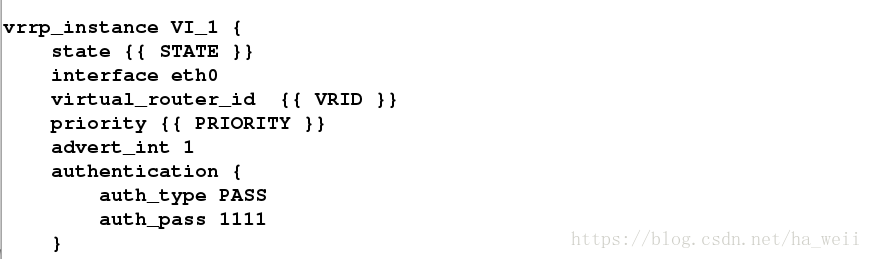

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.28.100
    }
}

12. Push and test on server4

[root@server4 keepalived]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:2d:e0:90 brd ff:ff:ff:ff:ff:ff

inet 172.25.28.4/24 brd 172.25.28.255 scope global eth0

inet 172.25.28.100/32 scope global eth0 # vip

inet6 fe80::5054:ff:fe2d:e090/64 scope link

valid_lft forever preferred_lft forever
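To see what each host actually receives from the templated keepalived.conf, the substitution step can be sketched in plain Python. This is only an illustration: it handles `{{ NAME }}` replacement, a tiny subset of what Salt's Jinja renderer does, on a trimmed copy of the vrrp_instance block, using the values from server4's pillar.

```python
import re

# Trimmed copy of the vrrp_instance block from files/keepalived.conf
TEMPLATE = """vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
}"""


def render(template, context):
    """Replace every {{ NAME }} with context[NAME].

    Only plain variable substitution is handled, a tiny subset of Jinja.
    A missing variable raises KeyError instead of rendering silently.
    """
    return re.sub(r"\{\{\s*(\w+)\s*\}\}",
                  lambda m: str(context[m.group(1)]), template)


if __name__ == "__main__":
    # context values that server4 gets from its pillar data
    print(render(TEMPLATE, {"STATE": "BACKUP", "VRID": 28, "PRIORITY": 50}))
```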

13. Install mailx on server1 and server4

14. Edit top.sls and push to all hosts

[root@server1 salt]# cat top.sls

base:
  'server1':
    - haproxy.install
    - keepalived.service
  'server4':
    - haproxy.install
    - keepalived.service
  'roles:apache':
    - match: grain
    - httpd.install
  'roles:nginx':
    - match: grain
    - nginx.service

Note: server4 needs the LoadBalancer yum repository configured; otherwise installing haproxy fails.

[root@server1 yum.repos.d]# salt '*' state.highstate

II. High availability of the haproxy service

1. Write the service check script and make it executable

[root@server1 pillar]# cd /opt/

[root@server1 opt]# vim check_haproxy.sh

[root@server1 opt]# cat /opt/check_haproxy.sh

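#!/bin/bash
/etc/init.d/haproxy status &> /dev/null || /etc/init.d/haproxy restart &> /dev/null
if [ $? -ne 0 ]; then
    /etc/init.d/keepalived stop &> /dev/null
fi

Note: the script checks haproxy's status. If haproxy is healthy, nothing changes; if not, the script tries to restart it. If the restart fails (non-zero exit status; 0 means the previous command succeeded), it stops keepalived on this host, so the VIP moves to the backup.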

[root@server1 opt]# chmod +x check_haproxy.sh
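The decision logic of check_haproxy.sh can be restated as a small Python function, which makes its three outcomes easy to see and test. This is an illustrative sketch only: keepalived still runs the shell script, and the callables passed in are hypothetical stand-ins for the three init-script commands.

```python
def check_haproxy(status, restart, stop_keepalived):
    """Python restatement of check_haproxy.sh (illustration only).

    `status` and `restart` are callables returning a shell-style exit code
    (0 = success); `stop_keepalived` is called when the restart also fails.
    Returns a short description of what happened.
    """
    if status() == 0:
        return "healthy"
    if restart() == 0:
        return "restarted"
    stop_keepalived()  # once keepalived stops, the VIP moves to the backup
    return "keepalived stopped"


if __name__ == "__main__":
    print(check_haproxy(lambda: 1, lambda: 1, lambda: None))
```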

2. Copy the script to server4 (it could also be pushed with Salt)

[root@server1 files]# scp /opt/check_haproxy.sh server4:/opt/

root@server4's password:

check_haproxy.sh 100% 161 0.2KB/s 00:00

3. Edit the file to be pushed

[root@server1 opt]# cd /srv/salt/keepalived/files/

[root@server1 files]# vim keepalived.conf

[root@server1 files]# cat keepalived.conf

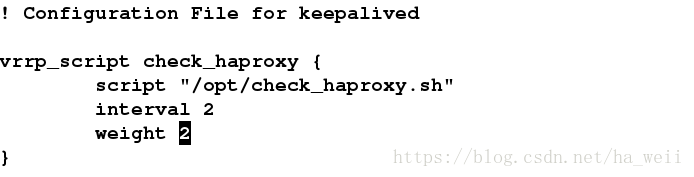

! Configuration File for keepalived

vrrp_script check_haproxy {
    # path of the check script
    script "/opt/check_haproxy.sh"
    # run the script every 2 seconds
    interval 2
    # optional. A positive weight raises the priority by 2 while the script
    # succeeds (a negative weight would lower it while the script fails).
    # Failover here does not depend on weight: when haproxy cannot be
    # restarted, the script stops keepalived and the VIP moves to the backup.
    weight 2
}

global_defs {
   notification_email {
     root@localhost
   }
   notification_email_from keepalived@localhost
   smtp_server 127.0.0.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   #vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

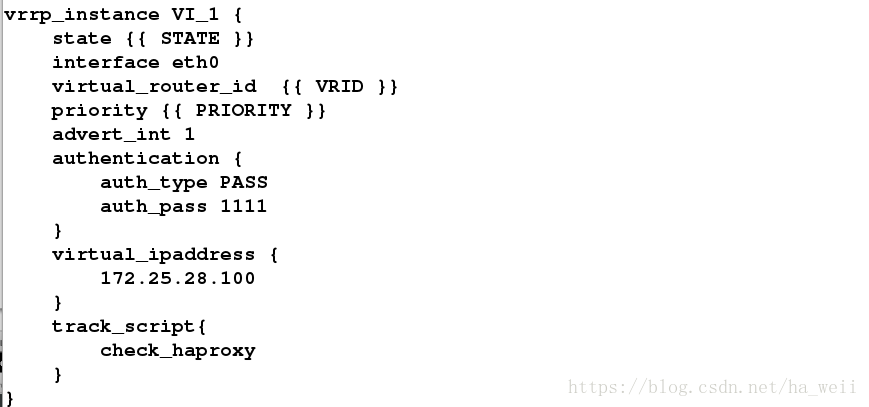

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{ VRID }}
    priority {{ PRIORITY }}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.28.100
    }
    track_script {
        # the vrrp_script defined above
        check_haproxy
    }
}

4. Push to server1 and server4

[root@server1 keepalived]# salt server1 state.sls keepalived.service

[root@server1 keepalived]# salt server4 state.sls keepalived.service
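The effect of `weight` can be checked with a little arithmetic. The sketch below uses hypothetical helper names and models only the priority comparison, not the full VRRP protocol, preemption timing, or the FAULT state. With the priorities from pillar and `weight 2`, a failing check on the master still leaves it at 100, above the backup's 52, so the weight alone would not move the VIP. That is why check_haproxy.sh stops keepalived instead; a negative weight larger than the priority gap would be the weight-only alternative.

```python
def effective_priority(base, weight, script_ok):
    """Priority advertised for a VRRP instance tracking one vrrp_script.

    Simplified model: a positive weight is added while the script succeeds;
    a negative weight is added (i.e. subtracted) while it fails.
    Otherwise the base priority is used.
    """
    if weight > 0 and script_ok:
        return base + weight
    if weight < 0 and not script_ok:
        return base + weight
    return base


def vip_owner(priorities):
    """Name of the node with the highest priority: {name: priority} -> name."""
    return max(priorities, key=priorities.get)


if __name__ == "__main__":
    # weight 2, as in the config: haproxy broken on server1, fine on server4
    print(vip_owner({"server1": effective_priority(100, 2, False),
                     "server4": effective_priority(50, 2, True)}))    # server1
    # a hypothetical weight of -60 would be enough to move the VIP
    print(vip_owner({"server1": effective_priority(100, -60, False),
                     "server4": effective_priority(50, -60, True)}))  # server4
```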

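5. Test

Stop haproxy on the master: the check script restarts it within about 2 seconds.

Then remove the execute permission from /etc/init.d/haproxy. Every 2 s keepalived runs the check script, which calls /etc/init.d/haproxy status; since the init script can no longer be executed, haproxy cannot be restarted either. The script therefore stops keepalived on the master, and the VIP appears on server4: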

[root@server4 keepalived-2.0.6]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:2d:e0:90 brd ff:ff:ff:ff:ff:ff

inet 172.25.28.4/24 brd 172.25.28.255 scope global eth0

inet 172.25.28.100/32 scope global eth0

inet6 fe80::5054:ff:fe2d:e090/64 scope link

valid_lft forever preferred_lft forever

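Access through the browser is not affected.

Restore the execute permission. haproxy on server1 is running again, but server1 has no VIP because its keepalived was stopped. Start keepalived again: about 2 s later server1, which has the higher priority, takes the VIP back.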

[root@server1 keepalived]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@server1 keepalived]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:82:18:3a brd ff:ff:ff:ff:ff:ff

inet 172.25.28.1/24 brd 172.25.28.255 scope global eth0

inet 172.25.28.100/32 scope global eth0

inet6 fe80::5054:ff:fe82:183a/64 scope link

valid_lft forever preferred_lft forever

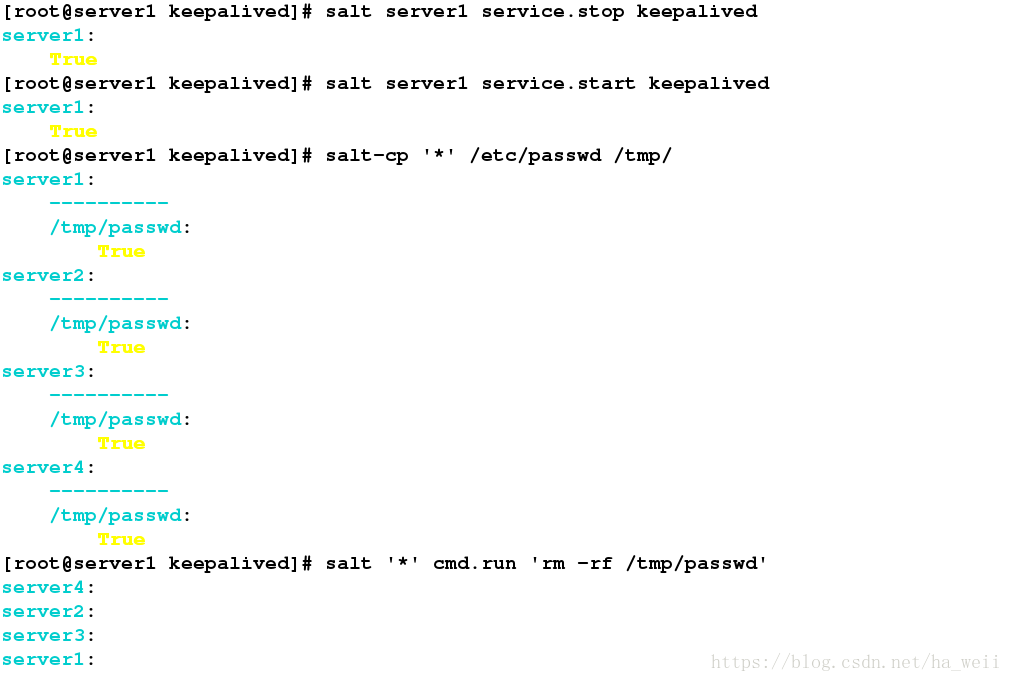

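Other Salt commands

salt server1 service.stop keepalived      # stop the keepalived service on server1

salt server1 service.start keepalived     # start it again

salt-cp '*' /etc/passwd /tmp/             # copy the local /etc/passwd to /tmp on all minions

salt '*' cmd.run 'rm -rf /tmp/passwd'     # delete /tmp/passwd on all minions

salt server3 state.single pkg.installed httpd     # install httpd on server3

Note: salt-cp is not meant for broadcasting large files; it is designed for text files and is useful for distributing configuration files.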

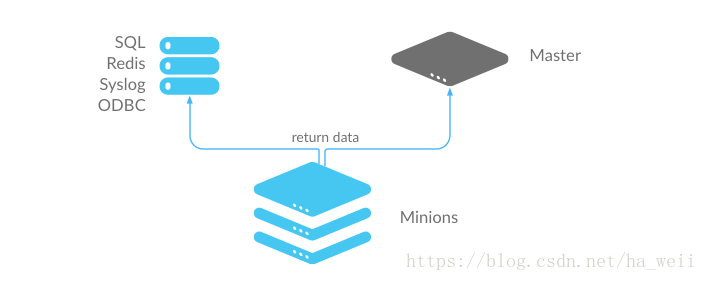

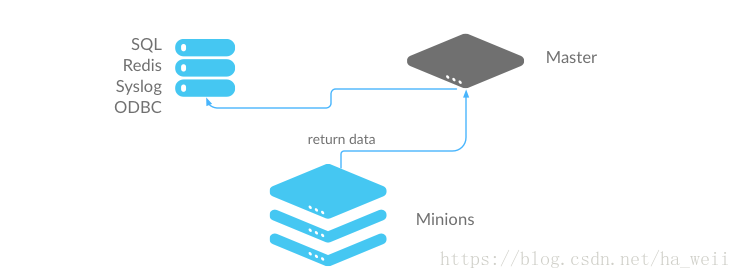

http://docs.saltstack.cn/ref/returners/all/salt.returners.mysql.html#module-salt.returners.mysql

Storing job returns in a database

By default, salt-master keeps job results for 24 hours.

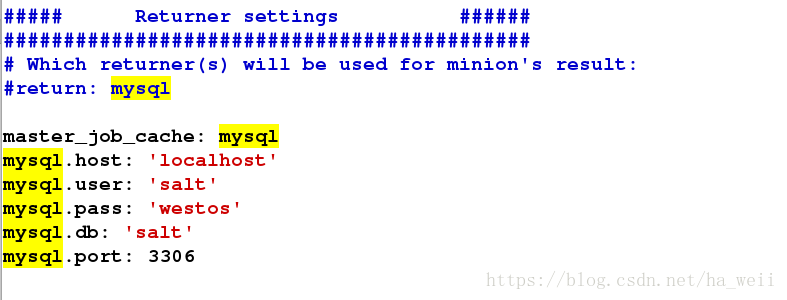
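Salt job IDs (jids, such as 20180818144547599048) are timestamps in the form YYYYmmddHHMMSS followed by microseconds, which is how the age of a cached job relative to the 24-hour window (the master's keep_jobs setting) can be determined. A minimal sketch of that age check, with hypothetical function names:

```python
from datetime import datetime, timedelta


def jid_time(jid):
    """Decode a Salt job ID: YYYYmmddHHMMSS followed by 6 digits of microseconds."""
    return datetime.strptime(jid, "%Y%m%d%H%M%S%f")


def is_expired(jid, now, keep_jobs_hours=24):
    """True if the job is older than the keep_jobs window (in hours)."""
    return now - jid_time(jid) > timedelta(hours=keep_jobs_hours)


if __name__ == "__main__":
    jid = "20180818144547599048"
    print(jid_time(jid))                                       # 2018-08-18 14:45:47.599048
    print(is_expired(jid, now=datetime(2018, 8, 19, 15, 0)))   # True
```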

Method 1: requires changes on both the minion and the master

1. Install and start the database

[root@server1 ~]# yum install -y mysql-server

[root@server1 ~]# /etc/init.d/mysqld start

2. Install MySQL-python on server2

[root@server2 ~]# yum install -y MySQL-python

3. Edit the minion config file and restart salt-minion

[root@server2 ~]# /etc/init.d/salt-minion restart

4. Create the database user

mysql> grant all on salt.* to salt@'172.25.28.%' identified by 'westos';

Query OK, 0 rows affected (0.00 sec)

5. Import the database schema

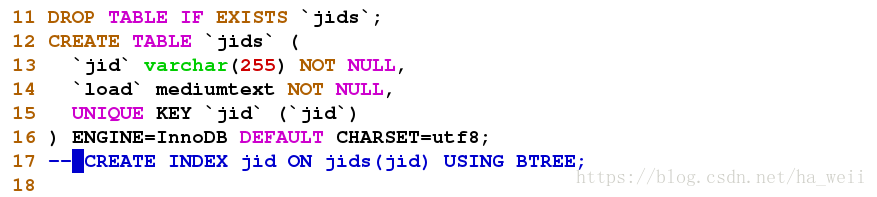

[root@server1 ~]# cat test.sql

CREATE DATABASE `salt`

DEFAULT CHARACTER SET utf8

DEFAULT COLLATE utf8_general_ci;

USE `salt`;

--

-- Table structure for table `jids`

--

DROP TABLE IF EXISTS `jids`;

CREATE TABLE `jids` (

`jid` varchar(255) NOT NULL,

`load` mediumtext NOT NULL,

UNIQUE KEY `jid` (`jid`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- CREATE INDEX jid ON jids(jid) USING BTREE;

--

-- Table structure for table `salt_returns`

--

DROP TABLE IF EXISTS `salt_returns`;

CREATE TABLE `salt_returns` (

`fun` varchar(50) NOT NULL,

`jid` varchar(255) NOT NULL,

`return` mediumtext NOT NULL,

`id` varchar(255) NOT NULL,

`success` varchar(10) NOT NULL,

`full_ret` mediumtext NOT NULL,

`alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

KEY `id` (`id`),

KEY `jid` (`jid`),

KEY `fun` (`fun`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

--

-- Table structure for table `salt_events`

--

DROP TABLE IF EXISTS `salt_events`;

CREATE TABLE `salt_events` (

`id` BIGINT NOT NULL AUTO_INCREMENT,

`tag` varchar(255) NOT NULL,

`data` mediumtext NOT NULL,

`alter_time` TIMESTAMP DEFAULT CURRENT_TIMESTAMP,

`master_id` varchar(255) NOT NULL,

PRIMARY KEY (`id`),

KEY `tag` (`tag`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

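Note: line 17 of test.sql (the CREATE INDEX statement) has to be commented out, as above: the UNIQUE KEY on jid already creates an index named jid, so running it would fail with a duplicate key name error.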

[root@server1 ~]# mysql < test.sql

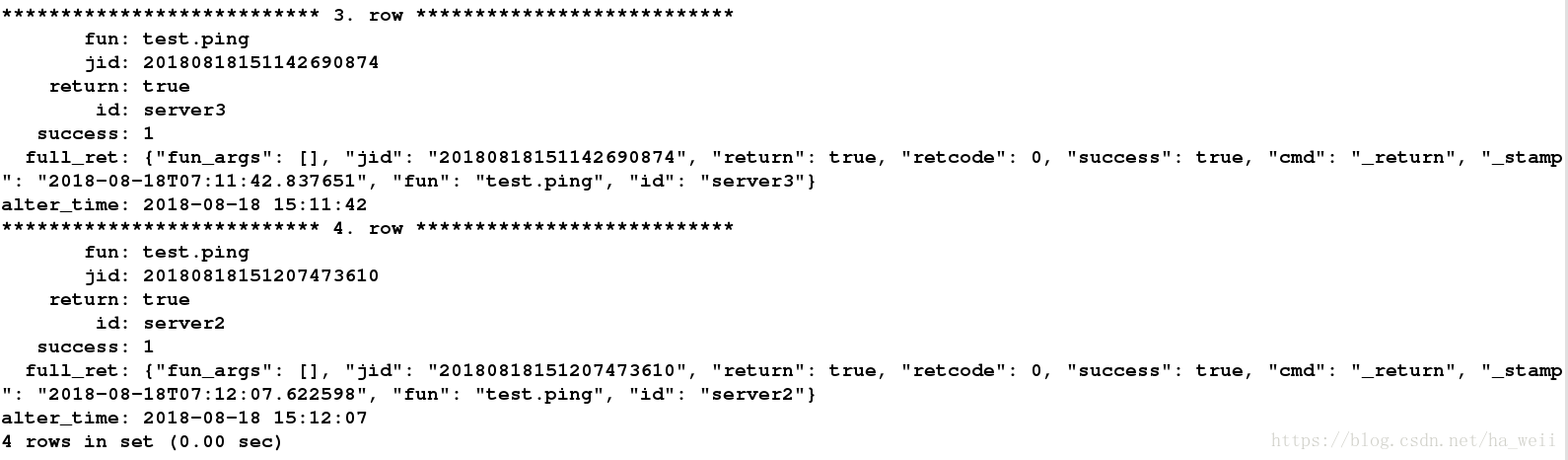

Test:

[root@server1 ~]# salt 'server2' test.ping --return mysql    # send the return data to MySQL

Check the database:

mysql> use salt;

mysql> select * from salt_returns;

+-----------+----------------------+--------+---------+---------+-------------------------------------------------------------------------------------------------------------------------------------+---------------------+

| fun | jid | return | id | success | full_ret | alter_time |

+-----------+----------------------+--------+---------+---------+-------------------------------------------------------------------------------------------------------------------------------------+---------------------+

| test.ping | 20180818144547599048 | true | server2 | 1 | {"fun_args": [], "jid": "20180818144547599048", "return": true, "retcode": 0, "success": true, "fun": "test.ping", "id": "server2"} | 2018-08-18 14:45:47 |

+-----------+----------------------+--------+---------+---------+-------------------------------------------------------------------------------------------------------------------------------------+---------------------+

1 row in set (0.00 sec)
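Because full_ret is stored as a JSON string, job results can be post-processed with any JSON parser. The sketch below uses an in-memory sqlite3 database as a stand-in for MySQL (an assumption made so it runs anywhere), with a trimmed salt_returns table and the row shown above; against MySQL the same SELECT would go through a MySQL driver such as MySQL-python, with its own placeholder style.

```python
import json
import sqlite3

# sqlite3 stands in for MySQL so the sketch runs anywhere; the table is a
# trimmed version of salt_returns from test.sql (no alter_time, no indexes).
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE salt_returns (fun TEXT, jid TEXT, "return" TEXT, '
             'id TEXT, success TEXT, full_ret TEXT)')

# The row from the query output above
row_full_ret = ('{"fun_args": [], "jid": "20180818144547599048", "return": true, '
                '"retcode": 0, "success": true, "fun": "test.ping", "id": "server2"}')
conn.execute("INSERT INTO salt_returns VALUES (?, ?, ?, ?, ?, ?)",
             ("test.ping", "20180818144547599048", "true", "server2", "1", row_full_ret))


def returns_for_job(conn, jid):
    """Map minion id -> decoded full_ret document for one job id."""
    rows = conn.execute("SELECT id, full_ret FROM salt_returns WHERE jid = ?", (jid,))
    return {minion: json.loads(doc) for minion, doc in rows}


if __name__ == "__main__":
    for minion, ret in returns_for_job(conn, "20180818144547599048").items():
        print(minion, ret["fun"], ret["return"], ret["retcode"])
```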

http://docs.saltstack.cn/topics/jobs/external_cache.html

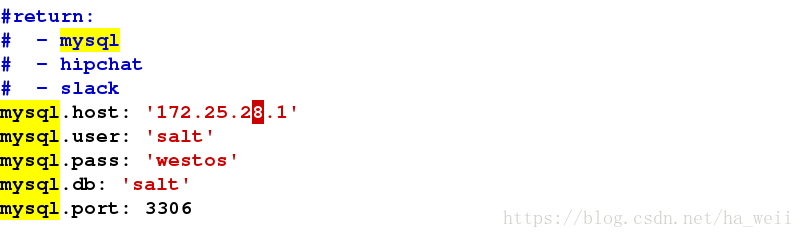

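Method 2: only the master needs changes; the minions are left untouched

1. Install MySQL-python, edit the master config file, and restart the service

[root@server1 ~]# yum install -y MySQL-python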

[root@server1 ~]# vim /etc/salt/master

If the database runs on another host, put that host's IP address here.

[root@server1 ~]# /etc/init.d/salt-master restart

Stopping salt-master daemon: [ OK ]

Starting salt-master daemon: [ OK ]

2. Grant database privileges

mysql> grant all on salt.* to salt@localhost identified by 'westos';

mysql> flush privileges;

3. Test, then check the database

[root@server1 ~]# salt 'server3' test.ping

server3:

True

[root@server1 ~]# salt 'server2' test.ping

server2:

True

Custom modules

1. Create the directory for custom modules and write a Python module

[root@server1 ~]# mkdir /srv/salt/_modules

[root@server1 ~]# cd /srv/salt/_modules

[root@server1 _modules]# ls

[root@server1 _modules]# vim my_disk.py

[root@server1 _modules]# cat my_disk.py

#!/usr/bin/env python
def df():
    return __salt__['cmd.run']('df -h')    # run df -h through the cmd.run module to show disk usage
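Salt's loader injects `__salt__` into custom modules at runtime, so my_disk.py cannot be called as-is outside Salt. For a quick local check, a hypothetical stand-in dict can be injected before calling df(); this is a testing sketch, not how Salt itself loads the module.

```python
# my_disk.py, as above: Salt's loader injects __salt__ at runtime.
def df():
    """Return `df -h` output from the minion via the cmd.run execution module."""
    return __salt__['cmd.run']('df -h')


# Outside Salt there is no __salt__, so this hypothetical test harness
# injects a stand-in whose 'cmd.run' records the command and returns canned text.
calls = []


def fake_cmd_run(cmd):
    calls.append(cmd)
    return "Filesystem      Size  Used Avail Use% Mounted on"


__salt__ = {'cmd.run': fake_cmd_run}
```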

2. Sync the module to all minions

[root@server1 _modules]# salt '*' saltutil.sync_modules

3. Test

[root@server1 _modules]# salt '*' my_disk.df

4. Check the synced cache files on a minion

[root@server4 ~]# cd /var/cache/

[root@server4 cache]# ls

ldconfig salt yum

[root@server4 cache]# cd salt/

[root@server4 salt]# ls

minion

[root@server4 salt]# cd minion/

[root@server4 minion]# ls

accumulator extmods files highstate.cache.p module_refresh pkg_refresh proc sls.p

[root@server4 minion]# cd extmods/

[root@server4 extmods]# ls

grains modules

[root@server4 extmods]# cd modules/

[root@server4 modules]# ls

my_disk.py my_disk.pyc

[root@server4 modules]# ls ../grains/

my_grains.py my_grains.pyc

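Note: custom modules work much like custom grains, and they are synced to a similar cache path (extmods/modules next to extmods/grains).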

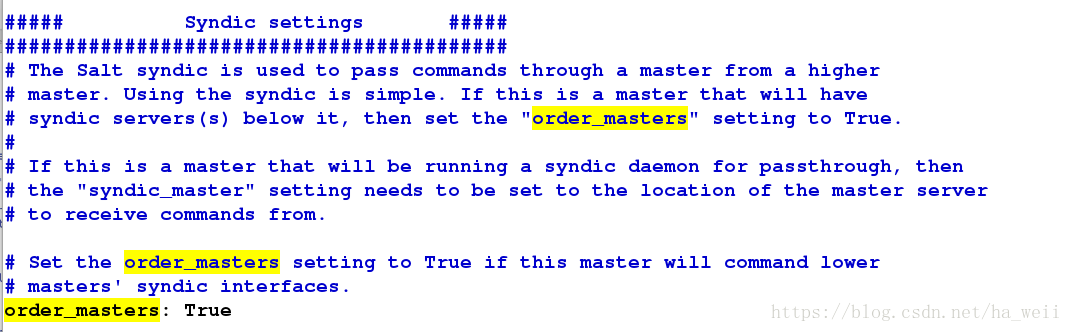

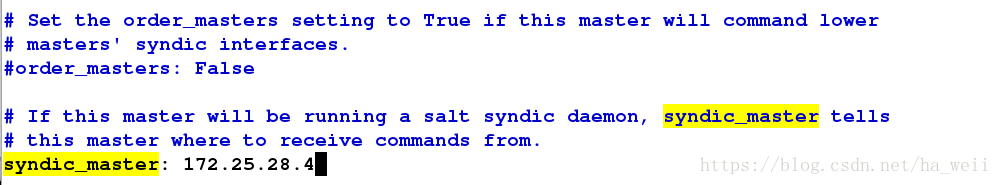

http://docs.saltstack.cn/topics/topology/syndic.html

TOP-MASTER

Scaling out masters horizontally (syndic)

1. Remove server4 as a minion

[root@server1 ~]# salt-key -L

Accepted Keys:

server1

server2

server3

server4

Denied Keys:

Unaccepted Keys:

Rejected Keys:

[root@server1 ~]# salt-key -d server4

The following keys are going to be deleted:

Accepted Keys:

server4

Proceed? [N/y] y

Key for minion server4 deleted.

[root@server1 ~]# salt-key -L

Accepted Keys:

server1

server2

server3

Denied Keys:

Unaccepted Keys:

Rejected Keys:

2. Stop all services on server4

[root@server4 ~]# /etc/init.d/salt-minion stop

Stopping salt-minion:root:server4 daemon: OK

[root@server4 ~]# chkconfig salt-minion off

[root@server4 ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@server4 ~]# /etc/init.d/haproxy stop

Stopping haproxy: [ OK ]

3. Install salt-master on server4, edit its config file, and start it

[root@server4 ~]# yum install -y salt-master

[root@server4 ~]# vim /etc/salt/master

[root@server4 ~]# /etc/init.d/salt-master start

Starting salt-master daemon: [ OK ]

4. On the master (server1), install salt-syndic, start it, edit the config file, and restart

[root@server1 ~]# yum install -y salt-syndic    # the syndic must run on the same host as its master

[root@server1 ~]# /etc/init.d/salt-syndic start

Starting salt-syndic daemon: [ OK ]

[root@server1 ~]# vim /etc/salt/master

[root@server1 ~]# /etc/init.d/salt-master stop

[root@server1 ~]# /etc/init.d/mysqld start

5. Exchange keys between the top master and the master: accept server1's key on server4

[root@server4 ~]# salt-key -L

Accepted Keys:

Denied Keys:

Unaccepted Keys:

server1

Rejected Keys:

[root@server4 ~]# salt-key -A

The following keys are going to be accepted:

Unaccepted Keys:

server1

Proceed? [n/Y] Y

Key for minion server1 accepted.

6. Test

[root@server4 ~]# salt '*' test.ping

server3:

True

server2:

True

server1:

True

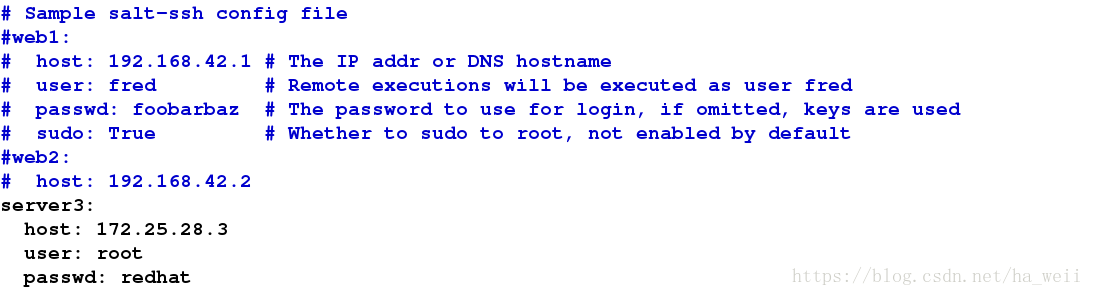

SALT-SSH (similar to Ansible)

Managing server3 over SSH, without salt-minion

1. Stop salt-minion on server3

[root@server3 ~]# /etc/init.d/salt-minion stop

Stopping salt-minion:root:server3 daemon: OK

2. Install salt-ssh and edit the roster file

[root@server1 ~]# yum install -y salt-ssh

[root@server1 ~]# vim /etc/salt/roster

3. salt-ssh conflicts with the database settings in the master config, so comment them out

[root@server1 ~]# vim /etc/salt/master

4. Test. The first run asks for server3's host key to be accepted; rerun with -i to accept it automatically

[root@server1 ~]# salt-ssh 'server3' test.ping

server3:

----------

retcode:

254

stderr:

stdout:

The host key needs to be accepted, to auto accept run salt-ssh with the -i flag:

The authenticity of host '172.25.28.3 (172.25.28.3)' can't be established.

RSA key fingerprint is bd:61:e0:5f:54:e4:1b:06:f4:7d:58:27:b4:47:d6:79.

Are you sure you want to continue connecting (yes/no)?

[root@server1 ~]# salt-ssh 'server3' test.ping -i

server3:

True

5. server3 is now managed from server1 over SSH; test it with the custom module

[root@server1 ~]# salt-ssh 'server3' my_disk.df

server3:

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 19G 1.1G 17G 7% /

tmpfs 499M 48K 499M 1% /dev/shm

/dev/vda1 485M 33M 427M 8% /boot
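my_disk.df returns plain text. If the numbers are needed programmatically, a small parser helps. The sketch below uses the output above as sample input and assumes one filesystem per line; plain `df -h` wraps long device names onto a second line, which this does not handle (`df -hP` avoids the wrapping). `parse_df` is a hypothetical helper, not part of Salt.

```python
SAMPLE = """Filesystem Size Used Avail Use% Mounted on
/dev/mapper/VolGroup-lv_root 19G 1.1G 17G 7% /
tmpfs 499M 48K 499M 1% /dev/shm
/dev/vda1 485M 33M 427M 8% /boot"""


def parse_df(output):
    """Parse `df -h` text into a list of dicts, one per filesystem.

    Assumes each filesystem is on a single line (as with `df -hP`);
    wrapped lines from plain `df -h` are not handled.
    """
    rows = []
    lines = [line for line in output.splitlines() if line.strip()]
    for line in lines[1:]:  # skip the header row
        fs, size, used, avail, pct, mount = line.split(None, 5)
        rows.append({"filesystem": fs, "size": size, "used": used,
                     "avail": avail, "use_pct": int(pct.rstrip("%")),
                     "mounted_on": mount})
    return rows


if __name__ == "__main__":
    for row in parse_df(SAMPLE):
        print(row["mounted_on"], row["use_pct"])
```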

API

http://docs.saltstack.cn/ref/netapi/all/salt.netapi.rest_cherrypy.html

Method 1:

1. Start the salt-minion service on server3

[root@server3 ~]# /etc/init.d/salt-minion start

Starting salt-minion:root:server3 daemon: OK

2. Install salt-api

[root@server1 ~]# yum install -y salt-api

3. Generate a private key

[root@server1 ~]# cd /etc/pki/

[root@server1 pki]# ls

CA ca-trust entitlement java nssdb product rpm-gpg rsyslog tls

[root@server1 pki]# cd tls/

[root@server1 tls]# ls

ccert.pem certs misc openssl.cnf private

[root@server1 tls]# cd private/

[root@server1 private]# openssl genrsa 1024 > localhost.key

Generating RSA private key, 1024 bit long modulus

........................++++++

..............++++++

e is 65537 (0x10001)

[root@server1 private]# ls

localhost.key

4. Generate a self-signed certificate

[root@server1 tls]# cd certs/

[root@server1 certs]# ls

ca-bundle.crt ca-bundle.trust.crt make-dummy-cert Makefile renew-dummy-cert

[root@server1 certs]# make testcert

Country Name (2 letter code) [XX]:cn

State or Province Name (full name) []:shaanxi

Locality Name (eg, city) [Default City]:xi'an

Organization Name (eg, company) [Default Company Ltd]:westos

Organizational Unit Name (eg, section) []:linux

Common Name (eg, your name or your server's hostname) []:server1

Email Address []:root@localhost

5. Create api.conf. As the master config file shows, the master reads every *.conf file under master.d by default.

[root@server1 master.d]# vim api.conf

[root@server1 master.d]# cat api.conf

rest_cherrypy:
  port: 8000
  ssl_crt: /etc/pki/tls/certs/localhost.crt
  ssl_key: /etc/pki/tls/private/localhost.key

[root@server1 master.d]# ll /etc/pki/tls/private/localhost.key

-rw-r--r-- 1 root root 887 Aug 18 17:17 /etc/pki/tls/private/localhost.key

[root@server1 master.d]# ll /etc/pki/tls/certs/localhost.crt

-rw------- 1 root root 1029 Aug 18 17:18 /etc/pki/tls/certs/localhost.crt

6. Create auth.conf

[root@server1 master.d]# vim auth.conf

[root@server1 master.d]# cat auth.conf

external_auth:
  pam:
    saltapi:
      - '.*'          # all execution modules
      - '@wheel'      # wheel functions (e.g. key management)
      - '@runner'
      - '@jobs'

7. Create the user

[root@server1 master.d]# useradd saltapi

[root@server1 master.d]# passwd saltapi

Changing password for user saltapi.

New password:

BAD PASSWORD: it is based on a dictionary word

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

8. Restart the services and check the port

[root@server1 master.d]# /etc/init.d/salt-master stop

Stopping salt-master daemon: [ OK ]

[root@server1 master.d]# /etc/init.d/salt-master status

salt-master is stopped

[root@server1 master.d]# /etc/init.d/salt-master start

[root@server1 master.d]# /etc/init.d/salt-api start

Starting salt-api daemon: [ OK ]

[root@server1 master.d]# netstat -antlp | grep :8000

tcp 0 0 0.0.0.0:8000 0.0.0.0:* LISTEN 11156/salt-api -d

tcp 0 0 127.0.0.1:39928 127.0.0.1:8000 TIME_WAIT -

9. Log in and get a token. The password is the one set for the saltapi user (redhat here).

[root@server1 master.d]# curl -sSk https://localhost:8000/login \
> -H 'Accept: application/x-yaml' \
> -d username=saltapi \
> -d password=redhat \
> -d eauth=pam

return:
- eauth: pam
  expire: 1534628478.2735479
  perms:
  - .*
  - '@wheel'
  - '@runner'
  - '@jobs'
  start: 1534585278.2735469
  token: f1313d605be055f66c7af3a45e691f5f4a5c9d39
  user: saltapi
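The same login can be done with Python 3's standard library. The sketch below only builds the request and parses a reply, so it does not contact the server; the function names are hypothetical, it asks for JSON instead of YAML, and the sample reply is made up with the same shape as the output above.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def login_request(base_url, username, password):
    """Build (without sending) the POST /login request made by the curl call above."""
    body = urlencode({"username": username, "password": password,
                      "eauth": "pam"}).encode()
    return Request(base_url.rstrip("/") + "/login", data=body,
                   headers={"Accept": "application/json"})


def extract_token(reply_text):
    """Return the token from a JSON /login reply shaped like {"return": [{"token": ...}]}."""
    return json.loads(reply_text)["return"][0]["token"]


if __name__ == "__main__":
    req = login_request("https://localhost:8000", "saltapi", "redhat")
    print(req.get_method(), req.full_url, req.data)
    # made-up reply with the same shape as the YAML output above
    print(extract_token('{"return": [{"token": "abc123", "user": "saltapi"}]}'))
```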

10. Call the API with the token to test

[root@server1 master.d]# curl -sSk https://localhost:8000 \

> -H 'Accept: application/x-yaml' \

> -H 'X-Auth-Token: f1313d605be055f66c7af3a45e691f5f4a5c9d39' \

> -d client=local \

> -d tgt='*' \

> -d fun=test.ping

return:
- server1: true
  server2: true
  server3: true
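The test.ping call can likewise be sketched in Python 3. `send()` disables certificate verification because the certificate made with `make testcert` is self-signed, which is acceptable only in a lab setup like this one. The function names are hypothetical, and nothing here is sent unless `send()` is called against a running salt-api.

```python
import json
import ssl
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def run_request(base_url, token, tgt, fun):
    """Build the POST / request for the 'local' client, like the curl call above."""
    body = urlencode({"client": "local", "tgt": tgt, "fun": fun}).encode()
    return Request(base_url.rstrip("/") + "/", data=body,
                   headers={"Accept": "application/json", "X-Auth-Token": token})


def send(req):
    """Send a request, skipping verification of the self-signed certificate."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False        # must be turned off before CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE
    with urlopen(req, context=ctx) as resp:
        return json.loads(resp.read().decode())


def minion_results(reply):
    """Turn {"return": [{minion: result, ...}]} into {minion: result}."""
    return reply["return"][0]


if __name__ == "__main__":
    req = run_request("https://localhost:8000",
                      "f1313d605be055f66c7af3a45e691f5f4a5c9d39", "*", "test.ping")
    print(req.full_url, req.data)
```

With salt-api running, `minion_results(send(req))` would return a dict mapping each minion to its test.ping result.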

Method 2

Write a Python client directly

[root@server1 ~]# vim saltapi.py

[root@server1 ~]# cat saltapi.py

# -*- coding: utf-8 -*-
# Python 2 client for salt-api (rest_cherrypy); RHEL 6 ships Python 2.6
import urllib2, urllib
import time

try:
    import json
except ImportError:
    import simplejson as json


class SaltAPI(object):
    __token_id = ''

    def __init__(self, url, username, password):
        self.__url = url.rstrip('/')
        self.__user = username
        self.__password = password

    def token_id(self):
        ''' user login and get token id '''
        params = {'eauth': 'pam', 'username': self.__user, 'password': self.__password}
        # keep the body URL-encoded; unquoting it would break passwords containing & or =
        obj = urllib.urlencode(params)
        content = self.postRequest(obj, prefix='/login')
        try:
            self.__token_id = content['return'][0]['token']
        except KeyError:
            raise KeyError('no token in salt-api login response')

    def postRequest(self, obj, prefix='/'):
        url = self.__url + prefix
        headers = {'X-Auth-Token': self.__token_id, 'Accept': 'application/json'}
        req = urllib2.Request(url, obj, headers)
        opener = urllib2.urlopen(req)
        content = json.loads(opener.read())
        return content

    def list_all_key(self):
        params = {'client': 'wheel', 'fun': 'key.list_all'}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        minions = content['return'][0]['data']['return']['minions']
        minions_pre = content['return'][0]['data']['return']['minions_pre']
        return minions, minions_pre

    def delete_key(self, node_name):
        params = {'client': 'wheel', 'fun': 'key.delete', 'match': node_name}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0]['data']['success']
        return ret

    def accept_key(self, node_name):
        params = {'client': 'wheel', 'fun': 'key.accept', 'match': node_name}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0]['data']['success']
        return ret

    def remote_noarg_execution(self, tgt, fun):
        ''' Execute commands without parameters '''
        params = {'client': 'local', 'tgt': tgt, 'fun': fun}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0][tgt]
        return ret

    def remote_execution(self, tgt, fun, arg):
        ''' Command execution with parameters '''
        params = {'client': 'local', 'tgt': tgt, 'fun': fun, 'arg': arg}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        ret = content['return'][0][tgt]
        return ret

    def target_remote_execution(self, tgt, fun, arg):
        ''' Use targeting for remote execution '''
        params = {'client': 'local', 'tgt': tgt, 'fun': fun, 'arg': arg, 'expr_form': 'nodegroup'}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        jid = content['return'][0]['jid']
        return jid

    def deploy(self, tgt, arg):
        ''' Module deployment '''
        params = {'client': 'local', 'tgt': tgt, 'fun': 'state.sls', 'arg': arg}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        return content

    def async_deploy(self, tgt, arg):
        ''' Asynchronously send a command to connected minions '''
        params = {'client': 'local_async', 'tgt': tgt, 'fun': 'state.sls', 'arg': arg}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        jid = content['return'][0]['jid']
        return jid

    def target_deploy(self, tgt, arg):
        ''' Based on the node group forms deployment '''
        params = {'client': 'local_async', 'tgt': tgt, 'fun': 'state.sls', 'arg': arg, 'expr_form': 'nodegroup'}
        obj = urllib.urlencode(params)
        self.token_id()
        content = self.postRequest(obj)
        jid = content['return'][0]['jid']
        return jid


def main():
    # salt-api runs on server1 (172.25.28.1); use the saltapi user's PAM password.
    # Python 2.6 does not verify the self-signed certificate; Python >= 2.7.9 would reject it.
    sapi = SaltAPI(url='https://172.25.28.1:8000', username='saltapi', password='westos')
    sapi.token_id()
    print sapi.list_all_key()
    #sapi.delete_key('test-01')
    #sapi.accept_key('test-01')
    sapi.deploy('*', 'httpd.apache')
    #print sapi.remote_noarg_execution('test-01', 'grains.items')


if __name__ == '__main__':
    main()

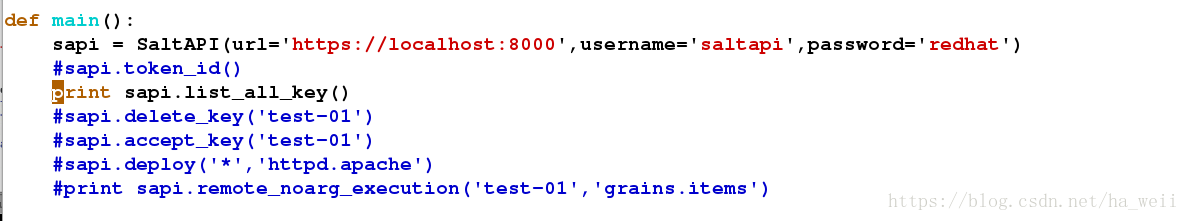

Test 1:

Change def main() to:

[root@server1 ~]# python saltapi.py

([u'server1', u'server2', u'server3'], [])

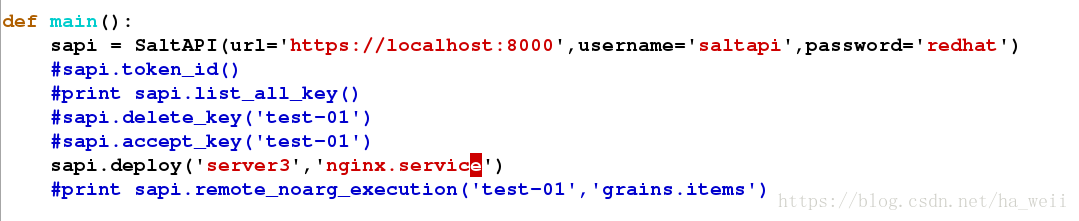

Test 2:

Change def main() to:

nginx.service is the state we defined ourselves in an .sls file earlier; it is called directly here.

[root@server3 ~]# /etc/init.d/nginx status

nginx is stopped

[root@server1 ~]# python saltapi.py

[root@server3 ~]# /etc/init.d/nginx status

nginx (pid 3947) is running...