Please excuse the ugly code formatting: I wrote it locally and pasted it here, and this is how it came out.

library("RCurl")

library("XML")

library("dplyr")

# Pool of User-Agent strings to choose from at random

User_Agent<-c()

User_Agent[1]<-'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'

User_Agent[2]<-'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50'

User_Agent[3]<-'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50'

User_Agent[4]<-'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0)'

User_Agent[5]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)'

User_Agent[6]<-'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)'

User_Agent[7]<-'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv,2.0.1) Gecko/20100101 Firefox/4.0.1'

User_Agent[8]<-'Mozilla/5.0 (Windows NT 6.1; rv,2.0.1) Gecko/20100101 Firefox/4.0.1'

User_Agent[9]<-'Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; en) Presto/2.8.131 Version/11.11'

User_Agent[10]<-'Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11'

User_Agent[11]<-'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11'

User_Agent[12]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)'

User_Agent[13]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; TencentTraveler 4.0)'

User_Agent[14]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)'

User_Agent[15]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; The World)'

User_Agent[16]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SE 2.X MetaSr 1.0; SE 2.X MetaSr 1.0; .NET CLR 2.0.50727; SE 2.X MetaSr 1.0)'

User_Agent[17]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)'

User_Agent[18]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Avant Browser)'

User_Agent[19]<-'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)'

# Return one randomly chosen User-Agent

RandomUA<-function() sample(User_Agent,1,replace=TRUE)

RandomUA()

Fetching free proxy IPs from Xici (xicidaili)

# Fetch proxy IPs

Get_Proxies<-function() {

init_proxies=c()

d<-debugGatherer()

chandle<-getCurlHandle(debugfunction=d$update,followlocation=TRUE,cookiefile="",verbose=TRUE)

# Scrape 8 pages

for (i in 1:8) {

url<-paste0("http://www.xicidaili.com/nn/",i)

headers<-c("User-Agent"=RandomUA())

result=tryCatch({

content<-url %>% getURL(curl=chandle,httpheader=headers,.encoding='utf-8');

1+1 # sentinel value: result is 2 only if getURL() succeeded

},error=function(e) {

cat("ERROR:",conditionMessage(e),"\n")

return(e$message)

})

if (result !=2) break # on error, result holds the error message, so stop scraping

myproxy<-content %>% htmlParse()

# Extract IP address + port + type

ip_addrs<-myproxy %>% xpathSApply('//tr/td[2]',xmlValue) # IP address

port<-myproxy %>% xpathSApply('//tr/td[3]',xmlValue) # port

genre_class<-myproxy %>% xpathSApply('//tr/td[6]',xmlValue) # type (HTTP/HTTPS)

for (j in seq_along(ip_addrs)) { # seq_along() skips the loop when no rows were found

ip<-paste0(ip_addrs[j],":",port[j])

names(ip)<-genre_class[j]

init_proxies <- c(init_proxies,ip)

}

}

return (init_proxies)

}
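As a side note, the inner loop in Get_Proxies() grows init_proxies one element at a time. A minimal sketch of a vectorized alternative is shown below, assuming the three scraped columns always have the same length and at least one row. The sample values are made up for illustration (the addresses come from a reserved documentation range), and this is only one possible way to build the same named vector, not the original approach.

```r
# Hypothetical sample values standing in for the scraped table columns.
ip_addrs    <- c("192.0.2.1", "192.0.2.2", "192.0.2.3")
port        <- c("8080", "3128", "80")
genre_class <- c("HTTP", "HTTPS", "HTTP")

# Build every "ip:port" string in one call and name each by its type,
# mirroring what the j-loop does element by element.
proxies <- setNames(paste0(ip_addrs, ":", port), genre_class)

# Keep only the plain-HTTP entries, the same filter testProxy() applies.
http_proxies <- proxies[names(proxies) == "HTTP"]
print(http_proxies)
```

Inside the page loop this would become something like `init_proxies <- c(init_proxies, setNames(paste0(ip_addrs, ":", port), genre_class))`, which avoids the per-row concatenation.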

myproxies<-Get_Proxies()

Validating the proxies by scraping the home page of the Zhejiang Courts public website (浙江法院公开网)

# Check whether each proxy IP actually works:

testProxy<-function(myproxies) {

tmp_proxies=c()

tarURL<-"http://www.zjsfgkw.cn/" # home page of the Zhejiang Courts public website

headers<-c("User-Agent"=RandomUA())

d<-debugGatherer()

for (ip in myproxies[which(names(myproxies)=='HTTP')]) {

chandle<-getCurlHandle(debugfunction=d$update,followlocation=TRUE,cookiefile="",proxy=ip,verbose=TRUE)

Error<-try(tarURL %>% getURL(curl=chandle,httpheader=headers,.opts=list(maxredirs=2,ssl.verifypeer=FALSE,timeout=4),.encoding="utf-8") %>% htmlParse() %>% xpathSApply('//*[@id="courtMenu"]/ul/li[2]/a',xmlValue) %>% .[1],silent=TRUE) # at most 2 redirects, 4-second request timeout, no SSL certificate verification

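# Only accept a single, non-missing string; an empty XPath match or a failed request is skipped

if (!inherits(Error,'try-error') && is.character(Error) && length(Error)==1 && !is.na(Error)) {

cat(ip,"\n")

# "法院概况" is the menu text expected on the real page

if (Error == "法院概况") {

tmp_proxies<-c(tmp_proxies,ip)

}

}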

Sys.sleep(runif(1,0.2,0.3))

}

return(tmp_proxies)

}
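The trickiest part of testProxy() is deciding whether a response counts as a success: the request may fail outright, the XPath may match nothing, or the value may be missing. The sketch below isolates that check in a small helper using base R only. The name is_expected_text and the test string "OK" are hypothetical, introduced purely for illustration.

```r
# Hypothetical helper: TRUE only when `result` is a single, non-missing
# string equal to `expected`. Anything else (a try-error, an empty match,
# NA, a list) counts as a failed proxy.
is_expected_text <- function(result, expected) {
  !inherits(result, "try-error") &&
    is.character(result) &&
    length(result) == 1 &&
    !is.na(result) &&
    result == expected
}

# Simulate a request that failed inside try().
failed <- try(stop("connection timed out"), silent = TRUE)

is_expected_text("OK", "OK")           # matching text
is_expected_text(failed, "OK")         # failed request
is_expected_text(list(NULL), "OK")     # XPath matched nothing
is_expected_text(NA_character_, "OK")  # missing value
```

Using `&&` rather than `&` matters here: it short-circuits, so the final comparison is never evaluated on a value that is not a plain string.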

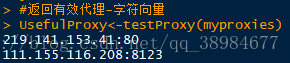

# Returns the working proxies as a character vector

UsefulProxy<-testProxy(myproxies)

In the end, only two of the 800 proxies turned out to be usable.