Overview:

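HBase can be deployed in three modes:

(1) Standalone (local) mode: does not depend on HDFS. Data is stored in a directory on the local file system, so you can learn HBase shell commands and client operations without setting up an HDFS cluster.

(2) Pseudo-distributed mode: all HBase daemons run on a single machine, and data is stored in HDFS.

(3) Fully distributed mode: HBase is deployed across multiple machines, usually with the HMaster and the HRegionServers on different servers. It also relies on HDFS as the underlying storage.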
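In terms of configuration, the modes differ mainly in two hbase-site.xml properties: hbase.rootdir (a local file:// path versus an hdfs:// URI) and hbase.cluster.distributed. The sketch below is a hypothetical helper, write_hbase_site, that writes a minimal file for either of the first two modes. The standalone data path /tmp/hbase-data is an assumed example; the pseudo-distributed values are the ones used later in this guide. A fully distributed setup would also list several hosts in hbase.zookeeper.quorum and in conf/regionservers.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal hbase-site.xml for a given mode.
# Usage: write_hbase_site standalone|pseudo OUTPUT_FILE
write_hbase_site() {
  local mode=$1 out=$2 rootdir distributed
  case "$mode" in
    standalone)
      # Local file system; /tmp/hbase-data is an assumed example path.
      rootdir="file:///tmp/hbase-data"
      distributed=false
      ;;
    pseudo)
      # HDFS on the single node, as configured later in this guide.
      rootdir="hdfs://hadoop-server01:9000/myhbase"
      distributed=true
      ;;
    *)
      echo "unknown mode: $mode" >&2
      return 1
      ;;
  esac
  cat > "$out" <<EOF
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>${rootdir}</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>${distributed}</value>
  </property>
</configuration>
EOF
}
```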

I. Installation Media

hbase-0.96.2-hadoop2-bin.tar.gz

II. Install the JDK

[root@hadoop-server01 bin]# mkdir -p /usr/local/apps

[root@hadoop-server01 bin]# ll /usr/local/apps/

total 4

drwxr-xr-x. 8 uucp 143 4096 Apr 10 2015 jdk1.7.0_80

[root@hadoop-server01 bin]# pwd

/usr/local/apps/jdk1.7.0_80/bin

[root@hadoop-server01 bin]# vi /etc/profile

Append the following lines:

export JAVA_HOME=/usr/local/apps/jdk1.7.0_80

export PATH=$PATH:$JAVA_HOME/bin

[root@hadoop-server01 bin]# source /etc/profile
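Before moving on, it can help to confirm that JAVA_HOME points at a usable JDK in the current shell. Below is a minimal sketch. check_java_home is a hypothetical helper name and is not part of the JDK or HBase.

```shell
#!/bin/sh
# Hypothetical helper: verify that JAVA_HOME is set and contains an executable bin/java.
check_java_home() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "no executable java under $JAVA_HOME/bin" >&2
    return 1
  fi
  echo "JAVA_HOME OK: $JAVA_HOME"
}
```

After source /etc/profile, running check_java_home should print "JAVA_HOME OK: /usr/local/apps/jdk1.7.0_80".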

III. Upload and Extract the Installation Package

1. Upload the hbase-0.96.2-hadoop2-bin.tar.gz package to the server.

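2. Extract the package. tar -C does not create the target directory, so create it first:

[root@hadoop-server01 ~]# mkdir -p /apps/hbase

[root@hadoop-server01 ~]# tar -xvf hbase-0.96.2-hadoop2-bin.tar.gz -C /apps/hbase/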
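Later steps call start-hbase.sh and hbase shell from the conf directory without a full path. That only works if HBase's bin directory is on PATH, and the original does not show that step. Below is a minimal sketch. It assumes you append to /etc/profile, as was done for the JDK; add_hbase_to_profile is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: append HBASE_HOME and its bin directory to a profile file.
# Usage: add_hbase_to_profile PROFILE_FILE HBASE_HOME_DIR
add_hbase_to_profile() {
  local profile=$1 hbase_home=$2
  # Do nothing if an HBASE_HOME export is already there, so re-running is harmless.
  if grep -q '^export HBASE_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  {
    echo "export HBASE_HOME=$hbase_home"
    # Single quotes keep $PATH and $HBASE_HOME unexpanded until the profile is sourced.
    echo 'export PATH=$PATH:$HBASE_HOME/bin'
  } >> "$profile"
}
```

For this guide that would be `add_hbase_to_profile /etc/profile /apps/hbase/hbase-0.96.2-hadoop2` followed by `source /etc/profile`.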

IV. Edit the Configuration Files

[root@hadoop-server01 conf]# cd /apps/hbase/hbase-0.96.2-hadoop2/conf

Edit hbase-env.sh

Change the following settings:

# The java implementation to use. Java 1.6 required.

# export JAVA_HOME=/usr/java/jdk1.6.0/

export JAVA_HOME=/usr/local/apps/jdk1.7.0_80

# Extra Java CLASSPATH elements. Optional.

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

export HBASE_MANAGES_ZK=true

The main changes are setting JAVA_HOME and letting HBase manage its own bundled ZooKeeper instance (HBASE_MANAGES_ZK=true).
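If you prefer not to edit hbase-env.sh by hand, the same two changes can be scripted. Below is a minimal sketch built around a hypothetical helper, set_env_export. It assumes GNU sed for `sed -i`, as on CentOS/RHEL. The helper removes any active export of the variable and appends the new value, and it leaves the commented examples in the stock file untouched.

```shell
#!/bin/sh
# Hypothetical helper: make FILE contain exactly one active "export NAME=VALUE" line.
# Assumes GNU sed for in-place editing (-i).
set_env_export() {
  local file=$1 name=$2 value=$3
  # Delete uncommented exports of NAME; lines starting with "#" are kept.
  sed -i "/^export ${name}=/d" "$file"
  echo "export ${name}=${value}" >> "$file"
}
```

Run it from the conf directory as `set_env_export hbase-env.sh JAVA_HOME /usr/local/apps/jdk1.7.0_80` and `set_env_export hbase-env.sh HBASE_MANAGES_ZK true`.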

Edit hbase-site.xml

<configuration>

<!-- Directory where HBase stores its data -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://hadoop-server01:9000/myhbase</value>

</property>

<!-- Run HBase in distributed mode (true for both pseudo-distributed and fully distributed setups) -->

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- ZooKeeper quorum used by HBase; here it is the ZooKeeper bundled with HBase -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>hadoop-server01</value>

</property>

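<!-- HDFS replication factor for the files HBase writes (an HDFS client setting, not a region replica count); set to 1 because pseudo-distributed mode has only one DataNode -->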

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
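The host and port in hbase.rootdir should point at the same NameNode that Hadoop itself uses, that is, fs.defaultFS in core-site.xml. Below is a minimal consistency check that uses the hypothetical helpers get_xml_prop and check_rootdir. The awk extraction is a simple line-based approach, not a real XML parser: it assumes each `<name>` is followed by its `<value>`, as in the file above. It also assumes the property is named fs.defaultFS rather than the older fs.default.name.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> of property NAME from a Hadoop/HBase XML file.
# Line-based, not a real XML parser: assumes <value> follows <name> within the same <property>.
get_xml_prop() {
  awk -v prop="$2" '
    index($0, "<name>" prop "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # 7 = length("<value>"), 15 = length("<value>") + length("</value>")
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$1"
}

# Hypothetical helper: check that hbase.rootdir lives under fs.defaultFS.
# Usage: check_rootdir HBASE_SITE_XML CORE_SITE_XML
check_rootdir() {
  local rootdir defaultfs
  rootdir=$(get_xml_prop "$1" hbase.rootdir)
  defaultfs=$(get_xml_prop "$2" fs.defaultFS)
  defaultfs=${defaultfs%/}
  case "$rootdir" in
    "$defaultfs"/*)
      if [ -n "$defaultfs" ]; then
        echo "OK: $rootdir is under $defaultfs"
        return 0
      fi
      ;;
  esac
  echo "MISMATCH: hbase.rootdir=$rootdir fs.defaultFS=$defaultfs" >&2
  return 1
}
```

The core-site.xml location is an assumption inferred from the Hadoop log paths in the next step. With that assumption, the call would be `check_rootdir hbase-site.xml /usr/local/apps/hadoop-2.4.1/etc/hadoop/core-site.xml`.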

V. Start HBase

In pseudo-distributed mode HBase stores its data in HDFS, so Hadoop HDFS must be started before HBase.

1. Start the HDFS daemons (NameNode, DataNode and SecondaryNameNode)

[root@hadoop-server01 conf]# start-dfs.sh

Starting namenodes on [hadoop-server01]

hadoop-server01: starting namenode, logging to /usr/local/apps/hadoop-2.4.1/logs/hadoop-root-namenode-hadoop-server01.out

hadoop-server01: starting datanode, logging to /usr/local/apps/hadoop-2.4.1/logs/hadoop-root-datanode-hadoop-server01.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/local/apps/hadoop-2.4.1/logs/hadoop-root-secondarynamenode-hadoop-server01.out

[root@hadoop-server01 conf]# jps | grep -v Jps

6693 DataNode

6573 NameNode

6841 SecondaryNameNode
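Instead of reading the jps listing by eye, you can script the check. Below is a minimal sketch with a hypothetical helper, require_daemons. It reads jps output on stdin, where each line is a PID followed by the main class name, and fails if any expected daemon is missing.

```shell
#!/bin/sh
# Hypothetical helper: read `jps` output on stdin and verify each named daemon is present.
# Usage: jps | require_daemons NameNode DataNode SecondaryNameNode
require_daemons() {
  local running missing d
  # Keep only the second column (the JVM's main class name).
  running=$(awk '{print $2}')
  missing=0
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$d"; then
      echo "not running: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

After start-hbase.sh in the next step, the same helper can be used as `jps | require_daemons HMaster HRegionServer HQuorumPeer`.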

2. Start the HBase services

[root@hadoop-server01 conf]# start-hbase.sh

hadoop-server01: starting zookeeper, logging to /apps/hbase/hbase-0.96.2-hadoop2/bin/../logs/hbase-root-zookeeper-hadoop-server01.out

starting master, logging to /apps/hbase/hbase-0.96.2-hadoop2/logs/hbase-root-master-hadoop-server01.out

The authenticity of host 'localhost (::1)' can't be established.

RSA key fingerprint is 4e:96:f2:47:ce:d4:9d:8b:db:cc:dd:7a:79:63:01:74.

Are you sure you want to continue connecting (yes/no)? yes

localhost: Warning: Permanently added 'localhost' (RSA) to the list of known hosts.

localhost: starting regionserver, logging to /apps/hbase/hbase-0.96.2-hadoop2/bin/../logs/hbase-root-regionserver-hadoop-server01.out

[root@hadoop-server01 conf]# jps | grep -v Jps

6693 DataNode

6573 NameNode

6841 SecondaryNameNode

7242 HMaster

7421 HRegionServer

7175 HQuorumPeer

The startup log shows that ZooKeeper starts first, then HMaster, and then HRegionServer.
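The SSH host-key prompt during start-hbase.sh has a specific cause. The script starts a RegionServer over SSH on every host listed in conf/regionservers, and that file contains localhost by default. start-dfs.sh did not prompt for hadoop-server01, so that host's key was evidently already known. Answering yes once is enough. Alternatively, you can list the machine's hostname instead. Below is a minimal sketch with a hypothetical helper, set_regionservers, which backs up the previous file first.

```shell
#!/bin/sh
# Hypothetical helper: replace conf/regionservers with the given host names.
# Usage: set_regionservers CONF_DIR HOST [HOST...]
set_regionservers() {
  local conf_dir=$1 file
  shift
  file="$conf_dir/regionservers"
  # Keep the previous host list as regionservers.bak.
  if [ -f "$file" ]; then
    cp -p "$file" "$file.bak"
  fi
  # One host per line.
  printf '%s\n' "$@" > "$file"
}
```

Here that would be `set_regionservers /apps/hbase/hbase-0.96.2-hadoop2/conf hadoop-server01`, run before start-hbase.sh.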

VI. Verification

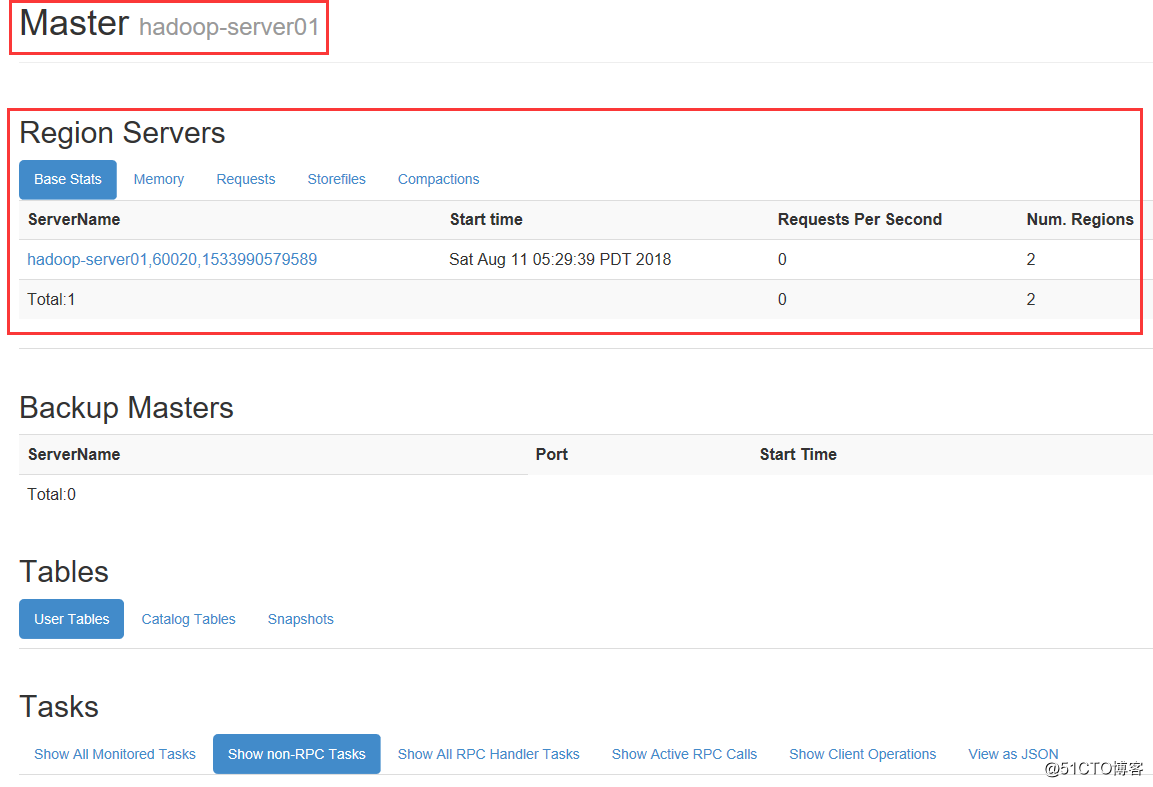

通过网页检查HBase是否正常

访问端口 60010

如下图,Hbase搭建成功
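The same check can also be done from the command line. Below is a minimal sketch. It assumes curl is installed and that the 0.96 master status page is served at /master-status on port 60010; check_master_ui is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the HMaster web UI answers with an HTTP success code.
# Usage: check_master_ui HOST [PORT]   (PORT defaults to 60010, the 0.96 master UI port)
check_master_ui() {
  local host=$1 port=${2:-60010}
  # -s: quiet, -f: fail on HTTP errors, --max-time: do not hang on an unreachable host
  if curl -sf --max-time 5 -o /dev/null "http://$host:$port/master-status"; then
    echo "HMaster UI reachable at http://$host:$port/"
  else
    echo "HMaster UI not reachable at http://$host:$port/" >&2
    return 1
  fi
}
```

For this setup: `check_master_ui hadoop-server01`.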

Create a test table:

[root@hadoop-server01 conf]# hbase shell

2018-08-11 05:34:01,693 INFO [main] Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 0.96.2-hadoop2, r1581096, Mon Mar 24 16:03:18 PDT 2014

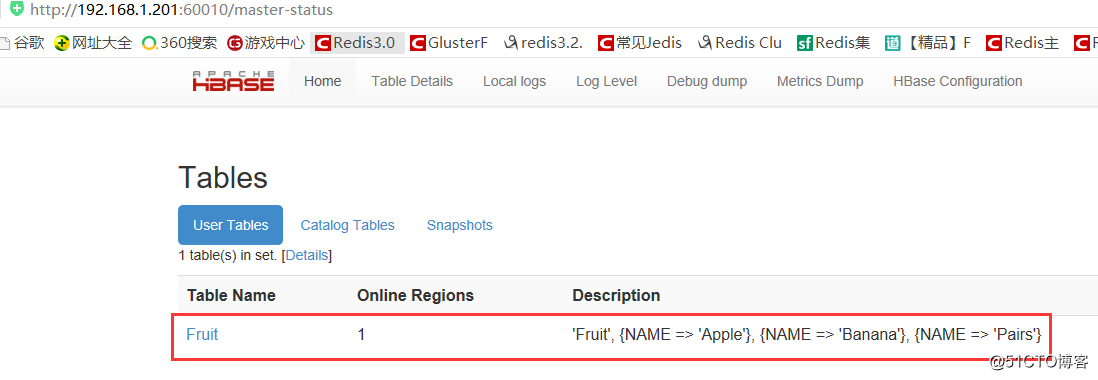

hbase(main):001:0> create 'Fruit','Apple','Banana','Pairs'

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/apps/hbase/hbase-0.96.2-hadoop2/lib/slf4j-log4j12-1.6.4.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/apps/hadoop-2.4.1/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

0 row(s) in 1.3400 seconds

=> Hbase::Table - Fruit

hbase(main):002:0>
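The HBase shell can also read its commands from a file, which makes a test easy to repeat. Below is a minimal sketch. The hypothetical helper write_fruit_test writes a few commands against the Fruit table created above. The row key row1, the qualifier color and the value red are made-up sample data. The trailing exit makes the shell quit after running the file.

```shell
#!/bin/sh
# Hypothetical helper: write an HBase shell script that exercises the 'Fruit' table.
# 'row1', 'Apple:color' and 'red' are made-up sample values; 'Apple' is one of the
# column families created above.
write_fruit_test() {
  cat > "$1" <<'EOF'
put 'Fruit', 'row1', 'Apple:color', 'red'
get 'Fruit', 'row1'
scan 'Fruit'
exit
EOF
}
```

Usage: `write_fruit_test /tmp/fruit_test.hbase && hbase shell /tmp/fruit_test.hbase`.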

Check that the new table exists under hdfs://192.168.1.201:9000/myhbase, the hbase.rootdir configured above:

[root@hadoop-server01 conf]# hdfs dfs -ls /myhbase/data/default

Found 1 items

drwxr-xr-x - root supergroup 0 2018-08-11 05:34 /myhbase/data/default/Fruit

This shows that the table was written to HDFS. It can also be viewed in the HBase web console.
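As the listing shows, HBase 0.96 keeps each table under `<hbase.rootdir>/data/<namespace>/<table>`. Tables created without a namespace go into default. Below is a minimal sketch of a hypothetical helper, table_dir, that builds that path so you can script the existence check with hdfs dfs -test -d.

```shell
#!/bin/sh
# Hypothetical helper: print the HDFS directory of a table.
# Usage: table_dir ROOTDIR TABLE [NAMESPACE]   (NAMESPACE defaults to "default")
table_dir() {
  local rootdir=$1 table=$2 ns=${3:-default}
  # Strip a trailing slash from ROOTDIR so the path has no "//".
  printf '%s/data/%s/%s\n' "${rootdir%/}" "$ns" "$table"
}
```

Usage: `hdfs dfs -test -d "$(table_dir /myhbase Fruit)" && echo "Fruit exists"`.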

This completes the setup of the HBase pseudo-distributed cluster.