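More and more of the tools and software we use are deployed either as multi-node distributed systems or in a client/server (C/S) layout. In both cases, creating and starting a single virtual machine is not enough to complete even a basic installation, let alone run any simulation tests. Vagrant greatly speeds up the construction of multi-node test environments: within a few minutes you can have a set of hosts and networks running that meets your testing needs.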

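Another reason we use Vagrant to manage our simulated test environments is that it makes an environment's configuration easy to share. All it takes is a single Vagrantfile describing the system image, node resources, networks, and any other customizations the environment needs. When you decide to test some complex tool, you may only need to obtain a Vagrantfile that someone else has shared and run vagrant up. If configuring a piece of software leaves the test environment "dirty", that is no problem: run vagrant destroy to destroy the current environment and start again from scratch.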

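Next, we demonstrate how to put Vagrant to good use by deploying simulated test environments for two tools: SaltStack, the well-known automation and operations tool, and Kubernetes, the current favorite in Docker container cluster management. We will deploy a 3-host simulated test environment for SaltStack's C/S architecture, and a 4-host environment as the test infrastructure for k8s.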

Note: VirtualBox is used as the virtualization software here, but Vagrant also supports many other virtualization tools, so you can choose whichever one you are used to.

1. Using Vagrant to quickly deploy a multi-node SaltStack simulated test environment

Complete the following steps to set up a simple SaltStack environment.

1) Install VirtualBox

https://www.virtualbox.org/

2) Install Vagrant

https://www.vagrantup.com/

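Vagrant is designed for everyone as the simplest and fastest way to create a virtualized environment!

Vagrant is a tool for building and managing virtual machine environments in a single workflow. With an easy-to-use workflow and a focus on automation, Vagrant lowers development environment setup time and increases productivity.

Vagrant can be installed and used on many operating systems. We use the Windows version as the example here.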

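3) Download a predefined Vagrantfile

https://github.com/UtahDave/salt-vagrant-demo

You can clone the project from GitHub with git, or download it as a zip file.

This is a Salt demo environment built with Vagrant; what it provides is essentially a predefined Vagrantfile. That file describes a Salt C/S simulated test environment made up of one Salt master node and two Salt minion nodes.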
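To make the shape of such a file concrete, here is a minimal sketch of a data-driven Vagrantfile for one master and two minions. It is not the project's actual Vagrantfile: the salt_nodes helper, the machine names, the hostnames, and the 192.168.50.x host addresses are assumptions for illustration, and the Salt installation step is left out entirely. The defined?(Vagrant) guard is only there so that the node table can also be loaded and checked with plain ruby.

```ruby
# Sketch only: a node table for one Salt master and two minions.
# The names and IP addresses below are illustrative assumptions.
def salt_nodes(net_ip = "192.168.50")
  [
    { name: "master",  ip: "#{net_ip}.10" },
    { name: "minion1", ip: "#{net_ip}.11" },
    { name: "minion2", ip: "#{net_ip}.12" },
  ]
end

# Under plain `ruby` the Vagrant constant is undefined, so this part is skipped.
if defined?(Vagrant)
  Vagrant.configure("2") do |config|
    config.vm.box = "bento/ubuntu-16.04"

    salt_nodes.each do |spec|
      config.vm.define spec[:name] do |node|
        # Use the per-machine variable `node`, not the outer `config`.
        node.vm.hostname = spec[:name]
        node.vm.network "private_network", ip: spec[:ip]
      end
    end
  end
end
```

Keeping the topology in one table means that adding a minion is a one-line change, which is the kind of edit a shared Vagrantfile is meant to make easy.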

4) Run vagrant up to start the demo environment

First unzip the downloaded zip file, then open the extracted directory in a command prompt:

cd %homepath%\Downloads\salt-vagrant-demo-master

Start the test environment:

vagrant up

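Once vagrant up has finished (about 10 minutes) and you are back at the command prompt, you can continue with this guide.

The demo environment above uses ubuntu-16.04 to build a test environment with one Salt master node and two minion nodes.

Note: the box image has to be downloaded from an overseas site, which often fails because of slow network speeds, so an offline method for importing the system image is provided further below.

When you see the following output, the demo environment has been created successfully: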

.............................................

Processing triggers for libc-bin (2.23-0ubuntu10) ...

Processing triggers for systemd (229-4ubuntu21.2) ...

Processing triggers for ureadahead (0.100.0-19) ...

* INFO: Running install_ubuntu_stable_post()

* INFO: Running install_ubuntu_check_services()

* INFO: Running install_ubuntu_restart_daemons()

* INFO: Running daemons_running()

* INFO: Salt installed!

Salt successfully configured and installed!

run_overstate set to false. Not running state.overstate.

run_highstate set to false. Not running state.highstate.

orchestrate is nil. Not running state.orchestrate.

D:\tools\salt-vagrant-demo-master>

Log in to the Salt master and use it as follows:

D:\tools\salt-vagrant-demo-master>vagrant ssh master

Welcome to Ubuntu 16.04.4 LTS (GNU/Linux 4.4.0-87-generic x86_64)

* Documentation:

https://help.ubuntu.com

* Management:

https://landscape.canonical.com

* Support:

https://ubuntu.com/advantage

43 packages can be updated.

20 updates are security updates.

vagrant@saltmaster:~$ sudo salt '*' test.ping

minion2:

True

minion1:

True

vagrant@saltmaster:~$

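After you run vagrant up, Vagrant creates and starts several VirtualBox virtual machines in the background. They keep running until you shut them down, so be sure to run vagrant halt when you are done: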

vagrant halt

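To start them again, run vagrant up. If you want to start over, run vagrant destroy and then vagrant up.

Note: if you forget to run vagrant halt, the virtual machines keep running as background processes on your system, so remember to shut them down yourself when you are finished.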

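5) Common Vagrant commands

$ vagrant init # initialize (creates a Vagrantfile in the current directory)

$ vagrant up # start the virtual machines

$ vagrant halt # shut down the virtual machines

$ vagrant reload # restart the virtual machines

$ vagrant ssh # SSH into a virtual machine

$ vagrant status # show the state of the virtual machines

$ vagrant destroy # destroy the current virtual machines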

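6) Importing a Vagrant box image file offline

Resources:

Below are the first 10 lines of the Vagrantfile from the salt-vagrant-demo-master project, which show the operating system and version that Vagrant is asked to use: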

# -*- mode: ruby -*-

# vi: set ft=ruby :

# Vagrantfile API/syntax version. Don't touch unless you know what you're doing!

VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

os = "bento/ubuntu-16.04"

net_ip = "192.168.50"

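We can see that the salt-vagrant project uses the bento/ubuntu-16.04 box.

Note: this image is itself a free, open-source resource that is publicly available, so sharing it on a network drive does not raise any infringement concerns.

Create a subdirectory named bento inside salt-vagrant-demo-master and put the ubuntu-16.04-virtualbox.box file in it. Then run the following command to add the box under the name bento/ubuntu-16.04.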

D:\tools\salt-vagrant-demo-master>vagrant box add bento/ubuntu-16.04 file:///d:/tools/salt-vagrant-demo-master/bento/ubuntu-16.04-virtualbox.box

==> box: Box file was not detected as metadata. Adding it directly...

==> box: Adding box 'bento/ubuntu-16.04' (v0) for provider:

box: Unpacking necessary files from: file:///d:/tools/salt-vagrant-demo-master/bento/ubuntu-16.04-virtualbox.box

box: Progress: 100% (Rate: 66.0M/s, Estimated time remaining: --:--:--)

==> box: Successfully added box 'bento/ubuntu-16.04' (v0) for 'virtualbox'!

D:\tools\salt-vagrant-demo-master>

Note: on Windows, the path looks something like this: file:///c:/users/jellybool/downloads/virtualbox.box
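The file:/// form is easy to get wrong by hand, so a small helper can build it from an ordinary path. The following is a sketch only: box_file_url is a hypothetical name, and the function simply swaps backslashes for forward slashes and adds the leading slash that a drive-letter path needs. It does not percent-encode spaces or other special characters.

```ruby
# Hypothetical helper: turn a local Windows or Unix path into a file:// URL
# of the form used with `vagrant box add`.
def box_file_url(path)
  normalized = path.gsub("\\", "/")
  # A drive-letter path ("d:/...") needs a slash in front of the drive letter.
  normalized = "/#{normalized}" unless normalized.start_with?("/")
  "file://#{normalized}"
end

puts box_file_url('d:\tools\salt-vagrant-demo-master\bento\ubuntu-16.04-virtualbox.box')
```

The printed URL can then be passed as the last argument to vagrant box add.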

For how to add a box manually, see:

https://zhuanlan.zhihu.com/p/25338468

List the boxes that have been added:

D:\tools\salt-vagrant-demo-master>vagrant box list

bento/ubuntu-16.04 (virtualbox, 0)

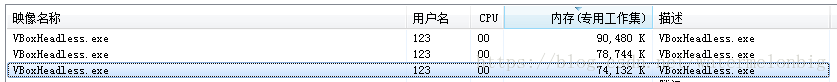

使用Vagrant并运行了salt-vagrant-demo-master项目提供的Demo环境后,可以在自己的windows任务管理器中看到类似下面的3个进程:

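7) About Vagrant's private_network

When creating virtual machines with Vagrant, we recommend configuring a private_network, for which you can specify each VM's IP address as needed.

If you log in to a VM after it has been created and inspect its network configuration, you will find that it has two network interfaces. The private_network we specified is the VM's second interface, while the VM's default route points to the first. The first interface is configured in NAT mode, which gives the VM access to the public network. The VM nodes created in bulk can communicate with each other over the second interface, that is, the private_network.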

2、使用Vagrant快速部署出一套Kubernetes的多节点模拟测试环境

安装virtualbox与Vagrant的部分略过。

1)测试环境的架构及地址信息

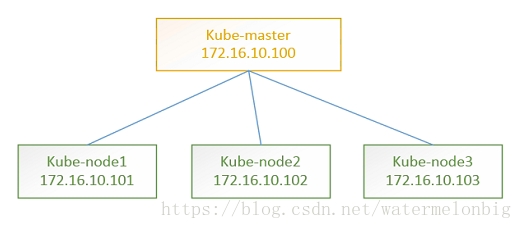

我们将要实现快速部署的这套k8s环境结构和网络地址信息如下图所示。虽然这里是提供了3个node节点,而实际上只需要改动Vagrantfile中的一个参数,就可以根据需要随意决定创建出几个node节点来。

2) The Vagrantfile that automatically generates four CentOS 7.5 test machines

Let's look at the Vagrantfile used to generate this simulated test environment:

# -*- mode: ruby -*-

# vi: set ft=ruby :

Vagrant.configure("2") do |config|

config.vm.box = "boxes/centos-7.5"

config.vm.box_check_update = false

config.vm.provider "virtualbox"

$num_vms = 3

(1..$num_vms).each do |id|

config.vm.define "kube-node#{id}" do |node|

node.vm.hostname = "kube-node#{id}"

node.vm.network :private_network, ip: "172.16.10.10#{id}", auto_config: true

node.vm.provider :virtualbox do |vb, override|

vb.name = "kube-node#{id}"

vb.gui = false

vb.memory = 1024

vb.cpus = 1

end

end

end

config.vm.define "kube-server" do |node|

node.vm.hostname = "kube-server"

node.vm.network :private_network, ip: "172.16.10.100", auto_config: true

node.vm.network "forwarded_port", guest: 8080, host: 8080 # kube-apiserver

node.vm.network "forwarded_port", guest: 8086, host: 8086 # kubectl proxy

node.vm.network "forwarded_port", guest: 443, host: 4443 # harbor

node.vm.provider :virtualbox do |vb, override|

vb.name = "kube-server"

vb.gui = false

vb.memory = 1024

vb.cpus = 1

end

end

end

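As the file shows, a loop first creates three kube-node machines, each with its own hostname and IP address.

It then defines kube-server and configures several forwarded ports from the host to this VM, so that the services inside it can be reached from outside the VM.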
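The loop derives each node's name and address from its index, which is why a single parameter controls how many nodes are created. The plain-Ruby sketch below reproduces that derivation outside Vagrant; the kube_nodes helper and its keyword arguments are hypothetical. One difference is deliberate: the Vagrantfile builds the address by string concatenation ("172.16.10.10#{id}"), which only yields valid addresses for ids 1 to 9, whereas this sketch adds the index to a numeric base so that larger counts also work.

```ruby
# Hypothetical helper: compute the name and private IP of each kube-node.
# For ids 1..9 the result matches the addresses used in the Vagrantfile.
def kube_nodes(num_vms, subnet: "172.16.10", base: 100)
  (1..num_vms).map do |id|
    host = base + id
    raise ArgumentError, "host part #{host} is out of range" if host > 254
    { name: "kube-node#{id}", ip: "#{subnet}.#{host}" }
  end
end

kube_nodes(3).each { |n| puts "#{n[:name]} #{n[:ip]}" }
```

Inside a Vagrantfile the same table could feed config.vm.define in place of the inline string interpolation.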

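3) Prepare the resources needed to run this Vagrantfile

Roughly three things need to be prepared:

- Create a directory on your PC and put the Vagrantfile above into it.

- In that directory, create a subdirectory named boxes to hold the box image file.

- Finally, find a usable VirtualBox box image and import it into Vagrant.

Here we use a self-made box file, centos-7.5.virtualbox.box.

- First, put the centos-7.5.virtualbox.box file into the boxes directory;

- Import it with the vagrant box add command;

- Check the result of the import: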

D:\soft\k8s-vagrant-demo>vagrant box list

There are no installed boxes! Use `vagrant box add` to add some.

D:\soft\k8s-vagrant-demo>vagrant box add boxes/centos-7.5 file:///d:/soft/k8s-vagrant-demo/boxes/centos-7.5.virtualbox.box

==> box: Box file was not detected as metadata. Adding it directly...

==> box: Adding box 'boxes/centos-7.5' (v0) for provider:

box: Unpacking necessary files from: file:///d:/soft/k8s-vagrant-demo/boxes/centos-7.5.virtualbox.box

box: Progress: 100% (Rate: 109M/s, Estimated time remaining: --:--:--)

==> box: Successfully added box 'boxes/centos-7.5' (v0) for 'virtualbox'!

D:\soft\k8s-vagrant-demo>vagrant box list

boxes/centos-7.5 (virtualbox, 0)

D:\soft\k8s-vagrant-demo>

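A copy of the centos-7.5.virtualbox.box file used above has been placed on a network drive for anyone who needs it.

You can also build your own box file to suit your needs.

There are many ways to build a box image. At present the most convenient is probably packer, combined with the Vagrant box template files shared on GitHub, which leaves you very little to do yourself.

The following two key links are provided for reference.

To build a Vagrant box with packer, install the packer tool, find a JSON template that suits you, and run packer build; the installation then completes automatically and outputs the box file.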

$ cd centos

$ packer build -except=parallels-iso,vmware-iso centos-7.5-x86_64.json

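4) Start the Vagrant test environment

- Open a command-line terminal and change to the directory that holds the Vagrantfile;

- Run vagrant up and wait 5 to 10 minutes (depending on your computer's performance);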

D:\soft\k8s-vagrant-demo>vagrant up

Bringing machine 'kube-node1' up with 'virtualbox' provider...

Bringing machine 'kube-node2' up with 'virtualbox' provider...

Bringing machine 'kube-node3' up with 'virtualbox' provider...

Bringing machine 'kube-server' up with 'virtualbox' provider...

==> kube-node1: Clearing any previously set network interfaces...

==> kube-node1: Preparing network interfaces based on configuration...

kube-node1: Adapter 1: nat

kube-node1: Adapter 2: hostonly

==> kube-node1: Forwarding ports...

kube-node1: 22 (guest) => 2222 (host) (adapter 1)

==> kube-node1: Running 'pre-boot' VM customizations...

==> kube-node1: Booting VM...

==> kube-node1: Waiting for machine to boot. This may take a few minutes...

kube-node1: SSH address: 127.0.0.1:2222

kube-node1: SSH username: vagrant

kube-node1: SSH auth method: private key

kube-node1: Warning: Connection reset. Retrying...

kube-node1: Warning: Connection aborted. Retrying...

kube-node1: Warning: Connection reset. Retrying...

kube-node1:

kube-node1: Vagrant insecure key detected. Vagrant will automatically replace

kube-node1: this with a newly generated keypair for better security.

kube-node1:

kube-node1: Inserting generated public key within guest...

kube-node1: Removing insecure key from the guest if it's present...

kube-node1: Key inserted! Disconnecting and reconnecting using new SSH key...

==> kube-node1: Machine booted and ready!

==> kube-node1: Checking for guest additions in VM...

==> kube-node1: Setting hostname...

==> kube-node1: Configuring and enabling network interfaces...

kube-node1: SSH address: 127.0.0.1:2222

kube-node1: SSH username: vagrant

kube-node1: SSH auth method: private key

==> kube-node1: Mounting shared folders...

kube-node1: /vagrant => D:/soft/k8s-vagrant-demo

==> kube-node2: Importing base box 'boxes/centos-7.5'...

==> kube-node2: Matching MAC address for NAT networking...

==> kube-node2: Setting the name of the VM: kube-node2

==> kube-node2: Fixed port collision for 22 => 2222. Now on port 2200.

==> kube-node2: Clearing any previously set network interfaces...

==> kube-node2: Preparing network interfaces based on configuration...

kube-node2: Adapter 1: nat

kube-node2: Adapter 2: hostonly

==> kube-node2: Forwarding ports...

kube-node2: 22 (guest) => 2200 (host) (adapter 1)

==> kube-node2: Running 'pre-boot' VM customizations...

==> kube-node2: Booting VM...

==> kube-node2: Waiting for machine to boot. This may take a few minutes...

kube-node2: SSH address: 127.0.0.1:2200

kube-node2: SSH username: vagrant

kube-node2: SSH auth method: private key

kube-node2: Warning: Connection aborted. Retrying...

kube-node2: Warning: Connection aborted. Retrying...

kube-node2: Warning: Connection reset. Retrying...

kube-node2: Warning: Remote connection disconnect. Retrying...

kube-node2: Warning: Connection aborted. Retrying...

kube-node2: Warning: Connection reset. Retrying...

kube-node2:

kube-node2: Vagrant insecure key detected. Vagrant will automatically replace

kube-node2: this with a newly generated keypair for better security.

kube-node2:

kube-node2: Inserting generated public key within guest...

kube-node2: Removing insecure key from the guest if it's present...

kube-node2: Key inserted! Disconnecting and reconnecting using new SSH key...

==> kube-node2: Machine booted and ready!

==> kube-node2: Checking for guest additions in VM...

==> kube-node2: Setting hostname...

==> kube-node2: Configuring and enabling network interfaces...

kube-node2: SSH address: 127.0.0.1:2200

kube-node2: SSH username: vagrant

kube-node2: SSH auth method: private key

==> kube-node2: Mounting shared folders...

kube-node2: /vagrant => D:/soft/k8s-vagrant-demo

==> kube-node3: Importing base box 'boxes/centos-7.5'...

==> kube-node3: Matching MAC address for NAT networking...

==> kube-node3: Setting the name of the VM: kube-node3

==> kube-node3: Fixed port collision for 22 => 2222. Now on port 2201.

==> kube-node3: Clearing any previously set network interfaces...

==> kube-node3: Preparing network interfaces based on configuration...

kube-node3: Adapter 1: nat

kube-node3: Adapter 2: hostonly

==> kube-node3: Forwarding ports...

kube-node3: 22 (guest) => 2201 (host) (adapter 1)

==> kube-node3: Running 'pre-boot' VM customizations...

==> kube-node3: Booting VM...

==> kube-node3: Waiting for machine to boot. This may take a few minutes...

kube-node3: SSH address: 127.0.0.1:2201

kube-node3: SSH username: vagrant

kube-node3: SSH auth method: private key

kube-node3: Warning: Remote connection disconnect. Retrying...

kube-node3: Warning: Connection aborted. Retrying...

kube-node3: Warning: Connection reset. Retrying...

kube-node3: Warning: Remote connection disconnect. Retrying...

kube-node3: Warning: Connection aborted. Retrying...

kube-node3: Warning: Remote connection disconnect. Retrying...

kube-node3: Warning: Connection aborted. Retrying...

kube-node3:

kube-node3: Vagrant insecure key detected. Vagrant will automatically replace

kube-node3: this with a newly generated keypair for better security.

kube-node3:

kube-node3: Inserting generated public key within guest...

kube-node3: Removing insecure key from the guest if it's present...

kube-node3: Key inserted! Disconnecting and reconnecting using new SSH key...

==> kube-node3: Machine booted and ready!

==> kube-node3: Checking for guest additions in VM...

==> kube-node3: Setting hostname...

==> kube-node3: Configuring and enabling network interfaces...

kube-node3: SSH address: 127.0.0.1:2201

kube-node3: SSH username: vagrant

kube-node3: SSH auth method: private key

==> kube-node3: Mounting shared folders...

kube-node3: /vagrant => D:/soft/k8s-vagrant-demo

==> kube-server: Clearing any previously set forwarded ports...

==> kube-server: Fixed port collision for 22 => 2222. Now on port 2202.

==> kube-server: Clearing any previously set network interfaces...

==> kube-server: Preparing network interfaces based on configuration...

kube-server: Adapter 1: nat

kube-server: Adapter 2: hostonly

==> kube-server: Forwarding ports...

kube-server: 8080 (guest) => 8080 (host) (adapter 1)

kube-server: 8086 (guest) => 8086 (host) (adapter 1)

kube-server: 443 (guest) => 4443 (host) (adapter 1)

kube-server: 22 (guest) => 2202 (host) (adapter 1)

==> kube-server: Running 'pre-boot' VM customizations...

==> kube-server: Booting VM...

==> kube-server: Waiting for machine to boot. This may take a few minutes...

kube-server: SSH address: 127.0.0.1:2202

kube-server: SSH username: vagrant

kube-server: SSH auth method: private key

kube-server: Warning: Connection reset. Retrying...

kube-server: Warning: Connection aborted. Retrying...

==> kube-server: Machine booted and ready!

==> kube-server: Checking for guest additions in VM...

==> kube-server: Setting hostname...

==> kube-server: Configuring and enabling network interfaces...

kube-server: SSH address: 127.0.0.1:2202

kube-server: SSH username: vagrant

kube-server: SSH auth method: private key

==> kube-server: Mounting shared folders...

kube-server: /vagrant => D:/soft/k8s-vagrant-demo

==> kube-server: Machine already provisioned. Run `vagrant provision` or use the `--provision`

==> kube-server: flag to force provisioning. Provisioners marked to run always will still run.

D:\soft\k8s-vagrant-demo>

List the Vagrant virtual machines:

D:\soft\k8s-vagrant-demo>vagrant status

Current machine states:

kube-node1 running (virtualbox)

kube-node2 running (virtualbox)

kube-node3 running (virtualbox)

kube-server running (virtualbox)

This environment represents multiple VMs. The VMs are all listed

above with their current state. For more information about a specific

VM, run `vagrant status NAME`.
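When the environment is driven from a script, it can be handy to turn this listing into data. The sketch below parses the human-readable text into a hash of machine name to state. The parse_vagrant_status helper is hypothetical, and it assumes the layout shown above (name, state, then the provider in parentheses); Vagrant also has a --machine-readable flag, which is probably the more robust choice for real automation.

```ruby
# Hypothetical helper: parse the human-readable output of `vagrant status`
# into a hash of { machine name => state }.
def parse_vagrant_status(text)
  text.each_line.with_object({}) do |line, states|
    # Matches lines such as "kube-node1   running (virtualbox)".
    match = line.strip.match(/\A(\S+)\s+(.+?)\s+\((\w+)\)\z/)
    states[match[1]] = match[2] if match
  end
end

sample = <<~OUT
  Current machine states:

  kube-node1                running (virtualbox)
  kube-server               running (virtualbox)

  This environment represents multiple VMs. The VMs are all listed
  above with their current state.
OUT

states = parse_vagrant_status(sample)
puts states.all? { |_, state| state == "running" } ? "all running" : "some machines are down"
```

Lines that do not end with a provider in parentheses, such as the header and the trailing explanation, are simply ignored.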

Log in to the kube-server VM:

D:\soft\k8s-vagrant-demo>vagrant ssh kube-server

[vagrant@kube-server ~]$

[vagrant@kube-server ~]$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:39:53:60 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic enp0s3

valid_lft 86115sec preferred_lft 86115sec

inet6 fe80::56a6:ce35:5951:f6d8/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:8c:ef:53 brd ff:ff:ff:ff:ff:ff

inet 172.16.10.100/24 brd 172.16.10.255 scope global noprefixroute enp0s8

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe8c:ef53/64 scope link

valid_lft forever preferred_lft forever

[vagrant@kube-server ~]$

[vagrant@kube-server ~]$ ip r

default via 10.0.2.2 dev enp0s3 proto dhcp metric 100

10.0.2.0/24 dev enp0s3 proto kernel scope link src 10.0.2.15 metric 100

172.16.10.0/24 dev enp0s8 proto kernel scope link src 172.16.10.100 metric 101
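The routing table above is what makes the two-interface setup work: traffic to other nodes leaves through the private_network interface, and everything else follows the default route through the NAT interface. The sketch below illustrates that lookup with Ruby's standard ipaddr library. The routes are copied from the ip r output; the device_for helper is hypothetical, it models only longest-prefix matching (not metrics or gateways), and it assumes Ruby 2.5 or later for IPAddr#prefix.

```ruby
require "ipaddr"

# Routes taken from the `ip r` output of kube-server: [destination, device].
ROUTES = [
  ["0.0.0.0/0",      "enp0s3"], # default route via the NAT interface
  ["10.0.2.0/24",    "enp0s3"],
  ["172.16.10.0/24", "enp0s8"], # private_network
].map { |cidr, dev| [IPAddr.new(cidr), dev] }

# Return the device of the most specific (longest-prefix) matching route.
def device_for(destination, routes = ROUTES)
  ip = IPAddr.new(destination)
  routes.select { |net, _| net.include?(ip) }
        .max_by { |net, _| net.prefix }
        .last
end

puts device_for("172.16.10.101") # another kube-node on the private network
puts device_for("8.8.8.8")       # a public address
```

An address of another kube-node resolves to enp0s8, while a public address falls through to the default route on enp0s3.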

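With that, the infrastructure for this 4-node k8s test environment is complete. On it we can conveniently deploy software and verify its functions. Run vagrant halt to shut the environment down, and vagrant destroy to destroy it. After either halt or destroy, running vagrant up brings the test environment back up again. The only difference is that after destroy, what gets created is a fresh environment back at its starting point.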