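There are two main ways to typeset algorithms in LaTeX:

1. Use the algorithm2e package, which offers many options for customizing the output.
2. Use the algorithm and algorithmic packages together. This combination seems to be quite popular; the algorithm listings in Prof. Zhou Zhihua's book *Machine Learning* (《机器学习》) were most likely typeset with these two packages.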
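A practical point when choosing between them: algorithm2e and the algorithm package both define an environment named `algorithm`, so loading both as-is leads to a name clash. algorithm2e's `algo2e` option renames its environment to `algorithm2e`, which should let the two approaches coexist in one document. The sketch below assumes a standard TeX distribution; the captions and the one-line algorithm bodies are placeholders for illustration only.

```latex
\documentclass{article}
% algorithm + algorithmic: the "algorithm" float and the \STATE-style commands
\usepackage{algorithm}
\usepackage{algorithmic}
% algo2e renames algorithm2e's environment to "algorithm2e"
% so it does not collide with the "algorithm" float defined above
\usepackage[algo2e,ruled]{algorithm2e}

\begin{document}

\begin{algorithm}
  \caption{Placeholder written with algorithm + algorithmic}
  \begin{algorithmic}[1]
    \STATE $x \leftarrow 0$
  \end{algorithmic}
\end{algorithm}

\begin{algorithm2e}
  \caption{Placeholder written with algorithm2e}
  $x \leftarrow 0$\;
\end{algorithm2e}

\end{document}
```

In practice most documents pick one package; the option mainly matters when a journal class already provides an `algorithm` environment.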

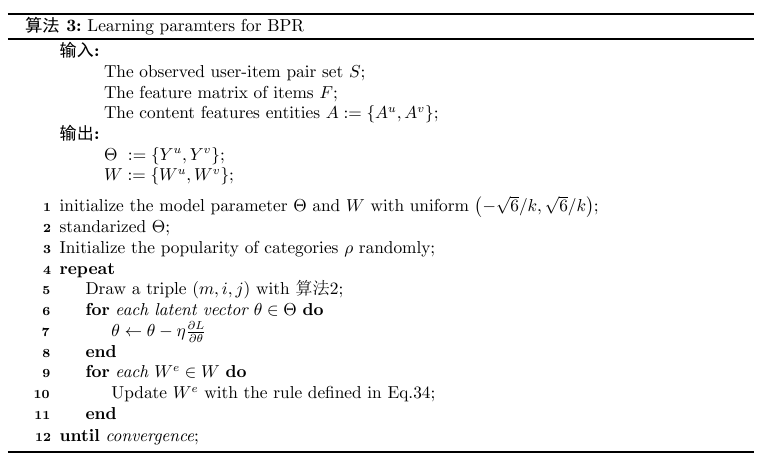

Using the algorithm2e package:

```latex
% In the preamble: load the package and set the options you need
\usepackage[linesnumbered,boxed,ruled,commentsnumbered]{algorithm2e}

% In the document body:
\IncMargin{1em} % keep the line numbers from sticking out into the margin
\begin{algorithm}
\SetAlgoNoLine % no vertical lines marking the blocks
% Replace the keywords: 输入 = "Input", 输出 = "Output" (the Chinese text needs a CJK setup such as ctex)
\SetKwInOut{Input}{\textbf{输入}}\SetKwInOut{Output}{\textbf{输出}}

\Input{
  \\
  The observed user-item pair set $S$\;\\
  The feature matrix of items $F$\;\\
  The content feature entities $A := \{A^u,A^v\}$\;\\}
\Output{
  \\
  $\Theta := \{Y^u,Y^v\}$\;\\
  $W := \{W^u,W^v\}$\;\\}
\BlankLine
Initialize the model parameters $\Theta$ and $W$ with uniform $\left(-\sqrt{6}/{k},\sqrt{6}/{k}\right)$\; % \; marks the end of a line
Standardize $\Theta$\;
Initialize the popularity of categories $\rho$ randomly\;
\Repeat{convergence}{
  Draw a triple $\left(m,i,j\right)$ with Algorithm~\ref{al2}\;
  \For{each latent vector $\theta \in \Theta$}{
    $\theta \leftarrow \theta - \eta\frac{\partial L}{\partial \theta}$\;
  }
  \For{each $W^e \in W$}{
    Update $W^e$ with the rule defined in Eq.~\ref{equ:W}\;
  }
}
\caption{Learning parameters for BPR\label{al3}}
\end{algorithm}
\DecMargin{1em}
```
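The snippet above is an excerpt: its `\ref`s point to an algorithm and an equation defined elsewhere in the original paper, and the Chinese keywords need a CJK-capable setup. For a quick test, here is a minimal, self-contained document assuming only a standard TeX distribution. Euclid's algorithm and the label `alg:euclid` are illustrative choices, not from the original, and it uses algorithm2e's built-in `\KwIn`/`\KwOut` keywords instead of custom ones.

```latex
\documentclass{article}
\usepackage[ruled,linesnumbered]{algorithm2e}

\begin{document}

\begin{algorithm}
  \caption{Euclid's algorithm (illustrative example)}\label{alg:euclid}
  \KwIn{Non-negative integers $a$ and $b$}
  \KwOut{$\gcd(a, b)$}
  \While{$b \neq 0$}{
    $r \leftarrow a \bmod b$\; % \; ends the line and prints a semicolon
    $a \leftarrow b$\;
    $b \leftarrow r$\;
  }
  \Return{$a$}\;
\end{algorithm}

Algorithm~\ref{alg:euclid} computes the greatest common divisor.

\end{document}
```

Block bodies are passed as brace-delimited arguments (`\While{condition}{body}`), the same pattern as `\For` and `\Repeat` in the listing above.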

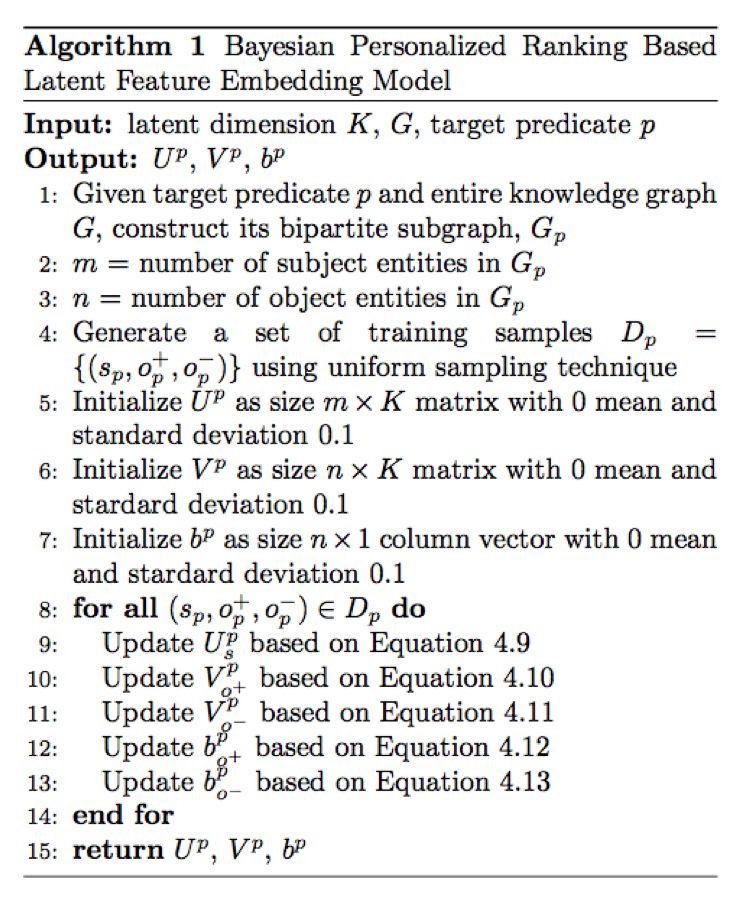

Using the algorithm and algorithmic packages:

```latex
% In the preamble:
\usepackage{algorithm, algorithmic}

% In the document body:
\begin{algorithm}
\renewcommand{\algorithmicrequire}{\textbf{Input:}}
\renewcommand{\algorithmicensure}{\textbf{Output:}}
\caption{Bayesian Personalized Ranking Based Latent Feature Embedding Model}
\label{alg:1}
\begin{algorithmic}[1]
\REQUIRE latent dimension $K$, knowledge graph $G$, target predicate $p$
\ENSURE $U^{p}$, $V^{p}$, $b^{p}$
\STATE Given target predicate $p$ and entire knowledge graph $G$, construct its bipartite subgraph, $G_{p}$
\STATE $m$ = number of subject entities in $G_{p}$
\STATE $n$ = number of object entities in $G_{p}$
\STATE Generate a set of training samples $D_{p} = \{(s_p, o^{+}_{p}, o^{-}_{p})\}$ using uniform sampling technique
\STATE Initialize $U^{p}$ as size $m \times K$ matrix with $0$ mean and standard deviation $0.1$
\STATE Initialize $V^{p}$ as size $n \times K$ matrix with $0$ mean and standard deviation $0.1$
\STATE Initialize $b^{p}$ as size $n \times 1$ column vector with $0$ mean and standard deviation $0.1$
\FORALL{$(s_p, o^{+}_{p}, o^{-}_{p}) \in D_{p}$}
  \STATE Update $U_{s}^{p}$ based on Equation~\ref{eq:sgd1}
  \STATE Update $V_{o^{+}}^{p}$ based on Equation~\ref{eq:sgd2}
  \STATE Update $V_{o^{-}}^{p}$ based on Equation~\ref{eq:sgd3}
  \STATE Update $b_{o^{+}}^{p}$ based on Equation~\ref{eq:sgd4}
  \STATE Update $b_{o^{-}}^{p}$ based on Equation~\ref{eq:sgd5}
\ENDFOR
\STATE \textbf{return} $U^{p}$, $V^{p}$, $b^{p}$
\end{algorithmic}
\end{algorithm}
```

This article is reposted from: https://blog.csdn.net/simple_the_best/article/details/52710794
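The algorithmic listing above refers to equations (`eq:sgd1` to `eq:sgd5`) defined elsewhere in its source paper. A minimal, self-contained document using the same two packages follows, assuming a standard TeX distribution; Euclid's algorithm is again only an illustrative placeholder, not part of the original.

```latex
\documentclass{article}
\usepackage{algorithm}   % provides the floating "algorithm" environment and \caption
\usepackage{algorithmic} % provides \STATE, \WHILE, \FORALL, ...

\begin{document}

\begin{algorithm}
  \renewcommand{\algorithmicrequire}{\textbf{Input:}}
  \renewcommand{\algorithmicensure}{\textbf{Output:}}
  \caption{Euclid's algorithm (illustrative example)}
  \label{alg:euclid-algorithmic}
  \begin{algorithmic}[1] % [1] numbers every line
    \REQUIRE non-negative integers $a$ and $b$
    \ENSURE $\gcd(a, b)$
    \WHILE{$b \neq 0$}
      \STATE $r \leftarrow a \bmod b$
      \STATE $a \leftarrow b$
      \STATE $b \leftarrow r$
    \ENDWHILE
    \STATE \textbf{return} $a$
  \end{algorithmic}
\end{algorithm}

\end{document}
```

Unlike algorithm2e, algorithmic closes each block with an explicit command: `\WHILE`/`\ENDWHILE` here follows the same pattern as the `\FORALL`/`\ENDFOR` pair in the listing above.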