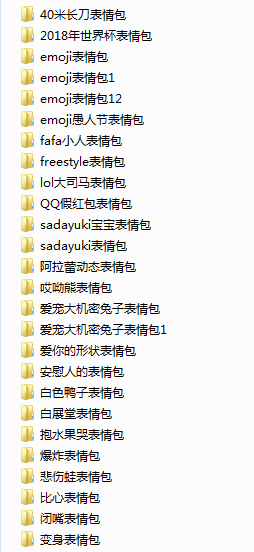

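Continuing my web-scraping practice, this time I picked a small website: http://www.bbsnet.com/. The site is very bare-bones, and its page requests are easy to analyze because everything is visible directly in the page source. So I simply crawled every image on the whole site (the images there were probably scraped from other sites in the first place). Because there is a fair amount of data, I used multiple processes for the crawl. I create one directory per link, and there is a simple progress display so the run is easy to follow. On my machine the crawl produced a little over 200 folders holding more than 2,700 images in total (the site is updated constantly, so your results may differ). Some of the folders are empty, probably because those pages do not follow the usual markup and nothing matched. I did not dig into it since this is only an exercise. A screenshot of the results and the source code are included in this post.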
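
The multi-process split and the progress display described above can be sketched in isolation, without touching the network. This is only a minimal illustration: the helper names `split_round_robin` and `percent_done` are made up for this example, and the `/page/N` URL pattern is a hypothetical stand-in, since the real site's pagination URLs may look different.

```python
def split_round_robin(items, workers):
    """Distribute items across `workers` buckets in round-robin order."""
    buckets = [[] for _ in range(workers)]
    for index, item in enumerate(items):
        buckets[index % workers].append(item)
    return buckets


def percent_done(finished, total):
    """Integer completion percentage for the progress display."""
    return finished * 100 // total


# Hypothetical listing-page URLs; the real URL pattern is an assumption.
pages = ["http://www.bbsnet.com/page/%d" % n for n in range(1, 11)]
buckets = split_round_robin(pages, 4)

print([len(b) for b in buckets])    # [3, 3, 2, 2]
print(percent_done(5, len(pages)))  # 50
```

Each bucket would be handed to one worker process, and every finished page bumps the counter that feeds `percent_done`.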

Code:

    # -*- coding: UTF-8 -*-

    from multiprocessing import Process, Queue
    from bs4 import BeautifulSoup
    import urllib.request
    import os

    # Storage path
    PATH = 'D:\\斗图\\'

    # URL of the site to crawl
    CRAWL_TARGET_URL = 'http://www.bbsnet.com/'

    # Spoofed browser headers; may not be necessary
    HEADERS = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
                             '(KHTML, like Gecko) Chrome/67.0.3396.99 Safari/537.36'}

    # Same headers plus a Referer, used for image downloads; may not be necessary
    HEADERS1 = dict(HEADERS, Referer='http://www.bbsnet.com/')

    # Number of worker processes
    NUMBER_OF_PROCESSES = 4


    def download_image(url, filename):
        try:
            res = urllib.request.urlopen(urllib.request.Request(url=url, headers=HEADERS1), timeout=10)
            with open(filename, "wb") as f:
                f.write(res.read())
        except Exception as e:
            print(e)


    def crawl_images(url, path, title):
        try:
            res = urllib.request.urlopen(urllib.request.Request(url=url, headers=HEADERS), timeout=10)
        except Exception as e:
            print(e)
            return

        bf = BeautifulSoup(res.read(), 'html.parser')
        links = bf.find_all('img', title=title)
        for i, link in enumerate(links):
            src = link.get('src')
            if not src:
                continue
            download_image(src, os.path.join(path, str(i) + src[src.rfind('.'):]))


    def crawl_urls(sUrls, q):
        for url in sUrls:
            try:
                req = urllib.request.urlopen(urllib.request.Request(url=url, headers=HEADERS), timeout=10)
                bf = BeautifulSoup(req.read(), 'html.parser')
                links = bf.find_all('a', class_='zoom')
                for link in links:
                    # Create one folder per page title; skip links without a title or href
                    words = (link.get('title') or '').split()
                    if not words or not link.get('href'):
                        continue
                    title = words[0]
                    base = PATH + title
                    path = base
                    sequence = 1
                    # Append a numeric suffix until an unused folder name is found
                    while True:
                        try:
                            os.makedirs(path)
                            break
                        except FileExistsError:
                            path = base + str(sequence)
                            sequence = sequence + 1
                    crawl_images(link.get('href'), path, title)
            except Exception as e:
                print(e)
            finally:
                # Report to the main process once per listing page, even on failure,
                # so that the progress loop can reach 100%
                q.put(1)


    def start_crawl():
        req = urllib.request.urlopen(urllib.request.Request(url=CRAWL_TARGET_URL, headers=HEADERS))

        # Derive the pagination URLs from the "last page" link
        bs = BeautifulSoup(req.read(), 'html.parser')
        lastPageUrl = bs.find('a', class_='extend')['href']
        pos = lastPageUrl.rfind('/') + 1
        oriPageUrl = lastPageUrl[:pos]
        maxPageNum = int(lastPageUrl[pos:])

        # Distribute the page URLs across the processes in round-robin order
        mUrls = {}
        for i in range(NUMBER_OF_PROCESSES):
            mUrls[i] = []
        for n in range(maxPageNum):
            mUrls[n % NUMBER_OF_PROCESSES].append(oriPageUrl + str(n + 1))

        # Start the processes; a queue is used to track the completion rate
        q = Queue()
        processes = []
        for i in range(NUMBER_OF_PROCESSES):
            p = Process(target=crawl_urls, args=(mUrls[i], q))
            p.start()
            processes.append(p)

        # Progress display: exactly one queue message arrives per listing page
        for schedule in range(1, maxPageNum + 1):
            q.get()
            print("Completed:", int(schedule * 100 / maxPageNum), '%')

        for p in processes:
            p.join()


    if __name__ == '__main__':
        start_crawl()
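
The listing derives each file's extension by slicing the image URL from its last dot. That works for plain URLs, but it would probably misbehave if a `src` carried a query string or had no extension at all. Here is a rough sketch of a more defensive variant. The helper name `image_extension`, the `.jpg` fallback, and the `example.com` URLs are assumptions made for this example, and I have not checked whether this has anything to do with the empty folders mentioned above.

```python
import os
from urllib.parse import urlsplit


def image_extension(src, default=".jpg"):
    """Return the file extension of an image URL, ignoring any query string."""
    path = urlsplit(src).path          # drops "?..." and "#..." parts
    ext = os.path.splitext(path)[1]    # "" when the path has no extension
    return ext if ext else default     # fall back to an assumed default


# Hypothetical image URLs, for illustration only.
print(image_extension("http://example.com/a/b/cat.gif"))           # .gif
print(image_extension("http://example.com/a/b/cat.png?size=big"))  # .png
print(image_extension("http://example.com/a/b/cat"))               # .jpg
```

In the crawler this could replace the `src[src.rfind('.'):]` expression when building the output filename.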