#!/usr/bin/env python
# -*- coding: utf-8 -*-
# author: love_cat

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf

# Input data: 180 evenly spaced points on [-7, 7]
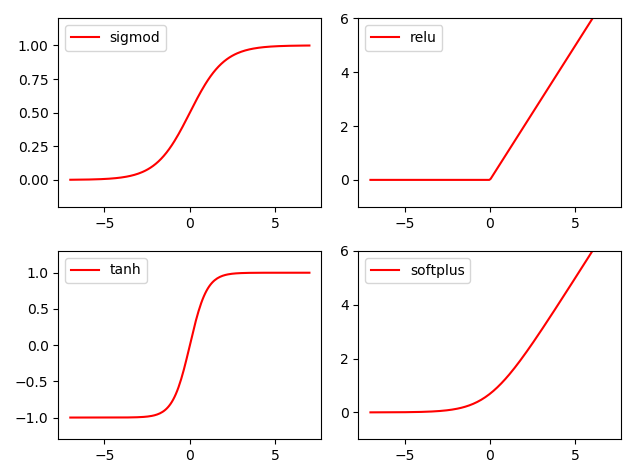

x = np.linspace(-7, 7, 180)

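# Hand-written NumPy versions of the four activation functions
# (not used by the TensorFlow plots below; kept for comparison)
def sigmoid(inputs):
    # sigmoid(t) = 1 / (1 + e^-t), computed element by element
    y = [1 / float(1 + np.exp(-t)) for t in inputs]
    return y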
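
# A possible vectorized, overflow-safe variant (a sketch assuming only NumPy;
# `sigmoid_stable` is a hypothetical helper name). The list comprehension
# above evaluates np.exp(-t); for t below about -710 that overflows to inf
# (NumPy emits a RuntimeWarning), even though the final result still rounds
# to 0. Writing the formula in terms of exp(-|t|) keeps every exponent
# argument <= 0, so exp can only underflow toward 0, never overflow.
import numpy as np


def sigmoid_stable(inputs):
    t = np.asarray(inputs, dtype=float)
    e = np.exp(-np.abs(t))  # always in (0, 1]
    # t >= 0: 1 / (1 + e^-t);  t < 0: e^t / (1 + e^t)  (same value, rearranged)
    return np.where(t >= 0, 1.0 / (1.0 + e), e / (1.0 + e))

# Usage: sigmoid_stable(x) returns an ndarray matching sigmoid(x) up to rounding.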

def relu(inputs):
    # relu(t) = max(t, 0); the boolean (t > 0) acts as 0 or 1 in the product
    y = [t * (t > 0) for t in inputs]
    return y
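
# The same relu without a Python-level loop (a sketch; `relu_vec` is a
# hypothetical helper name). np.maximum compares each element with 0 and
# broadcasts the scalar 0.0 across the whole array.
import numpy as np


def relu_vec(inputs):
    return np.maximum(np.asarray(inputs, dtype=float), 0.0)

# Usage: relu_vec([-2.0, 0.0, 3.5]).tolist() -> [0.0, 0.0, 3.5]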

def tanh(inputs):
    # tanh(t) = (e^t - e^-t) / (e^t + e^-t)
    y = [(np.exp(t) - np.exp(-t)) / (np.exp(t) + np.exp(-t)) for t in inputs]
    return y
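
# Two notes on tanh (a sketch assuming only NumPy):
# 1) The explicit formula above evaluates e^t and e^-t separately; for |t|
#    above about 710 one of them overflows to inf and the ratio becomes
#    inf/inf = nan. np.tanh does not have this problem.
# 2) tanh is a shifted and rescaled sigmoid, tanh(t) = 2*sigmoid(2t) - 1,
#    which is why the two plots share the same S shape but have ranges
#    (-1, 1) and (0, 1). `tanh_via_sigmoid` is a hypothetical helper that
#    evaluates the right-hand side so the identity can be checked numerically.
import numpy as np


def tanh_via_sigmoid(inputs):
    t = np.asarray(inputs, dtype=float)
    # 2 / (1 + e^-2t) - 1 = (1 - e^-2t) / (1 + e^-2t) = tanh(t)
    return 2.0 / (1.0 + np.exp(-2.0 * t)) - 1.0

# Usage: np.allclose(tanh_via_sigmoid(x), np.tanh(x)) should hold on the grid above.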

def softplus(inputs):
    # softplus(t) = ln(1 + e^t), a smooth approximation of relu
    y = [np.log(1 + np.exp(t)) for t in inputs]
    return y
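
# An overflow-safe softplus (a sketch; `softplus_stable` is a hypothetical
# helper name). np.log(1 + np.exp(t)) overflows to inf once t exceeds about
# 710, although the true value is close to t. np.logaddexp(a, b) computes
# log(e^a + e^b) without forming the large exponential, so
# logaddexp(0, t) = log(1 + e^t) stays finite.
import numpy as np


def softplus_stable(inputs):
    t = np.asarray(inputs, dtype=float)
    return np.logaddexp(0.0, t)

# Usage: softplus_stable(1000.0) returns 1000.0, whereas softplus([1000.0]) gives [inf].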

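# Build a TensorFlow op for each activation function applied to x
# (graph mode: no values are computed until sess.run is called)
y_sigmoid = tf.nn.sigmoid(x)
y_relu = tf.nn.relu(x)
y_tanh = tf.nn.tanh(x)
y_softplus = tf.nn.softplus(x)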

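# Create a session. This is the TensorFlow 1.x graph-mode API; under
# TensorFlow 2.x, use tf.compat.v1.Session() and call
# tf.compat.v1.disable_eager_execution() at the top of the script.
sess = tf.Session()

# Run the graph; each tensor is replaced by its evaluated NumPy array
y_sigmoid, y_relu, y_tanh, y_softplus = sess.run([y_sigmoid, y_relu, y_tanh, y_softplus])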

# Plot each activation function in a 2x2 grid of subplots
plt.subplot(221)
plt.plot(x, y_sigmoid, color='red', label='sigmoid')
plt.ylim(-0.2, 1.2)
plt.legend(loc='best')

plt.subplot(222)
plt.plot(x, y_relu, color='red', label='relu')
plt.ylim(-1, 6)
plt.legend(loc='best')

plt.subplot(223)
plt.plot(x, y_tanh, color='red', label='tanh')
plt.ylim(-1.3, 1.3)
plt.legend(loc='best')

plt.subplot(224)
plt.plot(x, y_softplus, color='red', label='softplus')
plt.ylim(-1, 6)
plt.legend(loc='best')

# Display the figure
plt.show()

# Close the session to release its resources
sess.close()