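Audio stream handling comes up often in development. The sound captured by the microphone of an Apple device is raw and noisy. With the native framework <AVFoundation/AVFoundation.h> we can capture that audio as PCM-encoded data, that is, an uncompressed raw stream. In theory you could send this raw stream over the network (push streaming) as it is, but it is so large that transmission is slow and the server load becomes hard to sustain. We therefore need to compress it, which is where AAC (Advanced Audio Coding) comes in.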

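1. PCM encoding

The encoding we usually work with is pulse-code modulation, or PCM. PCM converts a continuously varying analog signal into a digital code in three steps: sampling, quantization, and encoding.

Sampling: measure the analog signal at regular intervals, turning a signal that is continuous in time into one that is discrete in time;

Quantization: approximate each instantaneous sample value by the nearest of a fixed set of levels, usually expressed in binary;

Encoding: represent each quantized level by a binary code word.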
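The three steps can be sketched in plain C, which also compiles inside an Objective-C file. This is only an illustration under stated assumptions: the names `quantize16` and `pcm_encode` are hypothetical, a densely tabulated array stands in for the continuous analog signal, and the output is signed 16-bit PCM.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Quantization + encoding: map a value in [-1.0, 1.0] to the nearest integer
 * level in [-32767, 32767], stored as a 16-bit two's-complement code. */
static int16_t quantize16(double x) {
    if (x > 1.0) x = 1.0;      /* clip out-of-range input */
    if (x < -1.0) x = -1.0;
    double scaled = x * 32767.0;
    /* round half away from zero, then truncate */
    return (int16_t)(scaled >= 0.0 ? scaled + 0.5 : scaled - 0.5);
}

/* Sampling: keep every `step`-th value of the tabulated signal (our stand-in
 * for the continuous waveform) and quantize each kept value.
 * Returns the number of PCM samples written to `out`. */
static size_t pcm_encode(const double *signal, size_t signal_len, size_t step,
                         int16_t *out, size_t out_capacity) {
    assert(signal != NULL && out != NULL && step > 0);
    size_t written = 0;
    for (size_t i = 0; i < signal_len && written < out_capacity; i += step) {
        out[written++] = quantize16(signal[i]);
    }
    return written;
}
```

Calling `pcm_encode` with `step = 2` on an 8-point table keeps 4 samples, which mirrors how a lower sample rate keeps fewer points of the same waveform.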

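Data rate of the sampled audio = sample rate × sample size × number of channels (in bps).

For a PCM-encoded WAV file with a 44.1 kHz sample rate, 16-bit samples, and two channels, the data rate = 44.1 K × 16 × 2 bps = 1411.2 Kbps = 176.4 KB/s.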
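The same arithmetic as a small C sketch, in case you want to try other formats. The function names `pcm_bits_per_second` and `pcm_bytes_for` are made up for this example.

```c
#include <assert.h>
#include <stdint.h>

/* Bits per second of uncompressed PCM: sample rate x sample size x channels. */
static uint64_t pcm_bits_per_second(uint32_t sample_rate_hz,
                                    uint32_t bits_per_sample,
                                    uint32_t channels) {
    return (uint64_t)sample_rate_hz * bits_per_sample * channels;
}

/* Bytes needed to store `seconds` of PCM at that rate.
 * Assumes the sample size is a whole number of bytes. */
static uint64_t pcm_bytes_for(uint32_t sample_rate_hz, uint32_t bits_per_sample,
                              uint32_t channels, uint32_t seconds) {
    assert(bits_per_sample % 8 == 0);
    return pcm_bits_per_second(sample_rate_hz, bits_per_sample, channels) / 8 * seconds;
}
```

With 44100 Hz, 16 bits, and 2 channels this gives 1,411,200 bits per second, so one minute of audio takes roughly 10.6 MB before any compression.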

That is in the same range as the data rate of a compressed video stream!

This is what leads us to AAC, Advanced Audio Coding.

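2. AAC (Advanced Audio Coding)

AAC (Advanced Audio Coding) appeared in 1997 as an audio coding technology based on MPEG-2. It was developed jointly by Fraunhofer IIS, Dolby Laboratories, AT&T, Sony, and other companies, with the goal of replacing the MP3 format. See the Wikipedia article on AAC for more detail.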

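3. AAC audio formats

AAC audio comes in two formats, ADIF and ADTS:

ADIF: Audio Data Interchange Format. Its defining property is that the start of the audio data can be located unambiguously. Decoding cannot begin in the middle of the stream and must start at that well-defined beginning, so this format is typically used for files on disk.

ADTS: Audio Data Transport Stream. Its defining property is that it is a bitstream with sync words, so decoding can begin at any frame in the stream, which makes it better suited to network transmission. In this respect it resembles the MP3 stream format.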

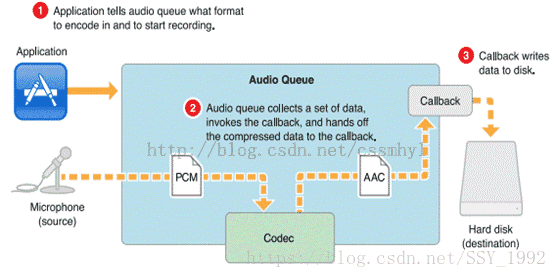
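To make "decoding can begin anywhere" concrete, here is a rough C sketch of how a reader might locate the 12-bit sync word (0xFFF) and unpack the fixed fields of a 7-byte ADTS header. `AdtsInfo`, `adts_find_sync`, and `adts_parse` are hypothetical names for this example, and the bit layout follows the commonly documented ADTS header (no CRC).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    int mpeg_id;           /* 0 = MPEG-4, 1 = MPEG-2 */
    int protection_absent; /* 1 = no CRC, header is 7 bytes */
    int object_type;       /* 2-bit profile field + 1, e.g. 2 = AAC LC */
    int freq_index;        /* sampling frequency index, e.g. 4 = 44.1 kHz */
    int channel_config;    /* e.g. 1 = mono, 2 = stereo */
    size_t frame_length;   /* header + payload, in bytes */
} AdtsInfo;

/* Offset of the first sync word at or after `from`, or -1 if none is found. */
static long adts_find_sync(const uint8_t *buf, size_t len, size_t from) {
    for (size_t i = from; i + 1 < len; i++) {
        if (buf[i] == 0xFF && (buf[i + 1] & 0xF0) == 0xF0) return (long)i;
    }
    return -1;
}

/* Decode a 7-byte ADTS header. Returns 0 on success, -1 if the sync word
 * is missing or the layer field is not 0. */
static int adts_parse(const uint8_t h[7], AdtsInfo *info) {
    assert(h != NULL && info != NULL);
    if (h[0] != 0xFF || (h[1] & 0xF0) != 0xF0) return -1;
    if (((h[1] >> 1) & 0x3) != 0) return -1;
    info->mpeg_id = (h[1] >> 3) & 0x1;
    info->protection_absent = h[1] & 0x1;
    info->object_type = ((h[2] >> 6) & 0x3) + 1;
    info->freq_index = (h[2] >> 2) & 0xF;
    info->channel_config = ((h[2] & 0x1) << 2) | ((h[3] >> 6) & 0x3);
    info->frame_length = ((size_t)(h[3] & 0x3) << 11) | ((size_t)h[4] << 3) | ((h[5] >> 5) & 0x7);
    return 0;
}
```

The byte pattern 0xFFF can also occur by chance inside the compressed payload, so a more careful reader would confirm a candidate by checking that `frame_length` bytes later another sync word appears.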

4. Encoding PCM audio into an AAC stream on iOS

1) Set up the encoder (codec) and start recording;

2) Collect PCM data and pass it to the encoder;

3) In the encoding-completion callback, write the result to a file.

Implementation steps:

ViewController.m

1) Create the file handle

#pragma mark Create the file handle
- (void)createFileToDocument {
    NSString *audioFile = [[NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) lastObject] stringByAppendingPathComponent:@"abc.aac"];
    // Remove the file if it already exists
    [[NSFileManager defaultManager] removeItemAtPath:audioFile error:nil];
    // Then create it again, empty
    [[NSFileManager defaultManager] createFileAtPath:audioFile contents:nil attributes:nil];
    self.audioFileHandle = [NSFileHandle fileHandleForWritingAtPath:audioFile];
}

2) Create and configure the AVCaptureSession

#pragma mark - Set up audio capture
- (void)setupAudioCapture {
    self.aacEncoder = [[AACEncoder alloc] init];
    self.session = [[AVCaptureSession alloc] init];

    // Use the default microphone as the capture input
    AVCaptureDevice *audioDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];
    NSError *error = nil;
    AVCaptureDeviceInput *audioInput = [[AVCaptureDeviceInput alloc] initWithDevice:audioDevice error:&error];
    if (!audioInput) {
        NSLog(@"Error getting audio input device: %@", error.description);
        return;
    }
    if ([self.session canAddInput:audioInput]) {
        [self.session addInput:audioInput];
    }

    // Deliver sample buffers to the delegate on a dedicated serial queue
    self.AudioQueue = dispatch_queue_create("Audio Capture Queue", DISPATCH_QUEUE_SERIAL);
    AVCaptureAudioDataOutput *audioOutput = [AVCaptureAudioDataOutput new];
    [audioOutput setSampleBufferDelegate:self queue:self.AudioQueue];
    if ([self.session canAddOutput:audioOutput]) {
        [self.session addOutput:audioOutput];
    }
    self.audioConnection = [audioOutput connectionWithMediaType:AVMediaTypeAudio];
}

3) Handle the delegate callback when audio arrives

#pragma mark - AVCaptureAudioDataOutputSampleBufferDelegate
- (void)captureOutput:(AVCaptureOutput *)captureOutput didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection {
    if (connection == _audioConnection) {
        // Audio
        [self.aacEncoder encodeSampleBuffer:sampleBuffer completionBlock:^(NSData *encodedData, NSError *error) {
            if (encodedData) {
                //NSLog(@"Audio data (%lu):%@", (unsigned long)encodedData.length,encodedData.description);
                [self.audioFileHandle writeData:encodedData];
            } else {
                NSLog(@"Error encoding AAC: %@", error);
            }
        }];
    } else {
        // Video
    }
}

AACEncoder.m

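4) Create the converter

AudioStreamBasicDescription is the struct that describes an audio stream format. After filling in outAudioStreamBasicDescription for the output stream, call AudioConverterNewSpecific with the AudioClassDescription that identifies the encoder to create the converter.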

- (void)setupEncoderFromSampleBuffer:(CMSampleBufferRef)sampleBuffer {
    // Input format: whatever PCM format the capture session delivers
    AudioStreamBasicDescription inAudioStreamBasicDescription = *CMAudioFormatDescriptionGetStreamBasicDescription((CMAudioFormatDescriptionRef)CMSampleBufferGetFormatDescription(sampleBuffer));

    AudioStreamBasicDescription outAudioStreamBasicDescription = {0}; // Zero-initialize the output description. This matters.
    outAudioStreamBasicDescription.mSampleRate = inAudioStreamBasicDescription.mSampleRate; // Frames per second during normal playback. For a compressed format this is the rate after decompression. Must not be 0.
    outAudioStreamBasicDescription.mFormatID = kAudioFormatMPEG4AAC; // Output encoding format
    outAudioStreamBasicDescription.mFormatFlags = kMPEG4Object_AAC_LC; // AAC Low Complexity object type (this is not "lossless"); 0 would mean no format flags
    outAudioStreamBasicDescription.mBytesPerPacket = 0; // Bytes per packet. 0 for variable-size packets, whose sizes are given by AudioStreamPacketDescription.
    outAudioStreamBasicDescription.mFramesPerPacket = 1024; // Frames per packet. 1 for uncompressed audio; a larger fixed number for compressed formats, such as 1024 for AAC; 0 if the number of frames per packet varies (Ogg, for example).
    outAudioStreamBasicDescription.mBytesPerFrame = 0; // Bytes from the start of one frame to the start of the next. 0 for compressed formats.
    outAudioStreamBasicDescription.mChannelsPerFrame = 1; // Number of channels
    outAudioStreamBasicDescription.mBitsPerChannel = 0; // 0 for compressed formats
    outAudioStreamBasicDescription.mReserved = 0; // Pads the struct to 8-byte alignment; must be 0.

    AudioClassDescription *description = [self
                                          getAudioClassDescriptionWithType:kAudioFormatMPEG4AAC
                                          fromManufacturer:kAppleSoftwareAudioCodecManufacturer]; // Software encoder
    if (!description) {
        NSLog(@"no matching AAC encoder found");
        return;
    }
    OSStatus status = AudioConverterNewSpecific(&inAudioStreamBasicDescription, &outAudioStreamBasicDescription, 1, description, &_audioConverter); // Create the converter
    if (status != noErr) {
        NSLog(@"setup converter: %d", (int)status);
    }
}

Method that looks up the encoder

- (AudioClassDescription *)getAudioClassDescriptionWithType:(UInt32)type
                                           fromManufacturer:(UInt32)manufacturer
{
    static AudioClassDescription desc;

    UInt32 encoderSpecifier = type;
    OSStatus st;
    UInt32 size;

    // First ask how many bytes the list of available encoders occupies
    st = AudioFormatGetPropertyInfo(kAudioFormatProperty_Encoders,
                                    sizeof(encoderSpecifier),
                                    &encoderSpecifier,
                                    &size);
    if (st) {
        NSLog(@"error getting audio format property info: %d", (int)(st));
        return NULL;
    }

    // Then fetch the list itself
    unsigned int count = size / sizeof(AudioClassDescription);
    AudioClassDescription descriptions[count];
    st = AudioFormatGetProperty(kAudioFormatProperty_Encoders,
                                sizeof(encoderSpecifier),
                                &encoderSpecifier,
                                &size,
                                descriptions);
    if (st) {
        NSLog(@"error getting audio format property: %d", (int)(st));
        return NULL;
    }

    // Pick the entry that matches both the format and the manufacturer
    for (unsigned int i = 0; i < count; i++) {
        if ((type == descriptions[i].mSubType) &&
            (manufacturer == descriptions[i].mManufacturer)) {
            memcpy(&desc, &(descriptions[i]), sizeof(desc));
            return &desc;
        }
    }
    return NULL;
}

5) Get the PCM data and pass it to the encoder

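Use CMSampleBufferGetDataBuffer to get the CMBlockBufferRef inside the CMSampleBufferRef, then use CMBlockBufferGetDataPointer to obtain _pcmBufferSize and _pcmBuffer. Call AudioConverterFillComplexBuffer to feed in the data; its callback function calls the method that hands the PCM buffer to the converter.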

- (void)encodeSampleBuffer:(CMSampleBufferRef)sampleBuffer completionBlock:(void (^)(NSData *encodedData, NSError *error))completionBlock {
    CFRetain(sampleBuffer); // Keep the buffer alive until the async block is done
    dispatch_async(_encoderQueue, ^{
        if (!_audioConverter) {
            [self setupEncoderFromSampleBuffer:sampleBuffer];
        }
        CMBlockBufferRef blockBuffer = CMSampleBufferGetDataBuffer(sampleBuffer);
        CFRetain(blockBuffer);
        // Point _pcmBuffer/_pcmBufferSize at the PCM bytes of this sample buffer
        OSStatus status = CMBlockBufferGetDataPointer(blockBuffer, 0, NULL, &_pcmBufferSize, &_pcmBuffer);
        NSError *error = nil;
        if (status != kCMBlockBufferNoErr) {
            error = [NSError errorWithDomain:NSOSStatusErrorDomain code:status userInfo:nil];
        }
        //NSLog(@"PCM Buffer Size: %zu", _pcmBufferSize);

        // Prepare the output buffer list that will receive one AAC packet
        memset(_aacBuffer, 0, _aacBufferSize);
        AudioBufferList outAudioBufferList = {0};
        outAudioBufferList.mNumberBuffers = 1;
        outAudioBufferList.mBuffers[0].mNumberChannels = 1;
        outAudioBufferList.mBuffers[0].mDataByteSize = (UInt32)_aacBufferSize;
        outAudioBufferList.mBuffers[0].mData = _aacBuffer;
        AudioStreamPacketDescription *outPacketDescription = NULL;
        UInt32 ioOutputDataPacketSize = 1;
        status = AudioConverterFillComplexBuffer(_audioConverter, inInputDataProc, (__bridge void *)(self), &ioOutputDataPacketSize, &outAudioBufferList, outPacketDescription);
        //NSLog(@"ioOutputDataPacketSize: %d", (unsigned int)ioOutputDataPacketSize);

        NSData *data = nil;
        if (status == noErr) {
            // Prepend an ADTS header to the raw AAC packet
            NSData *rawAAC = [NSData dataWithBytes:outAudioBufferList.mBuffers[0].mData length:outAudioBufferList.mBuffers[0].mDataByteSize];
            NSData *adtsHeader = [self adtsDataForPacketLength:rawAAC.length];
            NSMutableData *fullData = [NSMutableData dataWithData:adtsHeader];
            [fullData appendData:rawAAC];
            data = fullData;
        } else {
            error = [NSError errorWithDomain:NSOSStatusErrorDomain code:status userInfo:nil];
        }
        if (completionBlock) {
            dispatch_async(_callbackQueue, ^{
                completionBlock(data, error);
            });
        }
        CFRelease(sampleBuffer);
        CFRelease(blockBuffer);
    });
}

Callback function

static OSStatus inInputDataProc(AudioConverterRef inAudioConverter, UInt32 *ioNumberDataPackets, AudioBufferList *ioData, AudioStreamPacketDescription **outDataPacketDescription, void *inUserData)
{
    AACEncoder *encoder = (__bridge AACEncoder *)(inUserData);
    UInt32 requestedPackets = *ioNumberDataPackets;
    //NSLog(@"Number of packets requested: %d", (unsigned int)requestedPackets);
    size_t copiedSamples = [encoder copyPCMSamplesIntoBuffer:ioData];
    // Note: copiedSamples is a byte count while requestedPackets is a packet count,
    // so this comparison is only a rough guard against an empty or short buffer.
    if (copiedSamples < requestedPackets) {
        //NSLog(@"PCM buffer isn't full enough!");
        *ioNumberDataPackets = 0;
        return -1;
    }
    *ioNumberDataPackets = 1;
    //NSLog(@"Copied %zu samples into ioData", copiedSamples);
    return noErr;
}

/**
 * Hand the pending PCM data to the converter's input buffer list.
 * Returns the number of bytes provided, or 0 if there is nothing to provide.
 */
- (size_t)copyPCMSamplesIntoBuffer:(AudioBufferList *)ioData {
    size_t originalBufferSize = _pcmBufferSize;
    if (!originalBufferSize) {
        return 0;
    }
    ioData->mBuffers[0].mData = _pcmBuffer;
    ioData->mBuffers[0].mDataByteSize = (UInt32)_pcmBufferSize;
    // Mark the PCM data as consumed so it is not handed over twice
    _pcmBuffer = NULL;
    _pcmBufferSize = 0;
    return originalBufferSize;
}

6) Take the raw AAC stream, add the ADTS header, and write it to the file

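AudioConverterFillComplexBuffer returns a raw AAC stream, so every AAC frame needs an ADTS header in front of it, which the adtsDataForPacketLength method generates. The result is then written to the file behind audioFileHandle.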

/**
 * Add an ADTS header at the beginning of each and every AAC packet.
 * This is needed because the encoder produces packets of raw AAC data.
 *
 * Note that the length written into the header must include the ADTS header itself.
 * See: http://wiki.multimedia.cx/index.php?title=ADTS
 * Also: http://wiki.multimedia.cx/index.php?title=MPEG-4_Audio#Channel_Configurations
 **/
- (NSData *)adtsDataForPacketLength:(NSUInteger)packetLength {
    int adtsLength = 7;
    char *packet = malloc(sizeof(char) * adtsLength);
    // These values are hard-coded and must match the converter's actual output format
    int profile = 2; // AAC LC
    int freqIdx = 4; // 44.1 kHz
    int chanCfg = 1; // MPEG-4 Audio Channel Configuration. 1 channel: front-center
    NSUInteger fullLength = adtsLength + packetLength;
    // Fill in the ADTS data
    packet[0] = (char)0xFF; // 11111111 = syncword
    packet[1] = (char)0xF9; // 1111 1 00 1 = syncword, MPEG-2, layer, no CRC
    packet[2] = (char)(((profile-1)<<6) + (freqIdx<<2) + (chanCfg>>2));
    packet[3] = (char)(((chanCfg&3)<<6) + (fullLength>>11));
    packet[4] = (char)((fullLength&0x7FF) >> 3);
    packet[5] = (char)(((fullLength&7)<<5) + 0x1F);
    packet[6] = (char)0xFC;
    // NSData takes ownership of the malloc'd buffer and frees it
    NSData *data = [NSData dataWithBytesNoCopy:packet length:adtsLength freeWhenDone:YES];
    return data;
}
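The method above hard-codes 44.1 kHz and mono. As a sketch of how the same 7 bytes could be derived from the real sample rate and channel count, here is a plain C variant that can be dropped into an Objective-C file. The helper names `adts_freq_index` and `adts_write_header` are hypothetical. The table is the standard MPEG-4 sampling frequency index list, and byte 1 is kept as 0xF9 to match the code above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Map a sample rate to its MPEG-4 sampling frequency index, or -1 if unlisted. */
static int adts_freq_index(uint32_t sample_rate_hz) {
    static const uint32_t rates[] = {96000, 88200, 64000, 48000, 44100, 32000,
                                     24000, 22050, 16000, 12000, 11025, 8000, 7350};
    for (int i = 0; i < (int)(sizeof rates / sizeof rates[0]); i++) {
        if (rates[i] == sample_rate_hz) return i;
    }
    return -1;
}

/* Write a 7-byte ADTS header (no CRC) for one AAC packet of `payload_len` bytes.
 * object_type: 2 = AAC LC. Returns 0 on success, -1 on invalid arguments. */
static int adts_write_header(uint8_t out[7], int object_type, uint32_t sample_rate_hz,
                             int channel_config, size_t payload_len) {
    assert(out != NULL);
    int freq_idx = adts_freq_index(sample_rate_hz);
    size_t full_len = payload_len + 7;  /* the length field counts the header too */
    if (freq_idx < 0 || object_type < 1 || object_type > 4 ||
        channel_config < 0 || channel_config > 7 ||
        full_len > 0x1FFF) {            /* frame length is a 13-bit field */
        return -1;
    }
    out[0] = 0xFF;                      /* syncword, high 8 bits */
    out[1] = 0xF9;                      /* syncword low 4 bits, MPEG-2 ID, layer 0, no CRC */
    out[2] = (uint8_t)(((object_type - 1) << 6) | (freq_idx << 2) | (channel_config >> 2));
    out[3] = (uint8_t)(((channel_config & 3) << 6) | (full_len >> 11));
    out[4] = (uint8_t)((full_len >> 3) & 0xFF);
    out[5] = (uint8_t)(((full_len & 7) << 5) | 0x1F);
    out[6] = 0xFC;
    return 0;
}
```

For a 100-byte AAC LC packet at 44100 Hz mono this produces the bytes FF F9 50 40 0D 7F FC, the same header the Objective-C method would generate for that packet length.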

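At this point we have basically implemented recording from the microphone and encoding the audio into an AAC stream. The code is not hard to write, and most of it can be found online, but understanding it in depth takes careful study and a solid grasp of C. Thanks to loyinglin (落影) on Jianshu, who has a deep understanding of audio/video codecs and OpenGL and is well worth learning from!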