Viewing the Ceph CRUSH map

View the CRUSH map

Get the compiled CRUSH map from a monitor node:

[root@ceph ceph]# ceph osd getcrushmap -o crushmap_compiled_file

Decompile the CRUSH map into editable text:

[root@ceph ceph]# crushtool -d crushmap_compiled_file -o crushmap_decompiled_file

After editing the decompiled file, compile it again. Note the -c flag here; -d is only for decompiling.

[root@ceph ceph]# crushtool -c crushmap_decompiled_file -o newcrushmap

Inject the new CRUSH map into the cluster:

[root@ceph ceph]# ceph osd setcrushmap -i newcrushmap
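The four steps above can be collected into one small script. The following is a minimal sketch, not an official tool: the function names `crush_edit_commands` and `run_all` are hypothetical, the file names are the ones used above, and the extra `crushtool --test` dry run (with an assumed rule number 0 and replica count 3) is an addition that is not part of the original procedure.

```python
import subprocess
from pathlib import Path


def crush_edit_commands(workdir="."):
    """Build the argv lists for the CRUSH map round trip.

    Returns (fetch, apply): run `fetch`, edit the decompiled file by hand,
    then run `apply`. Nothing is executed here.
    """
    w = Path(workdir)
    compiled = str(w / "crushmap_compiled_file")
    decompiled = str(w / "crushmap_decompiled_file")
    new = str(w / "newcrushmap")

    fetch = [
        # Dump the binary CRUSH map from the cluster.
        ["ceph", "osd", "getcrushmap", "-o", compiled],
        # Decompile (-d) it into editable text.
        ["crushtool", "-d", compiled, "-o", decompiled],
    ]
    apply = [
        # Compile (-c) the edited text back into a binary map.
        ["crushtool", "-c", decompiled, "-o", new],
        # Optional dry run (an addition; rule 0 and 3 replicas are assumptions).
        ["crushtool", "-i", new, "--test", "--show-bad-mappings",
         "--rule", "0", "--num-rep", "3"],
        # Inject the new map into the cluster.
        ["ceph", "osd", "setcrushmap", "-i", new],
    ]
    return fetch, apply


def run_all(commands):
    """Run each command in order and stop at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)
```

A typical use would be `fetch, apply = crush_edit_commands()`, then `run_all(fetch)`, a manual edit of crushmap_decompiled_file, and finally `run_all(apply)`. Keeping the command lists separate from their execution makes it easy to print and review them before touching a live cluster.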

The decompiled file is plain text and looks like this:

[root@ceph ceph]# cat crushmap_decompiled_file

# begin crush map

tunable choose_local_tries 0

tunable choose_local_fallback_tries 0

tunable choose_total_tries 50

tunable chooseleaf_descend_once 1

tunable chooseleaf_vary_r 1

tunable straw_calc_version 1

# devices

device 0 osd.0

device 1 osd.1

device 2 osd.2

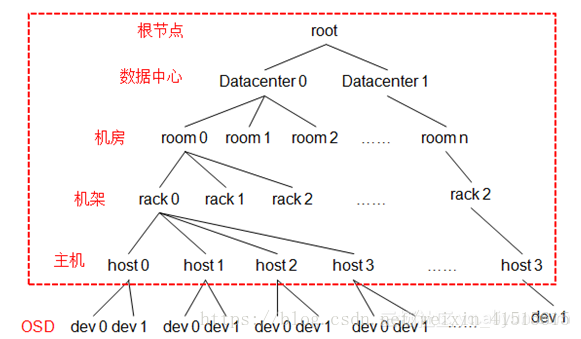

# types

type 0 osd

type 1 host

type 2 chassis

type 3 rack

type 4 row

type 5 pdu

type 6 pod

type 7 room

type 8 datacenter

type 9 region

type 10 root

# buckets

host ceph-node1 {

id -2 # do not change unnecessarily

# weight 0.000

alg straw

hash 0 # rjenkins1

}

host ceph {

id -3 # do not change unnecessarily

# weight 0.044

alg straw

hash 0 # rjenkins1

item osd.2 weight 0.015

item osd.1 weight 0.015

item osd.0 weight 0.015

}

root default {

id -1 # do not change unnecessarily

# weight 0.044

alg straw

hash 0 # rjenkins1

item ceph-node1 weight 0.000

item ceph weight 0.044

}

# rules

rule replicated_ruleset {

ruleset 0 # rule number

type replicated

# the rule is for replicated pools (the other type is erasure, for erasure-coded pools)

min_size 1

# the rule is not used for a pool whose replica count is less than 1

max_size 10

# the rule is not used for a pool whose replica count is greater than 10

step take default

# entry point: the search for a PG's replica locations starts at the bucket named default

step chooseleaf firstn 0 type host

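# choose leaf nodes (OSDs), descending depth-first, each under a different host, so replicas are isolated by host; firstn 0 means as many as the pool's replica count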

step emit

# emit the result and end the rule

}

# end crush map
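To make the effect of `step chooseleaf firstn 0 type host` more concrete, here is a toy Python model of it. It is only a sketch under loud assumptions: it is not the real CRUSH algorithm (it uses SHA-256 instead of rjenkins1, ignores the tunables and retry logic, and the names `HOSTS`, `_draw`, `_pick`, and `chooseleaf_firstn_host` are made up for illustration). It only demonstrates the rule's shape: pick distinct hosts by weight, then one OSD under each host.

```python
import hashlib
import math

# Hierarchy copied from the decompiled map above: host -> {osd: weight}.
HOSTS = {
    "ceph-node1": {},  # weight 0.000, no OSDs
    "ceph": {"osd.2": 0.015, "osd.1": 0.015, "osd.0": 0.015},
}


def _draw(pg_id, name, weight):
    """Deterministic pseudo-random straw length for one item (toy hash)."""
    digest = hashlib.sha256(f"{pg_id}:{name}".encode()).digest()
    u = (int.from_bytes(digest[:8], "big") + 1) / 2**64  # in (0, 1]
    return math.log(u) / weight  # heavier items tend to draw longer straws


def _pick(pg_id, items, exclude=()):
    """Return the item with the longest straw, skipping zero-weight and excluded ones."""
    candidates = {n: w for n, w in items.items() if w > 0 and n not in exclude}
    if not candidates:
        return None
    return max(candidates, key=lambda n: _draw(pg_id, n, candidates[n]))


def chooseleaf_firstn_host(pg_id, hosts, num_rep):
    """Pick up to num_rep OSDs for a PG, at most one per host."""
    host_weights = {h: sum(osds.values()) for h, osds in hosts.items()}
    chosen_hosts, result = [], []
    while len(result) < num_rep:
        host = _pick(pg_id, host_weights, exclude=chosen_hosts)
        if host is None:  # no more hosts with usable OSDs
            break
        chosen_hosts.append(host)
        result.append(_pick(pg_id, hosts[host]))
    return result
```

With the map shown above, `chooseleaf_firstn_host(7, HOSTS, 3)` returns a single OSD, because only the host ceph contains OSDs and ceph-node1 has weight 0. The same should hold on the real cluster: as long as all OSDs sit under one host, a rule that isolates replicas by host cannot place more than one replica per PG.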