This walkthrough uses version 6.3.1 of Elasticsearch, Logstash, and Kibana.

# Installing and starting Elasticsearch

    wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.3.1.tar.gz
    tar -zxf elasticsearch-6.3.1.tar.gz
    cd elasticsearch-6.3.1/bin
    ./elasticsearch -d

# Installing and starting Logstash

    wget https://artifacts.elastic.co/downloads/logstash/logstash-6.3.1.tar.gz
    tar -zxf logstash-6.3.1.tar.gz
    cd logstash-6.3.1/bin
    ./logstash -f logstash-apache.conf

# Installing and starting Kibana

    wget https://artifacts.elastic.co/downloads/kibana/kibana-6.3.1-linux-x86_64.tar.gz
    tar -zxf kibana-6.3.1-linux-x86_64.tar.gz
    cd kibana-6.3.1-linux-x86_64/bin
    nohup ./kibana &

Then open localhost:5601 in a browser.

To deploy ELK on Windows, see https://segmentfault.com/a/1190000013756857

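The most important piece is the logstash-apache.conf file (the name is up to you).

Logstash can be started in two ways:

1. logstash -e 'configuration text'

2. logstash -f configuration-file (the method used in this post)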
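The two launch modes can be sketched as follows. This is only an illustration: the `LOGSTASH_BIN` variable and both function names are hypothetical helpers, and the inline pipeline (stdin in, `rubydebug` out) is a throwaway example rather than the configuration used in this post. The `--config.test_and_exit` flag asks Logstash to validate a configuration file and exit without starting the pipeline.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; run from logstash-6.3.1/bin or point LOGSTASH_BIN elsewhere.
LOGSTASH_BIN="${LOGSTASH_BIN:-./logstash}"

# Method 1: pass the pipeline inline with -e.
# Each line typed on stdin is printed back as a structured event.
logstash_inline() {
  "$LOGSTASH_BIN" -e 'input { stdin { } } output { stdout { codec => rubydebug } }'
}

# Method 2: read the pipeline from a file with -f.
# With --config.test_and_exit Logstash only validates the file, then exits.
logstash_check() {
  "$LOGSTASH_BIN" -f "${1:-logstash-apache.conf}" --config.test_and_exit
}
```

Calling `logstash_check` before the real run is a cheap way to catch syntax errors in a hand-written configuration file.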

Create a file named logstash-apache.conf in the logstash-6.3.1/bin directory:

    input {
      # Read the Apache access log from the beginning of the file
      file {
        type => "apache_access"
        path => ["/var/log/apache/access.log"]
        start_position => beginning
        ignore_older => 0
      }
      # Read the Apache error log from the beginning of the file
      file {
        type => "apache_error"
        path => ["/var/log/apache/error.log"]
        start_position => beginning
        ignore_older => 0
      }
    }

    filter {
      if [type] == "apache_access" {
        # Split the combined-format line into named fields
        grok {
          match => { "message" => "%{COMBINEDAPACHELOG}" }
        }
        # Use the request time from the log line as the event @timestamp
        date {
          match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]
        }
        # Look up geographic information for the client IP
        geoip {
          source => "clientip"
        }
        # Parse the User-Agent string into the useragent field
        useragent {
          source => "agent"
          target => "useragent"
        }
      } else if [type] == "apache_error" {
        grok {
          match => { "message" => "\[(?<mytimestamp>%{DAY:day} %{MONTH:month} %{MONTHDAY} %{TIME} %{YEAR})\] \[%{WORD:module}:%{LOGLEVEL:loglevel}\] \[pid %{NUMBER:pid}:tid %{NUMBER:tid}\]( \(%{POSINT:proxy_errorcode}\)%{DATA:proxy_errormessage}:)?( \[client %{IPORHOST:client}:%{POSINT:clientport}\])? %{DATA:errorcode}: %{GREEDYDATA:message}" }
        }
        date {
          match => [ "mytimestamp" , "EEE MMM dd HH:mm:ss.SSSSSS yyyy" ]
        }
      }
    }

    output {
      if [type] == "apache_access" {
        # Access events go to one index per month
        elasticsearch {
          hosts => [ "localhost:9200" ]
          index => "apache-access-log-%{+YYYY.MM}"
        }
      } else if [type] == "apache_error" {
        # Error events all go to a single index
        elasticsearch {
          hosts => [ "localhost:9200" ]
          index => "apache-error-log"
        }
      }
    }

input defines where the data comes from.

filter defines how the data is parsed and filtered.

output defines where the data is sent.
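As a rough illustration of how the `%{+YYYY.MM}` suffix in the access-log index resolves: Logstash formats the event's `@timestamp`, which is kept in UTC, so events from different months land in different indices, whereas `apache-error-log` has no date suffix and collects everything in one index. The sketch below only imitates that naming with GNU `date`; the function name is hypothetical and the timestamps are made-up samples.

```shell
#!/usr/bin/env bash
# Hypothetical helper: imitate index => "apache-access-log-%{+YYYY.MM}"
# for a given event time. Requires GNU date (-d). The -u flag mirrors the
# fact that Logstash formats @timestamp in UTC.
access_index_for() {
  date -u -d "$1" '+apache-access-log-%Y.%m'
}

access_index_for '2018-07-10 12:34:56 UTC'     # apache-access-log-2018.07
# Late evening on 31 July at UTC-5 is already 1 August in UTC:
access_index_for '2018-07-31 23:30:00 -0500'   # apache-access-log-2018.08
```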

This post uses Apache logs as the data source: Logstash parses and filters them, ships them to Elasticsearch for storage, and Kibana is then used for visual analysis.

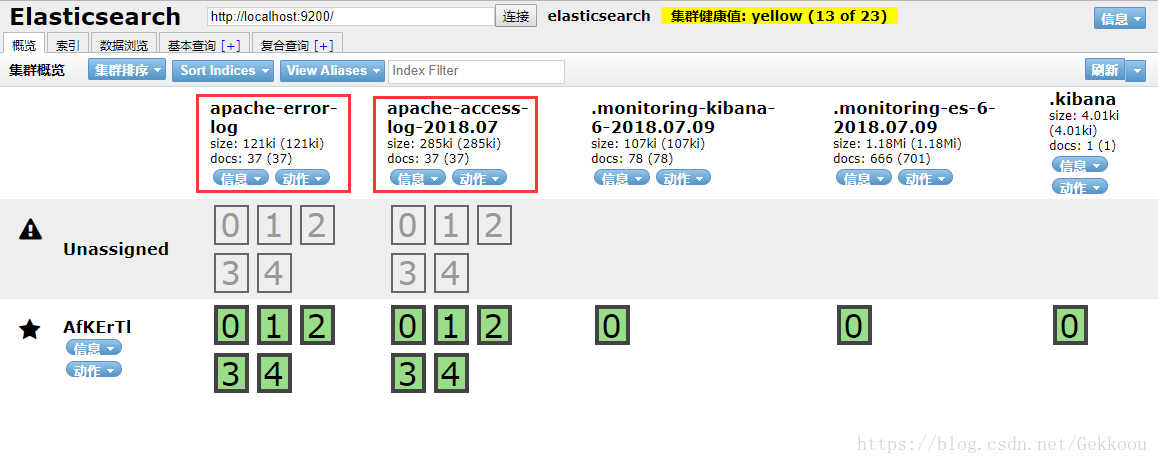
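To try the pipeline without a live Apache server, one option is to append a hand-made line in combined log format to the file that the `file` input watches. The helper below is a sketch: its name and every value in the sample line are invented for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one line in Apache combined log format.
# Arguments (both optional): client IP and request timestamp.
sample_access_line() {
  printf '%s - - [%s] "GET /index.html HTTP/1.1" 200 1024 "-" "Mozilla/5.0"\n' \
    "${1:-203.0.113.7}" "${2:-10/Jul/2018:12:34:56 +0800}"
}

sample_access_line
```

Redirecting the output, for example `sample_access_line >> /var/log/apache/access.log`, gives the `file` input something to pick up (writing to that path usually needs root).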

Once the logstash -f logstash-apache.conf command is running, open the ElasticSearch Head Chrome extension. The result looks like this:

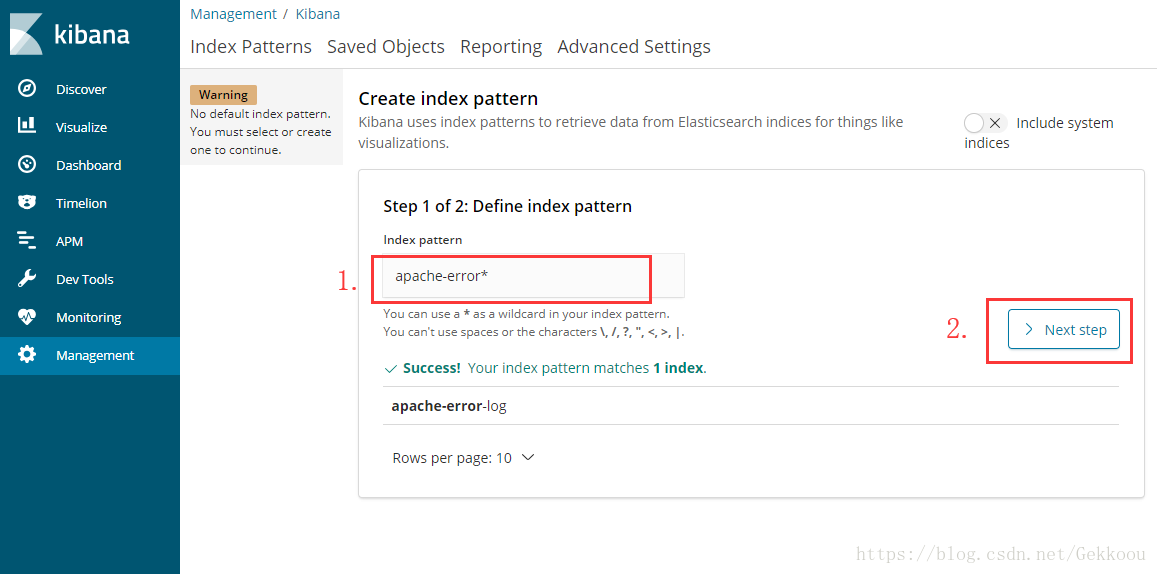
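For readers without the Head extension, a similar check can be made from the command line through Elasticsearch's `_cat/indices` API. A minimal sketch, assuming Elasticsearch listens on `localhost:9200` as in the Logstash output section; the `ES_URL` variable and both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Assumed Elasticsearch address; override ES_URL if yours differs.
ES_URL="${ES_URL:-http://localhost:9200}"

# Build the _cat/indices URL for an index pattern (default: both apache indices).
cat_indices_url() {
  printf '%s/_cat/indices/%s?v' "$ES_URL" "${1:-apache-*}"
}

# Print matching indices with a header row.
# curl exits non-zero if Elasticsearch cannot be reached within 5 seconds.
list_apache_indices() {
  curl -sS --max-time 5 "$(cat_indices_url "$@")"
}
```

Running `list_apache_indices` should show the `apache-error-log` index and one `apache-access-log-*` index per month once events have been shipped.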

Open localhost:5601 in a browser, as shown below:

- Define an index pattern that matches the Elasticsearch indices

- Click Next step

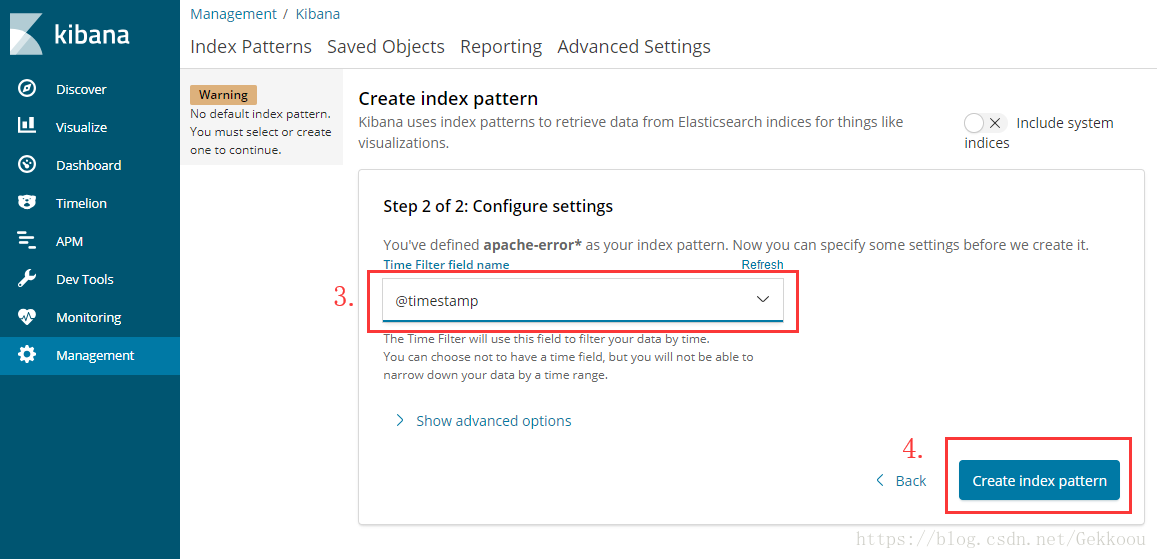

- Choose a field to act as the time filter, which makes it easier to narrow down the data

- Create the index pattern

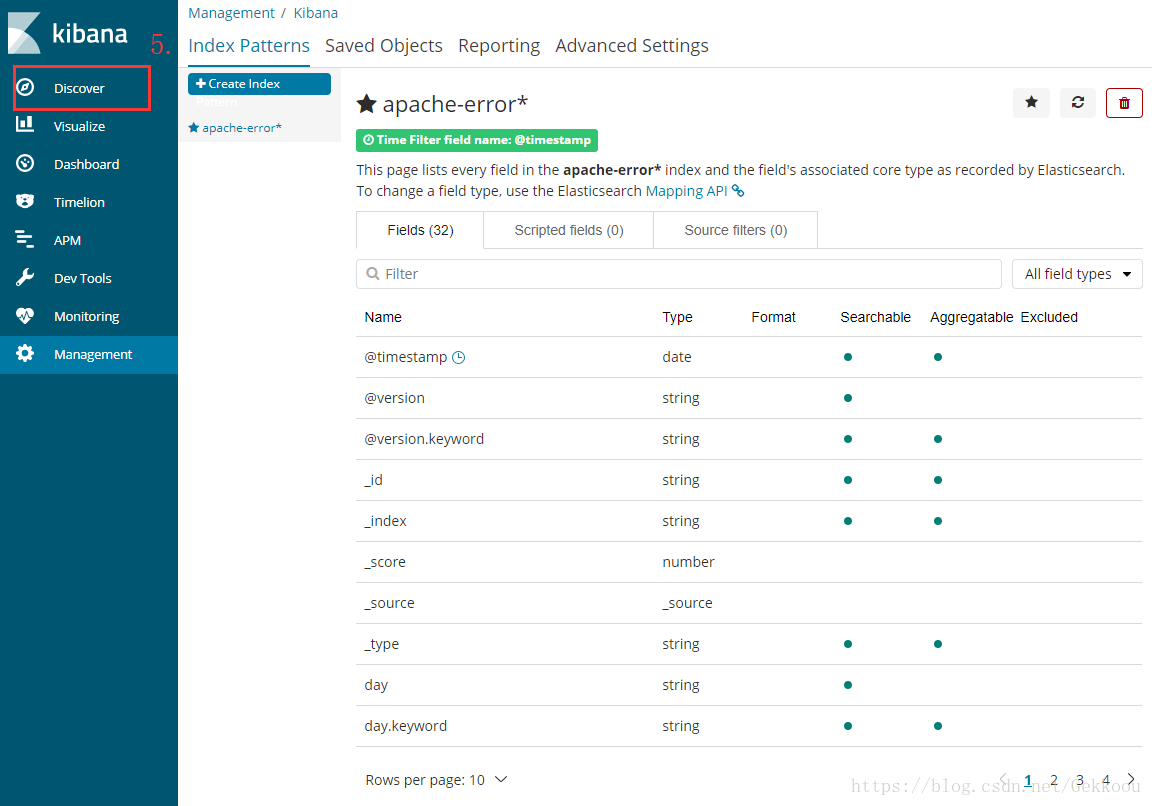

- Click Discover

- Now you can filter and explore the data to your heart's content~
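The kind of filtering done in Discover can also be approximated with a URI search against Elasticsearch. The sketch below assumes the `response` field produced by the `COMBINEDAPACHELOG` grok pattern and the `apache-access-log-*` index naming from the Logstash configuration; the `ES_URL` variable and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Assumed Elasticsearch address; override ES_URL if yours differs.
ES_URL="${ES_URL:-http://localhost:9200}"

# Build a URI-search URL over the access-log indices.
# $1: query in Lucene syntax (not URL-encoded here, so keep it simple)
# $2: number of hits to return (default 5)
search_url() {
  printf '%s/apache-access-log-*/_search?q=%s&size=%s&pretty' "$ES_URL" "$1" "${2:-5}"
}

# Run the search and print the JSON response.
search_access_log() {
  curl -sS --max-time 5 "$(search_url "$@")"
}
```

For example, `search_access_log 'response:404'` would return up to five requests that ended in a 404.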

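(To be honest, I don't know Kibana all that well. If this part left you confused or unsatisfied, Google or Baidu will serve you better. How embarrassing ⊙_⊙!!!!)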

Recommended reading

Elasticsearch: The Definitive Guide (Chinese edition)

https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html

Logstash Reference

https://www.elastic.co/guide/en/logstash/6.3/index.html

Logstash filter plugins in detail

https://www.elastic.co/guide/en/logstash/6.3/filter-plugins.html

Kibana User Guide (Chinese edition)

https://www.elastic.co/guide/cn/kibana/current/index.html