Spark 2.3 - Runtime Exception NoSuchMethodError: io.netty.buffer.PooledByteBufAllocator.metric()

I. Problem Description

One project pulled in several frameworks at the same time:

- hbase 1.4.1

- kafka 1.1.0

- spark 2.3.0

While running a Spark example program in local mode, I got the following error:

```
Exception in thread "main" java.lang.NoSuchMethodError: io.netty.buffer.PooledByteBufAllocator.metric()Lio/netty/buffer/PooledByteBufAllocatorMetric;
	at org.apache.spark.network.util.NettyMemoryMetrics.registerMetrics(NettyMemoryMetrics.java:80)
	at org.apache.spark.network.util.NettyMemoryMetrics.<init>(NettyMemoryMetrics.java:76)
	at org.apache.spark.network.client.TransportClientFactory.<init>(TransportClientFactory.java:109)
	at org.apache.spark.network.TransportContext.createClientFactory(TransportContext.java:99)
	at org.apache.spark.rpc.netty.NettyRpcEnv.<init>(NettyRpcEnv.scala:71)
	at org.apache.spark.rpc.netty.NettyRpcEnvFactory.create(NettyRpcEnv.scala:461)
	at org.apache.spark.rpc.RpcEnv$.create(RpcEnv.scala:57)
	at org.apache.spark.SparkEnv$.create(SparkEnv.scala:249)
	at org.apache.spark.SparkEnv$.createDriverEnv(SparkEnv.scala:175)
	at org.apache.spark.SparkContext.createSparkEnv(SparkContext.scala:256)
	at org.apache.spark.SparkContext.<init>(SparkContext.scala:423)
	at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2486)
	at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:930)
	at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:921)
	at scala.Option.getOrElse(Option.scala:121)
	at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:921)
	at spark.ml.JavaAFTSurvivalRegressionExample.main(JavaAFTSurvivalRegressionExample.java:50)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at com.intellij.rt.execution.application.AppMain.main(AppMain.java:147)
```

II. Problem Analysis

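After some searching, this thread (http://www.aboutyun.com/thread-24429-1-1.html) pointed me to the likely cause: several versions of netty among the dependencies, which makes the program throw NoSuchMethodError. That fits the symptom. A NoSuchMethodError at runtime usually means the calling code (here Spark's NettyMemoryMetrics) was compiled against a version of a class that has the method. The version actually loaded from the classpath does not.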
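One quick way to test this hypothesis is to ask the JVM two things: where it actually loaded PooledByteBufAllocator from, and whether that copy has `metric()`. Below is a minimal sketch of such a check. The class name `ClasspathProbe` is hypothetical. It uses only standard reflection, so it compiles without netty on the build path. Run it with the same classpath as the failing program.

```java
import java.lang.reflect.Method;
import java.security.CodeSource;

/**
 * Hypothetical diagnostic helper: reports where a class was loaded from and
 * whether it has a public method with a given name. Reflection only, so it
 * compiles even when netty is not on the build path.
 */
public class ClasspathProbe {

    // Load without running static initializers, using this class's loader.
    private static Class<?> load(String className) throws ClassNotFoundException {
        return Class.forName(className, false, ClasspathProbe.class.getClassLoader());
    }

    /** Jar/directory URL the class came from, or a short note if unavailable. */
    static String locationOf(String className) {
        try {
            CodeSource source = load(className).getProtectionDomain().getCodeSource();
            // JDK bootstrap classes have no CodeSource.
            if (source == null || source.getLocation() == null) {
                return "bootstrap/unknown";
            }
            return source.getLocation().toString();
        } catch (ClassNotFoundException e) {
            return "not on classpath";
        }
    }

    /** True if the class is loadable and declares or inherits a public method with this name. */
    static boolean hasPublicMethod(String className, String methodName) {
        try {
            for (Method m : load(className).getMethods()) {
                if (m.getName().equals(methodName)) {
                    return true;
                }
            }
            return false;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String cls = "io.netty.buffer.PooledByteBufAllocator";
        System.out.println(cls + " loaded from: " + locationOf(cls));
        System.out.println("has metric(): " + hasPublicMethod(cls, "metric"));
    }
}
```

If the printed jar is an older netty-all and `metric()` is reported missing, the classpath conflict is confirmed.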

Next, I ran `mvn dependency:tree >> log/dependency.log` to dump the project's current jar dependencies. Searching the log file turned up:

- `org.apache.hbase:hbase-client:jar:1.4.1:compile` brings in `io.netty:netty-all:jar:4.1.8.Final:compile`
- `org.apache.spark:spark-core_2.11:jar:2.3.0:compile` brings in `io.netty:netty:jar:3.9.9.Final:compile`

My initial diagnosis was that these two jars were conflicting.
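Searching the log by hand works for a small project, but the same search can be scripted. Below is a minimal sketch. It assumes the default `groupId:artifactId:type:version:scope` lines that `mvn dependency:tree` prints; entries with a classifier would be misparsed. The class name `NettyTreeScan` is hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: lists every io.netty artifact found in dependency:tree output. */
public class NettyTreeScan {

    // io.netty:<artifactId>:<type>:<version>:<scope>  (no classifier assumed)
    private static final Pattern NETTY =
        Pattern.compile("io\\.netty:([\\w.-]+):[\\w-]+:([\\w.-]+):(\\w+)");

    /** Returns entries like "netty-all 4.1.8.Final (compile)" in order of appearance. */
    static List<String> findNetty(List<String> lines) {
        List<String> hits = new ArrayList<>();
        for (String line : lines) {
            Matcher m = NETTY.matcher(line);
            if (m.find()) {
                hits.add(m.group(1) + " " + m.group(2) + " (" + m.group(3) + ")");
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        // Defaults to the redirect target used with mvn; pass another path as args[0].
        String path = args.length > 0 ? args[0] : "log/dependency.log";
        // ISO-8859-1 never rejects bytes, and Maven coordinates are plain ASCII.
        List<String> lines = Files.readAllLines(Paths.get(path), StandardCharsets.ISO_8859_1);
        for (String hit : findNetty(lines)) {
            System.out.println(hit);
        }
    }
}
```

Each output line names one netty artifact and the version Maven resolved for it on that path.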

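My first attempt was to use an `<exclusions>` element to strip all the older netty versions out of spark-core, but it had no effect. In hindsight that is expected. Netty 3.x (`io.netty:netty`) lives in the `org.jboss.netty` package, so it cannot clash with `io.netty.buffer.PooledByteBufAllocator`. That class comes from `netty-all`. The copy Maven actually selected was most likely the 4.1.8.Final one brought in by hbase-client, which evidently lacks `metric()`.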

Later I found that someone on Stack Overflow had run into a similar problem: https://stackoverflow.com/questions/49137397/spark-2-3-0-netty-version-issue-nosuchmethod-io-netty-buffer-pooledbytebufalloc?utm_medium=organic&utm_source=google_rich_qa&utm_campaign=google_rich_qa

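That thread mainly revisits Maven's transitive dependency mechanism (https://maven.apache.org/guides/introduction/introduction-to-dependency-mechanism.html). When several versions of the same artifact appear in the tree, Maven keeps the one nearest to the project; if two are at the same depth, the first one declared wins.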

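The fix suggested in the comments is to manage the competing versions with `<dependencyManagement>`. This sets the version Maven uses for an artifact wherever it appears as a transitive dependency. Add the following: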

```xml
<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>io.netty</groupId>
            <artifactId>netty-all</artifactId>
            <version>4.1.18.Final</version>
        </dependency>
    </dependencies>
</dependencyManagement>
```

Note: the version here was chosen based on the netty version shown in the log file generated by mvn.
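Why pinning the version works can be illustrated with a simplified model of the rules from the Maven page. The nearest path wins, and the earliest declaration breaks a tie. A version listed in `<dependencyManagement>` then replaces the version of a transitive winner. This is only an illustration, not Maven's resolver. The class name `MediationSketch`, the depths, and the Spark-side version `4.1.17.Final` are all assumed values, not read from the real tree.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/**
 * Simplified model (not Maven's real resolver) of how one version of an
 * artifact is chosen: nearest path wins, earliest declaration breaks a tie,
 * and dependencyManagement overrides the version of a transitive winner.
 */
public class MediationSketch {

    /** One path to the artifact in the dependency tree. */
    static final class Candidate {
        final String version;
        final int depth;            // 1 = declared directly in this pom
        final int declarationOrder; // index of the top-level dependency that leads here

        Candidate(String version, int depth, int declarationOrder) {
            this.version = version;
            this.depth = depth;
            this.declarationOrder = declarationOrder;
        }
    }

    /** managedVersion is null when the artifact is not listed in dependencyManagement. */
    static String resolve(List<Candidate> candidates, String managedVersion) {
        Candidate winner = candidates.stream()
            .min(Comparator.comparingInt((Candidate c) -> c.depth)
                           .thenComparingInt(c -> c.declarationOrder))
            .orElseThrow(() -> new IllegalArgumentException("no candidates"));
        // Assumes direct declarations carry an explicit version, which then wins;
        // for a transitive winner the managed version replaces the requested one.
        if (winner.depth > 1 && managedVersion != null) {
            return managedVersion;
        }
        return winner.version;
    }

    public static void main(String[] args) {
        // Assumed inputs: both paths reach netty-all at depth 2, hbase-client is
        // declared before spark-core, and Spark asks for 4.1.17.Final.
        List<Candidate> nettyAll = Arrays.asList(
            new Candidate("4.1.8.Final", 2, 0),   // via hbase-client
            new Candidate("4.1.17.Final", 2, 2)); // via spark-core (assumed)
        System.out.println(resolve(nettyAll, null));           // nearest/first wins
        System.out.println(resolve(nettyAll, "4.1.18.Final")); // managed version
    }
}
```

With these inputs, `main` prints `4.1.8.Final` first, because hbase-client is declared earlier at the same depth. Once the version is managed, it prints `4.1.18.Final`.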

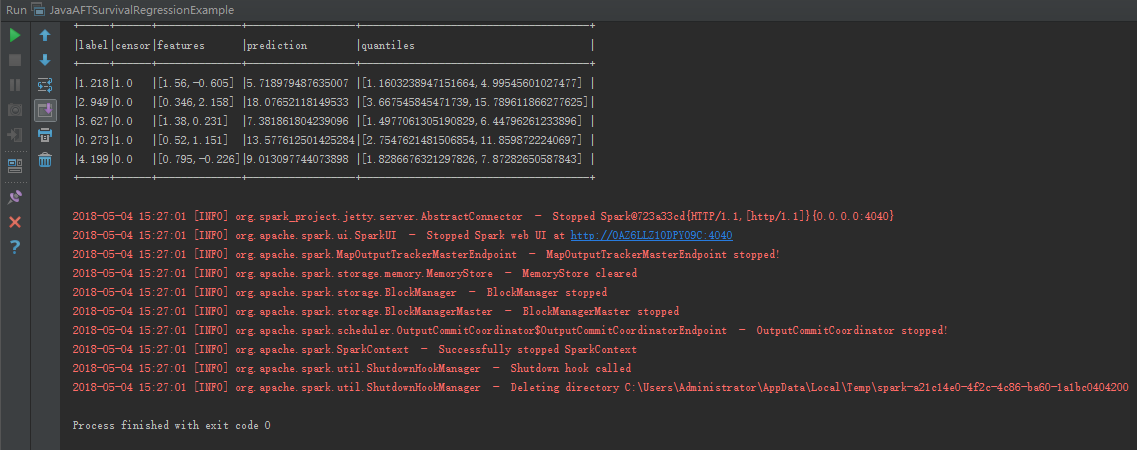

III. Result

After adding this configuration, the program runs normally.

IV. Complete Dependencies

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <properties>
        <java.version>1.8</java.version>
        <scala.version>2.11.12</scala.version>
        <hbase.version>1.4.1</hbase.version>
        <kafka.version>1.1.0</kafka.version>
        <spark.version>2.3.0</spark.version>
    </properties>

    <!-- Pin netty-all so the 4.1.8.Final copy from hbase-client is not selected -->
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>io.netty</groupId>
                <artifactId>netty-all</artifactId>
                <version>4.1.18.Final</version>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependencies>
        <!-- HBase -->
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>${hbase.version}</version>
        </dependency>

        <!-- Kafka -->
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka-clients</artifactId>
            <version>${kafka.version}</version>
        </dependency>

        <!-- Spark -->
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.11</artifactId>
            <version>${spark.version}</version>
            <exclusions>
                <exclusion>
                    <groupId>io.netty</groupId>
                    <artifactId>netty</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_2.11</artifactId>
            <version>${spark.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-mllib_2.11</artifactId>
            <version>${spark.version}</version>
        </dependency>

        <!-- Logging -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>1.7.21</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.21</version>
        </dependency>

        <!-- Lombok, to cut boilerplate -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.16.20</version>
        </dependency>

        <!-- Testing -->
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.11</version>
            <scope>test</scope>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.5.1</version>
                <configuration>
                    <source>${java.version}</source>
                    <target>${java.version}</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```