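Redis is a key-value in-memory database. Without persistence enabled, it can be treated as a block of memory: every entry should be given an expiry time, or you have to keep track of the keys yourself and delete them; otherwise the data stays in memory indefinitely.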

Background:

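A mobile operator in province X raised an ad hoc requirement: from the real-time stream of user internet access logs, take the access start time, the phone number, and the code of the app accessed, and compute in real time each user's top 10 apps by access count over the past hour. Software environment:

An IBM Streams real-time stream-processing cluster is already in place; the Kafka source topic already exists; Redis and GemFire XD clusters are both available (the Redis cluster carries few workloads, GemFire XD carries more).

Source fields: phone number, access start time, appId. Source volume: 23 billion records per 24 hours, about 200,000 records/s on average; after discarding records older than one hour, only about 40,000 to 50,000 records/s remain.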

Initial design options

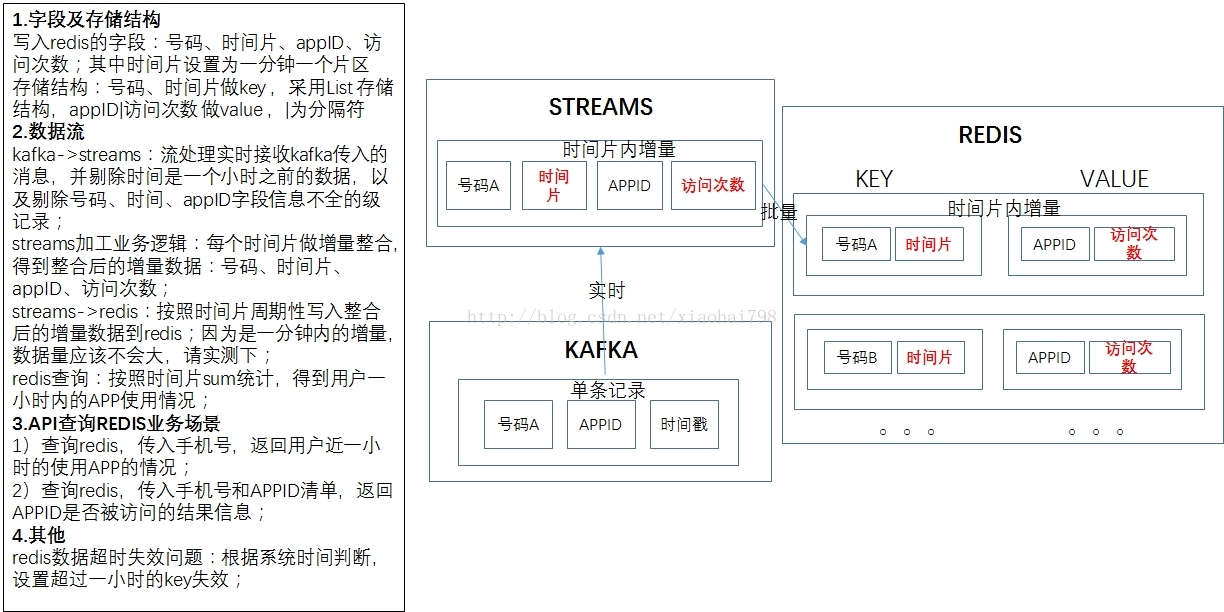

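1. The stream job filters the source data record by record: records where any of the three fields is empty are removed, and records older than one hour are discarded outright. Each record is tagged with a time slice in the format 2016-11-15 17:10:00, which advances once per minute.

Each record is then written to the Redis cluster, with the expiry set to one hour on every write, and the API side does the statistics. This approach is expected to need a sustained Redis cluster write rate of about 40,000 to 50,000 writes/s.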
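The filtering and time-slice step of option 1 can be sketched in plain Java. This is only an illustration under stated assumptions, not the job's actual code: the class `TimeSliceSketch` and its methods are hypothetical, the slice is derived here by truncating the record's own start time to the minute (the SPL code later in this post takes it from a Beacon signal instead), and the `msisdn,slice` key layout is borrowed from the RedisSink operator shown further down.

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.time.temporal.ChronoUnit;
import java.util.Optional;

// Hypothetical helper for illustration; not part of the original job.
final class TimeSliceSketch {
    private static final DateTimeFormatter FMT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /**
     * Returns the minute-aligned time slice for a record, or empty when the
     * record should be dropped (a blank field, an unparseable time, or a
     * start time one hour or more before "now").
     */
    static Optional<String> timeSlice(String msisdn, String startTime,
                                      String appId, LocalDateTime now) {
        if (isBlank(msisdn) || isBlank(startTime) || isBlank(appId)) {
            return Optional.empty();
        }
        LocalDateTime start;
        try {
            start = LocalDateTime.parse(startTime.trim(), FMT);
        } catch (DateTimeParseException e) {
            return Optional.empty();
        }
        if (Duration.between(start, now).getSeconds() >= 3600) {
            return Optional.empty();
        }
        // Assumption: the slice is the start time with seconds zeroed.
        return Optional.of(start.truncatedTo(ChronoUnit.MINUTES).format(FMT));
    }

    /** Key layout mirroring the RedisSink operator: msisdn + "," + slice. */
    static String redisKey(String msisdn, String slice) {
        return msisdn + "," + slice;
    }

    private static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }

    public static void main(String[] args) {
        LocalDateTime now = LocalDateTime.of(2016, 11, 15, 17, 10, 30);
        // A recent record is kept and tagged with its minute slice.
        System.out.println(timeSlice("13800000000", "2016-11-15 17:10:12", "app1", now));
        // A record older than one hour is dropped.
        System.out.println(timeSlice("13800000000", "2016-11-15 16:00:00", "app1", now));
    }
}
```

Because the expiry is reset on every write, a key in this scheme disappears roughly one hour after its last write, which is what bounds the memory use.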

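2. The stream job filters the source data record by record: records where any of the three fields is empty are removed, and records older than one hour are discarded outright. Every minute a signal time (the current time) is generated, and a one-minute window is chosen relative to that time.

For records whose access time falls within the one minute preceding the signal time, the job counts occurrences per (phone number, signal time, appId) and writes these counts to Redis incrementally.

The API side reads the 60 time slices (signal times) and then aggregates them.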
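The API-side aggregation can be sketched as follows. This is a minimal sketch under assumptions, not the actual API code: the class `TopAppsSketch` is hypothetical, and it assumes the caller has already fetched one list per time slice from Redis (for example one list per `msisdn,minTime` key), with entries in the `appId|cnts` form that the RedisSink operator later in this post writes.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not part of the original API code.
final class TopAppsSketch {

    /**
     * Merges per-minute "appId|cnts" entries for one user and returns the
     * n apps with the highest total count (ties broken by appId).
     */
    static List<Map.Entry<String, Integer>> topApps(List<List<String>> slices, int n) {
        Map<String, Integer> totals = new HashMap<>();
        for (List<String> slice : slices) {
            for (String entry : slice) {
                int sep = entry.lastIndexOf('|');
                if (sep <= 0) {
                    continue; // skip malformed entries
                }
                int cnt;
                try {
                    cnt = Integer.parseInt(entry.substring(sep + 1));
                } catch (NumberFormatException e) {
                    continue; // skip entries with a non-numeric count
                }
                totals.merge(entry.substring(0, sep), cnt, Integer::sum);
            }
        }
        List<Map.Entry<String, Integer>> sorted = new ArrayList<>(totals.entrySet());
        sorted.sort((a, b) -> {
            int byCount = Integer.compare(b.getValue(), a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        return new ArrayList<>(sorted.subList(0, Math.min(n, sorted.size())));
    }

    public static void main(String[] args) {
        List<List<String>> slices = new ArrayList<>();
        slices.add(List.of("app1|3", "app2|1")); // minute 1
        slices.add(List.of("app1|2", "app3|5")); // minute 2
        for (Map.Entry<String, Integer> e : topApps(slices, 10)) {
            System.out.println(e.getKey() + " " + e.getValue());
        }
    }
}
```

With 60 slices per user the merge is cheap; the 60 list reads per query are likely to dominate the cost of the API call.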

In the end, option 1 was implemented.

Code:

Streams (SPL) code for consuming from Kafka:

namespace com.asiainfo.position;

use com.zjmcc.kafka.consumer::*;

type ti_userBeav=rstring msisdn,rstring dataTime,rstring appId;

type fullMseeage=rstring a0,rstring a1,rstring a2,rstring a3,rstring a4,rstring a5,rstring a6,rstring a7,rstring a8,rstring a9,rstring a10,rstring a11,rstring a12,rstring a13,rstring a14,rstring a15,rstring a16,rstring a17,rstring a18,rstring a19,rstring a20,rstring a21,rstring a22;

composite UserBehaviorSource {

graph

stream<rstring message> UserBehavStream = KafkaConsumer()

{

param

vmArg:"-Xmx4096m";

topic: "seq_101_internet";

threadsPerTopic:15;

propertiesFile:"etc/consumer.properties";

//CONCURRENCY_THREAD_NUM:10;

//topic_partition:getChannel();

config placement:host("pc-zjqbsp15");

}

/*

() as Sink5 = FileSink(UserBehavStream)

{

param

file : "/opt/data/streamdtklad/data/kafkaUserBeav.dat";

closeMode : dynamic;

append : true;

config placement:host("pc-zjqbsp16");

}

* */

@parallel(width=30)

() as Res = UserBeavSignalProccess(UserBehavStream){

config placement:host(sqlPool3);

}

config hostPool : sqlPool3=[ "pc-zjqbsp05","pc-zjqbsp06", "pc-zjqbsp16", "pc-zjqbsp24", "pc-zjqbsp25"];

}

composite UserBeavSignalProccess(input UserBehavStream){

graph

(stream<timestamp tag1> currentTime1) as Beaconcuu = Beacon()

{

param

period : 60.0 ;

initDelay : 60.0 ;

output

currentTime1 : tag1 = getTimestamp() ;

}

(stream<ti_userBeav> ub_tmp) = Custom(UserBehavStream;currentTime1)

{

logic state :{

mutable list<rstring> res;

mutable rstring msisdn;

mutable rstring dataTime;

mutable rstring appId;

mutable timestamp standrtime;

}

onTuple currentTime1:{

standrtime=currentTime1.tag1;

}

onTuple UserBehavStream:{////

res=tokenize(UserBehavStream.message," ",true);

if(size(res) >= 22&&diffAsSecs(standrtime,toTimestamp(Sys.YYYY_MM_DD_hh_mm_ss ,res[2]))<3600.0){

submit({msisdn=res[1],dataTime=res[2],appId=res[21]},ub_tmp);

//submit({a0=res[0],a1=res[1],a2=res[2],a3=res[3],a4=res[4],a5=res[5],a6=res[6],a7=res[7],a8=res[8],a9=res[9],a10=res[10],a11=res[11],a12=res[12],a13=res[13],a14=res[14],a15=res[15],a16=res[16],a17=res[17],a18=res[18],a19=res[19],a20=res[20],a21=res[21],a22=res[22]},fullMseeageIfs);

}

}

}

() as SiRes = Export(ub_tmp)

{

param

streamId : "KafkaUBSignal_"+(rstring)getChannel() ;

}

}

Streams (SPL) code for aggregation and writing to Redis:

namespace com.asiainfo.position;

use application::*;

composite BasicUerBehav {

graph

@parallel(width=30)

stream<rstring minTime,rstring msisdn,rstring appId,int32 cnts> userBeaData = getBeaData(){}

@parallel(width=20,partitionBy=[{port=userBeaData, attributes=[msisdn]}])

() as redisRes = RedisSink(userBeaData)

{

}

config hostPool : sqlPoollw=["pc-zjqbsp06", "pc-zjqbsp16", "pc-zjqbsp24" , "pc-zjqbsp25","pc-zjqbsp05"]; // "pc-zjqbsp04",,

placement: host(sqlPoollw);

}

composite getBeaData(output addInfo){

graph

stream<rstring msisdn,rstring dataTime,rstring appId> Si_init = Import()

{

param

applicationName : "com.asiainfo.position::UserBehaviorSource";

streamId : "KafkaUBSignal_"+(rstring)getChannel() ;

}

stream<rstring msisdn,rstring dataTime,rstring appId> Si_tmp = Filter(Si_init)

{

}

(stream<timestamp tag1> currentTime1) as Beacon_1 = Beacon()

{

param

period : 60.0 ;

//initDelay : 60.0 ;

output

currentTime1 : tag1 = getTimestamp();//add(,-86400.0)

}

stream<rstring msisdn,timestamp dataTime,rstring appId> userBeaData = Custom(Si_tmp;currentTime1){

logic state:{

mutable timestamp standrtime;

}

/*

onTuple currentTime1:{

standrtime=currentTime1.tag1;

}

*/

onTuple Si_tmp:{//diffAsSecs(standrtime,toTimestamp(Sys.YYYY_MM_DD_hh_mm_ss ,Si_tmp.dataTime))<3600.0

if(length(Si_tmp.msisdn)>0&&length(Si_tmp.dataTime)>0&&length(Si_tmp.appId)>0){

//submit({msisdn=Si_tmp.msisdn,dataTime=Si_tmp.dataTime,appId=Si_tmp.appId,minTime=toString(standrtime, "%Y-%m-%d")+" "+toString(standrtime, "%H:%M"+":00")},userBeaData);

submit({msisdn=Si_tmp.msisdn,dataTime=toTimestamp(Sys.YYYY_MM_DD_hh_mm_ss ,Si_tmp.dataTime),appId=Si_tmp.appId},userBeaData);

//}

}

}

}

(stream<timestamp minTime,rstring msisdn,rstring appId,rstring dataTime> add_user_bea) as Join_Add_UserBea =

Join(userBeaData as LS ; currentTime1 as RS)

{

window

LS : sliding, time(180.000), partitioned ;

RS : sliding, count(0) ;

param

algorithm : inner ;

partitionByLHS : LS.appId ;

// partitionByLHS : LS.msisdn;

match : LS.dataTime <= RS.tag1 && diffAsSecs(RS.tag1, LS.dataTime)

<= 60.000 ;

output

add_user_bea : minTime = RS.tag1, msisdn = LS.msisdn,appId=LS.appId,dataTime=toString(LS.dataTime, "%Y-%m-%d")+" "+toString(LS.dataTime, "%H:%M:%S") ;//toString(So_all_region_grp.minTime, "%Y-%m-%d")+" "+toString(So_all_region_grp.minTime, "%H:%M:%S") stayDura add by hezh at 20160711

}

(stream<timestamp minTime,rstring msisdn,rstring appId,int32 cnts> So_all_region_grp) as Aggregate_all =

Aggregate(add_user_bea as inPort0Alias)

{

window

inPort0Alias : tumbling, punct() ;

param

groupBy : minTime, msisdn ,appId;

output

So_all_region_grp : cnts = Sum(1);

}

stream<rstring minTime,rstring msisdn,rstring appId,int32 cnts> addInfo =Custom(So_all_region_grp){

logic

onTuple So_all_region_grp:{

submit({minTime=toString(So_all_region_grp.minTime, "%Y-%m-%d")+" "+toString(So_all_region_grp.minTime, "%H:%M:00"),msisdn=So_all_region_grp.msisdn,appId=So_all_region_grp.appId,cnts=So_all_region_grp.cnts},addInfo);

}

}

/*

() as Sink5 = FileSink(addInfo)

{

param

file : "/opt/data/streamdtklad/data/kafkaUserBeavsnap"+(rstring)getChannel()+".dat";

closeMode : dynamic;

append : true;

config placement:host("pc-zjqbsp16");

}

*/

}

RedisConfig.java

package redis;

import java.io.File;

import java.io.FileInputStream;

import java.io.IOException;

import java.util.Properties;

import java.io.InputStream;

import java.lang.reflect.Field;

public class RedisConfig {

/******************Message*************************/

public static String MSG_SPLIT=",";

public static int MSG_NO_MSISDN=0;

public static int MSG_NO_LAC=2;

public static int MSG_NO_CELL=3;

public static int MSG_NO_EVENTTIME=4;

/******************Redis*************************/

//public static String REDIS_HOST = "redis-single";

public static String REDIS_HOST = "10.70.134.140";

public static int REDIS_PORT = 16379;

public static int REDIS_CONN_TOTAL = 100;

public static int REDIS_CONN_IDLE = 5;

public static Long REDIS_CONN_WAIT = 30000l;

public static boolean REDIS_TESTONBORROW = false;

public static int REDIS_DB_TOTAL = 10;

public static int REDIS_DB_NO = 0;

/******************Kafka*************************/

public static String KAFKA_ZK_CONN = "192.168.1.20:2181";

public static String KAFKA_GRP_ID = "TEST";

public static String KAFKA_TOPIC = "signal0000";

public static String KAFKA_ZK_TMOUT = "4000";

public static String KAFKA_ZK_SYNC = "200";

public static String KAFKA_AUTO_COMM = "1000";

public static String KAFKA_OFFSET = "smallest";

/*******************File****************************/

public static int FILE_FLUSH_BATCH = 5000;

public static String FILE_LOCUS_DIR = "F:\\Test\\Output\\";

/**

*

* @param file

*/

public static void overStaticInfo(File file){

Properties prop = new Properties();

InputStream fis = null;

try {

fis = new FileInputStream(file);

prop.load(fis);

Field[] field = RedisConfig.class.getDeclaredFields();

for(int i=0;i<field.length;i++){

String val = prop.getProperty(field[i].getName());

if(val != null){

if(field[i].getType() == boolean.class){

field[i].set(null,Boolean.parseBoolean(val));

}else if(field[i].getType() == Long.class){

field[i].set(null,Long.parseLong(val));

}else if(field[i].getType() == int.class){

field[i].set(null,Integer.parseInt(val));

}else{

field[i].set(null,val);

}

}

}

} catch (Exception e) {

System.out.println("ps:ERROR Init RedisConfig failed !");

e.printStackTrace();

System.exit(-1);

}finally{

try {

if(fis != null){

fis.close();

}

} catch (IOException e) {

e.printStackTrace();

}

}

}

public static void printInfo(){

System.out.println("########----Config Info:----########");

Field[] field = RedisConfig.class.getDeclaredFields();

for(int i=0;i<field.length;i++){

try {

System.out.println("ps:INFO "+field[i].getName()+"="+field[i].get(null));

} catch (Exception e) {

e.printStackTrace();

}

}

System.out.println("########----:Config Info----########");

}

}

Redis data source:

package redis;

import java.util.ArrayList;

import java.util.HashSet;

import java.util.LinkedList;

import java.util.List;

import java.util.Set;

import org.apache.commons.pool2.impl.GenericObjectPoolConfig;

import redis.RedisConfig;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.HostAndPort;

//import redis.clients.jedis.Jedis;

import redis.clients.jedis.JedisPool;

import redis.clients.jedis.JedisCluster;

import redis.clients.jedis.JedisPoolConfig;

import redis.clients.jedis.JedisShardInfo;

import redis.clients.jedis.ShardedJedis;

import redis.clients.jedis.ShardedJedisPool;

public class RedisDataSource {

//private Jedis jedis; // non-sharded client connection

//private static JedisPool jedisPool; // non-sharded connection pool

//private ShardedJedis shardedJedis; // sharded client connection

//private ShardedJedisPool shardedJedisPool; // sharded connection pool

private static List<JedisPool> JedisPoolList = new ArrayList<>();

private static JedisPool jedisPool;

private static JedisCluster jedisCluster//= new JedisCluster(getJedisClusterNodes(), 10000,getCfg())

;

public static Jedis getSource(int database){

if(JedisPoolList.size() == 0){

initJedisPoolList();

}

if(database < 0 || database >= JedisPoolList.size()){ // valid indexes are 0..size-1

return null;

}else{

return JedisPoolList.get(database).getResource();

}

}

public static JedisCluster getSource(){

/*if(jedisPool == null){

initJedisPool();

}

return jedisPool.getResource();*/

if(jedisCluster==null){

initJedisPool();

}

return jedisCluster;

}

private static GenericObjectPoolConfig getCfg(){

GenericObjectPoolConfig config = new GenericObjectPoolConfig();

config.setMaxTotal(RedisConfig.REDIS_CONN_TOTAL);

config.setMaxIdle(RedisConfig.REDIS_CONN_IDLE);

config.setMaxWaitMillis(RedisConfig.REDIS_CONN_WAIT);

config.setTestOnBorrow(RedisConfig.REDIS_TESTONBORROW);

return config;

}

private static Set<HostAndPort> getJedisClusterNodes(){

Set<HostAndPort> jedisClusterNodes = new HashSet<HostAndPort>();

//Jedis Cluster will attempt to discover cluster nodes automatically

jedisClusterNodes.add(new HostAndPort(RedisConfig.REDIS_HOST, RedisConfig.REDIS_PORT));

return jedisClusterNodes;

}

private static void initJedisPoolList(){

for(int i=0;i<RedisConfig.REDIS_DB_TOTAL;i++){

JedisPoolList.add(new JedisPool(getCfg(),RedisConfig.REDIS_HOST,RedisConfig.REDIS_PORT,10000,null,i));

}

}

private static void initJedisPool(){

Set<HostAndPort> jedisClusterNodes = new HashSet<HostAndPort>();

//Jedis Cluster will attempt to discover cluster nodes automatically

jedisClusterNodes.add(new HostAndPort(RedisConfig.REDIS_HOST, RedisConfig.REDIS_PORT));

jedisCluster= new JedisCluster(jedisClusterNodes, 10000,getCfg());

//jedisCluster= new JedisCluster(jedisClusterNodes, 10000);

//single-instance Redis

//jedisPool = new JedisPool(getCfg(),RedisConfig.REDIS_HOST,RedisConfig.REDIS_PORT,10000,null,RedisConfig.REDIS_DB_NO);

}

/*

// initialize the non-sharded pool

private static void initialPool()

{

// basic pool configuration

GenericObjectPoolConfig config = new GenericObjectPoolConfig();

config.setMaxTotal(1);

config.setMaxIdle(5);

config.setMaxWaitMillis(1000l);

config.setTestOnBorrow(false);

jedisPool = new JedisPool(config,"192.168.1.22",6379);

}

// initialize the sharded pool

private void initialShardedPool()

{

// basic pool configuration

GenericObjectPoolConfig config = new GenericObjectPoolConfig();

config.setMaxTotal(20);

config.setMaxIdle(5);

config.setMaxWaitMillis(1000l);

config.setTestOnBorrow(false);

// slave connection

List<JedisShardInfo> shards = new ArrayList<JedisShardInfo>();

shards.add(new JedisShardInfo("192.169.1.22", 6379, "master"));

// build the pool

shardedJedisPool = new ShardedJedisPool(config, shards);

}

public void show() {

KeyOperate();

StringOperate();

ListOperate();

SetOperate();

SortedSetOperate();

HashOperate();

}

private void KeyOperate() {

}

private void StringOperate() {

}

private void ListOperate() {

}

private void SetOperate() {

}

private void SortedSetOperate() {

}

private void HashOperate() {

}

*/

}

Java operator class that extends the Streams AbstractOperator and obtains the Redis instance:

package application;

import java.io.IOException;

import java.sql.SQLException;

import java.util.HashMap;

import java.util.Map.Entry;

import org.apache.log4j.Logger;

import redis.RedisDataSource;

import redis.clients.jedis.JedisCluster;

import com.ibm.streams.operator.AbstractOperator;

import com.ibm.streams.operator.OperatorContext;

import com.ibm.streams.operator.StreamSchema;

import com.ibm.streams.operator.Type;

import com.ibm.streams.operator.StreamingData.Punctuation;

import com.ibm.streams.operator.StreamingInput;

import com.ibm.streams.operator.Tuple;

import com.ibm.streams.operator.model.InputPortSet;

import com.ibm.streams.operator.model.InputPortSet.WindowMode;

import com.ibm.streams.operator.model.InputPortSet.WindowPunctuationInputMode;

import com.ibm.streams.operator.model.InputPorts;

import com.ibm.streams.operator.model.Libraries;

import com.ibm.streams.operator.model.PrimitiveOperator;

/**

* Class for an operator that consumes tuples and does not produce an output stream.

* This pattern supports a number of input streams and no output streams.

* <P>

* The following event methods from the Operator interface can be called:

* </p>

* <ul>

* <li><code>initialize()</code> to perform operator initialization</li>

* <li>allPortsReady() notification indicates the operator's ports are ready to process and submit tuples</li>

* <li>process() handles a tuple arriving on an input port

* <li>processPunctuation() handles a punctuation mark arriving on an input port

* <li>shutdown() to shutdown the operator. A shutdown request may occur at any time,

* such as a request to stop a PE or cancel a job.

* Thus the shutdown() may occur while the operator is processing tuples, punctuation marks,

* or even during port ready notification.</li>

* </ul>

* <p>With the exception of operator initialization, all the other events may occur concurrently with each other,

* which lead to these methods being called concurrently by different threads.</p>

*/

@PrimitiveOperator(name="RedisSink", namespace="application",

description="Java Operator RedisSink")

@InputPorts({@InputPortSet(description="Port that ingests tuples", cardinality=1, optional=false, windowingMode=WindowMode.NonWindowed, windowPunctuationInputMode=WindowPunctuationInputMode.Oblivious), @InputPortSet(description="Optional input ports", optional=true, windowingMode=WindowMode.NonWindowed, windowPunctuationInputMode=WindowPunctuationInputMode.Oblivious)})

@Libraries({"impl/lib/jedis-2.8.0.jar","impl/lib/commons-pool2-2.4.2.jar"})//

public class RedisSink extends AbstractOperator {

protected JedisCluster jedisCluster;

protected HashMap<Integer,Object> map=new HashMap<Integer,Object>();

private String msisdn;

private String cnts;

private String appId;

private String minTime;

private void initSchema(StreamSchema ss){

// trace.log(TraceLevel.INFO, "");

for(int i=1;i<=ss.getAttributeCount();i++){

map.put(i, ss.getAttribute(i-1).getType().getMetaType());

//trace.info("log "+ss.getAttribute(i-1).getType().getMetaType());

//System.out.println("syso "+ss.getAttribute(i-1).getType().getMetaType());

}

}

private void setTupeValue(Tuple tuple) throws SQLException{

for(java.util.Iterator<Entry<Integer, Object>> it=map.entrySet().iterator();it.hasNext();){

Entry<Integer, Object> entry = it.next();

// System.out.println("----------------"+entry.getValue());

if(entry.getValue().equals(Type.MetaType.RSTRING)){

//pstmt.setString(entry.getKey(),tuple.getString(entry.getKey()-1));

if(entry.getKey().equals(1)){

minTime=tuple.getString(entry.getKey()-1);

}else if(entry.getKey().equals(2)){

msisdn=tuple.getString(entry.getKey()-1);

}else if(entry.getKey().equals(3)){

appId=tuple.getString(entry.getKey()-1);

}

}if(entry.getValue().equals(Type.MetaType.INT32)){

if(entry.getKey().equals(4)){

cnts=tuple.getString(entry.getKey()-1);

}

}

}

}

/**

* Initialize this operator. Called once before any tuples are processed.

* @param context OperatorContext for this operator.

* @throws Exception Operator failure, will cause the enclosing PE to terminate.

*/

@Override

public synchronized void initialize(OperatorContext context)

throws Exception {

// Must call super.initialize(context) to correctly setup an operator.

super.initialize(context);

//Logger.getLogger(this.getClass()).trace("Operator " + context.getName() + " initializing in PE: " + context.getPE().getPEId() + " in Job: " + context.getPE().getJobId() );

initSchema(getInput(0).getStreamSchema());

jedisCluster = RedisDataSource.getSource();

// TODO:

// If needed, insert code to establish connections or resources to communicate an external system or data store.

// The configuration information for this may come from parameters supplied to the operator invocation,

// or external configuration files or a combination of the two.

}

/**

* Notification that initialization is complete and all input and output ports

* are connected and ready to receive and submit tuples.

* @throws Exception Operator failure, will cause the enclosing PE to terminate.

*/

@Override

public synchronized void allPortsReady() throws Exception {

// This method is commonly used by source operators.

// Operators that process incoming tuples generally do not need this notification.

OperatorContext context = getOperatorContext();

Logger.getLogger(this.getClass()).trace("Operator " + context.getName() + " all ports are ready in PE: " + context.getPE().getPEId() + " in Job: " + context.getPE().getJobId() );

}

/**

* Process an incoming tuple that arrived on the specified port.

* @param stream Port the tuple is arriving on.

* @param tuple Object representing the incoming tuple.

* @throws Exception Operator failure, will cause the enclosing PE to terminate.

*/

@Override

public void process(StreamingInput<Tuple> stream, Tuple tuple)

throws Exception {

// TODO Insert code here to process the incoming tuple,

// typically sending tuple data to an external system or data store.

// String value = tuple.getString("AttributeName");

//String minTime;

//System.out.println("Takes[" + (System.currentTimeMillis() - tmp) + "] ms");

setTupeValue(tuple);

//System.out.println("minTime:"+minTime+"msisdn:"+msisdn+"appId:"+appId+"cnts:"+cnts);

//System.out.println(System.currentTimeMillis());

//long a=System.currentTimeMillis();

if(appId!=null&&cnts!=null){

jedisCluster.rpush(msisdn+","+minTime, appId+"|"+cnts);// With a Redis cluster, write through JedisCluster; using a plain Jedis object raises an error.

//long b=System.currentTimeMillis();

//System.out.println("rpush...."+(b-a));

jedisCluster.expire(msisdn+","+minTime, 3600);

}

//long c=System.currentTimeMillis();

//System.out.println("expire...."+(c-b));

}

/**

* Process an incoming punctuation that arrived on the specified port.

* @param stream Port the punctuation is arriving on.

* @param mark The punctuation mark

* @throws Exception Operator failure, will cause the enclosing PE to terminate.

*/

@Override

public void processPunctuation(StreamingInput<Tuple> stream,

Punctuation mark) throws Exception {

// TODO: If window punctuations are meaningful to the external system or data store,

// insert code here to process the incoming punctuation.

}

/**

* Shutdown this operator.

* @throws Exception Operator failure, will cause the enclosing PE to terminate.

*/

@Override

public synchronized void shutdown() throws Exception {

OperatorContext context = getOperatorContext();

Logger.getLogger(this.getClass()).trace("Operator " + context.getName() + " shutting down in PE: " + context.getPE().getPEId() + " in Job: " + context.getPE().getJobId() );

// TODO: If needed, close connections or release resources related to any external system or data store.

jedisCluster.close();

// Must call super.shutdown()

super.shutdown();

}

}