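A web crawler implemented in Python, with multithreading and a configurable crawl depth.

The crawled URLs and their keyword information are stored in a database (MongoDB).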
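The listing further down writes one document per crawled page from inside each worker thread. One possible way to take those writes off the crawl path is a single writer thread that drains a queue and flushes in batches. The sketch below is Python 3 (the listing itself is Python 2) and is an assumption, not the author's design: `BatchWriter`, `flush_fn` and `batch_size` are hypothetical names, and `flush_fn` stands in for whatever bulk call the driver offers (pymongo's `Collection.insert_many`, available from pymongo 3.0, would be one candidate).

```python
import queue
import threading


class BatchWriter(threading.Thread):
    """Drain a queue of documents and hand them to flush_fn in batches."""

    _DONE = object()  # sentinel that tells the writer to finish

    def __init__(self, flush_fn, batch_size=100):
        super().__init__()
        self.flush_fn = flush_fn      # hypothetical: e.g. collection.insert_many
        self.batch_size = batch_size
        self.docs = queue.Queue()     # thread-safe hand-off from the crawl threads

    def put(self, doc):
        """Called by crawl threads; returns without touching the database."""
        self.docs.put(doc)

    def close(self):
        """Ask the writer to flush what is left and wait for it to exit."""
        self.docs.put(self._DONE)
        self.join()

    def run(self):
        batch = []
        while True:
            doc = self.docs.get()
            if doc is self._DONE:
                break
            batch.append(doc)
            if len(batch) >= self.batch_size:
                self.flush_fn(batch)
                batch = []
        if batch:                     # flush the final, partially filled batch
            self.flush_fn(batch)
```

With this shape the crawl threads only call `put()`, and the main thread calls `close()` once all levels are done.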

Environment:

Ubuntu 14.04

Dependencies: Python 2, MongoDB, pymongo, chardet

To be optimized:

1. Database inserts

2. Encoding and decoding

3. Saving a full snapshot of each page

4. Deduplication
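For item 4, one common approach is to keep every URL that has been seen in a `set` guarded by a lock, and to normalize the URL lightly before the lookup so that trivial variants collapse into one entry. A minimal Python 3 sketch under those assumptions (the listing itself is Python 2): `SeenUrls`, `normalize` and `add_if_new` are illustrative names that do not appear in the listing, and the normalization rules chosen here (lower-cased scheme and host, dropped fragment) are only one possible choice.

```python
import threading
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Lower-case scheme and host, default the path to "/", drop the #fragment."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                       parts.path or "/", parts.query, ""))


class SeenUrls:
    """Thread-safe record of URLs that were already queued or visited."""

    def __init__(self):
        self._seen = set()
        self._lock = threading.Lock()

    def add_if_new(self, url):
        """Return True the first time a URL is offered, False afterwards."""
        key = normalize(url)
        with self._lock:  # check and insert as one atomic step
            if key in self._seen:
                return False
            self._seen.add(key)
            return True
```

A crawl thread would call `add_if_new(link)` and queue the link only when it returns `True`; a set lookup does not slow down as the history grows the way a list scan does.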

# -*- coding: utf-8 -*-
'''
author: Derry
date: 2016.1.19
'''
from HTMLParser import HTMLParser
import urllib
import time
import random
import os
import re
import Queue
import threading
import sys
import urllib2
import chardet
# MongoDB driver for Python
import pymongo

# URLs of the current level, waiting to be parsed. Queue is thread-safe.
CurLevelUrlQueue = Queue.Queue()
NextLevelUrlQueue = Queue.Queue()
# URLs that have already been parsed
HistoryUrlQueue = Queue.Queue()
TorrentQueue = Queue.Queue()
HistoryUrlMap = {}
HtmlEntryList = []
FailedUrlList = []

# Connect to MongoDB and return the collection that stores crawled pages
def get_collection(server):
    try:
        client = pymongo.MongoClient(host=server, port=27017)
    except Exception, e:
        print 'connect database error', e
        return None
    db = client['crawl']
    coll = db['htmlpage']
    print '## connect mongodb.....ok @%s' % (server)
    return coll

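# Download an image into ./img with curl. The URL is passed to the shell unescaped.
def saveImage(host, url):
    f_name = ''  # defined up front so the except branch can always print it
    try:
        splitPath = url.split('/')
        f_name = "%d_" % random.randint(1, 99999) + splitPath.pop()
        res = re.match('^http', url)
        if res is None:
            url = 'http://' + host + "/" + url
        cmd = 'curl -o ./img/%s %s' % (f_name, url)
        os.system(cmd)
    except Exception, e:
        print "[Error]couldn't download: %s:%s" % (f_name, e)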

# Return the host part of an http:// or https:// URL, or "" for anything else
def getHost(url):
    res = re.match(r'^https?://([^/]+)', url)
    if res is None:
        return ""
    return res.group(1)

def enqueueByList(q, items):
    for elem in items:
        q.put(elem)

# HtmlEntry stores information about a crawled page, such as its title and keywords
class HtmlEntry():
    def __init__(self, depth, url):
        self.depth = depth
        self.url = url
        self.encoding = ""
        self.title = ""
        self.keywords = ""
        self.description = ""

    def setKeywords(self, keywords):
        self.keywords = keywords

    def setDescription(self, description):
        self.description = description

    def setTitle(self, title):
        self.title = title

    def getUrl(self):
        return self.url

    def getKeywords(self):
        return self.keywords

    def getDescription(self):
        return self.description

    def getTitle(self):
        return self.title

class MyParser(HTMLParser):
    def __init__(self, url, depth):
        HTMLParser.__init__(self)
        self.url_list = []
        self.pure_url_list = []
        self.url = url
        self.host = getHost(url)
        self.depth = depth  # current depth
        self.processing = 0
        self.title_flag = 0
        self.encoding = 'utf-8'
        self.title = ""
        self.keywords = ""
        self.description = ""
        self.html_entry = HtmlEntry(depth, url)

    def checkVisitedStatus(self, url):
        length = len(url)
        if HistoryUrlMap.has_key(length) and url in HistoryUrlMap[length]:
            #print 'url = %s already visited.'%(url)
            return True
        return False

    def format(self, str):
        code = chardet.detect(str)
        if code.get('encoding') is not None:
            # Convert from the detected encoding to self.encoding
            return str.decode(code.get('encoding')).encode(self.encoding)
        else:
            return str

    def handle_data(self, data):
        #if self.processing == 1:
        #    print 'data=',data
        if self.title_flag == 1:
            self.html_entry.setTitle(self.format(data))

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            self.processing = 1
            for key, value in attrs:
                if key == 'href':
                    #print 'dep:%d,url:%s'%(self.depth,value)
                    # Skip empty, very short and javascript links
                    if value is None or len(value) < 10 or value.find('javascript') != -1:
                        continue
                    if value.find('123456bt') != -1:
                        # print 'torrent =',value
                        TorrentQueue.put(value)
                    # Queue the link only if it has not been visited yet
                    if False == self.checkVisitedStatus(value):
                        self.url_list.append(value)
        if tag == 'img' and attrs:
            for key, value in attrs:
                if key == 'src':
                    #print 'img url=',value
                    #saveImage(self.host,value)
                    pass
                    #urllib.urlretrieve(value,genFileName())
        if tag == "title":
            self.title_flag = 1
        if tag == 'meta' and attrs:
            for key, value in attrs:
                if key == 'name' and (value == 'Description' or value == "description"):
                    for k, v in attrs:
                        if k == 'content':
                            #print 'desc',v
                            self.html_entry.setDescription(self.format(v))
                if key == 'name' and (value == 'Keywords' or value == 'keywords'):
                    for k, v in attrs:
                        if k == 'content':
                            self.html_entry.setKeywords(self.format(v))

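    def handle_endtag(self, tag):
        if tag == 'a':
            self.processing = 0
        if tag == 'title':
            self.title_flag = 0

    def getUrlList(self):
        # Rebuild the list on every call so repeated calls do not duplicate entries
        self.pure_url_list = []
        for url in self.url_list:
            # Skip links that contain the URL of the current page
            if url.find(self.url) == -1:
                res = re.match('^http', url)
                if res is None:
                    # Relative link: prefix it with the host of the current page
                    url = 'http://' + self.host + "/" + url
                self.pure_url_list.append(url)
        return self.pure_url_list

    def getHtmlEntry(self):
        return self.html_entry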

class CrawlThread(threading.Thread):
    def __init__(self, num, depth):
        threading.Thread.__init__(self)
        self.num = num
        self.depth = depth  # current depth
        self.total = CurLevelUrlQueue.qsize()

    def run(self):
        while True:
            # Another thread may empty the queue between a size check and get(),
            # so rely on Queue.Empty to detect the end of this level
            try:
                cur_url = CurLevelUrlQueue.get(block=False)
            except Queue.Empty:
                break
            #print '%d/%d torrent:%d'%(CurLevelUrlQueue.qsize(),self.total,TorrentQueue.qsize())
            #print '[thread %d]visiting [%s]' %(self.num,cur_url)
            HistoryUrlQueue.put(cur_url)
            url_len = len(cur_url)
            # Bucket visited URLs by string length to speed up lookups
            if not HistoryUrlMap.has_key(url_len):
                HistoryUrlMap[url_len] = []
            HistoryUrlMap[url_len].append(cur_url)
            try:
                req_header = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
                              'Accept': 'text/html;q=0.9,*/*;q=0.8',
                              'Connection': 'close'
                              }
                req = urllib2.Request(cur_url, None, req_header)
                resp = urllib2.urlopen(req, None, 5)  # 5-second timeout
                #page = resp.read().decode('gb2312').encode('utf-8')
                page = resp.read()
                resp.close()
            except Exception, e:
                print 'url open error', e
                FailedUrlList.append(cur_url)
                continue
            parser = MyParser(cur_url, self.depth)
            #print page
            try:
                parser.feed(page)
            except Exception, e:
                #print 'feed error',e
                continue
            entry = parser.getHtmlEntry()
            HtmlEntryList.append(entry)

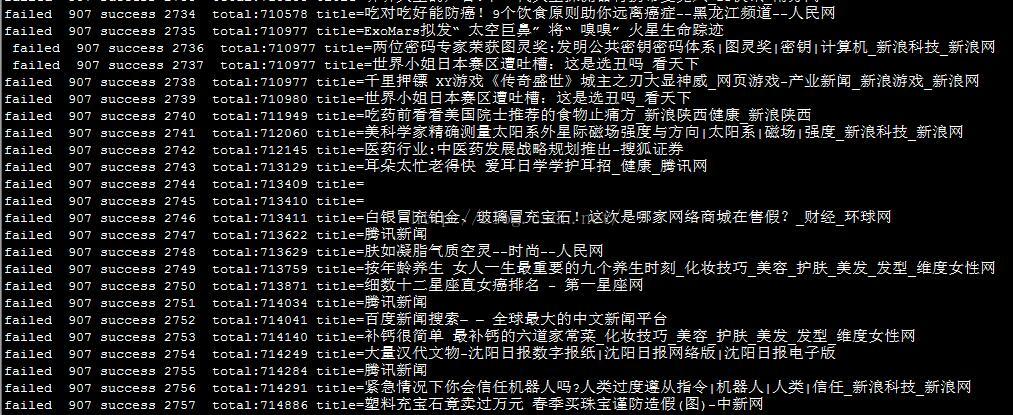

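            print 'failed %4d success %d total:%5d title=%s' % (len(FailedUrlList), len(HtmlEntryList), HistoryUrlQueue.qsize(), parser.html_entry.getTitle())
            # Save to the database. This could be handed to a dedicated thread (to be optimized); a check for duplicate records still needs to be added.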

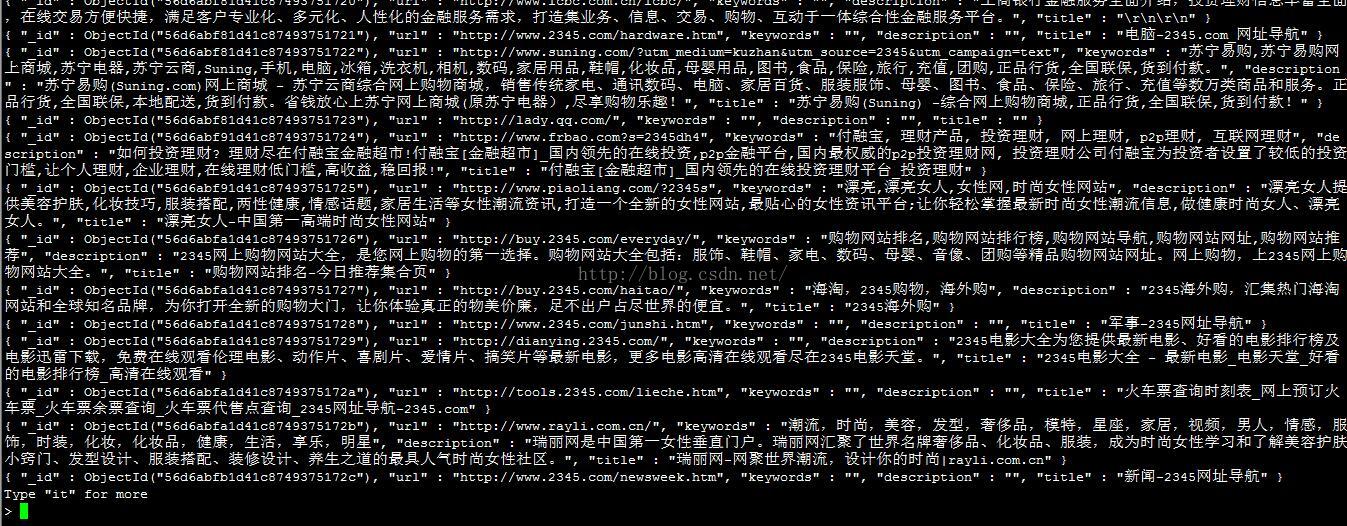

            html_entry = {"url": entry.getUrl(), "title": entry.getTitle(), "keywords": entry.getKeywords(), "description": entry.getDescription()}
            g_collection.insert(html_entry)
            # Put the extracted links into the queue for the next level
            next_urls = parser.getUrlList()
            enqueueByList(NextLevelUrlQueue, next_urls)
            enqueueByList(HistoryUrlQueue, next_urls)

class MyCrawl:
    def __init__(self, urls, depth, thread_num):
        self.depth = depth
        self.url_list = urls
        self.thread_num = thread_num
        self.threads = []
        self.dep = 0
        enqueueByList(CurLevelUrlQueue, self.url_list)

    def updateQueue(self):
        #while TorrentQueue.qsize() > 0:
        #    CurLevelUrlQueue.put(TorrentQueue.get(block=False))
        fd = open('urls_%d.txt' % (self.dep), 'w')
        while NextLevelUrlQueue.qsize() > 0:
            url = NextLevelUrlQueue.get(block=False)
            CurLevelUrlQueue.put(url)
            fd.write(url)
            fd.write('\n')
        fd.close()
        print 'update queue success, next queue size [%d],cur queue size[%d]' % (NextLevelUrlQueue.qsize(), CurLevelUrlQueue.qsize())

    def wait_allcomplete(self):
        for item in self.threads:
            if item.isAlive():
                item.join()

    def process(self):
        start = time.time()
        self.dep = 0
        while self.dep < self.depth:
            if self.dep != 0:
                self.updateQueue()
            for i in range(self.thread_num):
                thread = CrawlThread(i, self.dep)
                thread.start()
                self.threads.append(thread)
            # The main thread waits until the current level is finished
            self.wait_allcomplete()
            # Drop the finished threads before starting the next level
            self.threads = []
            self.dep = self.dep + 1
            print 'self.dep=', self.dep
        end = time.time()
        print 'time:%ds,urls=%d' % (end - start, HistoryUrlQueue.qsize())

reload(sys)
sys.setdefaultencoding('utf-8')
url_list = ["http://www.2345.com/"]
# Connect to the database
g_collection = get_collection("localhost")
if g_collection is None:
    sys.exit(1)
# Start 100 threads and crawl to a depth of 3 levels
crawl = MyCrawl(url_list, 3, 100)
crawl.process()
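
The listing turns relative links into absolute ones by concatenating 'http://', the host and the link. The standard library can resolve a link against the URL of the page it was found on, which also covers https pages and `../` paths. A small Python 3 sketch of that idea (in Python 2 the same functions live in the `urlparse` module); `absolutize` is a hypothetical helper name, not something the listing defines.

```python
from urllib.parse import urljoin, urlsplit


def absolutize(page_url, href):
    """Resolve href against the page it was found on.

    Returns None for links that do not end up as http or https URLs,
    for example javascript: or mailto: links.
    """
    absolute = urljoin(page_url, href.strip())
    if urlsplit(absolute).scheme not in ("http", "https"):
        return None
    return absolute
```

Inside a parser, each `href` would go through `absolutize(self.url, value)` before being queued, and `None` results would be skipped.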