Environment Setup

- Components and versions

- operator-sdk v1.22.0

- go 1.20.0 linux/amd64

- git 1.8.3.1

- k8s 1.18.5

- docker 20.10.5

- Prerequisites

- Install Git

yum install git

- Install Docker

yum install docker-ce

- Install Go (download the archive from the official website)

tar -C /usr/local/ -xvf go1.20.linux-amd64.tar.gz

- Environment configuration

# Add the Go settings to /etc/profile

export GOROOT=/usr/local/go

export GOPATH=/data/gopath # any path you choose

export PATH=$GOROOT/bin:$PATH

export GO111MODULE=on # optional

export GOPROXY=https://goproxy.cn

# Then reload the profile in the current shell

source /etc/profile

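> - `GO111MODULE=off`: module support is disabled; go looks for packages in GOPATH and the vendor folder.

> - `GO111MODULE=on`: module support is enabled; go ignores GOPATH when resolving imports and downloads dependencies according to `go.mod`.

> - `GO111MODULE=auto`: module support is enabled when the current directory or one of its parents contains a `go.mod` file (before Go 1.13 this applied only outside `$GOPATH/src`). Since Go 1.16 an unset variable behaves like `on`, which is why the export above is optional.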
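The three settings above can be condensed into a small decision function. This is only a sketch of the rule, assuming Go 1.16 or later (where an unset variable behaves like `on`); the function name `moduleAware` is hypothetical and is not part of the Go toolchain.

```go
package main

import "fmt"

// moduleAware reports whether the go command would run in module mode.
// setting is the value of GO111MODULE ("" means unset); hasGoMod says whether
// the working directory or one of its parents contains a go.mod file.
// Assumption: Go 1.16 or later. The real go command rejects other values;
// this sketch does not model that and treats them like "on".
func moduleAware(setting string, hasGoMod bool) bool {
	switch setting {
	case "off":
		return false
	case "auto":
		return hasGoMod
	default: // "on" or unset
		return true
	}
}

func main() {
	for _, s := range []string{"off", "auto", "on", ""} {
		fmt.Printf("GO111MODULE=%q: with go.mod -> %v, without go.mod -> %v\n",
			s, moduleAware(s, true), moduleAware(s, false))
	}
}
```

For an operator-sdk project, which always has a `go.mod` in its root, only `off` would disable module mode.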

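- Install operator-sdk (download the prebuilt binary directly). Note that the URL below pins v1.7.2 while the component list above names v1.22.0; change the release tag in the URL to the version you intend to use.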

curl -LO https://github.com/operator-framework/operator-sdk/releases/download/v1.7.2/operator-sdk_linux_amd64

chmod +x operator-sdk_linux_amd64

mv operator-sdk_linux_amd64 /usr/local/bin/operator-sdk

Create the Project

- Create a project directory and initialize it with operator-sdk init

mkdir rds-operator

cd rds-operator

operator-sdk init --domain=example.com --repo=paas.cvicse.com/rds/app

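- Create the API

*(Note: older versions such as v1.1.0 also require the extra flag --make=false, otherwise the command fails. With the version used here, the following command is sufficient.)*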

operator-sdk create api --group rds --version v1 --kind Rds --resource=true --controller=true

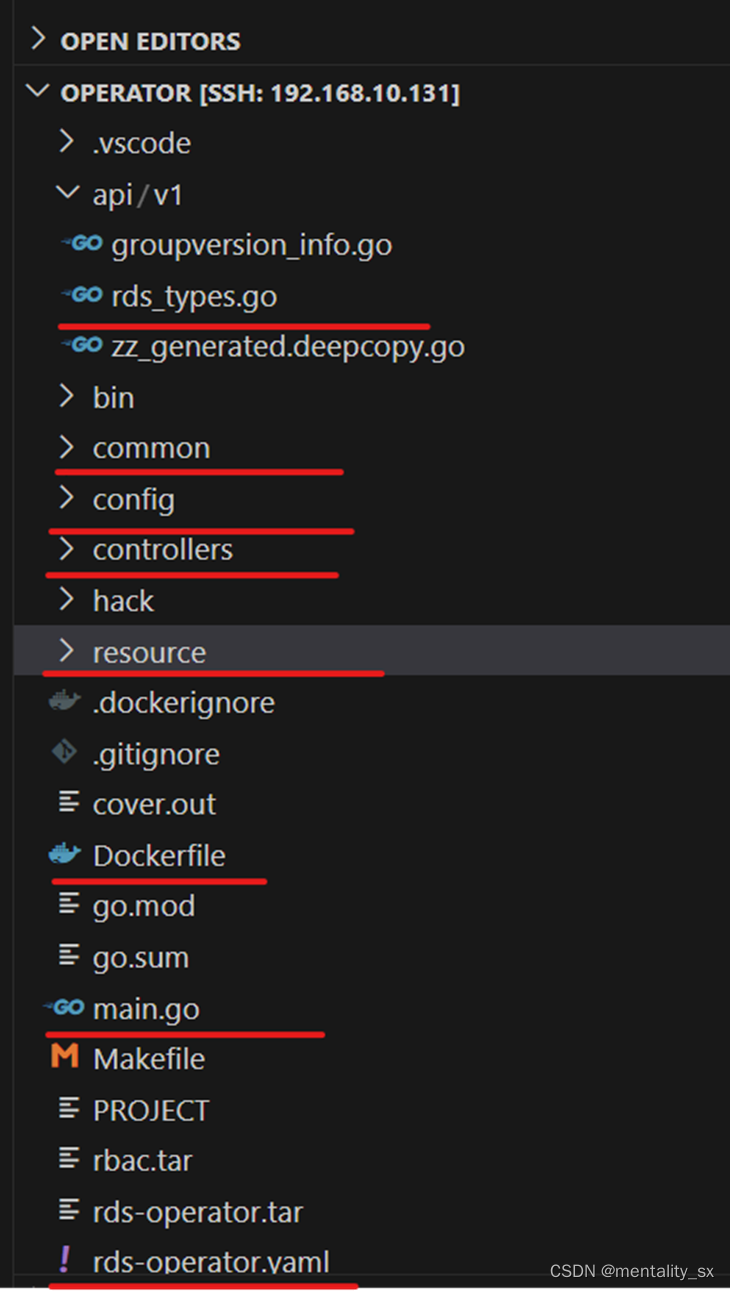

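- Modify the code (the parts marked in red in the screenshot need to be replaced)

After the changes, run the following command to regenerate the zz_generated.deepcopy.go file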

make generate
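To make clear what `make generate` produces, here is a hand-written sketch of the kind of deep-copy methods that end up in zz_generated.deepcopy.go. The `RdsSpec` fields (`Replicas`, `Args`) are hypothetical and chosen only for illustration; the real generated file covers whatever fields your API types declare.

```go
package main

import "fmt"

// RdsSpec is a stand-in for the spec type in api/v1.
// The fields are hypothetical; they are not taken from the original project.
type RdsSpec struct {
	Replicas int32
	Args     []string
}

// DeepCopyInto copies the receiver into out. The slice is reallocated so the
// copy does not share a backing array with the original.
func (in *RdsSpec) DeepCopyInto(out *RdsSpec) {
	*out = *in
	if in.Args != nil {
		out.Args = make([]string, len(in.Args))
		copy(out.Args, in.Args)
	}
}

// DeepCopy returns a new RdsSpec that is independent of the receiver.
func (in *RdsSpec) DeepCopy() *RdsSpec {
	if in == nil {
		return nil
	}
	out := new(RdsSpec)
	in.DeepCopyInto(out)
	return out
}

func main() {
	orig := &RdsSpec{Replicas: 3, Args: []string{"--port", "6379"}}
	cp := orig.DeepCopy()
	cp.Args[1] = "6380" // changes the copy only
	fmt.Println(orig.Args[1], cp.Args[1])
}
```

Because these methods must match the struct fields, the file has to be regenerated every time a field is added, removed, or retyped.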

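Running the Operator

There are two ways to run it.

- Run from local source

- With the source on a k8s node, run the following command in the project root (this mode is mainly for development and testing)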

make generate && make manifests && make install && make run


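Notes:

- The machine must have kubectl installed, and the kubeconfig file ~/.kube/config must exist (with cluster-admin privileges).

- When testing is finished, press Ctrl+C to stop the program, then run make uninstall to remove the CRD definitions.

- If no dependencies have changed, you can run the code directly with go run main.go.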

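make generate: generates code containing the DeepCopy, DeepCopyInto, and DeepCopyObject method implementations

make manifests: generates the WebhookConfiguration, ClusterRole, and CustomResourceDefinition objects

make install: installs the CRDs into the K8s cluster specified in ~/.kube/config

make run: runs the controller locally

make uninstall: removes the CRDs from the K8s cluster specified in ~/.kube/config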
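The prerequisites from the notes above (kubectl on PATH and a kubeconfig at ~/.kube/config) can be checked before running make install. The following is a minimal sketch using only the Go standard library; the helper names `defaultKubeconfig` and `missingPrereqs` are hypothetical, and the check does not verify that the credentials actually carry cluster-admin rights.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
	"path/filepath"
)

// defaultKubeconfig returns the conventional kubeconfig path under home.
func defaultKubeconfig(home string) string {
	return filepath.Join(home, ".kube", "config")
}

// missingPrereqs lists the prerequisites that are not satisfied.
// An empty result means kubectl was found and the kubeconfig file exists.
func missingPrereqs(home string) []string {
	var missing []string
	if _, err := exec.LookPath("kubectl"); err != nil {
		missing = append(missing, "kubectl not found on PATH")
	}
	cfg := defaultKubeconfig(home)
	if info, err := os.Stat(cfg); err != nil || info.IsDir() {
		missing = append(missing, "kubeconfig not found at "+cfg)
	}
	return missing
}

func main() {
	home, err := os.UserHomeDir()
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot determine home directory:", err)
		os.Exit(1)
	}
	problems := missingPrereqs(home)
	for _, p := range problems {
		fmt.Println("missing:", p)
	}
	if len(problems) == 0 {
		fmt.Println("prerequisites look fine")
	}
}
```

Whether the account has sufficient privileges still has to be confirmed against the cluster itself.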

- Run inside the k8s cluster

Edit the Dockerfile (located in the project root) as follows

# Build the manager binary

FROM golang:1.15 as builder

WORKDIR /workspace

# Copy the Go Modules manifests

COPY go.mod go.mod

COPY go.sum go.sum

# cache deps before building and copying source so that we don't need to re-download as much

# and so that source changes don't invalidate our downloaded layer

ENV GOPROXY https://goproxy.cn,direct

RUN go mod download

# Copy the go source

COPY main.go main.go

COPY api/ api/

COPY controllers/ controllers/

COPY resource/ resource/

# Build

RUN CGO_ENABLED=0 GOOS=linux GOARCH=amd64 GO111MODULE=on go build -a -o manager main.go

# Use distroless as minimal base image to package the manager binary

# Refer to https://github.com/GoogleContainerTools/distroless for more details


# FROM gcr.io/distroless/static:nonroot

FROM kubeimages/distroless-static:latest

WORKDIR /

COPY --from=builder /workspace/manager .

USER 65532:65532

ENTRYPOINT ["/manager"]

- Added the environment variable ENV GOPROXY https://goproxy.cn,direct

- Added COPY resource/ resource/

- Changed the final-stage base image to FROM kubeimages/distroless-static:latest

Build the image with the following command

make docker-build IMG=***.***.**.**:5000/redis-operator:v1.0

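Run operator-controller-manager

Once running, operator-controller-manager starts two containers: kube-rbac-proxy and manager.

The manager container uses the image built in step 2 (the make docker-build command above).

The kube-rbac-proxy container needs a different image (only the image field changes). File: <project root>/config/default/manager_auth_proxy_patch.yaml, with the following content: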

# This patch inject a sidecar container which is a HTTP proxy for the
# controller manager, it performs RBAC authorization against the Kubernetes API using SubjectAccessReviews.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: controller-manager
  namespace: system
spec:
  template:
    spec:
      containers:
      - name: kube-rbac-proxy
        image: 124.223.82.79:5000/kube-rbac-proxy:v0.11.0 # This image must be changed: the default, gcr.io/kubebuilder/kube-rbac-proxy:v0.8.0, cannot be pulled; pull it from Docker Hub instead
        args:
        - "--secure-listen-address=0.0.0.0:8443"
        - "--upstream=http://127.0.0.1:8080/"
        - "--logtostderr=true"
        - "--v=10"
        ports:
        - containerPort: 8443
          name: https
      - name: manager
        args:
        - "--health-probe-bind-address=:8081"
        - "--metrics-bind-address=127.0.0.1:8080"
        - "--leader-elect"

kube-rbac-proxy image source: https://hub.docker.com/r/kubesphere/kube-rbac-proxy/tags

Create operator-controller-manager with the following command

make deploy IMG=**.**.**.**:5000/redis-operator:v1.0

- Create a ClusterRoleBinding

If you create a custom resource right after operator-controller-manager is deployed, the controller log shows permission errors such as:

E0210 05:45:33.131287 1 reflector.go:138] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:167: Failed to watch *v1.StatefulSet: failed to list *v1.StatefulSet: statefulsets.apps is forbidden: User "system:serviceaccount:redis-operator-system:redis-operator-controller-manager" cannot list resource "statefulsets" in API group "apps" at the cluster scope
E0210 05:45:34.271962 1 reflector.go:138] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:167: Failed to watch *v1.StatefulSet: failed to list *v1.StatefulSet: statefulsets.apps is forbidden: User "system:serviceaccount:redis-operator-system:redis-operator-controller-manager" cannot list resource "statefulsets" in API group "apps" at the cluster scope
E0210 05:45:36.971944 1 reflector.go:138] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:167: Failed to watch *v1.StatefulSet: failed to list *v1.StatefulSet: statefulsets.apps is forbidden: User "system:serviceaccount:redis-operator-system:redis-operator-controller-manager" cannot list resource "statefulsets" in API group "apps" at the cluster scope
E0210 05:45:40.383080 1 reflector.go:138] pkg/mod/k8s.io/[email protected]/tools/cache/reflector.go:167: Failed to watch *v1.StatefulSet: failed to list *v1.StatefulSet: statefulsets.apps is forbidden: User "system:serviceaccount:redis-operator-system:redis-operator-controller-manager" cannot list resource "statefulsets" in API group "apps" at the cluster scope

- Option 1: bind the controller-manager service account directly to the cluster-admin ClusterRole

Create a file named cluster-admin.yaml with the following content:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cluster-admin-rolebinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: redis-operator-controller-manager
  namespace: redis-operator-system

Apply it with:

kubectl apply -f cluster-admin.yaml

- Option 2: modify role.yaml in the rbac directory
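For option 2, note that config/rbac/role.yaml is generated by make manifests from kubebuilder RBAC marker comments in the controller source, so manual edits to that file are overwritten on the next run. A less invasive route than cluster-admin is therefore to add a marker and regenerate. The sketch below shows the marker for the StatefulSet permission named in the error log; the verb list is an assumption and should be trimmed to what the controller really needs, and `RdsReconciler` here is a dependency-free stand-in for the scaffolded reconciler (typically found in controllers/rds_controller.go) so that the snippet compiles on its own.

```go
package main

import "fmt"

// RdsReconciler is a stand-in for the scaffolded reconciler type. The real
// one embeds a controller-runtime client; it is omitted here so that this
// file builds without external modules.
type RdsReconciler struct{}

// The marker below is what controller-gen reads when make manifests runs.
// Assumption: the controller manages StatefulSets and needs all listed verbs.
//+kubebuilder:rbac:groups=apps,resources=statefulsets,verbs=get;list;watch;create;update;patch;delete

// Reconcile is a stub with a simplified signature; only the marker comment
// above matters for RBAC generation.
func (r *RdsReconciler) Reconcile() error {
	return nil
}

func main() {
	r := &RdsReconciler{}
	fmt.Println("reconcile error:", r.Reconcile())
}
```

After adding the marker to the real controller file, run make manifests and then make deploy again so the regenerated ClusterRole reaches the cluster.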

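- Create the custom resource

File path: <project root>/config/samples/rds_v1_rds.yaml

Run the following command in the project root to create it:

kubectl apply -f ./config/samples/rds_v1_rds.yaml

- Remove the CRDs

make uninstall

- Remove the controller-manager

make undeploy

make undeploy: removes the controller from the K8s cluster specified in ~/.kube/config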