This article describes a cross-cluster canary release approach: Submariner connects the Pods and Services of different clusters, and Traefik controls the traffic weights.

Step 1: Prepare two clusters

Prepare two machines and deploy one K3s cluster on each, with the following network configuration:

| Node | OS | Cluster | Pod CIDR | Service CIDR |
|---|---|---|---|---|
| node-a | CentOS 7 | cluster-a | 10.44.0.0/16 | 10.45.0.0/16 |
| node-b | CentOS 7 | cluster-b | 10.144.0.0/16 | 10.145.0.0/16 |
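The CIDRs in the table are deliberately disjoint: unless its Globalnet feature is enabled, Submariner expects the Pod and Service CIDRs of connected clusters not to overlap. The following is a minimal sketch of how such a check could be scripted before installing anything. The function names `ip_to_int`, `cidr_range` and `cidrs_overlap` are hypothetical helpers, not part of K3s or Submariner.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking that two IPv4 CIDRs do not overlap.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address of a CIDR as two integers.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local base mask start end
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  start=$(( base & mask ))
  end=$(( start | (~mask & 0xFFFFFFFF) ))
  echo "$start $end"
}

# Succeed (exit status 0) if the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<<"$(cidr_range "$1")"
  read -r s2 e2 <<<"$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

For example, `cidrs_overlap 10.44.0.0/16 10.144.0.0/16 && echo "overlap"` prints nothing for the values in the table, because the two ranges are disjoint.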

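On node-a, run the following commands to initialize the Kubernetes cluster:

    # Disable the firewall so that cross-cluster traffic is not blocked
    systemctl disable firewalld --now

    # Install K3s with the Pod and Service CIDRs chosen for cluster-a
    POD_CIDR=10.44.0.0/16
    SERVICE_CIDR=10.45.0.0/16
    curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="--cluster-cidr $POD_CIDR --service-cidr $SERVICE_CIDR" sh -s -

    # Install yq, used below to edit the kubeconfig
    curl -Lo /usr/bin/yq https://github.com/mikefarah/yq/releases/download/v4.14.2/yq_linux_amd64
    chmod +x /usr/bin/yq

    # Copy the kubeconfig, point it at the node IP, and rename the context to cluster-a
    cp /etc/rancher/k3s/k3s.yaml kubeconfig.cluster-a
    export IP=$(hostname -I | awk '{print $1}')
    yq -i eval \
      '.clusters[].cluster.server |= sub("127.0.0.1", env(IP)) | .contexts[].name = "cluster-a" | .current-context = "cluster-a"' \
      kubeconfig.cluster-a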

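On node-b, run the following commands to initialize the Kubernetes cluster:

    # Disable the firewall so that cross-cluster traffic is not blocked
    systemctl disable firewalld --now

    # Install K3s with the Pod and Service CIDRs chosen for cluster-b
    POD_CIDR=10.144.0.0/16
    SERVICE_CIDR=10.145.0.0/16
    curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="--cluster-cidr $POD_CIDR --service-cidr $SERVICE_CIDR" sh -s -

    # Install yq, used below to edit the kubeconfig
    curl -Lo /usr/bin/yq https://github.com/mikefarah/yq/releases/download/v4.14.2/yq_linux_amd64
    chmod +x /usr/bin/yq

    # Copy the kubeconfig, point it at the node IP, and rename the context to cluster-b
    cp /etc/rancher/k3s/k3s.yaml kubeconfig.cluster-b
    export IP=$(hostname -I | awk '{print $1}')
    yq -i eval \
      '.clusters[].cluster.server |= sub("127.0.0.1", env(IP)) | .contexts[].name = "cluster-b" | .current-context = "cluster-b"' \
      kubeconfig.cluster-b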

On node-b, copy the kubeconfig.cluster-b file to node-a for later use:

    scp /root/kubeconfig.cluster-b root@<node-a ip>:/root/kubeconfig.cluster-b

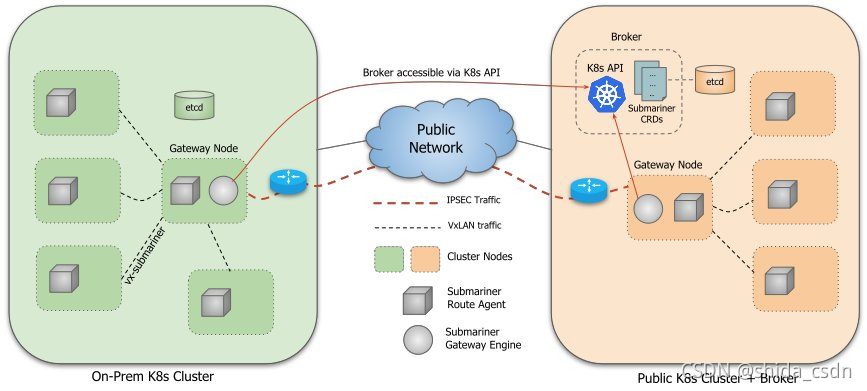

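Step 2: Install Submariner

Submariner requires a broker to be deployed in one cluster (called the hub cluster here); all other clusters connect to the hub cluster to exchange data. In this experiment, cluster-a is the hub cluster, and both cluster-a and cluster-b join the broker on cluster-a.

On node-a, run the following commands to install subctl and deploy the Submariner broker:

    # Install the subctl CLI and add it to PATH, now and for future shells
    curl -Ls https://get.submariner.io | bash
    export PATH=$PATH:~/.local/bin
    echo 'export PATH=$PATH:~/.local/bin' >> ~/.bashrc

    # Deploy the broker to cluster-a; this writes broker-info.subm to the current directory
    subctl deploy-broker --kubeconfig kubeconfig.cluster-a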

Join cluster-a to the broker:

    subctl join --kubeconfig kubeconfig.cluster-a broker-info.subm --clusterid cluster-a --natt=false

Join cluster-b to the broker:

    subctl join --kubeconfig kubeconfig.cluster-b broker-info.subm --clusterid cluster-b --natt=false

Submariner's gateway component only runs on nodes labeled submariner.io/gateway=true, so node-a and node-b each need this label:

    kubectl --kubeconfig kubeconfig.cluster-a label node <node-a hostname> submariner.io/gateway=true
    kubectl --kubeconfig kubeconfig.cluster-b label node <node-b hostname> submariner.io/gateway=true

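Submariner is now installed. Check the Submariner components running in cluster-a and cluster-b; a state similar to the following is normal:

Step 3: Verify that Pods and Services in cluster-a and cluster-b can reach each other directly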

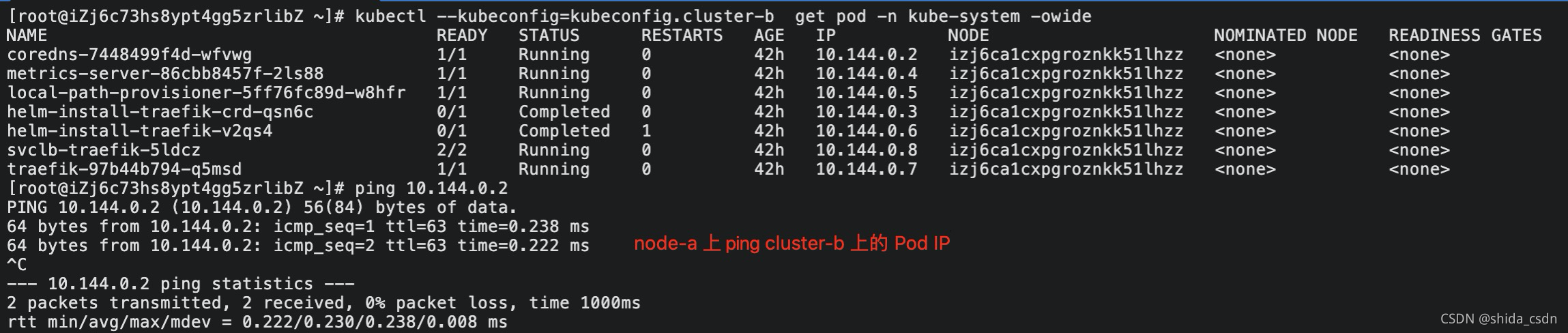

验证 cluster 间 Pod IP 可直通:

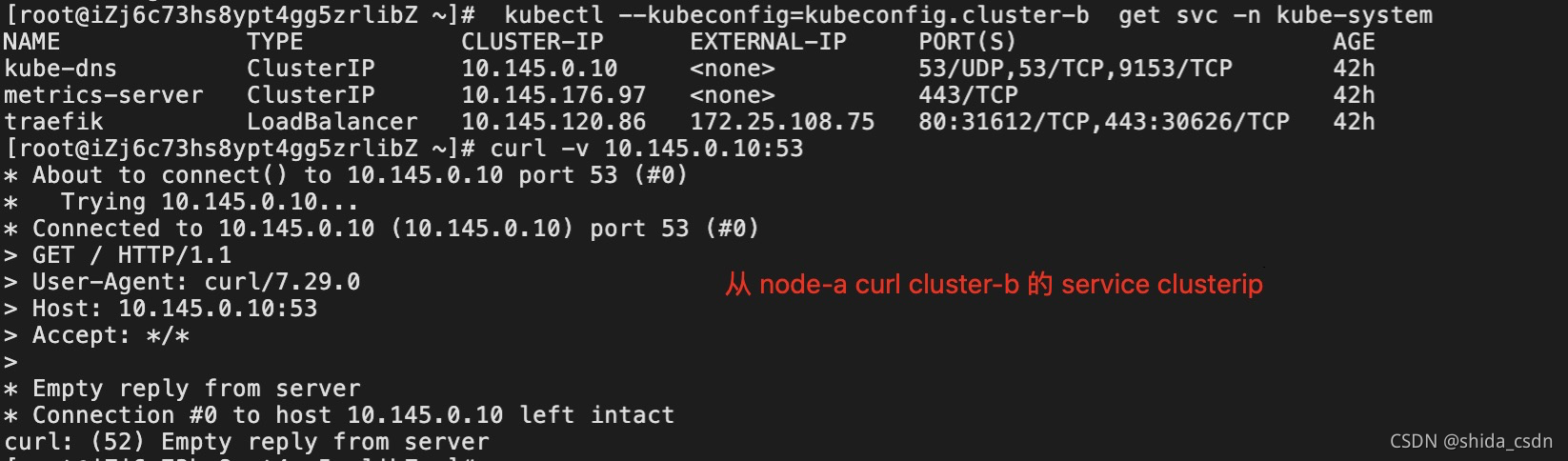

验证 cluster 间 Service ClusterIP 可直通:
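A check of this kind could be sketched as follows. This is only an illustration, not the procedure shown in the screenshots. The helper names are hypothetical, the `curlimages/curl` image is assumed to be pullable, and the target is assumed to be a workload serving HTTP on port 80 in the remote cluster (for instance the nginx-green Deployment and Service from the next section).

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the names are illustrative, not part of Submariner.

# Print the ClusterIP of a Service in the given cluster.
svc_cluster_ip() {
  local kubeconfig=$1 namespace=$2 name=$3
  kubectl --kubeconfig "$kubeconfig" -n "$namespace" get service "$name" \
    -o jsonpath='{.spec.clusterIP}'
}

# Print the IP of the first Pod that matches a label selector.
first_pod_ip() {
  local kubeconfig=$1 namespace=$2 selector=$3
  kubectl --kubeconfig "$kubeconfig" -n "$namespace" get pods -l "$selector" \
    -o jsonpath='{.items[0].status.podIP}'
}

# Start a throwaway Pod in the source cluster and request http://<target>,
# printing the HTTP status code returned by the remote endpoint.
probe_from_cluster() {
  local kubeconfig=$1 target=$2
  kubectl --kubeconfig "$kubeconfig" run "probe-$RANDOM" --rm -i --restart=Never \
    --image=curlimages/curl --command -- \
    curl -s -m 5 -o /dev/null -w '%{http_code}\n' "http://$target"
}
```

A typical use would be `probe_from_cluster kubeconfig.cluster-a "$(svc_cluster_ip kubeconfig.cluster-b default nginx-green)"`, which sends a request from a Pod in cluster-a to a ClusterIP that belongs to cluster-b.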

Step 4: Deploy the Blue and Green versions of the application

Prepare a file named nginx-blue.yaml with the following content:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-blue
      name: nginx-blue
      namespace: default
    spec:
      selector:
        matchLabels:
          app: nginx-blue
      template:
        metadata:
          labels:
            app: nginx-blue
        spec:
          containers:
          - image: shidaqiu/nginx:blue
            imagePullPolicy: Always
            name: nginx
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: nginx-blue
      name: nginx-blue
      namespace: default
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: nginx-blue
      type: ClusterIP

Deploy nginx-blue.yaml to cluster-a:

    kubectl --kubeconfig=kubeconfig.cluster-a apply -f nginx-blue.yaml

Prepare a file named nginx-green.yaml with the following content:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-green
      name: nginx-green
      namespace: default
    spec:
      selector:
        matchLabels:
          app: nginx-green
      template:
        metadata:
          labels:
            app: nginx-green
        spec:
          containers:
          - image: shidaqiu/nginx:green
            imagePullPolicy: Always
            name: nginx
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: nginx-green
      name: nginx-green
      namespace: default
    spec:
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: nginx-green
      type: ClusterIP

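Deploy nginx-green.yaml to cluster-b:

    kubectl --kubeconfig=kubeconfig.cluster-b apply -f nginx-green.yaml

Step 5: Expose the service across clusters

Export the nginx-green Service from cluster-b so that it can be discovered from cluster-a:

    subctl export service --kubeconfig kubeconfig.cluster-b --namespace default nginx-green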
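For readers who prefer declarative manifests: in recent Submariner releases, `subctl export service` is understood to create a `ServiceExport` object from the Kubernetes Multi-Cluster Services API in the Service's namespace. The sketch below assumes that behaviour and the API group `multicluster.x-k8s.io/v1alpha1`; older releases may use a different group. `render_service_export` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a ServiceExport manifest for an existing Service.
# Assumes the Multi-Cluster Services API group multicluster.x-k8s.io/v1alpha1.
render_service_export() {
  local name=$1 namespace=${2:-default}
  cat <<EOF
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  name: ${name}
  namespace: ${namespace}
EOF
}
```

Piping the output to kubectl, as in `render_service_export nginx-green default | kubectl --kubeconfig kubeconfig.cluster-b apply -f -`, would then be roughly equivalent to the subctl command above. Keeping the manifest in version control is the main reason to prefer this form.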

Step 6: Deploy the Traefik canary routing

Prepare a file named route.yaml with the following content. The ExternalName Service makes the exported nginx-green Service of cluster-b addressable inside cluster-a under its clusterset.local name, and the TraefikService splits traffic between nginx-blue and nginx-green by weight:

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-green
      namespace: default
    spec:
      externalName: nginx-green.default.svc.clusterset.local
      ports:
      - name: http
        port: 80
        protocol: TCP
        targetPort: 80
      type: ExternalName
    ---
    apiVersion: traefik.containo.us/v1alpha1
    kind: TraefikService
    metadata:
      name: nginx-blue-green-tsvc
    spec:
      weighted:
        services:
        - name: nginx-blue
          weight: 8 # traffic weight
          port: 80
          kind: Service # optional; Service is the default
        - name: nginx-green
          weight: 2
          port: 80
    ---
    apiVersion: traefik.containo.us/v1alpha1
    kind: IngressRoute
    metadata:
      name: nginx-traefik-ingress
      namespace: default
    spec:
      entryPoints:
      - web
      routes:
      - match: Host(`nginx-blue-green.tech.com`)
        kind: Rule
        services:
        - name: nginx-blue-green-tsvc
          kind: TraefikService

Deploy route.yaml to cluster-a:

    kubectl --kubeconfig kubeconfig.cluster-a apply -f route.yaml
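A canary rollout usually shifts the weights step by step rather than fixing them at 8:2. The following is a minimal sketch of how the TraefikService from route.yaml could be regenerated with different weights. `render_weighted_tsvc` is a hypothetical helper name; the resource names and API version are copied from route.yaml.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the TraefikService from route.yaml
# with caller-supplied weights for the blue and green backends.
render_weighted_tsvc() {
  local blue_weight=$1 green_weight=$2
  cat <<EOF
apiVersion: traefik.containo.us/v1alpha1
kind: TraefikService
metadata:
  name: nginx-blue-green-tsvc
spec:
  weighted:
    services:
    - name: nginx-blue
      weight: ${blue_weight}
      port: 80
    - name: nginx-green
      weight: ${green_weight}
      port: 80
EOF
}
```

For instance, `render_weighted_tsvc 5 5 | kubectl --kubeconfig kubeconfig.cluster-a apply -f -` would move the split to an even 50/50, and `render_weighted_tsvc 0 10` would send all traffic to the green version in cluster-b.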

On node-a, add an entry to /etc/hosts so that the domain nginx-blue-green.tech.com resolves to node-a's own IP:

    echo "<node-a ip> nginx-blue-green.tech.com" >> /etc/hosts

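Step 7: Verify that traffic is balanced between cluster-a and cluster-b according to the weights

On node-a, run curl nginx-blue-green.tech.com repeatedly. The returned page switches between VERSION ONE and VERSION TWO, at a ratio of roughly 8:2.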

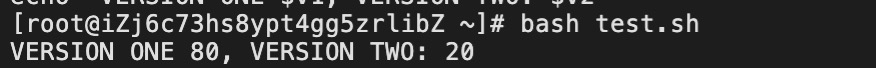

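Prepare a simple test script that sends 100 requests and counts how many are answered by each version:

    #!/bin/bash
    v1=0
    v2=0
    for ((i=1; i<=100; i++))
    do
      res=$(curl -s nginx-blue-green.tech.com)
      # Responses containing "ONE" come from the blue version; everything else is counted as version two
      if echo "$res" | grep -q ONE; then
        v1=$((v1 + 1))
      else
        v2=$((v2 + 1))
      fi
    done
    echo "VERSION ONE: $v1, VERSION TWO: $v2"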

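With the weights above, the two counts should come out at roughly 80 and 20, which confirms that traffic is split according to the configured ratio.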
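To turn this visual check into a pass/fail result, the counts can be compared against the share implied by the weights. The following is a minimal sketch; `split_within_tolerance` is a hypothetical helper, and the tolerance is an arbitrary number of percentage points chosen by the caller.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if an observed request count is within
# `tolerance` percentage points of the share implied by the weights.
# Arguments: observed count, total requests, backend weight, sum of weights, tolerance.
split_within_tolerance() {
  local observed=$1 total=$2 weight=$3 weight_sum=$4 tolerance=$5
  local observed_pct=$(( observed * 100 / total ))
  local expected_pct=$(( weight * 100 / weight_sum ))
  local diff=$(( observed_pct - expected_pct ))
  # Take the absolute value of the difference
  (( diff < 0 )) && diff=$(( -diff ))
  (( diff <= tolerance ))
}
```

Appended to the test script, a line such as `split_within_tolerance "$v1" 100 8 10 5 || echo "split is off"` would flag runs in which the blue version received less than 75 or more than 85 of the 100 requests.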