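Program purpose: generate raw data that follows the quadratic function y = ax^2 + b (here a = 1 and b = -0.5, with Gaussian noise added). Then build the simplest possible neural network, with just one input layer, one hidden layer and one output layer. TensorFlow learns the weights and biases of the hidden and output layers, and we check whether the loss keeps decreasing as the number of training steps grows.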
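Written out, the model below is a one-hidden-layer network. It uses 20 ReLU hidden units and is trained on N = 300 samples, as in the code. Training minimizes the mean squared error. This is a sketch of the math the code expresses. The symbols $W_1, b_1, W_2, b_2, \varepsilon_i$ are names introduced here for illustration.

$$
y_i = x_i^2 - 0.5 + \varepsilon_i, \qquad \varepsilon_i \sim \mathcal{N}(0,\, 0.05^2)
$$

$$
\hat{y}_i = \mathrm{ReLU}(x_i W_1 + b_1)\, W_2 + b_2, \qquad W_1 \in \mathbb{R}^{1\times 20},\; b_1 \in \mathbb{R}^{1\times 20},\; W_2 \in \mathbb{R}^{20\times 1},\; b_2 \in \mathbb{R}
$$

$$
L = \frac{1}{N}\sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2
$$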

The code is as follows:

```python
# -*- coding:utf-8 -*-
# Written for the TensorFlow 1.x graph API (placeholders and sessions).
# On TensorFlow 2.x, replace the import below with:
#   import tensorflow.compat.v1 as tf
#   tf.disable_v2_behavior()
import tensorflow as tf
import numpy as np

# Build raw data satisfying y = ax^2 + b (here a = 1, b = -0.5), plus Gaussian noise
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]  # shape (300, 1)
noise = np.random.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

# Placeholders for inputs and targets; None allows any number of samples
xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

# Helper that adds one fully connected layer: activation(inputs * W + b)
def add_layer(inputs, in_size, out_size, activation_function=None):
    weights = tf.Variable(tf.random_normal([in_size, out_size]))
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    Wx_plus_b = tf.matmul(inputs, weights) + biases
    if activation_function is None:
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs

# Hidden layer: 1 input -> 20 ReLU units; output layer: 20 units -> 1, linear
h1 = add_layer(xs, 1, 20, activation_function=tf.nn.relu)
prediction = add_layer(h1, 20, 1, activation_function=None)

# Mean squared error between targets and predictions
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=[1]))
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

# Train the model
init = tf.global_variables_initializer()
with tf.Session() as sess:
    sess.run(init)
    for i in range(1000):
        sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
        # Print the loss every 50 steps
        if i % 50 == 0:
            print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))
```
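On each step, `GradientDescentOptimizer(0.1).minimize(loss)` computes the loss gradients and moves every weight and bias against them. The sketch below shows what that involves. It uses only NumPy and trains the same 1-20-1 ReLU network with hand-written backpropagation. It is an illustration under stated assumptions, not TensorFlow's implementation:
- The function `train_numpy` and its parameters are hypothetical.
- Seeded randomness uses NumPy's `default_rng`, which assumes NumPy 1.17 or later.

```python
import numpy as np


def train_numpy(steps=1000, lr=0.1, hidden=20, seed=0):
    """Hypothetical helper: full-batch gradient descent on a 1-20-1 ReLU network
    fitted to y = x^2 - 0.5 + noise. Returns the loss logged every 50 steps."""
    rng = np.random.default_rng(seed)
    # Same data as the TensorFlow program: 300 points in [-1, 1]
    x = np.linspace(-1, 1, 300)[:, np.newaxis]
    y = np.square(x) - 0.5 + rng.normal(0, 0.05, x.shape)
    n = x.shape[0]

    # Initialise like add_layer: standard-normal weights, biases of 0.1
    W1 = rng.normal(size=(1, hidden))
    b1 = np.full((1, hidden), 0.1)
    W2 = rng.normal(size=(hidden, 1))
    b2 = np.full((1, 1), 0.1)

    def forward():
        z1 = x @ W1 + b1          # hidden pre-activation, shape (n, hidden)
        h1 = np.maximum(z1, 0.0)  # ReLU
        pred = h1 @ W2 + b2       # linear output, shape (n, 1)
        return z1, h1, pred

    losses = []
    for i in range(steps):
        z1, h1, pred = forward()
        # Gradients of loss = mean((pred - y)^2), derived by hand
        d_pred = 2.0 * (pred - y) / n
        dW2 = h1.T @ d_pred
        db2 = d_pred.sum(axis=0, keepdims=True)
        d_z1 = (d_pred @ W2.T) * (z1 > 0)  # ReLU passes gradient only where z1 > 0
        dW1 = x.T @ d_z1
        db1 = d_z1.sum(axis=0, keepdims=True)
        # Gradient descent step (in place, so forward() sees the new values)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
        # Like the TensorFlow loop, log the loss after the update every 50 steps
        if i % 50 == 0:
            _, _, pred = forward()
            losses.append(float(np.mean(np.square(y - pred))))
    return losses


for value in train_numpy():
    print(value)
```

The seed is fixed, so repeated calls with the same `seed` return identical losses. The TensorFlow program, by contrast, draws fresh noise and fresh initial weights on every run.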

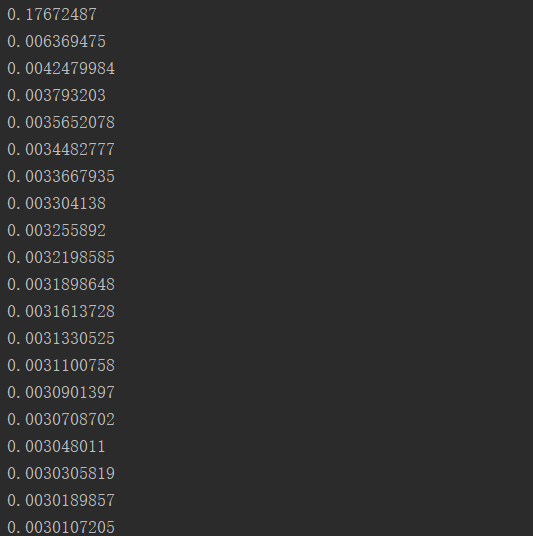

结果如下(每次运行结果不一定会相同):