Deep learning paper: FastFlow: Unsupervised Anomaly Detection and Localization via 2D Normalizing Flows, with a PyTorch implementation

FastFlow: Unsupervised Anomaly Detection and Localization via 2D Normalizing Flows

PDF: https://arxiv.org/pdf/2111.07677v2.pdf

PyTorch code: https://github.com/shanglianlm0525/CvPytorch

PyTorch code: https://github.com/shanglianlm0525/PyTorch-Networks

1 Overview

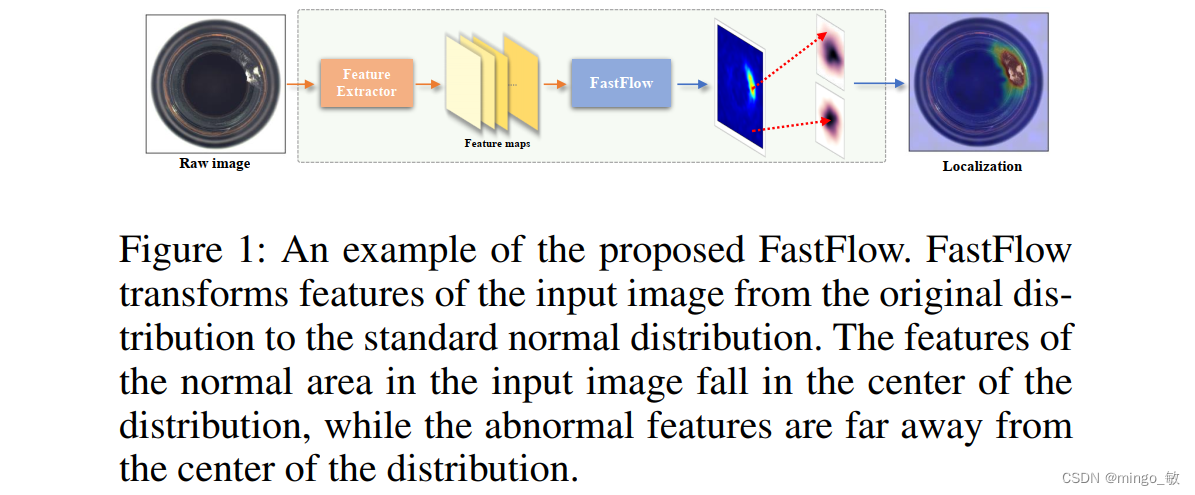

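Most existing representation-based methods extract features of normal images with a deep convolutional neural network and characterize the corresponding distribution with non-parametric distribution estimation methods. The anomaly score is then computed by measuring the distance between the features of a test image and the estimated distribution. However, current methods cannot effectively map image features to a tractable base distribution, and they ignore the relationship between local and global features that is essential for identifying anomalies. To address this, the paper proposes FastFlow, implemented with 2D normalizing flows, and uses it as the probability distribution estimator. FastFlow targets two problems of the original one-dimensional normalizing flow models: they destroy the inherent spatial relationships of a two-dimensional image, which limits the capacity of the flow model, and their inference complexity is high, which limits their practical value.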

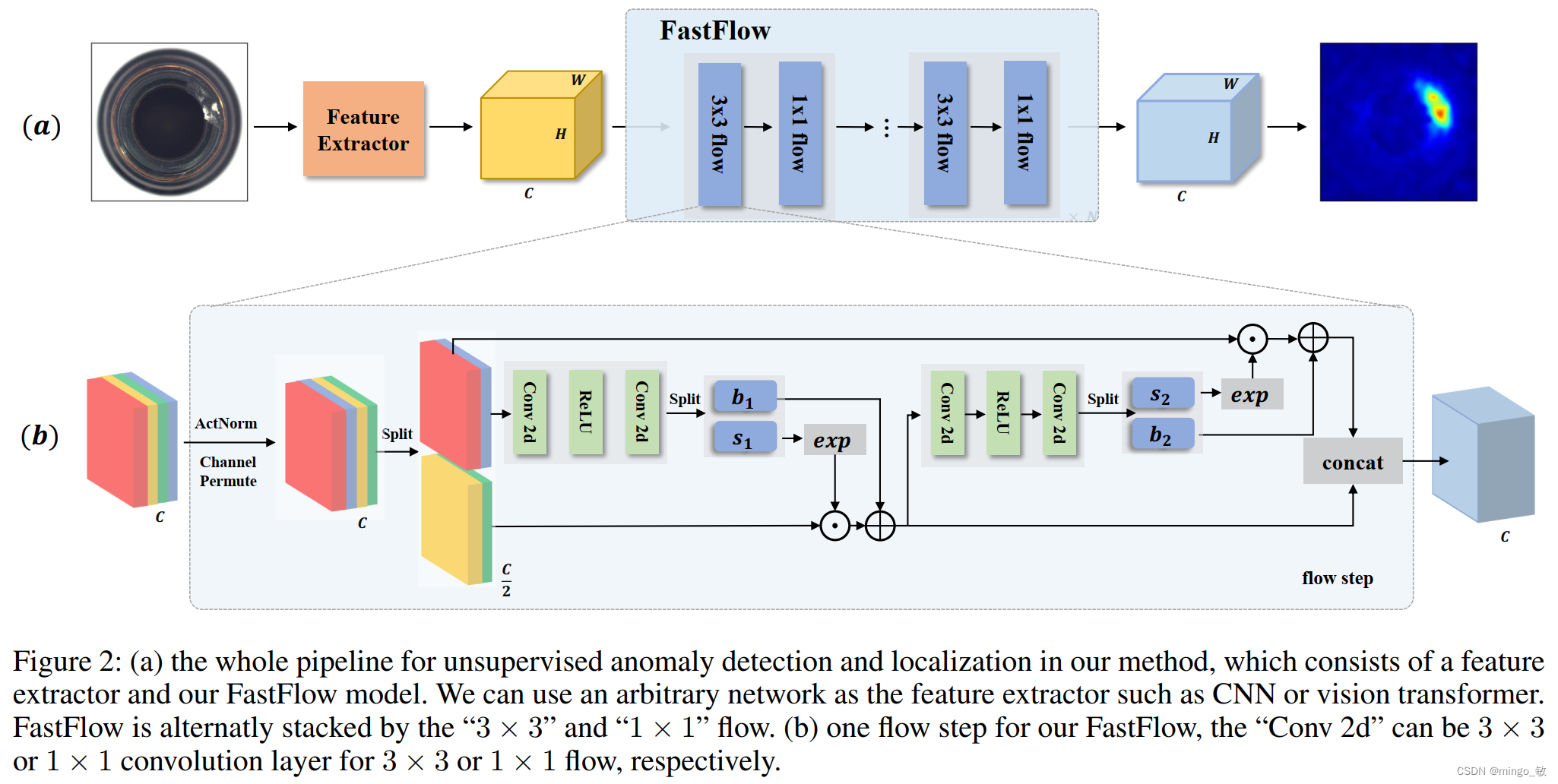

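FastFlow is a probability distribution estimator based on 2D normalizing flows. It can be used as a plug-in module with any deep feature extractor (such as ResNet or a vision transformer) for unsupervised anomaly detection and localization. During training, FastFlow learns to transform the input visual features into a tractable distribution; during inference, it uses the resulting likelihood to identify anomalies.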
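To make the training step concrete, here is a minimal sketch of the usual maximum-likelihood objective for a normalizing flow with a standard normal base distribution. It relies on the change-of-variables rule, log p(x) = log p(z) + log|det J|. The function name `flow_nll_loss` and the tensor shapes are assumptions for illustration, not taken from the paper or the linked repositories.

```python
import torch


def flow_nll_loss(z: torch.Tensor, log_det: torch.Tensor) -> torch.Tensor:
    """Negative log-likelihood under a standard normal base distribution.

    z:       latent tensor of shape (B, C, H, W) produced by the flow
    log_det: log|det J| of the flow for each sample, shape (B,)

    The constant 0.5 * log(2 * pi) per dimension is dropped because it
    does not change the gradients.
    """
    energy = 0.5 * z.pow(2).sum(dim=(1, 2, 3))  # -log N(z; 0, I) up to a constant
    return (energy - log_det).mean()


# Toy check: all-ones latents and a zero log-determinant.
z = torch.ones(2, 3, 4, 4)
log_det = torch.zeros(2)
loss = flow_nll_loss(z, log_det)
print(loss.item())  # 0.5 * (3 * 4 * 4) = 24.0
```

Minimizing this loss on features of normal images pushes their latents toward the origin of the base distribution, so features that end up far from it at test time receive a low likelihood.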

2 FastFlow

The structure of FastFlow is as follows:

2-1 Feature Extractor

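The feature extractor obtains representative features from the input image using ResNet or a vision transformer. An important challenge in anomaly detection is capturing global relationships in order to distinguish anomalous regions from other local parts. When a vision transformer (ViT) is used as the feature extractor, the features of a single specific layer are sufficient, because ViT is better at capturing the relationship between local patches and global features. For ResNet, the features from the last layer of each of the first three blocks are used directly, and each is fed into its own FastFlow model, giving three flow models in total.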
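The multi-scale ResNet case can be sketched as follows. `ToyBackbone` is a hypothetical, randomly initialized stand-in with ResNet18-like strides and channel widths; it is not the pretrained extractor used in the paper. The sketch only shows how three feature maps of different resolution are collected and normalized before each goes to its own flow, assuming a 256×256 input.

```python
import torch
import torch.nn as nn


def stage(c_in: int, c_out: int, stride: int) -> nn.Sequential:
    """One simplified stage: a single 3x3 conv instead of real residual blocks."""
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, kernel_size=3, stride=stride, padding=1, bias=False),
        nn.BatchNorm2d(c_out),
        nn.ReLU(inplace=True),
    )


class ToyBackbone(nn.Module):
    """Hypothetical stand-in for a ResNet-style extractor (illustration only)."""

    def __init__(self) -> None:
        super().__init__()
        self.stem = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
        )
        self.layer1 = stage(64, 64, stride=1)    # overall stride 4
        self.layer2 = stage(64, 128, stride=2)   # overall stride 8
        self.layer3 = stage(128, 256, stride=2)  # overall stride 16

    def forward(self, x: torch.Tensor) -> list:
        x = self.stem(x)
        f1 = self.layer1(x)
        f2 = self.layer2(f1)
        f3 = self.layer3(f2)
        return [f1, f2, f3]  # one feature map per scale


backbone = ToyBackbone().eval()
# One LayerNorm per scale, sized for a 256x256 input.
norms = nn.ModuleList(
    [nn.LayerNorm([64, 64, 64]), nn.LayerNorm([128, 32, 32]), nn.LayerNorm([256, 16, 16])]
)

with torch.no_grad():
    feats = backbone(torch.randn(2, 3, 256, 256))
    feats = [norm(f) for norm, f in zip(norms, feats)]

for f in feats:
    print(tuple(f.shape))
```

Each of the three normalized maps would then be passed to a separate flow whose channel count matches that scale.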

2-2 2D Flow Model

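The input feature X is treated as a sample from a two-dimensional data distribution, and a 2D normalizing flow transforms it to a standard normal distribution, yielding a latent tensor Z of size H×W×D. The 2D normalizing flow is an invertible, efficient, and parallelizable transformation built from RealNVP-style affine coupling layers. RealNVP uses a masking scheme that splits the input into two parts: one part is left unchanged, while the other is scaled and shifted by an affine transformation whose parameters are computed from the first part. The two parts then swap roles, and this process is repeated several times to produce the final output.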
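A single coupling step of this kind can be sketched as below. This is a simplified illustration under stated assumptions, not the authors' implementation: the class name `AffineCoupling2d` is hypothetical, the log-scale is bounded with `tanh` for numerical stability, and the two halves are swapped by a plain concatenation rather than a learned or random permutation. The subnet uses 3×3 convolutions so that the spatial layout of the feature map is preserved.

```python
import torch
import torch.nn as nn


class AffineCoupling2d(nn.Module):
    """One RealNVP-style affine coupling step acting on (B, C, H, W) features."""

    def __init__(self, channels: int) -> None:
        super().__init__()
        assert channels % 2 == 0, "channels must be even to split into two halves"
        half = channels // 2
        # Conv subnet: predicts a log-scale and a shift for the other half.
        self.subnet = nn.Sequential(
            nn.Conv2d(half, half, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv2d(half, 2 * half, kernel_size=3, padding=1),
        )

    def _scale_shift(self, x_a: torch.Tensor):
        log_s, t = self.subnet(x_a).chunk(2, dim=1)
        return torch.tanh(log_s), t  # keep the log-scale in (-1, 1)

    def forward(self, x: torch.Tensor):
        x_a, x_b = x.chunk(2, dim=1)           # x_a passes through unchanged
        log_s, t = self._scale_shift(x_a)
        y_b = x_b * torch.exp(log_s) + t       # affine transform of the other half
        log_det = log_s.flatten(1).sum(dim=1)  # log|det J| per sample
        # Swap the halves so the next step transforms the part left unchanged here.
        return torch.cat([y_b, x_a], dim=1), log_det

    def inverse(self, y: torch.Tensor) -> torch.Tensor:
        y_b, x_a = y.chunk(2, dim=1)
        log_s, t = self._scale_shift(x_a)
        x_b = (y_b - t) * torch.exp(-log_s)
        return torch.cat([x_a, x_b], dim=1)


torch.manual_seed(0)
layer = AffineCoupling2d(64)
x = torch.randn(2, 64, 16, 16)
with torch.no_grad():
    y, log_det = layer(x)
    x_rec = layer.inverse(y)
print(y.shape, log_det.shape, (x - x_rec).abs().max().item())
```

Because the unchanged half is enough to recompute the scale and shift, the inverse needs no matrix inversion, and the Jacobian is triangular, so its log-determinant is just the sum of the log-scales.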

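At inference, the features of an anomalous image should be out of distribution and therefore have a lower likelihood than those of normal images, so the likelihood can serve as the anomaly score. Specifically, the two-dimensional probabilities of each channel are summed to obtain the final probability map, which is upsampled to the input image resolution with bilinear interpolation.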
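The scoring step can be sketched as follows. As an assumption for illustration, the sketch sums per-channel log-densities under a standard normal and uses the negative log-likelihood as the score, so higher values mean more anomalous; the function name `anomaly_map` and the averaging across scales are also illustrative choices rather than details confirmed by the paper.

```python
import math

import torch
import torch.nn.functional as F


def anomaly_map(latents, image_size):
    """Turn per-scale flow latents into one pixel-level anomaly map.

    latents:    list of tensors, each of shape (B, C_i, H_i, W_i)
    image_size: (H, W) of the input image
    Returns a tensor of shape (B, 1, H, W); higher means more anomalous.
    """
    maps = []
    for z in latents:
        # Log-density of N(0, I) at every location, summed over channels.
        log_prob = (-0.5 * z.pow(2) - 0.5 * math.log(2 * math.pi)).sum(dim=1, keepdim=True)
        nll = -log_prob
        # Bilinear upsampling to the input resolution.
        maps.append(F.interpolate(nll, size=image_size, mode="bilinear", align_corners=False))
    return torch.stack(maps, dim=0).mean(dim=0)


# Toy example: one out-of-distribution location at (5, 5) on the 16x16 scale.
z1 = torch.zeros(1, 4, 16, 16)
z1[0, :, 5, 5] = 3.0
z2 = torch.zeros(1, 8, 8, 8)

score = anomaly_map([z1, z2], (64, 64))
row, col = divmod(score.flatten().argmax().item(), 64)
print(score.shape, row // 4, col // 4)
```

In the toy example the highest score falls inside the 4×4 pixel block that corresponds to the perturbed latent location, which is the behaviour needed for localization.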

The printed structure of the PyTorch model is as follows:

    FastFlow(
      (feature_extractor): FeatureListNet(
        (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
        (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (act1): ReLU(inplace=True)
        (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
        (layer1): Sequential(
          (0): BasicBlock(
            (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act1): ReLU(inplace=True)
            (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act2): ReLU(inplace=True)
          )
          (1): BasicBlock(
            (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act1): ReLU(inplace=True)
            (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act2): ReLU(inplace=True)
          )
        )
        (layer2): Sequential(
          (0): BasicBlock(
            (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
            (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act1): ReLU(inplace=True)
            (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act2): ReLU(inplace=True)
            (downsample): Sequential(
              (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
              (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            )
          )
          (1): BasicBlock(
            (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act1): ReLU(inplace=True)
            (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act2): ReLU(inplace=True)
          )
        )
        (layer3): Sequential(
          (0): BasicBlock(
            (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
            (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act1): ReLU(inplace=True)
            (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act2): ReLU(inplace=True)
            (downsample): Sequential(
              (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
              (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            )
          )
          (1): BasicBlock(
            (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act1): ReLU(inplace=True)
            (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
            (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (act2): ReLU(inplace=True)
          )
        )
      )
      (norms): ModuleList(
        (0): LayerNorm((64, 64, 64), eps=1e-05, elementwise_affine=True)
        (1): LayerNorm((128, 32, 32), eps=1e-05, elementwise_affine=True)
        (2): LayerNorm((256, 16, 16), eps=1e-05, elementwise_affine=True)
      )
      (nf_flows): ModuleList(
        (0): SequenceINN(
          (module_list): ModuleList(
            (0): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (1): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (2): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (3): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (4): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (5): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (6): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (7): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
          )
        )
        (1): SequenceINN(
          (module_list): ModuleList(
            (0): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (1): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (2): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (3): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (4): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (5): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (6): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (7): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
          )
        )
        (2): SequenceINN(
          (module_list): ModuleList(
            (0): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (1): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (2): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (3): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (4): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (5): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (6): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
            (7): AllInOneBlock(
              (softplus): Softplus(beta=0.5, threshold=20)
              (subnet): Sequential(
                (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=same)
                (1): ReLU()
                (2): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=same)
              )
            )
          )
        )
      )
    )

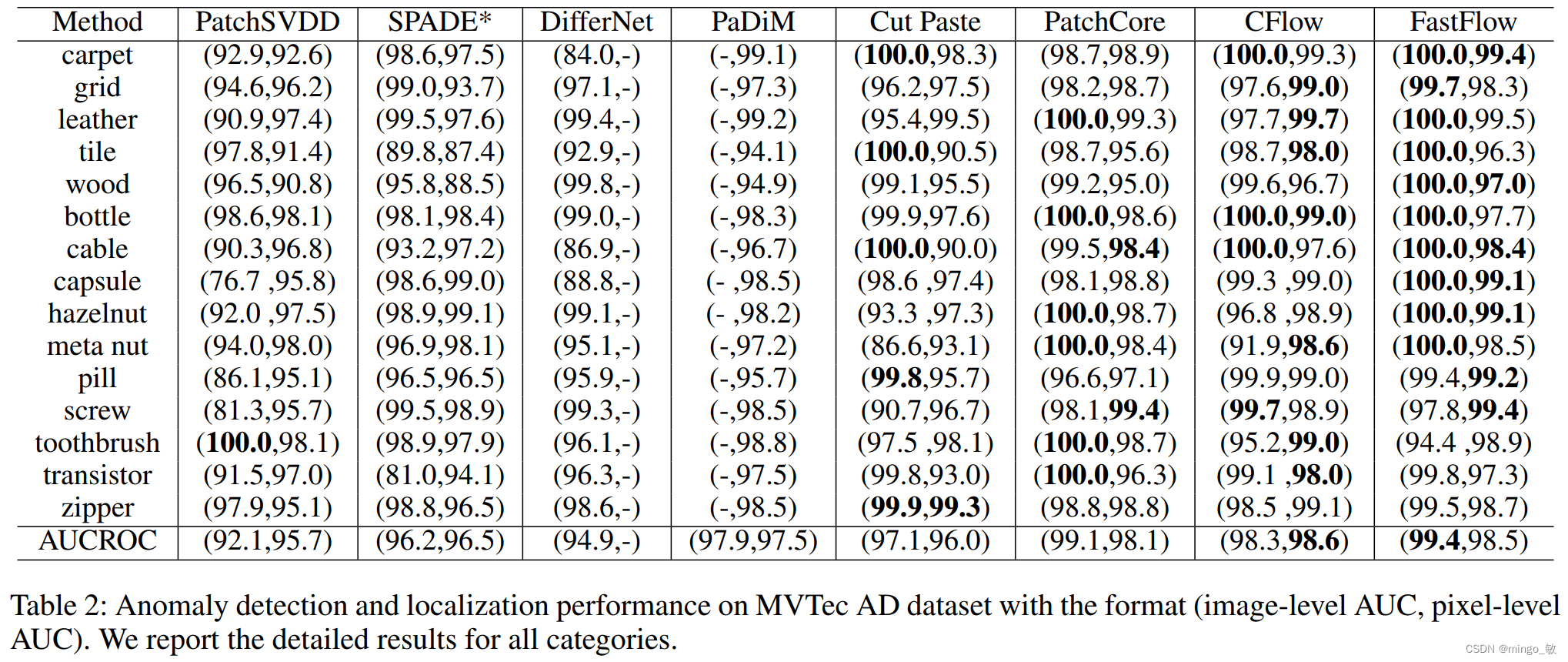

3 Experiments

Quantitative Results

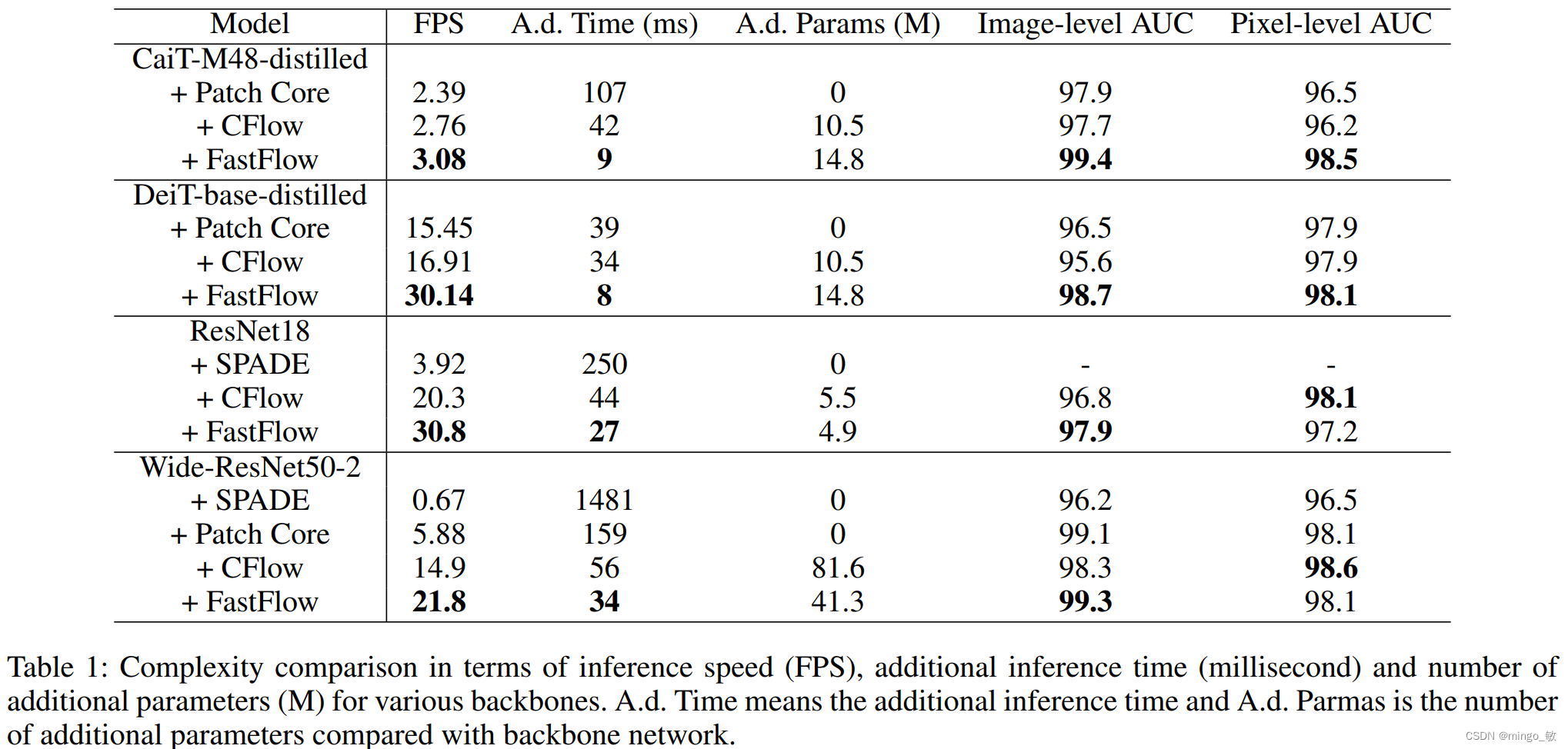

Complexity Analysis