To get familiar with neural networks, this post builds simple, scaled-down versions of several large architectures.

1. Train.py

Note: to train a different network, only the name of the network passed in needs to change.
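Train.py itself is not shown in this post, so the following is only a sketch of one way "change the network name" could work: a lookup from a name to a factory function. The registry keys and `build_model` are hypothetical. The factory names match the functions defined later in this post, but here they are stand-ins that return strings so the snippet runs without PyTorch.

```python
# Hypothetical sketch: pick a network by name.
# The four factories below are placeholders for the real functions
# (VGGNet, resnet, mobilenetv1_small, InceptionNetSmall) defined later.

def VGGNet():
    return "VGGbase instance"

def resnet():
    return "ResNet instance"

def mobilenetv1_small():
    return "mobilenet instance"

def InceptionNetSmall():
    return "InceptionNet instance"

# Assumed registry keys; any naming scheme would do.
MODEL_FACTORIES = {
    "vgg": VGGNet,
    "resnet": resnet,
    "mobilenet": mobilenetv1_small,
    "inception": InceptionNetSmall,
}

def build_model(name):
    """Return a new model for the given registry key."""
    if name not in MODEL_FACTORIES:
        raise ValueError("unknown network name: {!r}".format(name))
    return MODEL_FACTORIES[name]()

net = build_model("resnet")
print(net)
```

With a lookup like this, the rest of a training script can stay the same while only the string passed to `build_model` changes.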

2. Using the CIFAR-10 dataset

First, unpack the dataset.

# Convert the CIFAR-10 batch files downloaded from the official site into images,
# saved under the newly created Train and Test folders

import glob
import os
import pickle

import cv2
import numpy as np


# Load one batch file. Returns a dict (with bytes keys) holding the raw image data and the labels.
def unpickle(file):
    with open(file, 'rb') as fo:
        batch = pickle.load(fo, encoding='bytes')
    return batch


label_name = ["airplane",
              "automobile",
              "bird",
              "cat",
              "deer",
              "dog",
              "frog",
              "horse",
              "ship",
              "truck"]

# Use glob pattern matching to collect the matching batch files in the folder
train_list = glob.glob(r"<replace with your own folder path>\Test_batch*")  # data_batch_* --> Test_batch*
print(train_list)

save_path = r"<replace with your own folder path>\Test"  # Train --> Test

for l in train_list:
    print(l)
    l_dict = unpickle(l)
    # print(l_dict)  # print everything
    print(l_dict.keys())  # dict_keys([b'batch_label', b'labels', b'data', b'filenames'])

    # enumerate() yields (index, item) pairs, so each image comes with its position in the batch
    for im_idx, im_data in enumerate(l_dict[b'data']):
        # print(im_idx)
        # print(im_data)  # each image is stored as a flat vector

        # Use the index to look up the label and the file name
        im_label = l_dict[b'labels'][im_idx]
        im_name = l_dict[b'filenames'][im_idx]
        # print(im_label, im_name, im_data)

        im_label_name = label_name[im_label]

        # Reshape the flat vector to 3x32x32, then move the channel axis last to get 32x32x3
        im_data = np.reshape(im_data, [3, 32, 32])
        im_data = np.transpose(im_data, (1, 2, 0))
        # CIFAR-10 stores channels as RGB, while cv2.imwrite expects BGR
        im_data = cv2.cvtColor(im_data, cv2.COLOR_RGB2BGR)

        # cv2.imshow("im_data", cv2.resize(im_data, (200, 200)))
        # cv2.waitKey(0)

        # Create one sub-folder per class under save_path if it does not exist yet
        class_dir = "{}/{}".format(save_path, im_label_name)
        os.makedirs(class_dir, exist_ok=True)

        # Write the image; im_name is bytes, so decode it to str first
        cv2.imwrite("{}/{}".format(class_dir, im_name.decode("utf-8")), im_data)

Change the folder paths and run the script twice: first to write Train, then to write Test.
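The reshape and transpose step in the script above can be checked on a synthetic row. This is a minimal sketch, assuming the CIFAR-10 layout in which each 3072-value row holds the 1024 red values first, then green, then blue. The values 10, 20 and 30 are made up for illustration.

```python
import numpy as np

# Fake CIFAR-style row: 1024 red values, then 1024 green, then 1024 blue
row = np.concatenate([
    np.full(1024, 10, dtype=np.uint8),  # R plane
    np.full(1024, 20, dtype=np.uint8),  # G plane
    np.full(1024, 30, dtype=np.uint8),  # B plane
])

chw = np.reshape(row, (3, 32, 32))   # channel, height, width
hwc = np.transpose(chw, (1, 2, 0))   # height, width, channel

print(chw.shape, hwc.shape)  # (3, 32, 32) (32, 32, 3)
print(hwc[0, 0])             # [10 20 30]: R, G, B of the top-left pixel
```

Reshaping directly to (32, 32, 3) would not fail, but it would interleave values from the same colour plane into different channels, which is why the transpose is needed.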

3. VGG
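The comments in the VGG code of this section track the feature map size after each pooling layer (28, 14, 7, 4, 2). The sketch below reproduces that arithmetic in plain Python, assuming the usual floor-based output size formula for `Conv2d` and `MaxPool2d` with dilation 1. The helper `out_size` is a name introduced here for illustration.

```python
def out_size(n, kernel, stride, padding):
    """Spatial output size for a conv or pooling layer (dilation 1, floor rounding)."""
    return (n + 2 * padding - kernel) // stride + 1

size = 28  # input side length after the crop
trace = [size]

# A 3x3 convolution with stride 1 and padding 1 leaves the size unchanged
assert out_size(size, kernel=3, stride=1, padding=1) == size

# (kernel, stride, padding) of the four max-pooling layers in the VGG code
pools = [(2, 2, 0), (2, 2, 0), (2, 2, 1), (2, 2, 0)]
for kernel, stride, padding in pools:
    size = out_size(size, kernel, stride, padding)
    trace.append(size)

fc_in_features = 512 * size * size  # channels * height * width after the last pooling
print(trace, fc_in_features)  # [28, 14, 7, 4, 2] 2048
```

The third pooling layer is the one with `padding=1`: without it, the odd size 7 would shrink to 3 instead of 4.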

import torch
import torch.nn as nn
import torch.nn.functional as F


class VGGbase(nn.Module):
    def __init__(self):
        super(VGGbase, self).__init__()

        # Input: 3 * 28 * 28 (after the crop, 32 becomes 28)
        # nn.Sequential is an ordered container: modules run in the order they are passed in
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),  # 3 input channels, 64 output channels
            nn.BatchNorm2d(64),  # batch normalization over the 64 channels
            nn.ReLU()
        )
        # A 3x3 convolution with stride 1 and padding 1 keeps the spatial size; only the channel count changes
        # 28 * 28
        self.max_pooling1 = nn.MaxPool2d(kernel_size=2, stride=2)

        # 14 * 14
        self.conv2_1 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU()
        )
        self.conv2_2 = nn.Sequential(
            nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU()
        )
        self.max_pooling2 = nn.MaxPool2d(kernel_size=2, stride=2)

        # 7 * 7
        self.conv3_1 = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU()
        )
        self.conv3_2 = nn.Sequential(
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU()
        )
        # The size 7 is odd, so this pooling layer uses padding=1 (7 -> 4)
        self.max_pooling3 = nn.MaxPool2d(kernel_size=2, stride=2, padding=1)

        # 4 * 4
        self.conv4_1 = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU()
        )
        self.conv4_2 = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(512),
            nn.ReLU()
        )
        self.max_pooling4 = nn.MaxPool2d(kernel_size=2, stride=2)

        # 2 * 2
        # Fully connected layer
        # batchsize * 512 * 2 * 2 --> batchsize * (512 * 4)
        self.fc = nn.Linear(512 * 4, 10)  # nn.Linear(in_features, out_features)

    # Chain the layers together to form the classification network
    def forward(self, x):
        batchsize = x.size(0)  # size of dimension 0, i.e. the number of images in the batch
        out = self.conv1(x)
        out = self.max_pooling1(out)
        out = self.conv2_1(out)
        out = self.conv2_2(out)
        out = self.max_pooling2(out)
        out = self.conv3_1(out)
        out = self.conv3_2(out)
        out = self.max_pooling3(out)
        out = self.conv4_1(out)
        out = self.conv4_2(out)
        out = self.max_pooling4(out)

        # Flatten: batchsize * c * h * w --> batchsize * n
        out = out.view(batchsize, -1)  # -1: infer this dimension from the remaining elements

        # Fully connected layer
        out = self.fc(out)
        out = F.log_softmax(out, dim=1)
        return out


def VGGNet():
    return VGGbase()

4. Resnet

"""

ResNet: skip-connection structure

"""

import torch
import torch.nn as nn
import torch.nn.functional as F


class ResBlock(nn.Module):
    def __init__(self, in_channel, out_channel, stride=1):
        super(ResBlock, self).__init__()

        # Main branch
        self.layer = nn.Sequential(
            nn.Conv2d(in_channel, out_channel, kernel_size=3, stride=stride, padding=1),
            nn.BatchNorm2d(out_channel),
            nn.ReLU(),
            nn.Conv2d(out_channel, out_channel, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(out_channel),
        )

        # Shortcut branch: an empty Sequential passes the input through unchanged
        self.shortcut = nn.Sequential()
        if in_channel != out_channel or stride > 1:
            # The channel count or spatial size changes, so the input goes through
            # a conv + BN to match the shape of the main branch before the addition
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channel, out_channel, kernel_size=3, stride=stride, padding=1),
                nn.BatchNorm2d(out_channel),
            )

    def forward(self, x):
        out1 = self.layer(x)
        out2 = self.shortcut(x)
        out = out1 + out2
        out = F.relu(out)
        return out


class ResNet(nn.Module):
    # Build one stage made of several blocks
    def make_layer(self, block, out_channel, stride, num_block):  # num_block: number of blocks in this stage
        layer_list = []  # holds the blocks of this stage
        for i in range(num_block):
            # Only the first block of a stage uses the given stride; the rest use stride 1
            if i == 0:
                in_stride = stride
            else:
                in_stride = 1
            layer_list.append(block(self.in_channel, out_channel, in_stride))
            self.in_channel = out_channel
        return nn.Sequential(*layer_list)

    def __init__(self, ResBlock):
        super(ResNet, self).__init__()
        self.in_channel = 32
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU()
        )
        self.layer1 = self.make_layer(ResBlock, 64, 2, 2)
        self.layer2 = self.make_layer(ResBlock, 128, 2, 2)
        self.layer3 = self.make_layer(ResBlock, 256, 2, 2)
        self.layer4 = self.make_layer(ResBlock, 512, 2, 2)
        self.fc = nn.Linear(512, 10)  # 10 classes

    def forward(self, x):
        out = self.conv1(x)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = F.avg_pool2d(out, 2)  # reduce the 2 * 2 feature map to 1 * 1
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out


def resnet():
    return ResNet(ResBlock)
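To see which blocks end up with a convolutional shortcut, the bookkeeping done by `make_layer` can be replayed without PyTorch. This is a sketch only: `plan_blocks` is a helper introduced here, and it mirrors the two rules from the code above (the first block of a stage takes the stage stride, and a shortcut convolution is used when the channel count differs or the stride is greater than 1).

```python
def plan_blocks(in_channel, stages):
    """List (in_channel, out_channel, stride, projection) for every residual block.

    stages holds one (out_channel, stride, num_block) tuple per make_layer call.
    """
    plan = []
    for out_channel, stride, num_block in stages:
        for i in range(num_block):
            block_stride = stride if i == 0 else 1  # only the first block downsamples
            projection = in_channel != out_channel or block_stride > 1
            plan.append((in_channel, out_channel, block_stride, projection))
            in_channel = out_channel  # the next block sees the new channel count
    return plan

# Same arguments as layer1..layer4 above, starting from self.in_channel = 32
stages = [(64, 2, 2), (128, 2, 2), (256, 2, 2), (512, 2, 2)]
plan = plan_blocks(32, stages)
for row in plan:
    print(row)
```

In this configuration the first block of each stage needs the convolutional shortcut, while the second block keeps the identity shortcut.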

5. mobileNet

import torch
import torch.nn as nn
import torch.nn.functional as F


class mobilenet(nn.Module):
    # Basic MobileNet unit: a depthwise 3x3 convolution followed by a pointwise 1x1 convolution
    def conv_dw(self, in_channel, out_channel, stride):
        return nn.Sequential(
            # Depthwise: groups=in_channel gives each input channel its own 3x3 filter
            nn.Conv2d(in_channel, in_channel, kernel_size=3, stride=stride, padding=1, groups=in_channel, bias=False),
            nn.BatchNorm2d(in_channel),
            nn.ReLU(),
            # Pointwise: the 1x1 convolution mixes channels and sets the output channel count
            nn.Conv2d(in_channel, out_channel, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(out_channel),
            nn.ReLU(),
        )

    def __init__(self):
        # Define the core operators
        super(mobilenet, self).__init__()

        # nn.Sequential is an ordered container: modules run in the order they are passed in
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1),  # 3 input channels, 32 output channels
            nn.BatchNorm2d(32),  # batch normalization over the 32 channels
            nn.ReLU()
        )

        self.conv_dw2 = self.conv_dw(32, 32, 1)
        self.conv_dw3 = self.conv_dw(32, 64, 2)  # one downsampling step
        self.conv_dw4 = self.conv_dw(64, 64, 1)
        self.conv_dw5 = self.conv_dw(64, 128, 2)
        self.conv_dw6 = self.conv_dw(128, 128, 1)
        self.conv_dw7 = self.conv_dw(128, 256, 2)
        self.conv_dw8 = self.conv_dw(256, 256, 1)
        self.conv_dw9 = self.conv_dw(256, 512, 2)
        # Four of the conv_dw(*, *, 2) calls above use stride 2, i.e. 2^4 = 16x downsampling in total

        self.fc = nn.Linear(512, 10)

    # Chain the operators above to complete the forward pass
    def forward(self, x):
        out = self.conv1(x)
        out = self.conv_dw2(out)
        out = self.conv_dw3(out)
        out = self.conv_dw4(out)
        out = self.conv_dw5(out)
        out = self.conv_dw6(out)
        out = self.conv_dw7(out)
        out = self.conv_dw8(out)
        out = self.conv_dw9(out)
        out = F.avg_pool2d(out, 2)  # after the 16x downsampling, average-pool with a 2 * 2 kernel
        out = out.view(-1, 512)  # flatten to a 2-D tensor of shape (batchsize, 512)
        out = self.fc(out)  # map the 512-dimensional vector to 10 class scores
        return out


def mobilenetv1_small():
    return mobilenet()
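The point of the `conv_dw` unit is that it needs far fewer weights than a standard 3x3 convolution with the same input and output channels. The sketch below compares the two by plain arithmetic. It counts convolution weights only (no bias, matching `bias=False` above, and no BatchNorm parameters), and both function names are introduced here for illustration.

```python
def standard_conv_params(in_channel, out_channel, kernel=3):
    """Weights of an ordinary kernel x kernel convolution."""
    return in_channel * out_channel * kernel * kernel

def depthwise_separable_params(in_channel, out_channel, kernel=3):
    """Weights of a depthwise kernel x kernel conv plus a pointwise 1x1 conv."""
    depthwise = in_channel * kernel * kernel  # one filter per input channel
    pointwise = in_channel * out_channel      # 1x1 convolution mixing channels
    return depthwise + pointwise

# Channel pairs taken from conv_dw3, conv_dw7 and conv_dw9 above
for cin, cout in [(32, 64), (128, 256), (256, 512)]:
    std = standard_conv_params(cin, cout)
    sep = depthwise_separable_params(cin, cout)
    print(cin, cout, std, sep, round(std / sep, 2))
```

For the last unit (256 to 512 channels) this gives 1,179,648 weights for the standard convolution against 133,376 for the separable version.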

6. inceptionNet

import torch
import torch.nn as nn
import torch.nn.functional as F

"""
input: A

For ResNet:
B = A + f(A) requires A and f(A) to have feature maps of the same shape,
so in general
B = g(A) + f(A)
g(A): if the input and output channel counts differ, or stride > 1, apply a convolution to A
so that it matches the shape of the output feature map; otherwise g leaves A unchanged.
------------------------------------------------------------
For Inception:
Apply several different convolution and pooling operations to the input A,
then concatenate the results (B1, B2, B3, ...) along the channel axis:
B1 = f1(A)
B2 = f2(A)
B3 = f3(A)
B4 = f4(A)
concat([B1, B2, B3, B4])
"""


def ConvBNRelu(in_channel, out_channel, kernel_size):
    return nn.Sequential(
        # padding = kernel_size // 2 (integer division) keeps the spatial size:
        # kernel_size=3 -> padding=1; kernel_size=5 -> padding=2; kernel_size=7 -> padding=3
        nn.Conv2d(in_channel, out_channel, kernel_size=kernel_size, stride=1, padding=kernel_size // 2),
        nn.BatchNorm2d(out_channel),  # batch normalization over the out_channel channels
        nn.ReLU()
    )


class BaseInception(nn.Module):
    def __init__(self, in_channel, out_channel_list, reduce_channel_list):
        super(BaseInception, self).__init__()

        # Branch 1: 1x1 convolution
        self.branch1_conv = ConvBNRelu(in_channel, out_channel_list[0], 1)

        # Branch 2: 1x1 convolution to reduce channels, then 3x3 convolution
        self.branch2_conv1 = ConvBNRelu(in_channel, reduce_channel_list[0], 1)
        self.branch2_conv2 = ConvBNRelu(reduce_channel_list[0], out_channel_list[1], 3)

        # Branch 3: 1x1 convolution to reduce channels, then 5x5 convolution
        self.branch3_conv1 = ConvBNRelu(in_channel, reduce_channel_list[1], 1)
        self.branch3_conv2 = ConvBNRelu(reduce_channel_list[1], out_channel_list[2], 5)

        # Branch 4: 3x3 max pooling (size-preserving), then 3x3 convolution
        self.branch4_pool = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
        self.branch4_conv = ConvBNRelu(in_channel, out_channel_list[3], 3)

    def forward(self, x):
        out1 = self.branch1_conv(x)

        out2 = self.branch2_conv1(x)
        out2 = self.branch2_conv2(out2)

        out3 = self.branch3_conv1(x)
        out3 = self.branch3_conv2(out3)

        out4 = self.branch4_pool(x)
        out4 = self.branch4_conv(out4)

        # Concatenate the four branch outputs along the channel dimension
        out = torch.cat([out1, out2, out3, out4], dim=1)
        return out


class InceptionNet(nn.Module):
    # Initialization
    def __init__(self):
        super(InceptionNet, self).__init__()

        self.block1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU()
        )
        self.block2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU()
        )
        self.block3 = nn.Sequential(
            BaseInception(in_channel=128, out_channel_list=[64, 64, 64, 64], reduce_channel_list=[16, 16]),  # 64 * 4 = 256 output channels
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        self.block4 = nn.Sequential(
            BaseInception(in_channel=256, out_channel_list=[96, 96, 96, 96], reduce_channel_list=[32, 32]),
            nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        )
        self.fc = nn.Linear(384, 10)  # 96 * 4 = 384

    # Assemble the operators to complete the forward pass
    def forward(self, x):
        out = self.block1(x)
        out = self.block2(out)
        out = self.block3(out)
        out = self.block4(out)
        out = F.avg_pool2d(out, 2)
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out


def InceptionNetSmall():
    return InceptionNet()
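The `384` passed to `nn.Linear` comes from concatenating the four branch outputs along the channel axis. A minimal NumPy sketch of that step follows, using zero arrays as stand-ins for the branch outputs. The batch size and spatial size are arbitrary values chosen for illustration, and `np.concatenate(..., axis=1)` plays the role of `torch.cat(..., dim=1)`.

```python
import numpy as np

batch, height, width = 2, 6, 6        # arbitrary illustrative sizes
out_channel_list = [96, 96, 96, 96]   # as in block4 above

# Stand-ins for B1..B4: same batch and spatial size, each with its own channel count
branches = [
    np.zeros((batch, c, height, width), dtype=np.float32)
    for c in out_channel_list
]

out = np.concatenate(branches, axis=1)  # axis 1 is the channel axis in NCHW layout
print(out.shape)  # (2, 384, 6, 6)
```

Concatenation only works because every branch preserves the spatial size, which is what the `padding=kernel_size // 2` choice in `ConvBNRelu` and the stride-1 pooling in branch 4 guarantee.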

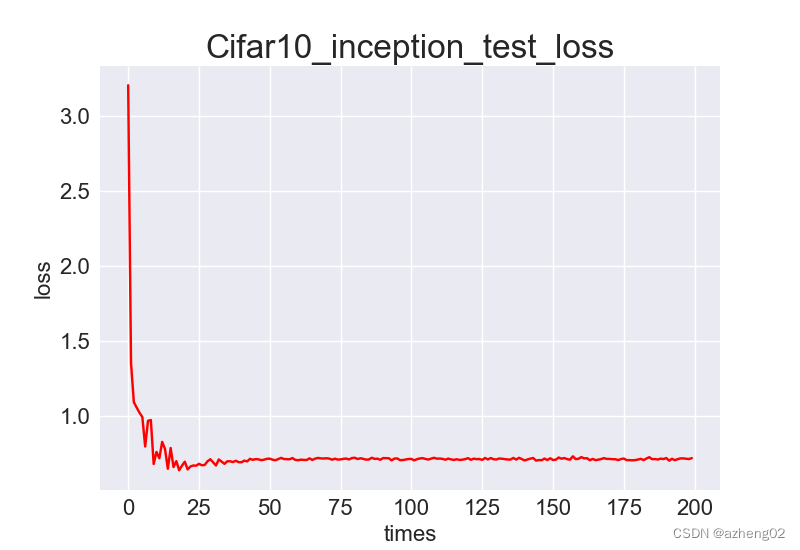

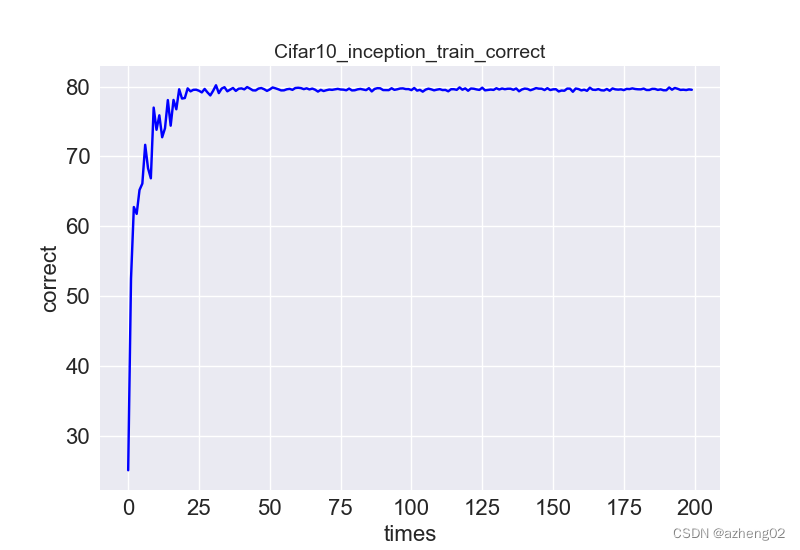

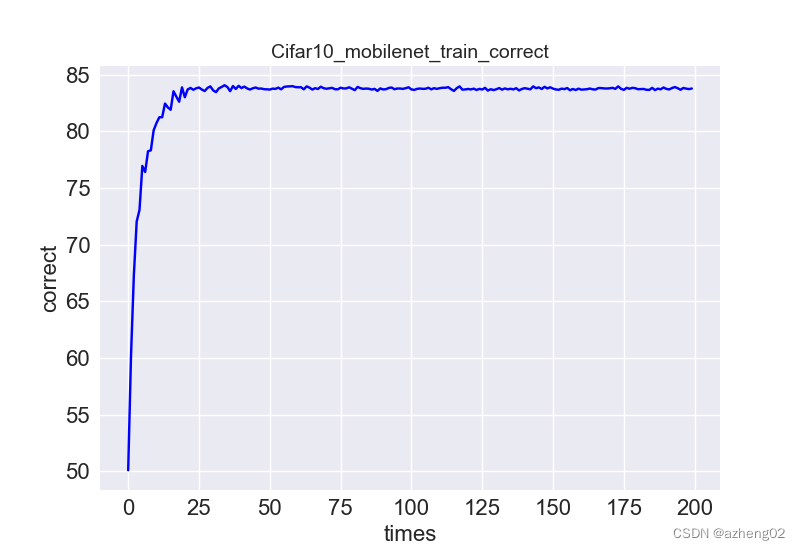

Results (Test)

VGG

resnet

mobileNet

inceptionNet