Author: Yury Gorbachev; Translation: 武卓, 杨亦诚

Over the past year we have seen an explosion of Generative AI use cases and models. Notable new generative models are now released almost every week for different domains, trained on ever-growing datasets and with varying computational complexity. With approaches like LoRA, huge models can be fine-tuned on very modest training accelerators, which is unlocking more modifications of foundation models. Deploying these models remains a challenge because of their resource consumption, and it still relies heavily on serving the models in the cloud.

With the 2023.1 release of OpenVINO we want to bring the power of Generative AI to regular desktops and laptops: to make it possible to run these models locally in resource-constrained environments, and to let you experiment with them and integrate them into your applications. We worked across the product to optimize for these scenarios, implemented a few critical features, and established a foundation for the further work we are planning.

That said, our changes were not limited to Generative AI. We have improved other parts of the product as well, and we hope this makes your work easier and brings you additional value.

Let's walk through these changes and see what exactly they are.

Generative AI features


Overall stack optimizations for large models. Models from the Generative AI family have one thing in common: they are resource hungry. The models are huge, the amount of memory required to run them is very high, and the demand for memory bandwidth is tremendous. Something as simple as an unnecessary copy of the weights for an operation can make it impossible to run a model because of insufficient memory.

To better accommodate this, we have worked across our inference stack, for both CPU and GPU (integrated and discrete) targets, to optimize how we work with these models. This includes reducing the memory required to read and compile a model, optimizing how we handle the input and output tensors of models, and improving other internals to reduce model execution time.

Weights quantization for Large Language Models. LLMs require significant memory bandwidth when they execute. To optimize for this, we have implemented an int8 LLM weights quantization feature in our NNCF (Neural Network Compression Framework) optimization framework and in CPU inference.

When you use this feature, NNCF generates an optimized IR file that is half the size of a regular model file in fp16 precision. The IR file enables additional optimizations within the CPU plugin, which result in lower latency and reduced runtime memory consumption. A similar feature for GPU is being implemented and will be available in upcoming releases.
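
To make the size claim concrete, here is a minimal pure-Python sketch of int8 weight quantization. It assumes a simplified symmetric, per-row scheme with one floating-point scale per row; this illustrates the general idea only and is not NNCF's actual algorithm. The helper names `quantize_row_int8` and `dequantize_row` and the sample weights are hypothetical.

```python
import struct


def quantize_row_int8(row):
    """Symmetric per-row quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in row)
    # One scale per row; fall back to 1.0 for an all-zero row.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in row]
    return quantized, scale


def dequantize_row(quantized, scale):
    """Recover approximate float weights from int8 values and the row scale."""
    return [v * scale for v in quantized]


# Hypothetical 2x4 weight matrix standing in for a real layer.
weights = [[0.5, -1.0, 0.25, 0.75], [2.0, -0.5, 1.5, -2.0]]

# Storage as fp16: two bytes per weight.
fp16_bytes = b"".join(struct.pack("<e", w) for row in weights for w in row)

# Storage as int8: one byte per weight (the per-row scales are kept separately).
quantized_rows = [quantize_row_int8(row) for row in weights]
int8_bytes = b"".join(struct.pack("<b", v) for q, _ in quantized_rows for v in q)

print(len(fp16_bytes), len(int8_bytes))  # 16 8
```

Each weight takes one byte instead of two, which is where the roughly 2x reduction relative to fp16 comes from. The per-row scales add a small overhead that this sketch does not count.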

Easier conversion of models. Most LLMs currently come from PyTorch-based environments. To convert these models, you can now use our direct PyTorch conversion feature. For LLMs, this significantly reduces conversion time and memory demands compared with our previous path through the ONNX format.

Overall, as a result of our optimizations we were able to improve LLM performance on both CPU and GPU. In addition, we have reduced the amount of memory required to run these models. In some cases, our optimizations made it possible to run models for which inference previously ran out of memory. We have been validating our work on dozens of LLMs of different sizes and for different tasks to ensure that our approach scales well across all our platforms and supported operating systems.

Our conversion API and weights quantization feature are also integrated into the Hugging Face optimum-intel extension, which lets you run generative models with OpenVINO as the inference stack or conveniently export models to the OpenVINO format.
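
As a rough sketch of what this can look like, assuming the `optimum-intel` and `transformers` packages are installed: the `OVModelForCausalLM` class and its `export=True` argument come from optimum-intel, while the function name `generate_with_openvino`, the `model_id` argument, and the prompt are placeholders chosen for illustration.

```python
def generate_with_openvino(model_id, prompt, max_new_tokens=32):
    """Export a Hugging Face causal LM to OpenVINO on the fly and generate text."""
    # Imported inside the function so the sketch can be loaded even when
    # the optional packages are not installed.
    from optimum.intel import OVModelForCausalLM
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # export=True converts the original PyTorch checkpoint to OpenVINO format.
    model = OVModelForCausalLM.from_pretrained(model_id, export=True)

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling the function with the identifier of any causal language model on the Hugging Face Hub downloads the checkpoint, so it is not invoked here.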

Simplifying your workflow


No more development package, unified tools availability. Starting with the 2023.1 release, we no longer ask you to install separate packages for runtime and development. We have worked to simplify our tooling and integrate all necessary components into a single openvino package. This also means that model conversion and inference are available in a uniform manner through all OpenVINO distribution mechanisms: pip, conda, brew, and archives.

In addition, starting with this release the OpenVINO Python API is available from all packages that support the minimum required Python version (3.7). That means conda, brew, and selected apt versions, in addition to pip, which was available previously.

More efficient and friendly model conversion. We are introducing the OpenVINO Model Conversion tool (OVC), which replaces our well-known Model Optimizer (mo) tool for offline model conversion tasks. The tool is available in the openvino package, relies on internal model frontends to read framework formats, and does not require the original framework to perform model conversion. For instance, you don't need TensorFlow installed to convert a TF model to OpenVINO. Likewise, you don't need TensorFlow if you simply want to read the model in the OpenVINO runtime for inference without conversion.

To convert models within a Python script, we have further improved our convert_model API. For instance, it can convert a PyTorch model object to an OpenVINO model, which you can then compile for inference or save to IR.
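
A minimal sketch of this flow, assuming `torch` and `openvino` 2023.1 or later are installed. The toy model, the input shape, the function name `convert_torch_model`, and the `model.xml` output path are placeholders chosen for illustration, not the original example.

```python
def convert_torch_model(ir_path="model.xml"):
    """Convert a PyTorch module to OpenVINO, run it once, and save it as IR."""
    # Imported inside the function so the sketch can be loaded even when
    # the optional packages are not installed.
    import torch
    import openvino as ov

    # Hypothetical toy network standing in for a real model.
    model = torch.nn.Sequential(torch.nn.Linear(8, 4), torch.nn.ReLU())
    model.eval()
    example_input = torch.randn(1, 8)

    # Direct conversion from the PyTorch object, with no ONNX step in between.
    ov_model = ov.convert_model(model, example_input=example_input)

    # Option 1: compile for a device and run inference right away.
    compiled_model = ov.compile_model(ov_model, "CPU")
    result = compiled_model([example_input.numpy()])[0]

    # Option 2: save to IR (an .xml file plus a .bin file) for later use.
    ov.save_model(ov_model, ir_path)
    return result
```

Both `convert_model` and `save_model` are reached through the top-level `openvino` namespace here, in line with the streamlined Python API.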

Note that we have also streamlined our Python APIs so that they are available directly from the openvino namespace, which is much simpler. You can still access functions from the old namespaces, and you can install openvino-dev if you need the mo tool. The mo tool itself is still available, but we recommend migrating to the ovc tool.

Switching to fp16 IR by default. With the changes in our conversion tooling, we are now switching to fp16 precision as the default datatype in our IR. This reduces model size by a factor of 2 relative to fp32 and, according to the tests we have performed, has no impact on accuracy. It is important to note that IR precision does not affect execution precision within plugins, which by default always execute in the precision that gives the best performance.
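
The factor of 2 follows directly from the storage width of the two types. The sketch below uses only Python's standard `struct` module to pack a few hypothetical weight values as fp32 and as fp16 and to measure the rounding error introduced by fp16. It illustrates the arithmetic only; it does not reproduce how OpenVINO stores weights internally.

```python
import struct

# Hypothetical weight values standing in for a real tensor.
weights = [0.1, -0.5, 3.14159, 1024.0]
count = len(weights)

# "f" is IEEE 754 single precision (4 bytes), "e" is half precision (2 bytes).
fp32_bytes = struct.pack(f"<{count}f", *weights)
fp16_bytes = struct.pack(f"<{count}e", *weights)

# Read the fp16 values back to see how much precision was lost.
restored = struct.unpack(f"<{count}e", fp16_bytes)
max_rel_error = max(abs(a - b) / abs(a) for a, b in zip(weights, restored))

print(len(fp32_bytes), len(fp16_bytes))  # 16 8
print(f"largest relative rounding error: {max_rel_error:.2e}")
```

The per-weight rounding error is small for values in the normal fp16 range. Whether it affects a particular model's accuracy is an empirical question, which is why the claim above rests on testing.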

Better PyTorch compatibility


As mentioned above, our direct PyTorch conversion feature is now mature, and we use it ourselves, for instance to convert models within our Hugging Face optimum-intel solution. Converting models is therefore easier now, since you bypass the extra step of going through the ONNX format (which we still support without limitations).

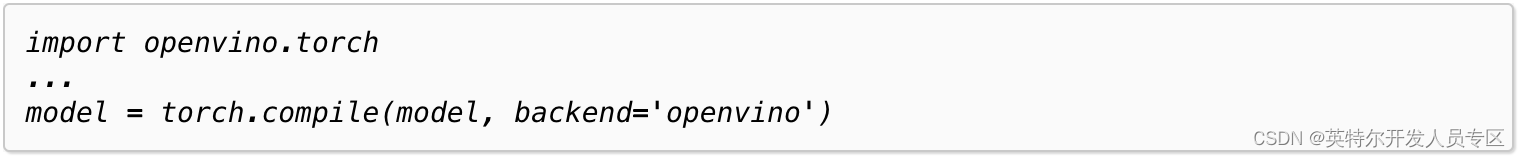

To bring OpenVINO even closer to the PyTorch ecosystem, we are introducing support for torch.compile and a corresponding backend. You can now run your model through the OpenVINO stack by compiling it with torch.compile and specifying openvino as the backend!

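
A minimal sketch of the torch.compile path, assuming PyTorch 2.x and `openvino` 2023.1 or later are installed. The `import openvino.torch` line is assumed here to be what makes the `openvino` backend available in this release; the toy model and the function name `compile_with_openvino_backend` are placeholders.

```python
def compile_with_openvino_backend():
    """Run a small PyTorch module through OpenVINO via torch.compile."""
    # Imported inside the function so the sketch can be loaded even when
    # the optional packages are not installed.
    import torch
    import openvino.torch  # noqa: F401  (assumed to register the "openvino" backend)

    # Hypothetical toy network standing in for a real model.
    model = torch.nn.Sequential(torch.nn.Linear(8, 4), torch.nn.ReLU())
    model.eval()

    # The model stays a regular PyTorch callable; execution is delegated
    # to the OpenVINO stack behind the scenes.
    compiled_model = torch.compile(model, backend="openvino")

    with torch.no_grad():
        return compiled_model(torch.randn(1, 8))
```

Unlike the offline conversion route, this keeps you inside the PyTorch API: there is no IR file to manage, and compilation happens on the first call.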

This feature is being actively enhanced, and we expect better performance and operation coverage, but it is already used in our integrations, for instance with Stable Diffusion WebUI (link).

New notebooks with exciting use cases


To showcase new capabilities that you can try right from your laptop, we have created a few new notebooks and updated existing ones. Below are the ones we are most excited about:

LLM chatbot:

https://github.com/openvinotoolkit/openvino_notebooks/tree/main/notebooks/254-llm-chatbot

Text-to-image generation with Stable Diffusion XL:

https://github.com/openvinotoolkit/openvino_notebooks/tree/main/notebooks/248-stable-diffusion-xl

Text-to-image generation with Tiny SD:

Music generation with MusicGen:

https://github.com/openvinotoolkit/openvino_notebooks/blob/main/notebooks/250-music-generation

Text-to-video generation:

https://github.com/openvinotoolkit/openvino_notebooks/tree/main/notebooks/253-zeroscope-text2video

| Darth Vader is surfing on waves |