1、系统软件

1.1 、系统版本

系统:centos 7.1及以上;Ubuntu 16.04及以上

软件:Java 1.8及以上;GCC 4.8.2及以上;

1.2、环境检查

1.2.1、gcc安装

1、Centos

ansible cluster -m shell -a "yum -y install gcc gcc-c++ autoconf pcre pcre-devel make automake"

ansible cluster -m shell -a "yum -y install wget httpd-tools vim"

ansible cluster -m shell -a "gcc --version"

2、Ubuntu

sudo apt install -y gcc

1.3、时钟同步(必须)

1.4、关闭交换分区(swap)(必须)

echo "0" > /proc/sys/vm/swappiness

echo "0" > /proc/sys/vm/overcommit_memory

sysctl -p

1.5、把limits调到最大(必须)

cat << EOF >> /etc/security/limits.conf

* soft noproc 65535

* hard noproc 65535

* soft nofile 65535

* hard nofile 65535

* hard memlock unlimited

* soft memlock unlimited

EOF

1.6、Jdk是1.8

# jdk

export JAVA_HOME=/usr/java/jdk1.8.0_201-amd64

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

1.7、系统参数优化

cat > /etc/sysctl.conf << EOF

vm.max_map_count = 262144

net.ipv4.tcp_retries2 = 5

fs.file-max = 6553560

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.tcp_sack = 1

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.ipv4.tcp_max_syn_backlog = 16384

net.core.netdev_max_backlog = 32768

net.core.somaxconn = 32768

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_fin_timeout = 20

net.ipv4.tcp_synack_retries = 2

net.ipv4.tcp_syn_retries = 2

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_mem = 94500000 915000000 927000000

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.ip_local_port_range = 1024 65000

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

kernel.numa_balancing = 0

kernel.shmmax = 68719476736

kernel.printk = 5

kernel.sysrq = 1

vm.overcommit_memory = 0

vm.swappiness = 1

vm.dirty_background_ratio = 5

vm.dirty_ratio = 80

EOF

sysctl -p

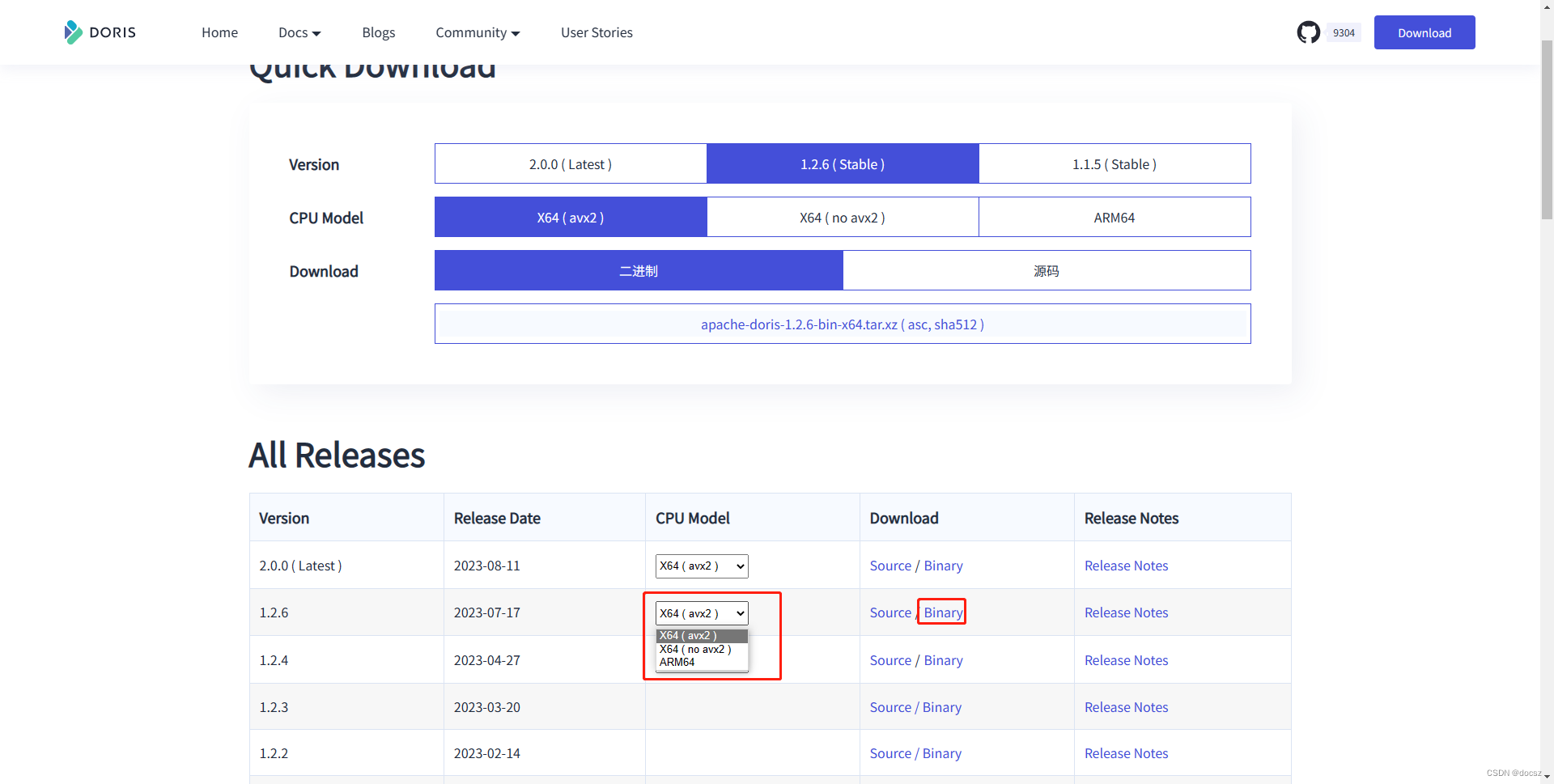

1.8、检查avx2指令

查看是否支持avx2指令集,返回0,说明不支持

cat /proc/cpuinfo | grep avx2 | wc -l

1.9、下载安装包

根据JDK版本,CPU架构,是否支持avx2,下载对应的Doris安装包

下载地址:https://doris.apache.org/zh-CN/download

2、集群部署

2.1、服务器规划及配置

2.2、部署目录规划

2.2.1、FE

部署目录: /opt/module/doris/fe

日志目录: /data/doris/log/fe

元数据目录: /data/doris/data/meta

2.2.2、BE

部署目录: /opt/module/doris/be

日志目录: /data/doris/log/be

数据存储目录: /data/doris/data/storage

2.2.3、Broker

部署目录: /opt/module/doris/hdfs_broker

2.2.4、创建目录

ansible cluster -m shell -a "mkdir -p /opt/module/doris/"

ansible cluster -m shell -a "mkdir -p /data/doris/log/{fe,be}"

ansible cluster -m shell -a "mkdir -p /data/doris/data/{meta,storage.SSD}"

2.3、集群部署

2.3.1、解压

tar -xvJf apache-doris-1.2.6-bin-x64.tar.xz

chown -R root:root apache-doris-1.2.6-bin-x64

2.3.2、修改FE配置

AVA_HOME=/usr/java/jdk1.8.0_201-amd64

# the output dir of stderr and stdout

LOG_DIR = /data/doris/log/fe

DATE = `date +%Y%m%d-%H%M%S`

JAVA_OPTS="-Xmx8192m -XX:+UseMembar -XX:SurvivorRatio=8 -XX:MaxTenuringThreshold=7 -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSClassUnloadingEnabled -XX:-CMSParallelRemarkEnabled -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -Xloggc:/data/doris/log/fe/fe.gc.log.$DATE"

# For jdk 9+, this JAVA_OPTS will be used as default JVM options

JAVA_OPTS_FOR_JDK_9="-Xmx8192m -XX:SurvivorRatio=8 -XX:MaxTenuringThreshold=7 -XX:+CMSClassUnloadingEnabled -XX:-CMSParallelRemarkEnabled -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -Xlog:gc*:/data/doris/log/fe/fe.gc.log.$DATE:time"

##

## the lowercase properties are read by main program.

##

# INFO, WARN, ERROR, FATAL

sys_log_level = INFO

# store metadata, must be created before start FE.

# Default value is ${DORIS_HOME}/doris-meta

# meta_dir = ${DORIS_HOME}/doris-meta

meta_dir = /data/doris/data/meta

# Default dirs to put jdbc drivers,default value is ${DORIS_HOME}/jdbc_drivers

# jdbc_drivers_dir = ${DORIS_HOME}/jdbc_drivers

http_port = 8030

rpc_port = 9020

query_port = 9030

edit_log_port = 9010

mysql_service_nio_enabled = true

# Choose one if there are more than one ip except loopback address.

# Note that there should at most one ip match this list.

# If no ip match this rule, will choose one randomly.

# use CIDR format, e.g. 10.10.10.0/24

# Default value is empty.

# priority_networks = 10.10.10.0/24;192.168.0.0/16

priority_networks = 192.168.0.122/24

# Advanced configurations

# log_roll_size_mb = 1024

sys_log_dir = /data/doris/log/fe

# sys_log_roll_num = 10

# sys_log_verbose_modules = org.apache.doris

audit_log_dir = /data/doris/log/fe

# audit_log_modules = slow_query, query

# audit_log_roll_num = 10

# meta_delay_toleration_second = 10

# qe_max_connection = 1024

# max_conn_per_user = 100

# qe_query_timeout_second = 300

# qe_slow_log_ms = 5000

default_storage_medium = SSD

dynamic_partition_enable = true

2.3.3、修改BE配置

JAVA_HOME=/usr/java/jdk1.8.0_201-amd64

PPROF_TMPDIR="/data/doris/log/be"

CUR_DATE=`date +%Y%m%d-%H%M%S`

JAVA_OPTS="-Xmx1024m -DlogPath=/data/doris/log/be/jni.log -Xloggc:/data/doris/log/be/be.gc.log.$CUR_DATE -Dsun.java.command=DorisBE -XX:-CriticalJNINatives -DJDBC_MIN_POOL=1 -DJDBC_MAX_POOL=100 -DJDBC_MAX_IDEL_TIME=300000 -DJDBC_MAX_WAIT_TIME=5000"

# For jdk 9+, this JAVA_OPTS will be used as default JVM options

JAVA_OPTS_FOR_JDK_9="-Xmx1024m -DlogPath=/data/doris/log/be/jni.log -Xlog:gc:/data/doris/log/be/be.gc.log.$CUR_DATE -Dsun.java.command=DorisBE -XX:-CriticalJNINatives -DJDBC_MIN_POOL=1 -DJDBC_MAX_POOL=100 -DJDBC_MAX_IDEL_TIME=300000 -DJDBC_MAX_WAIT_TIME=5000"

# since 1.2, the JAVA_HOME need to be set to run BE process.

# JAVA_HOME=/path/to/jdk/

# INFO, WARNING, ERROR, FATAL

sys_log_level = INFO

# ports for admin, web, heartbeat service

be_port = 9060

webserver_port = 18040

heartbeat_service_port = 9050

brpc_port = 8060

# Choose one if there are more than one ip except loopback address.

# Note that there should at most one ip match this list.

# If no ip match this rule, will choose one randomly.

# use CIDR format, e.g. 10.10.10.0/24

# Default value is empty.

# priority_networks = 10.10.10.0/24;192.168.0.0/16

priority_networks = 192.168.0.129/24

# data root path, separate by ';'

# you can specify the storage medium of each root path, HDD or SSD

# you can add capacity limit at the end of each root path, separate by ','

# eg:

# storage_root_path = /home/disk1/doris.HDD,50;/home/disk2/doris.SSD,1;/home/disk2/doris

# /home/disk1/doris.HDD, capacity limit is 50GB, HDD;

# /home/disk2/doris.SSD, capacity limit is 1GB, SSD;

# /home/disk2/doris, capacity limit is disk capacity, HDD(default)

#

# you also can specify the properties by setting '<property>:<value>', separate by ','

# property 'medium' has a higher priority than the extension of path

#

# Default value is ${DORIS_HOME}/storage, you should create it by hand.

# storage_root_path = ${DORIS_HOME}/storage

storage_root_path = /data/doris/data/storage.SSD

# Default dirs to put jdbc drivers,default value is ${DORIS_HOME}/jdbc_drivers

# jdbc_drivers_dir = ${DORIS_HOME}/jdbc_drivers

# Advanced configurations

sys_log_dir = /data/doris/log/be

# sys_log_roll_mode = SIZE-MB-1024

# sys_log_roll_num = 10

# sys_log_verbose_modules = *

# log_buffer_level = -1

# palo_cgroups

# 更快的导入速度,默认为2

flush_thread_num_per_store = 5

# 获得更好的查询性能

enable_storage_vectorization = true

enable_low_cardinality_optimize = true

enable_segcompaction = true

# 使用新的compaction算法

enable_vertical_compaction = true

2.3.4、分发安装文件

ansible cluster -m copy -a 'src=/opt/module/apache-doris-1.2.6-bin-x64/fe dest=/opt/module/doris/'

ansible cluster -m copy -a 'src=/opt/module/apache-doris-1.2.6-bin-x64/be dest=/opt/module/doris/'

ansible cluster -m copy -a 'src=/opt/module/apache-doris-1.2.6-bin-x64/extensions/apache_hdfs_broker dest=/opt/module/doris/'

ansible cluster -m copy -a 'src=/opt/module/apache-doris-1.2.6-bin-x64/extensions/audit_loader dest=/opt/module/doris/'

2.3.5、修改BE节点配置

priority_networks = 192.168.0.129/24

priority_networks = 192.168.0.137/24

2.3.6、FE节点启动MySQL客户端

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-common-8.0.25-1.el7.x86_64.rpm

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-client-plugins-8.0.25-1.el7.x86_64.rpm

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-libs-8.0.25-1.el7.x86_64.rpm

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-client-8.0.25-1.el7.x86_64.rpm

rpm -ivh mysql-community-common-8.0.25-1.el7.x86_64.rpm

rpm -ivh mysql-community-client-plugins-8.0.25-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-8.0.25-1.el7.x86_64.rpm

rpm -ivh mysql-community-client-8.0.25-1.el7.x86_64.rpm

或

rpm -Uvh *.rpm --nodeps --force

2.4、启动FE

2.4.1、启动FE

bin/start_fe.sh --daemon

2.4.1、访问FE

注:8030是默认端口

http://192.168.0.122:8030

2.4.2、MySQL连接FE

# 无密码登录

mysql -h127.0.0.1 -P9030 -uroot

SET PASSWORD FOR 'root' = PASSWORD('Boshi*2023');

#退出再次登录即可

mysql -h192.168.0.122 -P9030 -uroot -pBoshi*2023

2.4.3、查看FE状态

mysql-client 连接到 FE

# IsMaster、Join 和 Alive 三列均为true,则表示节点正常

SHOW PROC '/frontends';

通过URL来访问

http://fe_host:fe_http_port/api/bootstrap

fe_host FE节点ip

fe_http_port FE安装节点conf/fe.conf中配置的端口

http://192.168.0.122:8030/api/bootstrap

2.4.4、FE 扩容-读写高可用

1、配置及启动Follower

# 首先第一次启动时,需执行以下命令:

fe/bin/start_fe.sh --helper leader_fe_host:edit_log_port --daemon

fe/bin/start_fe.sh --helper xx.xx.xx.xx:9010 --daemon

2、将 Follower加入到集群

# 使用 mysql-client 连接到已启动的 FE,并执行:

ALTER SYSTEM ADD FOLLOWER "follower_host:edit_log_port";

ALTER SYSTEM ADD FOLLOWER "xx.xx.xx.xx:9010";

3、 再次加入fe2, fe3

# 再次加入fe2, fe3:

fe/bin/start_fe.sh --helper xx.xx.xx.xx:9010 --daemon

ALTER SYSTEM ADD FOLLOWER "xx.xx.xx.xx:9010";

ALTER SYSTEM ADD FOLLOWER "xx.xx.xx.xx:9010";

4、 查看是否成功

SHOW PROC '/frontends';

2.4.5、FE 扩容-读高可用

1、配置及启动Observer

# 首先第一次启动时,需执行以下命令:

fe/bin/start_fe.sh --helper leader_fe_host:edit_log_port --daemon

fe/bin/start_fe.sh --helper xx.xx.xx.xx:9010 --daemon

2、将 Observer 加入到集群

# 使用 mysql-client 连接到已启动的 FE,并执行:

ALTER SYSTEM ADD OBSERVER "observer_host:edit_log_port";

ALTER SYSTEM ADD FOLLOWER "xx.xx.xx.xx:9010";

2.5、启动BE

2.5.1、启动BE

sysctl -w vm.max_map_count=2000000

ulimit -n 65536

bin/start_be.sh --daemon

2.5.2、增删 BE

#添加 BE

ALTER SYSTEM ADD BACKEND "192.168.0.129:9050";

ALTER SYSTEM ADD BACKEND "192.168.0.137:9050";

# 删除BE

ALTER SYSTEM DROPP BACKEND "hostname:9050";

ALTER SYSTEM DROPP BACKEND "192.168.0.129:9050";

ALTER SYSTEM DROPP BACKEND "192.168.0.137:9050";

2.5.3、查看BE状态

mysql-client 连接到 FE

# isAlive 列应为 true

SHOW PROC '/backends' \G;

通过URL来访问

http://be_host:webserver_port/api/health

webserver_port BE安装节点conf/be.conf中配置的端口

http://192.168.0.129:18040/api/health

3、集群管理

3.1、WEBUI

http://fe_host:fe_http_port

http://192.168.0.122:8030

3.2、创建用户

CREATE USER 'doris'@'%' IDENTIFIED BY 'Doris*2023' DEFAULT ROLE 'admin';

SHOW GRANTS FOR doris@'%';

# 登录

mysql -h192.168.0.122 -P9030 -udoris -pDoris*2023

3.3、创建库

create database tmp;

create database ods;

create database dwd;

create database dws;

create database ads;

3.3、创建表

3.3.1、主键唯一模型

CREATE TABLE tmp.dept

(

`deptno` int,

`dname` varchar(14),

`loc` varchar(13)

)

UNIQUE KEY(deptno)

COMMENT "部门表"

DISTRIBUTED BY HASH (deptno) BUCKETS 8

PROPERTIES(

"replication_num" = "1"

);

insert into tmp.dept values (10,'accounting','new york'),(20,'research','dallas'),(30,'sales','chicago'),(40,'operations','boston');

CREATE TABLE tmp.emp

(

`empno` int,

`ename` varchar(10),

`job` varchar(9),

`mgr` int,

`hiredate` date,

`sal` float,

`comm` float,

`deptno` int

)

UNIQUE KEY(empno)

COMMENT "员工表"

DISTRIBUTED BY HASH (empno) BUCKETS 8

PROPERTIES(

"replication_num" = "1"

);

insert into tmp.emp values (7369,'smith','clerk',7902,'1980-12-17',800,null,20),

(7499,'allen','salesman',7698,'1981-02-20',1600,300,30),

(7521,'ward','salesman',7698,'1981-02-22',1250,500,30),

(7566,'jones','manager',7839,'1981-04-02',2975,null,20),

(7654,'martin','salesman',7698,'1981-09-28',1250,1400,30),

(7698,'blake','manager',7839,'1981-05-01',2850,null,30),

(7782,'clark','manager',7839,'1981-06-09',2450,null,10),

(7788,'scott','analyst',7566,'1987-07-13',3000,null,20),

(7839,'king','president',null,'1981-11-07',5000,null,10),

(7844,'turner','salesman',7698,'1981-09-08',1500,0,30),

(7876,'adams','clerk',7788,'1987-07-13',1100,null,20),

(7900,'james','clerk',7698,'1981-12-03',950,null,30),

(7902,'ford','analyst',7566,'1981-12-03',3000,null,20),

(7934,'miller','clerk',7782,'1982-01-23',1300,null,10);

3.3.2、明细模型

CREATE TABLE tmp.salgrade

(

`grade` int,

`losal` int,

`hisal` int

)

DUPLICATE KEY(grade,losal,hisal)

COMMENT "工资水平"

DISTRIBUTED BY HASH(losal,hisal) BUCKETS 8

PROPERTIES (

"replication_num" = "1"

);

insert into tmp.salgrade values (1,700,1200),(2,1201,1400),(3,1401,2000),(4,2001,3000),(5,3001,9999);

3.3.2、聚合模型

1、用户消费和行为聚合模型

-- 这是一个用户消费和行为记录的数据表

CREATE TABLE IF NOT EXISTS tmp.user_info

(

`user_id` LARGEINT NOT NULL COMMENT "用户 id",

`date` DATE NOT NULL COMMENT "数据灌入日期时间",

`city` VARCHAR(20) COMMENT "用户所在城市",

`age` SMALLINT COMMENT "用户年龄",

`sex` TINYINT COMMENT "用户性别",

`last_visit_date` DATETIME REPLACE DEFAULT "1970-01-01 00:00:00" COMMENT "用户最后一次访问时间",

`cost` BIGINT SUM DEFAULT "0" COMMENT "用户总消费",

`max_dwell_time` INT MAX DEFAULT "0" COMMENT "用户最大停留时间",

`min_dwell_time` INT MIN DEFAULT "99999" COMMENT "用户最小停留时间"

)

ENGINE=olap

AGGREGATE KEY(`user_id`, `date`, `city`, `age`, `sex`)

COMMENT "用户消费和行为记录表"

-- 分区

-- 分桶

DISTRIBUTED BY HASH(`user_id`) BUCKETS 8

PROPERTIES (

"replication_num" = "1"

);

insert into tmp.user_info values

(10000,'2017-10-01','北京',20,0,'2017-10-01 06:00:00',20,10,10),

(10000,'2017-10-01','北京',20,0,'2017-10-01 07:00:00',15,2,2),

(10001,'2017-10-01','北京',30,1,'2017-10-01 17:05:45',2,22,22),

(10002,'2017-10-02','上海',20,1,'2017-10-02 12:59:12',200,5,5),

(10003,'2017-10-02','广州',32,0,'2017-10-02 11:20:00',30,11,11),

(10004,'2017-10-01','深圳',35,0,'2017-10-01 10:00:15',100,3,3),

(10004,'2017-10-03','深圳',35,0,'2017-10-03 10:20:22',11,6,6);

2、订单聚合模型

# 自动聚合出如下结果:

# 订单日期,订单id,userId,商品id,购买得总件数,支付总额

create table tmp.order_info

(

`date` date COMMENT "日期时间",

`oid` bigint COMMENT "订单id",

`userid` varchar(255) COMMENT "用户id",

`spid` varchar(255) COMMENT "商品id",

`total` int sum COMMENT "商品总数",

`pay` int sum COMMENT "支付总金额"

)

ENGINE=olap

AGGREGATE KEY(`date`,`oid`,`userid`,`spid`)

COMMENT "订单聚合表"

partition by range(`date`)

(

partition `p20221201` values less than ("2022-12-02"),

partition `p20221202` values less than ("2022-12-03"),

partition `p20221203` values less than ("2022-12-04")

)

DISTRIBUTED BY HASH(`userid`) BUCKETS 2

PROPERTIES (

"replication_num" = "1"

);

-- 插入数据

-- 订单日期,订单id,userId,商品id,购买件数,支付的金额

insert into tmp.order_info values

('2022-12-01',1,'u01','p01',2,20),

('2022-12-01',1,'u01','p02',1,10),

('2022-12-01',1,'u01','p01',1,10),

('2022-12-01',2,'u02','p03',2,40);