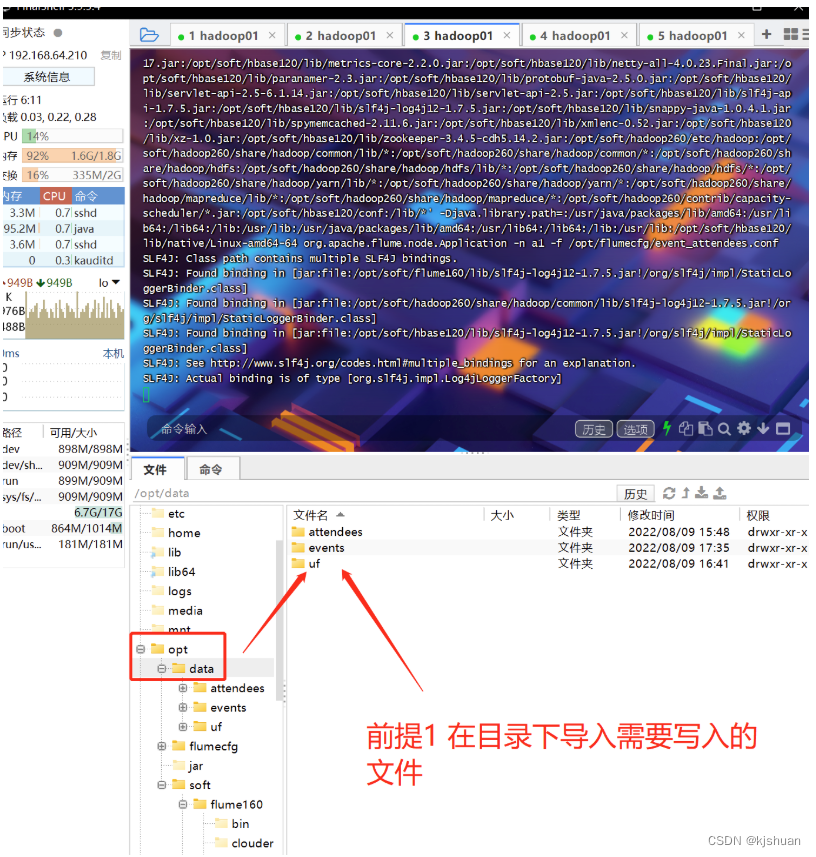

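Prerequisites

This walkthrough has two parts: 1.1 importing data into Kafka, and 1.2 reading the data with Flume. MySQL 5.7 must already be installed; see my previous article for that.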

    # 1. Extract the archives and move them into place
    cd /opt/jar
    tar -zxf flume-ng-1.6.0-cdh5.14.2.tar.gz
    mv apache-flume-1.6.0-cdh5.14.2-bin/ /opt/soft/flume160
    tar -zxf kafka_2.11-2.0.0.tgz
    mv kafka_2.11-2.0.0 /opt/soft/kafka200

    # 2. Edit the Kafka config file
    vim /opt/soft/kafka200/config/server.properties
    # ---- set these values in server.properties ----
    listeners=PLAINTEXT://192.168.64.210:9092
    log.dirs=/opt/soft/kafka200/data
    zookeeper.connect=192.168.64.210:2181
    # -----------------------------------------------

    # 3. Edit the Flume environment file
    cd /opt/soft/flume160/conf
    cp flume-env.sh.template flume-env.sh
    vim /opt/soft/flume160/conf/flume-env.sh
    # ---- add this line to flume-env.sh ----
    export JAVA_HOME=/opt/soft/jdk180
    # ---------------------------------------

    # 4. Set the environment variables
    vim /etc/profile
    # ---- append to /etc/profile ----
    # kafka env
    export KAFKA_HOME=/opt/soft/kafka200
    export PATH=$PATH:$KAFKA_HOME/bin
    # flume env
    export FLUME_HOME=/opt/soft/flume160
    export PATH=$PATH:$FLUME_HOME/bin
    # --------------------------------
    # Apply the changes to the current shell
    source /etc/profile

    # 5. Start ZooKeeper
    zkServer.sh start

    # 6. Start Kafka
    kafka-server-start.sh /opt/soft/kafka200/config/server.properties

    # Create the topic with 3 partitions
    kafka-topics.sh --create --zookeeper 192.168.64.210:2181 --topic event_attendees_raw --replication-factor 1 --partitions 3
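Before starting the broker, it may be worth confirming that the properties edited in step 2 are actually present and uncommented. The following is a minimal sketch, not part of the original setup: `check_props` is a hypothetical helper, and the temporary file only stands in for `/opt/soft/kafka200/config/server.properties`.

```shell
# check_props FILE KEY...
# Hypothetical helper (not part of Kafka): prints every KEY that has no
# uncommented "KEY=..." line in FILE and returns non-zero if any is missing.
check_props() {
  file="$1"
  shift
  missing=0
  for key in "$@"; do
    if ! grep -q "^${key}=" "$file"; then
      echo "missing: ${key}"
      missing=1
    fi
  done
  return "$missing"
}

# Demo on a throwaway file holding the settings from step 2.
props=$(mktemp)
cat > "$props" <<'EOF'
listeners=PLAINTEXT://192.168.64.210:9092
log.dirs=/opt/soft/kafka200/data
#delete.topic.enable=true
zookeeper.connect=192.168.64.210:2181
EOF

check_props "$props" listeners log.dirs zookeeper.connect && echo "all set"
# A commented-out key counts as missing.
check_props "$props" delete.topic.enable || true
```

On the real machine the first argument would be the path to `server.properties` instead of the temporary file.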

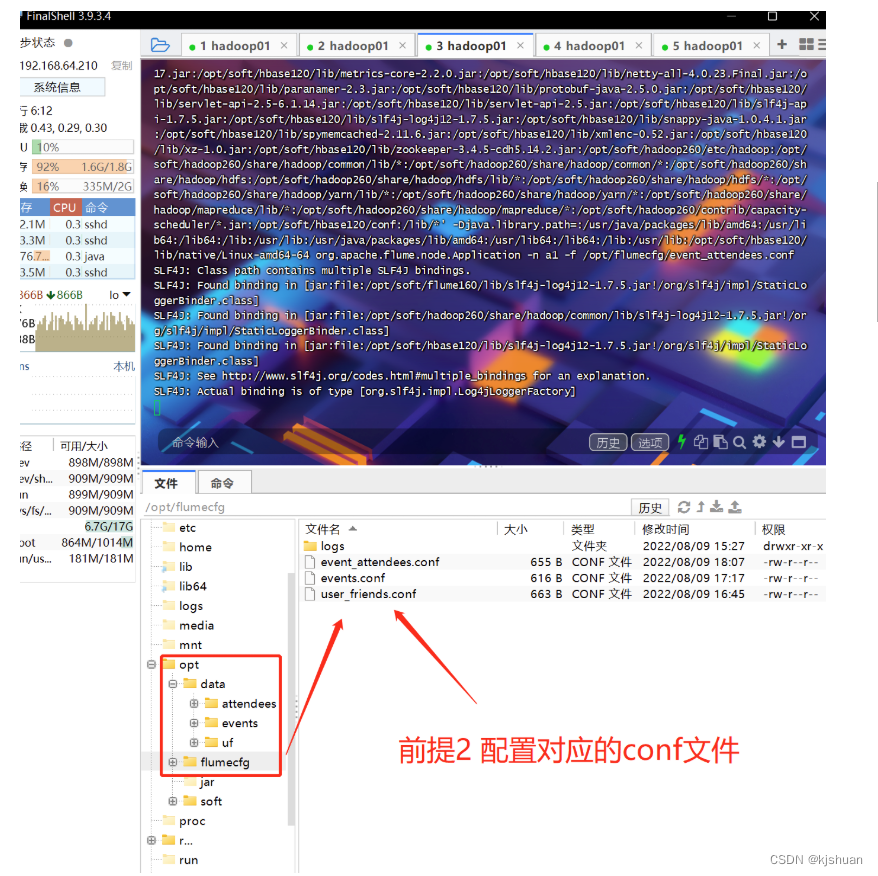

Configuring the Flume conf files

    # Check the size of the source file
    cd /opt/data/attendees/
    ls
    wc -l event_attendees.csv.COMPLETED
    cat event_attendees.csv.COMPLETED

    # First config file (event_attendees.conf)
    a1.channels = c1
    a1.sources = s1
    a1.sinks = k1

    a1.sources.s1.type = spooldir
    a1.sources.s1.channels = c1
    a1.sources.s1.spoolDir = /opt/data/attendees
    a1.sources.s1.deserializer.maxLineLength = 120000
    # Drop lines that match event.* (the CSV header)
    a1.sources.s1.interceptors = i1
    a1.sources.s1.interceptors.i1.type = regex_filter
    a1.sources.s1.interceptors.i1.regex = event.*
    a1.sources.s1.interceptors.i1.excludeEvents = true

    a1.channels.c1.type = memory

    a1.sinks.k1.channel = c1
    a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
    a1.sinks.k1.kafka.topic = event_attendees_raw
    a1.sinks.k1.kafka.bootstrap.servers = 192.168.64.210:9092
    a1.sinks.k1.kafka.flumeBatchSize = 20
    a1.sinks.k1.kafka.producer.acks = 1

    # Second config file (user_friends.conf)
    a1.channels = c1
    a1.sources = s1
    a1.sinks = k1

    a1.sources.s1.type = spooldir
    a1.sources.s1.channels = c1
    a1.sources.s1.spoolDir = /opt/data/uf
    a1.sources.s1.deserializer.maxLineLength = 60000
    # Same filter as above: drops lines that match event.*
    # (check that it fits the header of this file)
    a1.sources.s1.interceptors = i1
    a1.sources.s1.interceptors.i1.type = regex_filter
    a1.sources.s1.interceptors.i1.regex = event.*
    a1.sources.s1.interceptors.i1.excludeEvents = true

    a1.channels.c1.type = memory

    a1.sinks.k1.channel = c1
    a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
    a1.sinks.k1.kafka.topic = user_friends_raw
    a1.sinks.k1.kafka.bootstrap.servers = 192.168.64.210:9092
    a1.sinks.k1.kafka.flumeBatchSize = 20
    a1.sinks.k1.kafka.producer.acks = 1
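To see what the `regex_filter` interceptor with `excludeEvents=true` does to the input, its effect can be approximated with `grep -v` outside Flume. This is only a rough sketch: the sample rows are made up, `filter_header` is a hypothetical name, and Flume applies a Java regular expression to each event body rather than running `grep`.

```shell
# Made-up sample resembling a CSV whose header line starts with "event".
sample=$(mktemp)
printf '%s\n' \
  'event,yes,maybe,invited,no' \
  '1001,11 12,13,14 15,16' \
  '1002,21,22 23,24,25' > "$sample"

# excludeEvents=true drops every event whose body matches the regex,
# so only the non-matching lines reach the channel. grep -v mimics that.
filter_header() {
  grep -Ev 'event.*' "$1"
}

# Prints the two data rows; the header row is filtered out.
filter_header "$sample"
```

Running the same `grep` against the first few lines of each real CSV is a cheap way to check that the pattern removes the header and nothing else.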

    # Count the lines in the file
    cd /opt/data/uf
    wc -l user_friends.csv
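The `deserializer.maxLineLength` values used above (120000 and 60000) should be at least as large as the longest line in each file, since the line deserializer splits lines that exceed the limit. One way to check is sketched below with a made-up sample in place of the real `user_friends.csv`; `longest_line` is a hypothetical helper.

```shell
# longest_line FILE: print the length of the longest line in FILE.
longest_line() {
  awk '{ if (length($0) > max) max = length($0) } END { print max + 0 }' "$1"
}

# Made-up sample; on the real machine pass /opt/data/uf/user_friends.csv.
sample=$(mktemp)
printf '%s\n' 'user,friends' '1,2 3 4 5 6' '7,8 9' > "$sample"

longest_line "$sample"   # prints 12 for this sample
```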

Uploading events to Kafka

    # Third config file (events.conf)
    a1.channels = c1
    a1.sources = s1
    a1.sinks = k1

    a1.sources.s1.type = spooldir
    a1.sources.s1.channels = c1
    a1.sources.s1.spoolDir = /opt/data/events
    # Drop lines that match event.* (the CSV header)
    a1.sources.s1.interceptors = i1
    a1.sources.s1.interceptors.i1.type = regex_filter
    a1.sources.s1.interceptors.i1.regex = event.*
    a1.sources.s1.interceptors.i1.excludeEvents = true

    a1.channels.c1.type = memory

    a1.sinks.k1.channel = c1
    a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
    a1.sinks.k1.kafka.topic = events_raw
    a1.sinks.k1.kafka.bootstrap.servers = 192.168.64.210:9092
    a1.sinks.k1.kafka.flumeBatchSize = 20
    a1.sinks.k1.kafka.producer.acks = 1
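The three agent files differ only in the spool directory, the topic, and the optional `maxLineLength`, so they could be generated from one template. The sketch below is one possible way to do that, not the method used in this article; `gen_flume_conf` is a hypothetical helper that prints a config to standard output.

```shell
# gen_flume_conf SPOOL_DIR TOPIC [MAX_LINE_LENGTH]
# Hypothetical helper: prints a spooldir-to-Kafka agent config to stdout.
gen_flume_conf() {
  spool_dir="$1"
  topic="$2"
  max_len="${3:-}"

  cat <<EOF
a1.channels = c1
a1.sources = s1
a1.sinks = k1

a1.sources.s1.type = spooldir
a1.sources.s1.channels = c1
a1.sources.s1.spoolDir = ${spool_dir}
EOF

  # maxLineLength is only written when a third argument is given.
  if [ -n "$max_len" ]; then
    echo "a1.sources.s1.deserializer.maxLineLength = ${max_len}"
  fi

  cat <<EOF
a1.sources.s1.interceptors = i1
a1.sources.s1.interceptors.i1.type = regex_filter
a1.sources.s1.interceptors.i1.regex = event.*
a1.sources.s1.interceptors.i1.excludeEvents = true

a1.channels.c1.type = memory

a1.sinks.k1.channel = c1
a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.k1.kafka.topic = ${topic}
a1.sinks.k1.kafka.bootstrap.servers = 192.168.64.210:9092
a1.sinks.k1.kafka.flumeBatchSize = 20
a1.sinks.k1.kafka.producer.acks = 1
EOF
}

# Print the events config; redirect the output to a file to save it.
gen_flume_conf /opt/data/events events_raw
```

For example, `gen_flume_conf /opt/data/uf user_friends_raw 60000 > /opt/flumecfg/user_friends.conf` would write the second config with its line-length limit.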

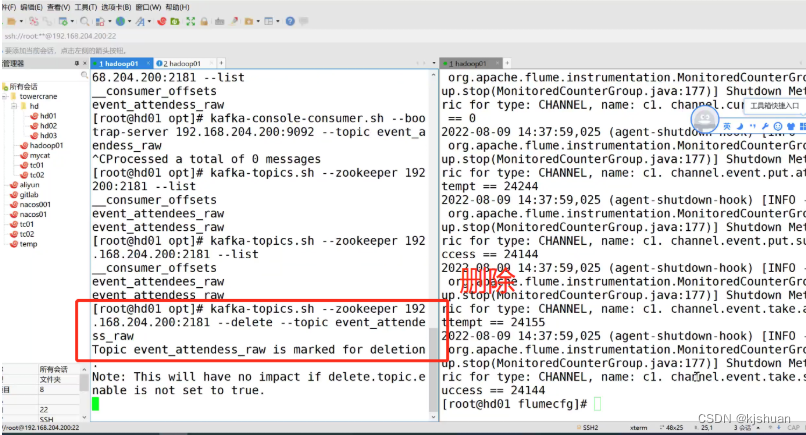

Deleting a topic

    # delete.topic.enable must be true for deletion to work
    vim /opt/soft/kafka200/config/server.properties
    delete.topic.enable=true

    # List the topics
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --list

    # Delete a topic
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --topic events_raw --delete

    # Look at the file header
    cd /opt/data/events
    head -n 1 events.csv.COMPLETED
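After issuing a delete, it can help to check the `--list` output again to confirm the topic is really gone. A minimal sketch, assuming the list is piped in on standard input; `topic_exists` is a hypothetical helper and the sample listing is made up:

```shell
# topic_exists NAME: succeed if NAME appears as a whole line on stdin.
topic_exists() {
  grep -Fxq -- "$1"
}

# Made-up output of: kafka-topics.sh --zookeeper 192.168.64.210:2181 --list
listing='__consumer_offsets
event_attendees_raw
user_friends_raw'

if printf '%s\n' "$listing" | topic_exists events_raw; then
  echo "events_raw still exists"
else
  echo "events_raw is gone"
fi
```

On the real cluster the `printf` would be replaced by the `kafka-topics.sh ... --list` command itself. The whole-line match keeps `user_friends` from matching `user_friends_raw`.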

Ingesting the events file

    # Step 1: watch the topic with a console consumer (keep it running in its own terminal)
    kafka-console-consumer.sh --bootstrap-server 192.168.64.210:9092 --topic events_raw

    # Step 2: start the Flume agent so it begins writing the file to Kafka
    flume-ng agent -n a1 -c /opt/soft/flume160/conf/ -f /opt/flumecfg/events.conf -Dflume.root.logger=INFO,console

    # Step 3: check how much data is in Kafka
    kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.64.210:9092 --topic events_raw

    # List the topics
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --list

    # Delete the topic
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --topic events_raw --delete

    # Inspect the data directory after deletion (log.dirs from server.properties)
    cd /opt/soft/kafka200/data
    ls
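`GetOffsetShell` prints one `topic:partition:offset` line per partition, so for a topic that started out empty the number of ingested messages is the sum of the third field. The sketch below adds the offsets up with `awk`; `sum_offsets` is a hypothetical helper and the sample output is made up for illustration.

```shell
# sum_offsets: read "topic:partition:offset" lines on stdin, print the total.
sum_offsets() {
  awk -F: '{ total += $3 } END { print total + 0 }'
}

# Made-up GetOffsetShell output for a topic with three partitions.
printf '%s\n' \
  'events_raw:0:1000' \
  'events_raw:1:1001' \
  'events_raw:2:999' | sum_offsets   # prints 3000
```

On the real cluster the step 3 command would be piped into `sum_offsets`, and the result compared with the `wc -l` count of the source file minus the filtered header line.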

Ingesting the user_friends file

    # Step 1: watch the topic with a console consumer (keep it running in its own terminal)
    kafka-console-consumer.sh --bootstrap-server 192.168.64.210:9092 --topic user_friends_raw

    # Step 2: start the Flume agent so it begins writing the file to Kafka
    flume-ng agent -n a1 -c /opt/soft/flume160/conf/ -f /opt/flumecfg/user_friends.conf -Dflume.root.logger=INFO,console

    # Step 3: check how much data is in Kafka
    kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.64.210:9092 --topic user_friends_raw

    # List the topics
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --list

    # Delete the topic
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --topic user_friends_raw --delete

    # Inspect the data directory after deletion (log.dirs from server.properties)
    cd /opt/soft/kafka200/data
    ls

Ingesting the event_attendees file

    # Step 1: watch the topic with a console consumer (keep it running in its own terminal)
    kafka-console-consumer.sh --bootstrap-server 192.168.64.210:9092 --topic event_attendees_raw

    # Step 2: start the Flume agent so it begins writing the file to Kafka
    flume-ng agent -n a1 -c /opt/soft/flume160/conf/ -f /opt/flumecfg/event_attendees.conf -Dflume.root.logger=INFO,console

    # Step 3: check how much data is in Kafka
    kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list 192.168.64.210:9092 --topic event_attendees_raw

    # List the topics
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --list

    # Delete the topic
    kafka-topics.sh --zookeeper 192.168.64.210:2181 --topic event_attendees_raw --delete

    # Inspect the data directory after deletion (log.dirs from server.properties)
    cd /opt/soft/kafka200/data
    ls
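The three ingest sections repeat the same steps with a different dataset name each time. As a convenience, the commands could be printed by a small helper like the one sketched here. `print_ingest_cmds` is hypothetical, it only echoes the commands without running them, and it assumes the config lives at `/opt/flumecfg/NAME.conf` and the topic is called `NAME_raw`.

```shell
# print_ingest_cmds NAME: print (do not run) the three commands for one dataset.
# Assumes config file /opt/flumecfg/NAME.conf and topic NAME_raw.
print_ingest_cmds() {
  name="$1"
  broker="192.168.64.210:9092"
  echo "kafka-console-consumer.sh --bootstrap-server ${broker} --topic ${name}_raw"
  echo "flume-ng agent -n a1 -c /opt/soft/flume160/conf/ -f /opt/flumecfg/${name}.conf -Dflume.root.logger=INFO,console"
  echo "kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list ${broker} --topic ${name}_raw"
}

print_ingest_cmds user_friends
```

Printing instead of executing keeps the helper safe to try anywhere; the consumer and the agent both block, so each printed command would still be pasted into its own terminal.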