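PyTorch version:

The workflow consists of the following steps:

1. Load the dataset (you can use your own dataset here, but remember to adjust the parameters h, w, and channel)

2. Define the neural network model (two ways of writing it are shown)

3. Check the model

4. Move the model to the GPU

5. Loss function and optimizer

6. Training

7. Plot the loss curve

8. Evaluate the model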

# LeNet-5 network structure

import torch.nn as nn

import torch

import torchvision

from torchvision import transforms

from torch.utils import data

import matplotlib.pyplot as plt

import torch.nn.functional as F

# Define the dataset-loading function
def load_data_mnist(batch_size):
    '''Download the MNIST dataset and load it into memory.'''
    train_dataset = torchvision.datasets.MNIST(root='dataset', train=True, transform=transforms.ToTensor(), download=True)
    test_dataset = torchvision.datasets.MNIST(root='dataset', train=False, transform=transforms.ToTensor(), download=True)
    return (data.DataLoader(train_dataset, batch_size, shuffle=True),
            data.DataLoader(test_dataset, batch_size, shuffle=False))

# LeNet-5 on the MNIST dataset
batch_size = 64
train_iter, test_iter = load_data_mnist(batch_size=batch_size)
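Step 1 mentions that your own dataset can be used instead of MNIST. The following is a minimal sketch of one way to do that, assuming the data is already held in memory as tensors. The helper name `load_data_custom`, the random placeholder tensors, and the sizes are illustrative assumptions, not part of the original code.

```python
import torch
from torch.utils import data

def load_data_custom(images, labels, batch_size):
    """Wrap in-memory tensors in a DataLoader (hypothetical helper)."""
    dataset = data.TensorDataset(images, labels)
    return data.DataLoader(dataset, batch_size, shuffle=True)

# Placeholder data: 256 single-channel 28x28 images with labels in [0, 10).
channel, h, w = 1, 28, 28
images = torch.rand(256, channel, h, w)
labels = torch.randint(0, 10, (256,))

custom_iter = load_data_custom(images, labels, batch_size=64)
x, y = next(iter(custom_iter))
print(x.shape, y.shape)  # one batch of images and one batch of labels
```

If channel, h, or w differ from MNIST, the `in_channels` of the first convolution and the input size of the first linear layer have to change to match.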

###########################################################################

# First form of the neural network model
# net = nn.Sequential(
#     nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),
#     nn.AvgPool2d(kernel_size=2, stride=2),
#     nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),
#     nn.AvgPool2d(kernel_size=2, stride=2), nn.Flatten(),
#     nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(),
#     nn.Linear(120, 84), nn.Sigmoid(),
#     nn.Linear(84, 10))

# Second form of the neural network model
class net1(nn.Module):
    def __init__(self):
        super(net1, self).__init__()
        self.C1 = nn.Conv2d(1, 6, kernel_size=5, padding=2)
        self.C2 = nn.Conv2d(6, 16, kernel_size=5)
        self.linear1 = nn.Linear(16 * 5 * 5, 120)
        self.linear2 = nn.Linear(120, 84)
        self.linear3 = nn.Linear(84, 10)

    def forward(self, x):
        n = self.C1(x)
        n = nn.Sigmoid()(n)
        n = nn.AvgPool2d(kernel_size=2, stride=2)(n)
        n = self.C2(n)
        n = nn.Sigmoid()(n)
        n = nn.AvgPool2d(kernel_size=2, stride=2)(n)
        n = nn.Flatten()(n)
        n = self.linear1(n)
        n = nn.Sigmoid()(n)
        n = self.linear2(n)
        n = nn.Sigmoid()(n)
        n = self.linear3(n)
        return n

net = net1()
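The `16 * 5 * 5` input size of `linear1` follows from the 28x28 input and the layer settings above. As a rough sketch of that arithmetic, the helpers below (hypothetical names `conv_out` and `lenet_flat_features`) apply the standard output-size formula for convolution and pooling, assuming dilation 1 and floor rounding, so the value can be recomputed when h and w change.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Output length of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def lenet_flat_features(h, w):
    """Flattened feature count entering linear1 for an h x w input."""
    dims = []
    for size in (h, w):
        size = conv_out(size, kernel=5, padding=2)   # C1
        size = conv_out(size, kernel=2, stride=2)    # first AvgPool2d
        size = conv_out(size, kernel=5)              # C2
        size = conv_out(size, kernel=2, stride=2)    # second AvgPool2d
        dims.append(size)
    return 16 * dims[0] * dims[1]                    # C2 has 16 output channels

print(lenet_flat_features(28, 28))  # 400, i.e. 16 * 5 * 5
print(lenet_flat_features(32, 32))  # 576, i.e. 16 * 6 * 6
```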

######################################################################

# Check the model
x = torch.rand(size=(1, 1, 28, 28), dtype=torch.float32)

# For the first form of the model
# for layer in net:
#     x = layer(x)
#     print(layer.__class__.__name__, 'output shape:\t', x.shape)

# For the second form of the model
model_modules = [m for m in net.modules()]
print(model_modules)
print(len(model_modules))
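The layer-by-layer loop only works for the `nn.Sequential` form, and listing `net.modules()` shows the layers but not their output shapes. One possible way to get the shapes for a class-based model is to attach forward hooks, sketched below. `LeNetSketch` is a compact stand-in with the same layer sizes, written here only so the example is self-contained; `make_hook` and `shapes` are illustrative names.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class LeNetSketch(nn.Module):
    """Compact stand-in for the class-based model (same layer sizes)."""
    def __init__(self):
        super().__init__()
        self.C1 = nn.Conv2d(1, 6, kernel_size=5, padding=2)
        self.C2 = nn.Conv2d(6, 16, kernel_size=5)
        self.linear1 = nn.Linear(16 * 5 * 5, 120)
        self.linear2 = nn.Linear(120, 84)
        self.linear3 = nn.Linear(84, 10)

    def forward(self, x):
        x = F.avg_pool2d(torch.sigmoid(self.C1(x)), kernel_size=2, stride=2)
        x = F.avg_pool2d(torch.sigmoid(self.C2(x)), kernel_size=2, stride=2)
        x = torch.flatten(x, start_dim=1)
        x = torch.sigmoid(self.linear1(x))
        x = torch.sigmoid(self.linear2(x))
        return self.linear3(x)

model = LeNetSketch()
shapes = {}

def make_hook(name):
    # A forward hook is called with (module, inputs, output) after each forward call.
    def hook(module, inputs, output):
        shapes[name] = tuple(output.shape)
    return hook

handles = [m.register_forward_hook(make_hook(name))
           for name, m in model.named_children()]

with torch.no_grad():
    model(torch.rand(1, 1, 28, 28))

for handle in handles:
    handle.remove()  # detach the hooks once the shapes are recorded

for name, shape in shapes.items():
    print(name, 'output shape:\t', shape)
```

Only layers registered as attributes in `__init__` are seen by the hooks; activations and pooling applied inside `forward` do not appear in the listing.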

####################################################################

# Get the GPU device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(device)

# Move the network to the GPU
net.to(device)

######################################################################

# Loss function
loss_function = nn.CrossEntropyLoss()

# Optimizer
optimizer = torch.optim.Adam(net.parameters())
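`nn.CrossEntropyLoss` works on the raw logits from the last linear layer: it applies log-softmax and then takes the negative log-likelihood of the true class, averaged over the batch by default. The plain-Python sketch below reproduces that computation for a single sample; the function name `cross_entropy` and the example logits are made up for illustration.

```python
import math

def cross_entropy(logits, target):
    """Cross-entropy of one sample: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

# Equal logits over 10 classes mean the model is maximally unsure,
# so the loss is ln(10), about 2.30.
uniform_loss = cross_entropy([0.0] * 10, target=3)
print(round(uniform_loss, 4))

# A confident, correct prediction gives a loss close to 0.
confident_loss = cross_entropy([10.0 if i == 3 else 0.0 for i in range(10)], target=3)
print(confident_loss < 0.01)
```

A loss near 2.3 in the first training batches is therefore what an untrained 10-class classifier would be expected to show.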

#####################################################################

# Start training
num_epochs = 10
train_loss = []

for epoch in range(num_epochs):
    for batch_idx, (x, y) in enumerate(train_iter):
        # x = x.view(x.size(0), 28 * 28)
        # Move the inputs and labels to the GPU
        x, y = x.to(device), y.to(device)  # x: inputs, y: labels
        out = net(x)
        # Cross-entropy loss; nn.CrossEntropyLoss takes raw logits and integer
        # class labels, so no one-hot encoding is needed
        loss = loss_function(out, y)
        # Clear the gradients
        optimizer.zero_grad()
        loss.backward()
        # Update the parameters (for plain SGD: w' = w - lr * grad)
        optimizer.step()
        train_loss.append(loss.item())
        if batch_idx % 10 == 0:
            print(epoch, batch_idx, loss.item())
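The loop calls `optimizer.zero_grad()` before every `loss.backward()` because PyTorch adds new gradients to whatever is already stored in `.grad`. A small sketch of that behaviour on a single scalar, with made-up values:

```python
import torch

w = torch.tensor(1.0, requires_grad=True)

(2 * w).backward()
first = w.grad.item()        # gradient of 2*w with respect to w

(2 * w).backward()           # no reset in between: the new gradient is added
accumulated = w.grad.item()

# Reset the stored gradient. optimizer.zero_grad() plays this role in the loop
# (recent PyTorch versions reset to None instead of zero by default).
w.grad.zero_()
cleared = w.grad.item()

print(first, accumulated, cleared)  # 2.0 4.0 0.0
```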

####################################################################

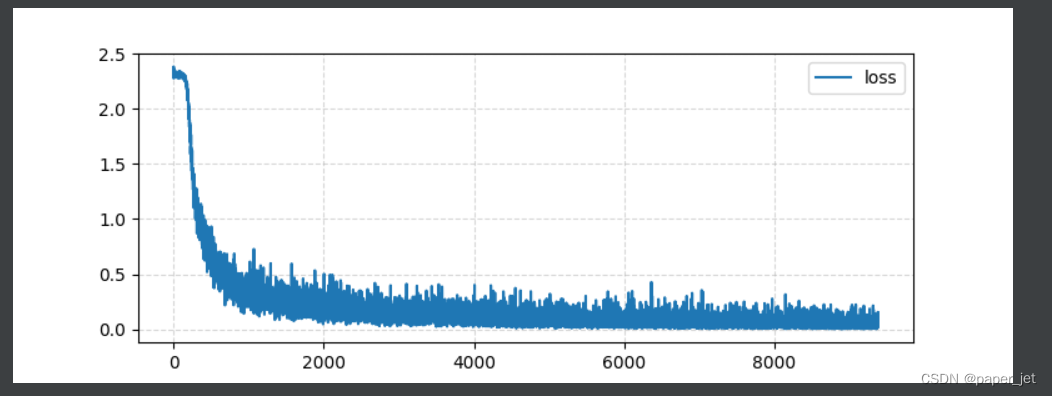

# Plot the loss curve

plt.figure(figsize=(8,3))

plt.grid(True,linestyle='--',alpha=0.5)

plt.plot(train_loss,label='loss')

plt.legend(loc="best")

plt.show()
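Because `train_loss` stores one value per batch, the plotted curve can look noisy. One optional way to make the trend easier to read is a moving average, sketched below. The helper name `moving_average` and the demo values are assumptions for illustration and are not part of the original code.

```python
def moving_average(values, window):
    """Average each run of `window` consecutive values."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    averaged = [running / window]
    for i in range(window, len(values)):
        running += values[i] - values[i - window]  # slide the window by one
        averaged.append(running / window)
    return averaged

demo_loss = [4.0, 2.0, 3.0, 1.0, 2.0]  # made-up values, not real training output
print(moving_average(demo_loss, window=2))  # [3.0, 2.5, 2.0, 1.5]
```

With the training results, this could be used as `plt.plot(moving_average(train_loss, 50), label='smoothed loss')`, where the window of 50 is an arbitrary choice.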

################################################

# Evaluate the model
total_correct = 0
with torch.no_grad():  # gradients are not needed during evaluation
    for batch_idx, (x, y) in enumerate(test_iter):
        # x = x.view(x.size(0), 28 * 28)
        # Move the inputs and labels to the GPU
        x, y = x.to(device), y.to(device)  # x: inputs, y: labels
        out = net(x)
        pred = out.argmax(dim=1)
        correct = pred.eq(y).sum().float().item()
        total_correct += correct

total_num = len(test_iter.dataset)
test_acc = total_correct / total_num
print(total_correct, total_num)
print("test acc:", test_acc)
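The accuracy computation relies on `argmax` to pick the predicted class and `eq` to compare it with the label. A tiny sketch with made-up logits for three samples and two classes shows what each step produces:

```python
import torch

# Made-up logits for 3 samples and 2 classes, plus their true labels.
out = torch.tensor([[0.1, 2.0],
                    [1.5, 0.2],
                    [0.3, 0.9]])
y = torch.tensor([1, 0, 0])

pred = out.argmax(dim=1)            # index of the largest logit in each row
correct = pred.eq(y).sum().item()   # number of positions where pred equals y

print(pred.tolist(), correct, correct / len(y))  # 2 of the 3 predictions match
```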

Matplotlib output:

#############################################################################

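If the model being trained is large and made up of several modules, you can nest subclasses of nn.Module and combine them into one complete model.

For example:

Here the nn.Conv2d(1, 6, kernel_size=5, padding=2) layer is split out into its own module, and the net1 class calls the net2 class to build the complete model.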

class net2(nn.Module):
    def __init__(self):
        super(net2, self).__init__()
        self.CCC1 = nn.Conv2d(1, 6, kernel_size=5, padding=2)

    def forward(self, x):
        x = self.CCC1(x)
        return x

class net1(nn.Module):
    def __init__(self):
        super(net1, self).__init__()
        # self.C1 = nn.Conv2d(1, 6, kernel_size=5, padding=2)
        self.net22 = net2()
        self.C2 = nn.Conv2d(6, 16, kernel_size=5)
        self.linear1 = nn.Linear(16 * 5 * 5, 120)
        self.linear2 = nn.Linear(120, 84)
        self.linear3 = nn.Linear(84, 10)

    def forward(self, x):
        # n = self.C1(x)
        n = self.net22(x)
        n = nn.Sigmoid()(n)
        n = nn.AvgPool2d(kernel_size=2, stride=2)(n)
        n = self.C2(n)
        n = nn.Sigmoid()(n)
        n = nn.AvgPool2d(kernel_size=2, stride=2)(n)
        n = nn.Flatten()(n)
        n = self.linear1(n)
        n = nn.Sigmoid()(n)
        n = self.linear2(n)
        n = nn.Sigmoid()(n)
        n = self.linear3(n)
        return n

net = net1()

############################################################################
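Nesting works because assigning an `nn.Module` instance to an attribute registers it as a submodule, so its weights are included in `parameters()` and reach the optimizer. The sketch below checks this with two small stand-in classes; `Inner` and `Outer` are hypothetical names that mirror `net2` and `net1` with fewer layers.

```python
import torch.nn as nn

class Inner(nn.Module):
    def __init__(self):
        super().__init__()
        self.CCC1 = nn.Conv2d(1, 6, kernel_size=5, padding=2)

    def forward(self, x):
        return self.CCC1(x)

class Outer(nn.Module):
    def __init__(self):
        super().__init__()
        self.net22 = Inner()                       # registered as a submodule
        self.C2 = nn.Conv2d(6, 16, kernel_size=5)

    def forward(self, x):
        return self.C2(self.net22(x))

outer = Outer()
names = [name for name, _ in outer.named_parameters()]
print(names)  # nested parameters are prefixed with the attribute path
```

The parameter names show the nesting path, for example `net22.CCC1.weight`, which is also how the keys appear in `state_dict()`.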

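Another, tidier way to check the model:

(this requires installing the torchsummary package)

from torchsummary import summary

# The first argument is the instantiated model; the second is the input size.
# batch_size defaults to -1 and does not need to be given, so (1, 28, 28) is (C, H, W).
# Put this statement after net.to(device); otherwise you get an error about the
# model and the input data being on different devices.
summary(net, (1, 28, 28))

Output table: it shows the output size and parameter count of each layer.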
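If installing torchsummary is not an option, the parameter counts can be listed with plain PyTorch. This is only a sketch of an alternative, not a replacement for the summary table: it rebuilds the `nn.Sequential` form of the network shown earlier under the assumed name `seq_net` and does not report output sizes.

```python
import torch.nn as nn

# The Sequential form of the network, rebuilt so the example is self-contained.
seq_net = nn.Sequential(
    nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
    nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2), nn.Flatten(),
    nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(),
    nn.Linear(120, 84), nn.Sigmoid(),
    nn.Linear(84, 10))

total = 0
for name, param in seq_net.named_parameters():
    # One row per weight or bias tensor: name, shape, number of elements.
    print(f'{name:12s} {str(tuple(param.shape)):18s} {param.numel():>7d}')
    total += param.numel()
print('total parameters:', total)  # 61706
```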

TensorFlow version:

import tensorflow as tf

# Load MNIST dataset

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Normalize pixel values to be between 0 and 1

x_train, x_test = x_train / 255.0, x_test / 255.0

# Define model architecture

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dropout(0.2),
    tf.keras.layers.Dense(10)
])

# Compile model
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

model.compile(optimizer='adam',
              loss=loss_fn,
              metrics=['accuracy'])

# Train model

model.fit(x_train, y_train, epochs=5)

# Evaluate model on test data

model.evaluate(x_test, y_test)
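Because the last `Dense(10)` layer has no activation and the loss is built with `from_logits=True`, the model outputs raw logits rather than probabilities. To read predictions as probabilities, a softmax has to be applied (in TensorFlow, `tf.nn.softmax` does this). The plain-Python sketch below shows the computation on made-up scores; the function name `softmax` and the numbers are illustrative only.

```python
import math

def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

demo_logits = [2.0, 1.0, 0.1]                 # made-up scores, not model output
probs = softmax(demo_logits)
print([round(p, 3) for p in probs])           # [0.659, 0.242, 0.099]
```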