机器视觉是人工智能正在快速发展的一个分支,简单说来机器视觉就是用机器代替人眼来做测量和判断。机器视觉系统是通过机器视觉产品(即图像摄取装置,分CMOS和CCD两种)将被摄取目标转换成图像信号,传送给专用的图像处理系统,得到被摄目标的形态信息,根据像素分布和亮度、颜色等信息,转变成数字化信号;图像系统对这些信号进行各种运算来抽取目标的特征,进而根据判别的结果来控制现场的设备动作。

机器视觉基础主要包含形状识别、颜色检测、颜色追踪、二维码识别等。

机器视觉系统最基本的特点就是提高生产的灵活性和自动化程度,在一些不适于人工作业的危险工作环境或者人工视觉难以满足要求的场合,常用机器视觉来替代人工视觉。同时在大批量重复性工业生产过程中,用机器视觉检测方法可以大大提高生产的效率和自动化程度。接下来我们将结合机器视觉基础,基于开源的机器人平台,进行形状识别、颜色检测、颜色追踪的应用开发。

1. 形状识别-识别圆形

实现思路

利用摄像头采集图片信息识别圆形,在界面上显示出圆的圆心坐标。

器材准备

摄像头、红色和绿色两种圆形图(如下图所示)。

操作步骤

① 下载文末资料中的参考程序visual_experiment_ws\src\shape_detection\scripts\shape_detection_victory.py:

#!/usr/bin/env python

#!-*-coding=utf-8 -*-

import rospy

from sensor_msgs.msg import Image

import cv2

import numpy as np

from cv_bridge import CvBridge, CvBridgeError

import sys

import time

lower_blue=np.array([100,43,46])

upper_blue=np.array([124,255,255])

#lower_blue=np.array([14,143,80])

#upper_blue=np.array([23,255,200])

#lower_red=np.array([0,200,55])

#upper_red=np.array([10,255,130])

#lower_red=np.array([0,150,55])

#upper_red=np.array([10,255,130])

lower_red=np.array([0,43,46])

upper_red=np.array([10,255,255])

lower_green=np.array([40,43,46])

upper_green=np.array([77,255,255])

font = cv2.FONT_HERSHEY_SIMPLEX # 设置字体样式

kernel = np.ones((5, 5), np.uint8) # 卷积核

img_pub = rospy.Publisher('webcam/image_raw', Image, queue_size=2)

#cap = cv2.VideoCapture(0)

def areaCal(contour):

area = 0

for i in range(len(contour)):

area += cv2.contourArea(contour[i])

return area

def image_callback(msg):

bridge = CvBridge()

frame = bridge.imgmsg_to_cv2(msg, "bgr8")

#cv2.imshow("source", frame)

#ret, frame = Video.read()

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) # 转换为灰色通道

hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV) # 转换为HSV空间

mask = cv2.inRange(hsv, lower_red, upper_red) # 设定掩膜取值范围 [消除噪声]

#mask = cv2.inRange(hsv, lower_green, upper_green) # 设定掩膜取值范围 [消除噪声]

opening = cv2.morphologyEx(mask, cv2.MORPH_OPEN, kernel) # 形态学开运算 [消除噪声]

bila = cv2.bilateralFilter(mask, 10, 200, 200) # 双边滤波消除噪声 [消除噪声]

edges = cv2.Canny(opening, 50, 100) # 边缘识别 [消除噪声]

mask_green = cv2.inRange(hsv,lower_green,upper_green)

opening_green = cv2.morphologyEx(mask_green, cv2.MORPH_OPEN, kernel)

bila_green = cv2.bilateralFilter(mask_green, 10, 200, 200)

edges_green = cv2.Canny(opening_green, 50, 100)

images,contourss,hierarchvs = cv2.findContours(edges_green,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

image,contours,hierarchv = cv2.findContours(edges,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

scaling_factor = 0.5

print "area_red=",areaCal(contours),"area_green=",areaCal(contourss)

if(areaCal(contours)>50):

#circles = cv2.HoughCircles(edges, cv2.HOUGH_GRADIENT, 1, 100, param1=100, param2=20, minRadius=20, maxRadius=500)

circles = cv2.HoughCircles(edges, cv2.HOUGH_GRADIENT, 1, 100, param1=100, param2=20, minRadius=0, maxRadius=0)

if circles is not None: # 如果识别出圆

#print "I found the red circle"

for circle in circles[0]:

x_red=int(circle[0])

y_red=int(circle[1])

r_red=int(circle[2])

cv2.circle(frame, (x_red, y_red), r_red, (0, 0, 255), 3) # 标记圆

cv2.circle(frame, (x_red, y_red), 3, (255, 255, 0), -1) # 标记圆心

text = 'circle' + 'x: '+str(x_red)+' y: '+str(y_red)

cv2.putText(frame, text, (10, 30), font, 1, (0, 255, 0), 2, cv2.LINE_AA, 0) # 显示圆心位置

if(areaCal(contourss)>1000):

#circles = cv2.HoughCircles(edges, cv2.HOUGH_GRADIENT, 1, 100, param1=100, param2=20, minRadius=20, maxRadius=500)

circles = cv2.HoughCircles(edges_green, cv2.HOUGH_GRADIENT, 1, 100, param1=100, param2=20, minRadius=0, maxRadius=0)

if circles is not None: # 如果识别出圆

#print "I found the green circle"

for circle in circles[0]:

x_red=int(circle[0])

y_red=int(circle[1])

r_red=int(circle[2])

cv2.circle(frame, (x_red, y_red), r_red, (0, 0, 255), 3) # 标记圆

cv2.circle(frame, (x_red, y_red), 3, (255, 255, 0), -1) # 标记圆心

text = 'circle' + 'x: '+str(x_red)+' y: '+str(y_red)

cv2.putText(frame, text, (10, 60), font, 1, (0, 255, 0), 2, cv2.LINE_AA, 0) # 显示圆心位置

frame = cv2.resize(frame,None,fx=scaling_factor,fy=scaling_factor,interpolation=cv2.INTER_AREA)

msg = bridge.cv2_to_imgmsg(frame, encoding="bgr8")

img_pub.publish(msg)

cv2.waitKey(3)

'''

rate = rospy.Rate(20) # 5hz

scaling_factor = 0.5

bridge = CvBridge()

count = 0

while not rospy.is_shutdown():

if (True):

count = count + 1

else:

rospy.loginfo("Capturing image failed.")

if count == 2:

count = 0

frame = cv2.resize(frame,None,fx=scaling_factor,fy=scaling_factor,interpolation=cv2.INTER_AREA)

msg = bridge.cv2_to_imgmsg(frame, encoding="bgr8")

img_pub.publish(msg)

rate.sleep()

'''

def webcamImagePub():

rospy.init_node('webcam_puber', anonymous=True)

# img_pub = rospy.Publisher('webcam/image_raw', Image, queue_size=2)

rospy.Subscriber("/image_raw", Image, image_callback)

rospy.spin()

if __name__ == '__main__':

try:

webcamImagePub()

except rospy.ROSInterruptException:

pass

finally:

webcamImagePub()

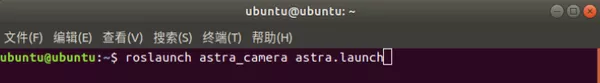

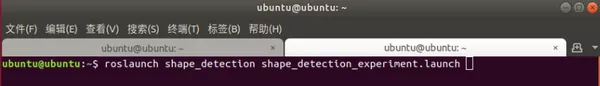

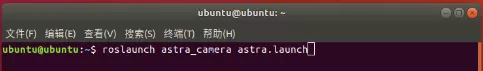

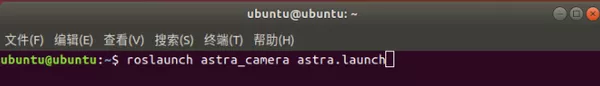

② 打开第一个终端(Ctrl+Alt+T)并输入:roslaunch astra_camera astra.launch 命令(见下图), 等待程序的运行。

打开第二个终端(Ctrl+Shift+T)并输入命令:roslaunch shape_detection shape_detection_experiment.launch,等待界面的启动。

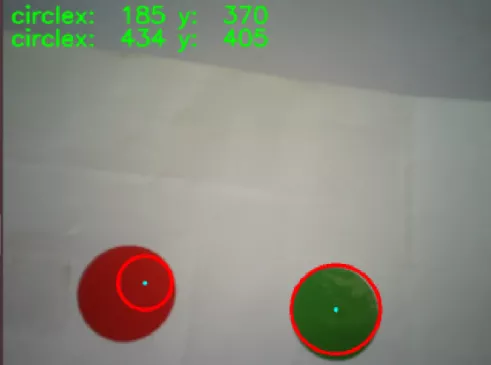

③ 放置待识别的圆形图(请把物品放置在摄像头可以采集到的区域),就可以在界面上看到识别结果。如下图所示是分别识别出红色圆形、绿色圆形轮廓,并显示识别出圆心的中心坐标x、y的值。

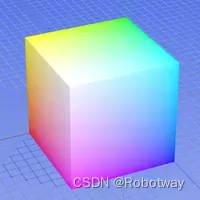

RGB:三原色(Red, Green, Blue)

原点到白色顶点的中轴线是灰度线,r、g、b三分量相等,强度可以由三分量的向量表示。

用RGB来理解色彩、深浅、明暗变化。

色彩变化: 三个坐标轴RGB最大分量顶点与黄紫青YMC色顶点的连线

深浅变化:RGB顶点和CMY顶点到原点和白色顶点的中轴线的距离

明暗变化:中轴线的点的位置,到原点,就偏暗,到白色顶点就偏亮

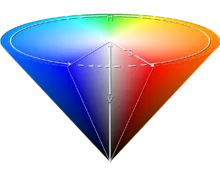

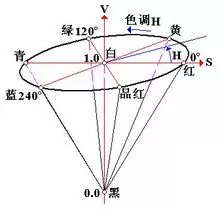

②. HSV模型

倒锥形模型:

这个模型就是按色彩、深浅、明暗来描述的。

H是色彩

S是深浅, S = 0时,只有灰度

V是明暗,表示色彩的明亮程度,但与光强无直接联系。

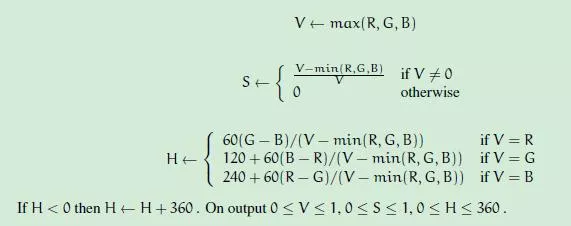

③. RGB与HSV的联系

从上面的直观的理解,把RGB三维坐标的中轴线立起来,并扁化,就能形成HSV的锥形模型了。

但V与强度无直接关系,因为它只选取了RGB的一个最大分量。而RGB则能反映光照强度(或灰度)的变化。

v = max(r, g, b)

由RGB到HSV的转换:

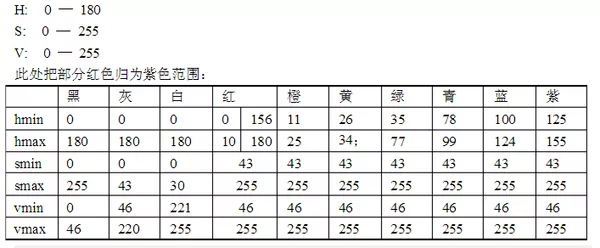

④.HSV色彩范围

操作步骤

① 连接电路:请把摄像头与主机进行连接。

② 下载文末资料中的参考程序visual_experiment_ws\src\color_detection\scripts\color_test_one.py:

#!/usr/bin/env python

#!-*-coding=utf-8 -*-

import rospy

from sensor_msgs.msg import Image

from cv_bridge import CvBridge, CvBridgeError

import cv2

import numpy as np

import serial

import time

import sys

from std_msgs.msg import UInt16

from std_msgs.msg import String

img_pub = rospy.Publisher('webcam/image_raw', Image, queue_size=2)

lower_blue=np.array([100,43,46])

upper_blue=np.array([124,255,255])

lower_red=np.array([0,43,46])

upper_red=np.array([10,255,255])

lower_green=np.array([40,43,46])

upper_green=np.array([77,255,255])

font = cv2.FONT_HERSHEY_SIMPLEX

kernel = np.ones((5, 5), np.uint8)

def areaCal(contour):

area = 0

for i in range(len(contour)):

area += cv2.contourArea(contour[i])

return area

def image_callback(msg):

bridge = CvBridge()

img = bridge.imgmsg_to_cv2(msg, "bgr8")

#cv2.imshow("source", img)

hsv = cv2.cvtColor(img,cv2.COLOR_BGR2HSV)

mask_red = cv2.inRange(hsv,lower_red,upper_red)

res = cv2.bitwise_and(img,img,mask=mask_red)

scaling_factor = 0.5

# cv2.imshow("res",res)

image,contours,hierarchv = cv2.findContours(mask_red,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

# print "red=",areaCal(contours)

if (areaCal(contours)>5000):

# print "red color"

text_red = 'the color is red'

cv2.putText(img, text_red, (10, 30), font, 1, (0, 0, 255), 2, cv2.LINE_AA, 0)

mask_blue= cv2.inRange(hsv, lower_blue, upper_blue)

res_yellow = cv2.bitwise_and(img,img,mask=mask_blue)

image,contours,hierarchv = cv2.findContours(mask_blue,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

if(areaCal(contours)>8000):

text_blue = 'the color is blue'

# print "blue color"

cv2.putText(img, text_blue, (10, 60), font, 1, (255, 0, 0), 2, cv2.LINE_AA, 0)

mask_green=cv2.inRange(hsv, lower_green,upper_green)

res_green = cv2.bitwise_and(img,img,mask=mask_blue)

image,contours,hierarchv = cv2.findContours(mask_green,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

if (areaCal(contours)>5000):

text_green = 'the color is green'

cv2.putText(img, text_green, (10, 90), font, 1, (0, 255, 0), 2, cv2.LINE_AA, 0)

# print ("green color")

# else:

# print( "NONE COLOR" )

frame = cv2.resize(img,None,fx=scaling_factor,fy=scaling_factor,interpolation=cv2.INTER_AREA)

msg = bridge.cv2_to_imgmsg(frame, encoding="bgr8")

img_pub.publish(msg)

cv2.waitKey(3)

# cv2.waitKey(1)

def main():

rospy.init_node('image_listener',anonymous=True)

#image_topic = "/camera/color/image_raw"

#rospy.Subscriber("/camera/color/image_raw", Image, image_callback)

rospy.Subscriber("/image_raw", Image, image_callback)

rospy.spin()

if __name__ == '__main__':

main()③ 打开终端(Ctrl+Alt+T),输入roslaunch astra_camera astra.launch(见下图), 等待程序的运行。

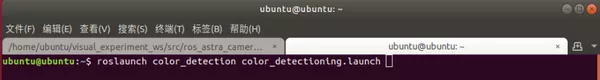

打开第二个终端(Ctrl+Shift+T)输入命令:roslaunch color_detection color_detectioning.launch,等待程序的运行。

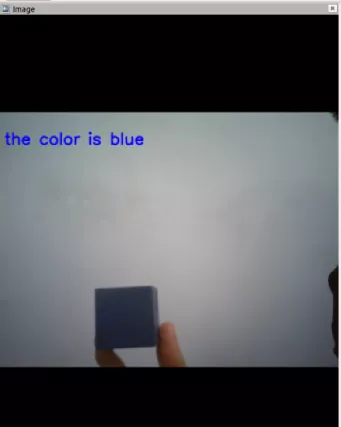

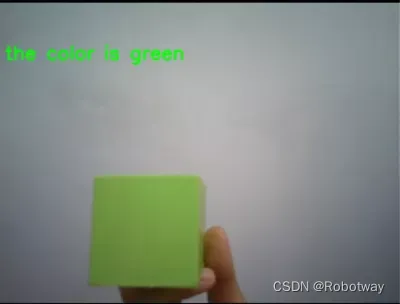

④ 界面启动后,放置物品(请把物品放置在摄像头可以采集到的区域),然后摄像头开始识别并在界面上显示识别结果。

下面以蓝色物品为例,当摄像头识别到蓝色物品后,在界面显示结果(the color is blue)。

3. 颜色追踪

实现思路

摄像头采集到红色物品后,通过串口通信来发布消息,黑手底盘订阅消息后进行相应的运动。

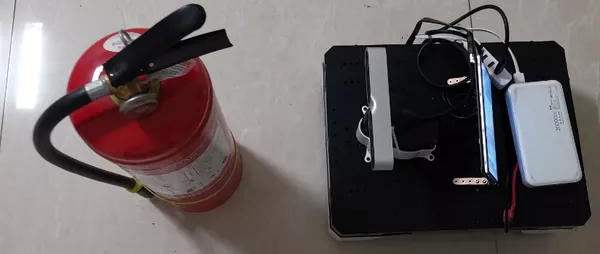

器材准备

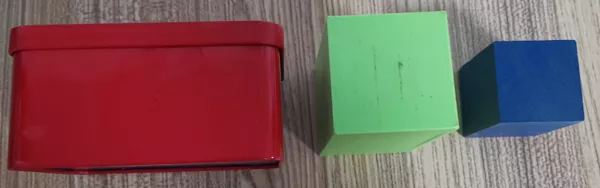

实验中需要用到的器材如下图所示(其中用红色的灭火器来代表待追踪的物体)。

操作步骤

① 下载文末资料中Ros通信的参考程序visual_experiment_ws\src\color_tracking\scripts\ros_arduino_translation_test.py:

#!/usr/bin/env python

#!coding=utf-8

import rospy

from sensor_msgs.msg import Image

from cv_bridge import CvBridge, CvBridgeError

import cv2

import numpy as np

import serial

import time

import sys

from std_msgs.msg import UInt16

from std_msgs.msg import String

#lower_blue=np.array([50,143,146])

#upper_blue=np.array([124,255,255])

#lower_blue=np.array([14,143,80])

#upper_blue=np.array([23,255,200])

lower_blue=np.array([100,43,46])

upper_blue=np.array([124,255,255])

#lower_red=np.array([0,200,55])

#upper_red=np.array([10,255,130])

#lower_red=np.array([0,150,55])

#upper_red=np.array([10,255,130])

lower_red=np.array([0,43,46])

upper_red=np.array([10,255,255])

lower_green=np.array([40,43,46])

upper_green=np.array([77,255,255])

#ser = serial.Serial('/dev/ttyACM0', 57600, timeout=2.0) #2020.8.28 source value=0.5

img_pub = rospy.Publisher('webcam/image_raw', Image, queue_size=2)

data_pub= rospy.Publisher('Color_center_point',String,queue_size=20)

red_flag=0

blue_flag=0

image_flags = 1

cx_string=""

cy_string=""

cx_cy_string=""

send_data = ""

count=0

scaling_factor = 1.0 #2020.8.28 source value=0.5

def areaCal(contour):

area = 0

for i in range(len(contour)):

area += cv2.contourArea(contour[i])

return area

def image_callback(msg):

global count,send_data

bridge = CvBridge()

frame = bridge.imgmsg_to_cv2(msg, "bgr8")

#cv2.imshow("source", frame)

hsv = cv2.cvtColor(frame,cv2.COLOR_BGR2HSV)

mask_red = cv2.inRange(hsv,lower_red,upper_red)

res = cv2.bitwise_and(frame,frame,mask=mask_red)

#cv2.imshow("res",res)

image,contours,hierarchv = cv2.findContours(mask_red,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

print "mianji=",areaCal(contours)

if (areaCal(contours)>5000):

if(areaCal(contours)>80000):

send_data="back"

#ser.write("back\n")

#print "back"

elif( areaCal(contours)<50000 and areaCal(contours)>5000 ):

send_data="forward"

#ser.write("forward\n")

#print "forward"

else:

send_data="state"

if len(contours) > 0:

c = max(contours, key=cv2.contourArea)

#print "c=",c

cnt=contours[0]

cv2.drawContours(frame, c, -1, (0, 0, 255), 3)#画轮廓

#cv2.imshow("drawcontours",frame)

M = cv2.moments(c)

if M["m00"] !=0:

cx = int(M['m10']/M['m00'])

cy = int(M['m01']/M['m00'])

center = (int(M["m10"] / M["m00"]), int(M["m01"] / M["m00"]))

cv2.circle(frame, (cx,cy), 8, (0, 255, 0), -1)

print "center=",center,"cx=",cx,"cy=",cy

cx_string=str(cx)

cy_string=str(cy)

#cx_cy_string=(cx_string + "-" + cy_string + "\n").encode('utf-8')

#cx_cy_string=(cx_string + "-" + cy_string + "-" + send_data + "\n").encode('utf-8')

cx_cy_string=(cx_string + "-" + cy_string + "+" + send_data ).encode('utf-8')

print (cx_cy_string)

data_pub.publish(cx_cy_string)

else:

cx=0

cy=0

else:

print "no red color something"

#cv2.imshow("chanle", img)

# if cv2.waitKey(1) & 0xFF == ord('q'):

# #break

# #continue

if(image_flags==1):

count = count+1

else:

rospy.loginfo("Capturing image failed.")

if count == 2:

count = 0

frame = cv2.resize(frame,None,fx=scaling_factor,fy=scaling_factor,interpolation=cv2.INTER_AREA)

msg = bridge.cv2_to_imgmsg(frame, encoding="bgr8")

img_pub.publish(msg)

cv2.waitKey(1)

def main():

rospy.init_node('image_listener',anonymous=True)

# img_pub = rospy.Publisher('webcam/image_raw', Image, queue_size=2)

rospy.Subscriber("/image_raw", Image, image_callback)

rospy.spin()

if __name__ == '__main__':

main()

下载文末资料中控制黑手底盘运动的参考程序visual_experiment_ws\src\color_tracking\arduino_program\Color_Tracking_Arduino_Program\Color_Tracking_Arduino_Program.ino:

/*

* rosserial Publisher Example

* Prints "hello world!"

*/

#include <ros.h>

#include <std_msgs/String.h>

#include <Arduino.h>

#include <stdio.h>

#include <string.h>

#define CTL_BAUDRATE 115200 //总线舵机波特率

#define mySerial Serial2

#define SerialBaudrate 57600

#define RGB_LED_NUMBERS 3

#define Bus_servo_Angle_inits 1500

#define ActionDelayTimes 1500

//

#define wheel_speed_forward 0.08 //car forward speed

#define wheel_speed_back -0.08 //car back speed

#define wheel_speed_stop 0.0 //car stop speed

#define wheel_speed_left 0.1 //car turnleft speed

#define wheel_speed_right -0.1 //car turnright speed

#define wheel_speed_left_translation 0.08 //speed of car left translation

#define wheel_speed_right_translation -0.08 //speed of car right translation

char cmd_return[200];

String receive_string="hello";

ros::NodeHandle nh;

void messageCb( const std_msgs::String &toggle_msg){

receive_string=toggle_msg.data;

}

ros::Subscriber<std_msgs::String> sub("Color_center_point", &messageCb );

enum{FORWARD=1,BACK,LEFT,RIGHT,LEFT_TRANSLATION,RIGHT_TRANSLATION,STOP}; //the movement state of the car

void setup()

{

delay(1100);

Serial.begin(SerialBaudrate);

mySerial.begin(CTL_BAUDRATE);

nh.initNode();

nh.subscribe(sub);

}

void loop()

{

if( (receive_string.length())<5 && (receive_string.length())>15 )

{

for(int i=0;i<1;i++){

break;

}

}

else{

int SpacePosition[2] = {0,0};

int Data_one = 0;

int Data_two = 0;

int numbers_left=0 ,numbers_right=0;

char num_left[32] = {};

char num_right[32] = {};

String x_data="";

String y_data="";

String z_data="";

String new_string = "";

SpacePosition[0] = receive_string.indexOf('-');

x_data = receive_string.substring(0,SpacePosition[0]);

//if(x_data.length()>=4){break;}

new_string = receive_string.substring(SpacePosition[0]+1);

SpacePosition[1] = new_string.indexOf('+');

y_data = new_string.substring(0,SpacePosition[1]);

z_data = new_string.substring(SpacePosition[1]+1);

Data_one = x_data.toInt();

Data_two = y_data.toInt();

//if( (Data_one<=120) && (z_data =="state") ){Car_Move(LEFT_TRANSLATION);}

if( (Data_one<=280) && (Data_one>=20)){Car_Move(LEFT_TRANSLATION);}

else if ( (Data_one>=360) && (Data_one<=600) ){Car_Move(RIGHT_TRANSLATION);}

else if( z_data == "forward" ){Car_Move(FORWARD);}

else if( z_data == "back" ){Car_Move(BACK );}

else {Car_Move(STOP);}

receive_string = "";

x_data="";

y_data="";

z_data="";

new_string="";

}

nh.spinOnce();

delay(100);

}② 打开终端(Alt+ctrl+T),输入roslaunch astra_camera astra.launch命令即可,见下图。

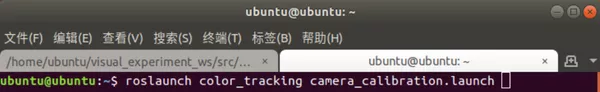

打开第二个终端(shift+ctrl+T),输入roslaunch color_tracking camera_calibration.launch 命令,见下图。

③ 移动灭火器,观察黑手底盘跟随灭火器运动的效果。

【注意:请把灭火器放置在摄像头可采集到的区域内;受硬件的影响,移动灭火器的速度建议稍微慢点,可以先把灭火器移动到一个位置,然后观察底盘追踪的效果】

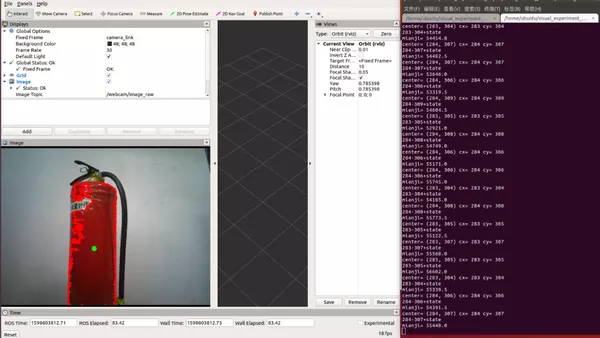

我们可以在rviz界面里看到摄像头采集到红色目标的中心坐标及面积,供追踪使用(如下图所示)。