Hello everyone, I'm Xiaoman.

Preface

Environment

- Python 3.8

- PyCharm

- requests >>> pip install requests

- pandas >>> pip install pandas

- jieba >>> pip install jieba

- stylecloud >>> pip install stylecloud

Code for fetching the danmaku (bullet comments)

Send the request

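import requests

# Disguise the request as a normal browser visit
headers = {
    # Basic browser information
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/105.0.0.0 Safari/537.36'
}

# Danmaku segment endpoint for one video (a plain string; no f-string is needed)
url = 'https://dm.video.qq.com/barrage/segment/u00445z1gcx/t/v1/0/30000'
response = requests.get(url, headers=headers)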
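The request above fetches a single segment. As a rough sketch, the URLs for several segments could be generated in a loop. Note the assumptions: the last two path components (`0/30000`) look like a start and end offset in milliseconds, but the source does not confirm this, and `build_segment_urls`, `duration_ms`, and `step_ms` are hypothetical names introduced only for illustration.

```python
from typing import List

# Base of the endpoint used in this post
BASE = 'https://dm.video.qq.com/barrage/segment'


def build_segment_urls(video_id: str, duration_ms: int, step_ms: int = 30000) -> List[str]:
    """Return one URL per time window.

    Assumption: the two trailing numbers are start/end offsets in milliseconds.
    """
    urls = []
    for start in range(0, duration_ms, step_ms):
        urls.append(f'{BASE}/{video_id}/t/v1/{start}/{start + step_ms}')
    return urls


# Hypothetical 90-second duration, just to show the shape of the output
urls = build_segment_urls('u00445z1gcx', 90000)
for u in urls:
    print(u)
```

Each generated URL could then be passed to `requests.get(url, headers=headers)` in turn, ideally with a short pause between requests.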

Get the data

# .text: the response body as a string
# .json(): the response body parsed into a dict, i.e. content wrapped in {"": "", "": ""}
json_data = response.json()
barrage_list = json_data['barrage_list']

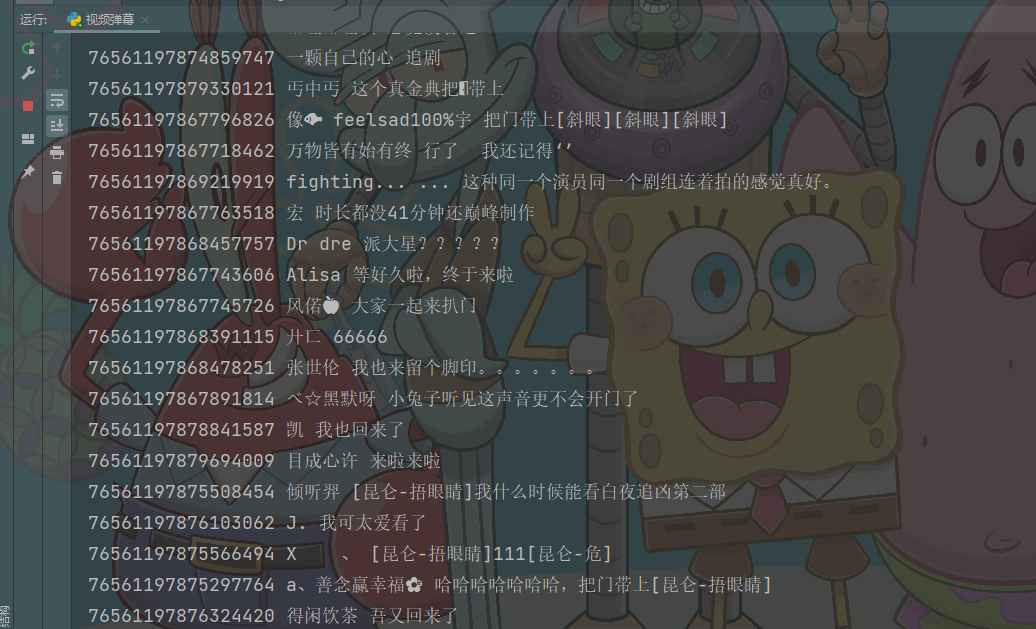

Parse the data

# Loop over every comment in the list
for item in barrage_list:
    content = item['content']
    nick = item['nick']
    id = item['id']
    print(id, nick, content)

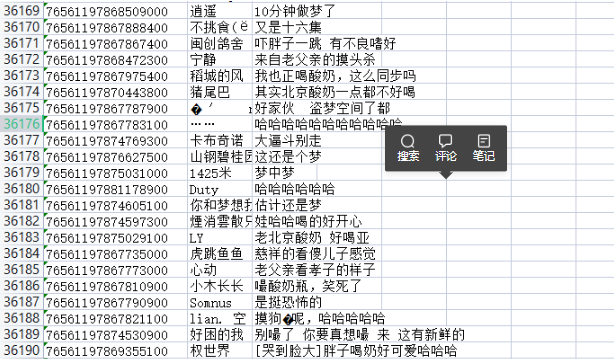
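If some entries turn out to be missing a field, indexing with `[...]` raises `KeyError`. Here is a minimal sketch of a more tolerant parser using `dict.get`. The function name `parse_barrages` and the sample data are made up for illustration; only the keys `barrage_list`, `id`, `nick`, and `content` come from the code above.

```python
from typing import Dict, List, Tuple


def parse_barrages(json_data: Dict) -> List[Tuple[str, str, str]]:
    """Collect (id, nick, content) tuples, using '' for any missing field."""
    rows = []
    for item in json_data.get('barrage_list', []):
        rows.append((
            item.get('id', ''),
            item.get('nick', ''),
            item.get('content', ''),
        ))
    return rows


# Made-up sample payload; the second entry deliberately has no 'nick'
sample = {'barrage_list': [
    {'id': '1', 'nick': 'user_a', 'content': 'hello'},
    {'id': '2', 'content': 'no nickname here'},
]}
rows = parse_barrages(sample)
print(rows)
```

Collecting the rows into a list first also makes it easy to save them all in one go later.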

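Save the danmaku

    # This block belongs inside the for loop above, so one row is appended per comment.
    # It needs `import csv` at the top of the script.
    with open('弹幕.csv', mode='a', encoding='utf-8-sig', newline='') as f:
        csv_writer = csv.writer(f)
        csv_writer.writerow([id, nick, content])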

Run the code to get the result:

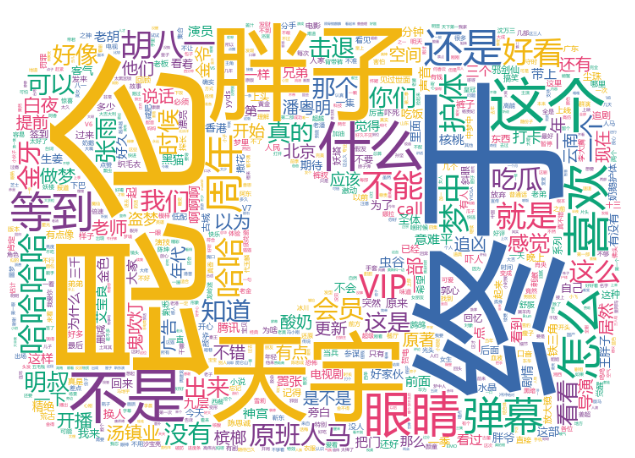
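Opening the file once per comment works, but it reopens the file on every iteration. As an alternative sketch (not the approach used above), all rows can be written in one pass, with a header row included. The name `save_rows` and the demo rows are hypothetical, and the demo writes to the system temp directory rather than to `弹幕.csv`.

```python
import csv
import os
import tempfile
from typing import Iterable, Sequence


def save_rows(path: str, rows: Iterable[Sequence[str]]) -> None:
    """Write a header plus all rows, opening the file only once."""
    with open(path, mode='w', encoding='utf-8-sig', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(['id', 'nick', 'content'])  # header row
        writer.writerows(rows)


# Demo with made-up rows, written to a temporary location
demo_path = os.path.join(tempfile.gettempdir(), 'barrage_demo.csv')
save_rows(demo_path, [('1', 'user_a', 'hello'), ('2', 'user_b', '666')])

# Read the file back to confirm what was written
with open(demo_path, encoding='utf-8-sig', newline='') as f:
    saved = list(csv.reader(f))
print(saved)
```

Because this file carries a header row, pandas can read the column names from it directly. Note that `mode='w'` overwrites any existing file, whereas the `mode='a'` used above keeps appending.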

Word cloud

import pandas as pd  # third-party module
import jieba
import stylecloud

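# 1. Load the data
# The CSV saved earlier has no header row, so the column names are supplied here
df = pd.read_csv('弹幕.csv', names=['id', 'nick', 'content'], encoding='utf-8-sig')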

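def get_cut_words(content_):
    # Load the stop-word list (expects stop_words.txt in the working directory, one word per line)
    stop_words = []
    with open('stop_words.txt', 'r', encoding='utf-8-sig') as f:
        lines = f.readlines()
        for line in lines:
            stop_words.append(line.strip())

    # Register custom keywords so jieba keeps them as single tokens
    my_words = ['666', '鬼吹灯']
    for i in my_words:
        jieba.add_word(i)

    # Join all comments into one string, then segment it in accurate mode
    word_num = jieba.lcut(content_.str.cat(sep='。'), cut_all=False)
    # Drop stop words and tokens shorter than two characters
    word_num_selected = [i for i in word_num if i not in stop_words and len(i) >= 2]
    return word_num_selected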

text = get_cut_words(df['content'])

stylecloud.gen_stylecloud(
    text=' '.join(text),
    collocations=False,
    font_path=r'C:\Windows\Fonts\msyh.ttc',  # Microsoft YaHei on Windows; a font with Chinese glyphs is required
    icon_name='fab fa-youtube',
    size=768,
    output_name='video.png'
)
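To sanity-check which words the cloud is likely to emphasize, the same filtering rule (drop stop words and tokens shorter than two characters) can be paired with a quick frequency count. This is only a sketch: `top_words` is a hypothetical helper, and the token list is made up rather than taken from real danmaku. It uses only the standard library, so it runs without jieba.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple


def top_words(tokens: Iterable[str], stop_words: Set[str], n: int = 3) -> List[Tuple[str, int]]:
    """Return the n most frequent tokens after filtering."""
    # Same rule as in get_cut_words: skip stop words and one-character tokens
    kept = [t for t in tokens if t not in stop_words and len(t) >= 2]
    return Counter(kept).most_common(n)


# Made-up tokens standing in for the output of jieba.lcut
tokens = ['鬼吹灯', '的', '666', '好看', '鬼吹灯', '666', '鬼吹灯', '了']
result = top_words(tokens, stop_words={'好看'})
print(result)
```

In the real script, the list returned by `get_cut_words(df['content'])` could be passed in place of `tokens`, with the stop words loaded from `stop_words.txt` as a set.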