Reference: Lei Xiaohua's blog post at http://blog.csdn.net/leixiaohua1020/article/details/38868499.

Build environment:

- VS2015

- FFmpeg 3.4

- SDL 2.0

1. Flowcharts

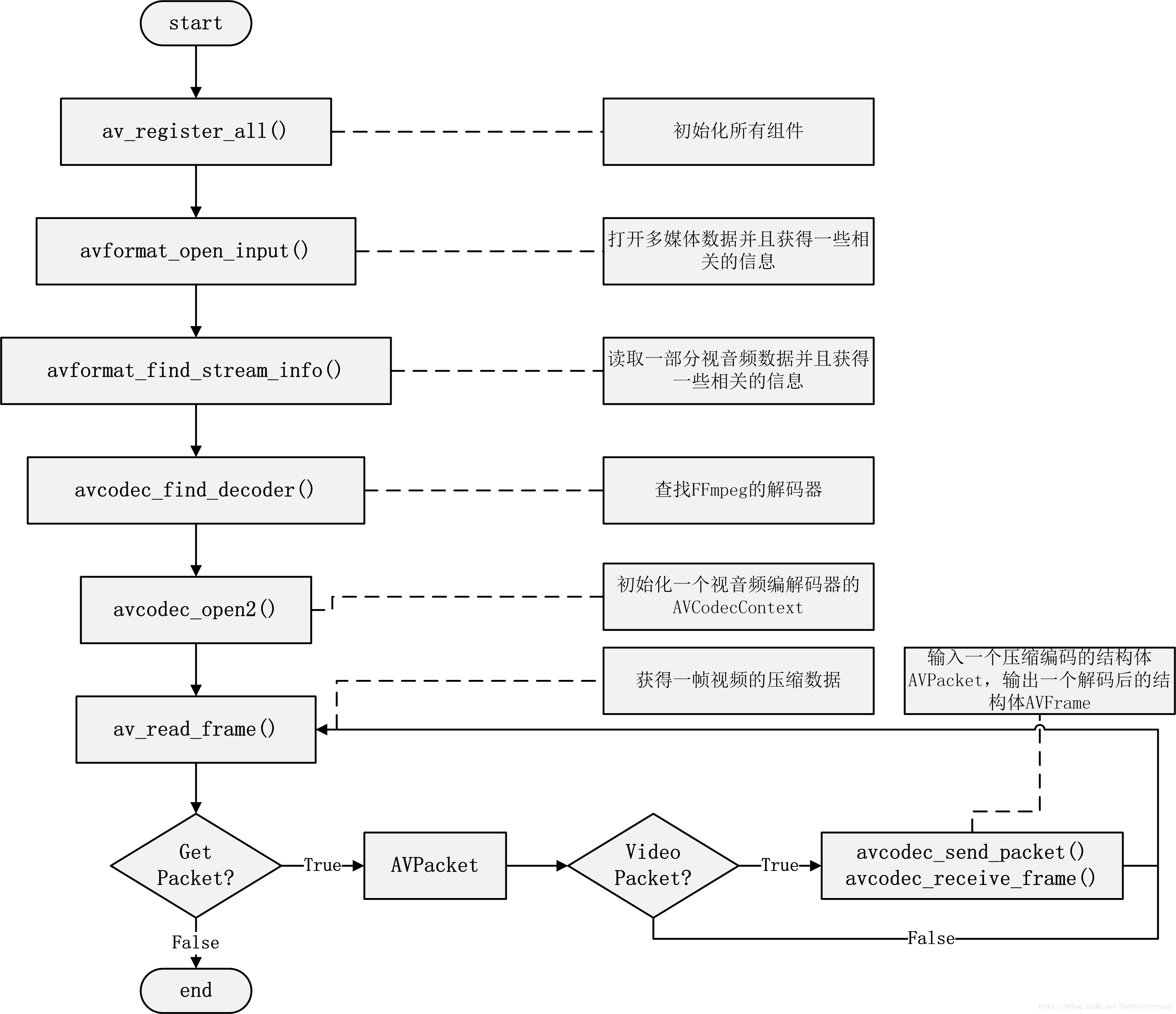

1.1 FFmpeg decoding flow

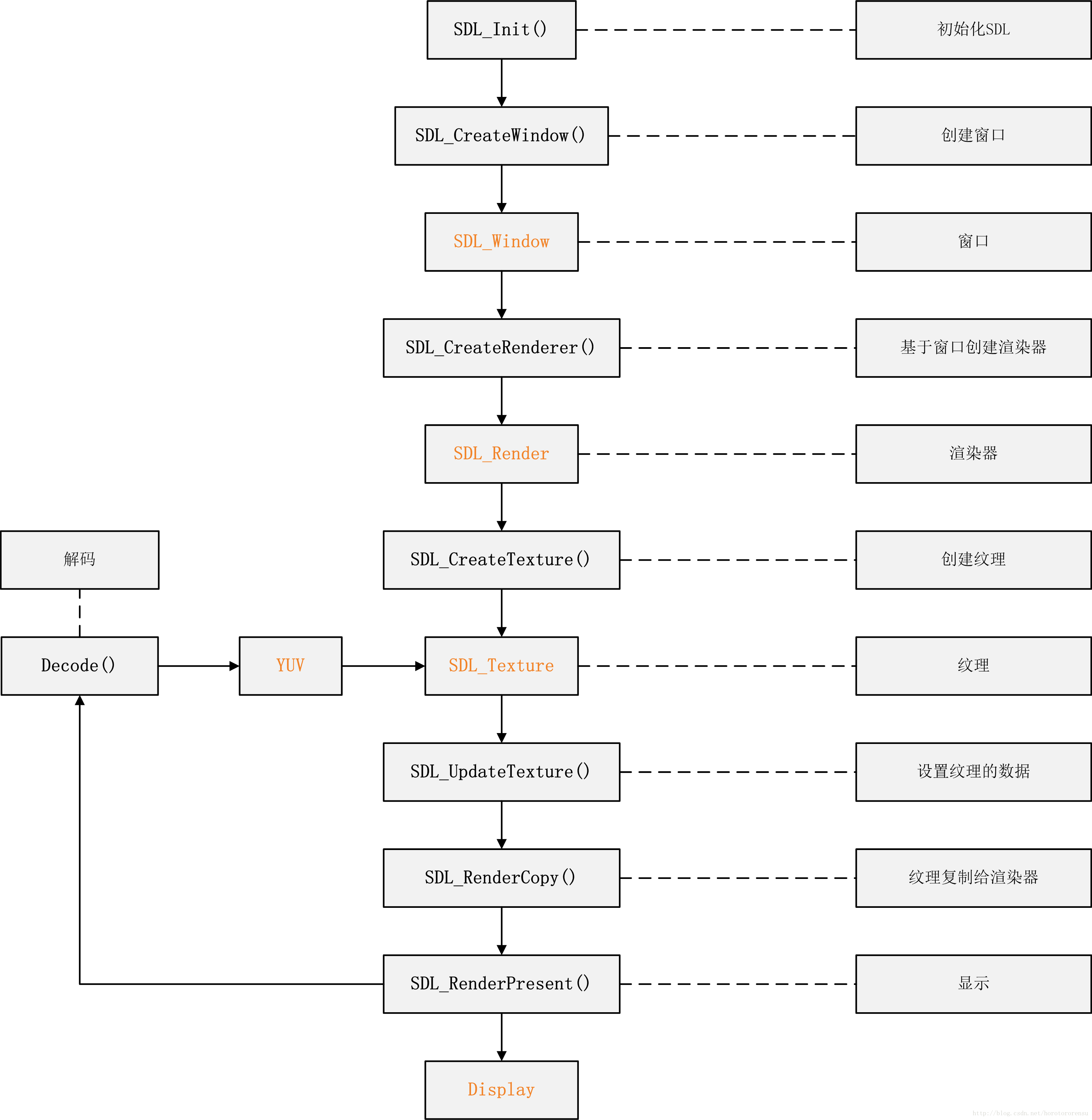

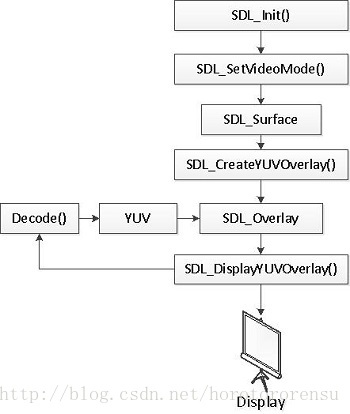

1.2 Displaying YUV with SDL2

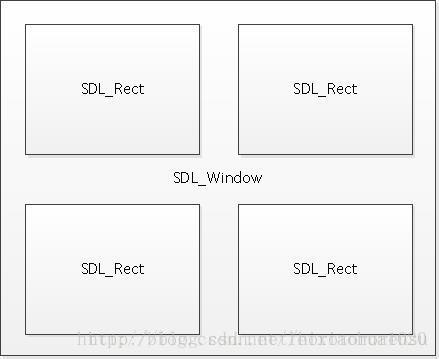

SDL_Window is the window that appears when an SDL program runs. SDL 1.x can create only one window, whereas SDL 2.0 can create several.

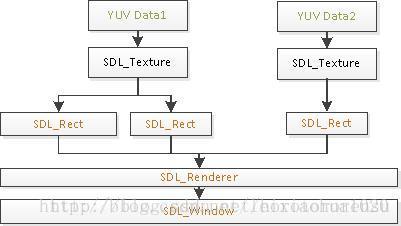

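SDL_Texture holds the YUV data to display. One SDL_Texture corresponds to one frame of YUV data.

SDL_Renderer renders an SDL_Texture to an SDL_Window.

SDL_Rect specifies where the SDL_Texture is displayed. Note that one SDL_Texture can be drawn with several different SDL_Rect values, so the same content can appear at different positions in the SDL_Window (using the SDL_RenderCopy() function).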
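To illustrate how several SDL_Rect values can share one texture, here is a minimal sketch that computes destination rectangles for a grid. The `Rect` struct is a hypothetical stand-in with the same four fields as SDL_Rect so that the snippet compiles without SDL, and `grid_rects` is an illustrative helper, not an SDL function.

```cpp
#include <vector>

// Hypothetical stand-in mirroring SDL_Rect's fields (x, y, w, h).
struct Rect {
    int x, y, w, h;
};

// Split a window of win_w x win_h pixels into cols x rows equal cells.
// Each cell is one destination rectangle for the same texture.
std::vector<Rect> grid_rects(int win_w, int win_h, int cols, int rows) {
    std::vector<Rect> rects;
    if (cols <= 0 || rows <= 0) return rects;
    const int cell_w = win_w / cols;
    const int cell_h = win_h / rows;
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            rects.push_back(Rect{c * cell_w, r * cell_h, cell_w, cell_h});
        }
    }
    return rects;
}
```

In a real program, each rectangle would be converted to an SDL_Rect and passed as the destination argument of SDL_RenderCopy(), once per cell, before a single SDL_RenderPresent() call.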

The diagram in this section shows how these structures relate to one another.

2. Program code

Because FFmpeg 3.4 changed parts of the API, the original code was modified as follows:

#include <stdio.h>

extern "C" {

#include <libavcodec/avcodec.h>

#include <libavformat/avformat.h>

#include <libswscale/swscale.h>

#include <libavutil/imgutils.h>

#include <SDL.h>

}

//Refresh Event

#define SFM_REFRESH_EVENT (SDL_USEREVENT + 1)

#define SFM_BREAK_EVENT (SDL_USEREVENT + 2)

int thread_exit = 0;

int thread_pause = 0;

int sfp_refresh_thread(void *opaque)

{

thread_exit = 0;

thread_pause = 0;

while (!thread_exit) {

if (!thread_pause) {

SDL_Event event;

event.type = SFM_REFRESH_EVENT;

SDL_PushEvent(&event);

}

SDL_Delay(40);

}

thread_exit = 0;

thread_pause = 0;

//Break

SDL_Event event;

event.type = SFM_BREAK_EVENT;

SDL_PushEvent(&event);

return 0;

}

int main(int argc, char *argv[])

{

AVFormatContext *pFormatCtx;

int i, videoindex;

AVCodecContext *pCodecCtx;

AVCodec *pCodec;

AVFrame *pFrame, *pFrameYUV;

uint8_t *out_buffer;

AVPacket *packet;

int ret, got_picture;

//------------SDL----------------

int screen_w, screen_h;

SDL_Window *screen;

SDL_Renderer* sdlRenderer;

SDL_Texture* sdlTexture;

SDL_Rect sdlRect;

SDL_Thread *video_tid;

SDL_Event event;

struct SwsContext *img_convert_ctx;

char filepath[] = "test.flv";

av_register_all();

avformat_network_init();

pFormatCtx = avformat_alloc_context();

if (avformat_open_input(&pFormatCtx, filepath, NULL, NULL) != 0) {

printf("Couldn't open input stream.\n");

return -1;

}

if (avformat_find_stream_info(pFormatCtx, NULL)<0) {

printf("Couldn't find stream information.\n");

return -1;

}

videoindex = -1;

for (i = 0; i<pFormatCtx->nb_streams; i++)

if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {

videoindex = i;

break;

}

if (videoindex == -1) {

printf("Didn't find a video stream.\n");

return -1;

}

// pCodecCtx = pFormatCtx->streams[videoindex]->codecpar;

AVStream *stream = pFormatCtx->streams[videoindex];

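pCodec = avcodec_find_decoder(stream->codecpar->codec_id);

//Check the decoder before using it to allocate the context

if (pCodec == NULL) {

printf("Codec not found.\n");

return -1;

}

pCodecCtx = avcodec_alloc_context3(pCodec);

if (pCodecCtx == NULL || avcodec_parameters_to_context(pCodecCtx, stream->codecpar) < 0) {

printf("Could not set up codec context.\n");

return -1;

}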

if (avcodec_open2(pCodecCtx, pCodec, NULL)<0) {

printf("Could not open codec.\n");

return -1;

}

pFrame = av_frame_alloc();

pFrameYUV = av_frame_alloc();

out_buffer = (uint8_t *)av_malloc(av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1));

av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);

//Output Info-----------------------------

printf("---------------- File Information ---------------\n");

av_dump_format(pFormatCtx, 0, filepath, 0);

printf("-------------------------------------------------\n");

img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,

pCodecCtx->width, pCodecCtx->height, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {

printf("Could not initialize SDL - %s\n", SDL_GetError());

return -1;

}

//SDL 2.0 Support for multiple windows

screen_w = pCodecCtx->width;

screen_h = pCodecCtx->height;

screen = SDL_CreateWindow("Simplest ffmpeg player's Window", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED,

screen_w, screen_h, SDL_WINDOW_OPENGL);

if (!screen) {

printf("SDL: could not create window - exiting:%s\n", SDL_GetError());

return -1;

}

sdlRenderer = SDL_CreateRenderer(screen, -1, 0);

//IYUV: Y + U + V (3 planes)

//YV12: Y + V + U (3 planes)

sdlTexture = SDL_CreateTexture(sdlRenderer, SDL_PIXELFORMAT_IYUV, SDL_TEXTUREACCESS_STREAMING, pCodecCtx->width, pCodecCtx->height);

sdlRect.x = 0;

sdlRect.y = 0;

sdlRect.w = screen_w;

sdlRect.h = screen_h;

packet = av_packet_alloc();

video_tid = SDL_CreateThread(sfp_refresh_thread, NULL, NULL);

//------------SDL End------------

//Event Loop

for (;;) {

//Wait

SDL_WaitEvent(&event);

if (event.type == SFM_REFRESH_EVENT) {

//------------------------------

if (av_read_frame(pFormatCtx, packet) >= 0) {

if (packet->stream_index == videoindex) {

ret = avcodec_send_packet(pCodecCtx, packet);

if (ret < 0) {

printf("Decode Error.\n");

return -1;

}

//avcodec_receive_frame() returns 0 when a frame is available

got_picture = avcodec_receive_frame(pCodecCtx, pFrame);

if (got_picture == 0) {

sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height, pFrameYUV->data, pFrameYUV->linesize);

//SDL---------------------------

SDL_UpdateTexture(sdlTexture, NULL, pFrameYUV->data[0], pFrameYUV->linesize[0]);

SDL_RenderClear(sdlRenderer);

//SDL_RenderCopy( sdlRenderer, sdlTexture, &sdlRect, &sdlRect );

SDL_RenderCopy(sdlRenderer, sdlTexture, NULL, NULL);

SDL_RenderPresent(sdlRenderer);

//SDL End-----------------------

}

}

av_packet_unref(packet);

}

else {

//Exit Thread

thread_exit = 1;

}

}

else if (event.type == SDL_KEYDOWN) {

//Pause

if (event.key.keysym.sym == SDLK_SPACE)

thread_pause = !thread_pause;

}

else if (event.type == SDL_QUIT) {

thread_exit = 1;

}

else if (event.type == SFM_BREAK_EVENT) {

break;

}

}

sws_freeContext(img_convert_ctx);

SDL_Quit();

//--------------

av_frame_free(&pFrameYUV);

av_frame_free(&pFrame);

avcodec_free_context(&pCodecCtx);

avformat_close_input(&pFormatCtx);

return 0;

}
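The refresh thread in the program sleeps for a fixed 40 ms, which corresponds to roughly 25 frames per second regardless of the stream's real frame rate. As a minimal sketch, the delay could instead be derived from a frame rate expressed as a fraction. The helper `frame_delay_ms` is hypothetical; in a real player the numerator and denominator might come from the stream's `avg_frame_rate` field, which is an assumption about how you would wire it in.

```cpp
// Convert a frame rate given as a fraction (num/den frames per second,
// the same shape as FFmpeg's AVRational) into a per-frame delay in ms.
// Falls back to 40 ms (25 fps) when the rate is unknown or invalid.
int frame_delay_ms(int num, int den) {
    if (num <= 0 || den <= 0) return 40;
    // Round to the nearest millisecond; 64-bit math avoids overflow.
    const long long ms = (1000LL * den + num / 2) / num;
    return ms > 0 ? static_cast<int>(ms) : 1;
}
```

The result would replace the constant passed to SDL_Delay(). A timer-driven approach like this is still only approximate, because it ignores decoding time and the timestamps carried by the packets.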

3. Compilation issues

Problems encountered when compiling Lei Xiaohua's original code:

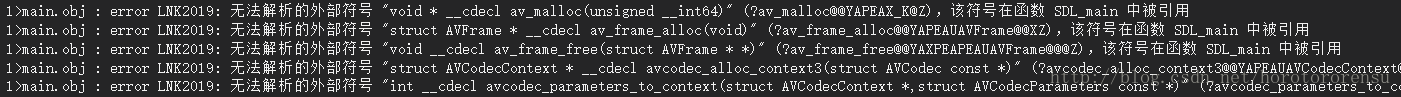

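1. Unresolved external symbol

Cause: the FFmpeg headers were included without extern "C".

When C++ code calls a library written in C, the #include directives for that library's headers must be wrapped in extern "C". Otherwise the C++ compiler mangles the function names and the linker cannot match them to the C symbols exported by the library.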
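A minimal sketch of the mechanism follows. The function `c_add` is hypothetical and stands in for any function exported by a C library. The guarded block is the pattern a C header typically uses so that it can be included from both C and C++.

```cpp
// In a real project the declaration below would live in the C library's
// header; c_add is a hypothetical function used only for illustration.
#ifdef __cplusplus
extern "C" {
#endif

int c_add(int a, int b);

#ifdef __cplusplus
}
#endif

// Definition with C linkage, standing in for code compiled into the C library.
extern "C" int c_add(int a, int b) {
    return a + b;
}
```

The FFmpeg headers used here do not contain such a guard themselves, which is why the program wraps its #include lines in extern "C" { ... } instead.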

2. 'AVStream::codec': declared deprecated

/**

* @deprecated use the codecpar struct instead

*/

attribute_deprecated

AVCodecContext *codec;

codec is deprecated; use codecpar instead. Change

if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO)

to

if (pFormatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)

and change

pCodecCtx = pFormatCtx->streams[videoindex]->codec;

pCodec = avcodec_find_decoder(pCodecCtx->codec_id);

to

AVStream *stream = pFormatCtx->streams[videoindex];

pCodec = avcodec_find_decoder(stream->codecpar->codec_id);

pCodecCtx = avcodec_alloc_context3(pCodec);

avcodec_parameters_to_context(pCodecCtx, stream->codecpar);

3. 'PIX_FMT_YUV420P': undeclared identifier

Use AV_PIX_FMT_YUV420P instead of PIX_FMT_YUV420P.

4. 'avpicture_get_size': declared deprecated

/**

* @deprecated use av_image_get_buffer_size() instead.

*/

attribute_deprecated

int avpicture_get_size(enum AVPixelFormat pix_fmt, int width, int height);

avpicture_get_size is deprecated; use av_image_get_buffer_size instead. Change

out_buffer = (uint8_t *)av_malloc(avpicture_get_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height));

to

out_buffer = (uint8_t *)av_malloc(av_image_get_buffer_size(AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1));
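The extra final argument of av_image_get_buffer_size is the line-size alignment; passing 1 requests a tightly packed buffer. As a rough sketch of what that size works out to for YUV420P, the hypothetical helper below computes it by hand. It is an illustration of the arithmetic, not FFmpeg's implementation, although the result should agree with it for this pixel format and an alignment of 1.

```cpp
#include <cstddef>

// Bytes needed for one tightly packed YUV420P frame (alignment of 1).
// Y is full resolution; U and V are subsampled by 2 in both directions,
// with odd dimensions rounded up.
std::size_t yuv420p_buffer_size(int width, int height) {
    if (width <= 0 || height <= 0) return 0;
    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t h = static_cast<std::size_t>(height);
    const std::size_t chroma_w = (w + 1) / 2;
    const std::size_t chroma_h = (h + 1) / 2;
    return w * h + 2 * chroma_w * chroma_h;
}
```

For even dimensions this reduces to the familiar width * height * 3 / 2.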

5. 'avpicture_fill': declared deprecated

/**

* @deprecated use av_image_fill_arrays() instead.

*/

attribute_deprecated

int avpicture_fill(AVPicture *picture, const uint8_t *ptr,

enum AVPixelFormat pix_fmt, int width, int height);

avpicture_fill is deprecated; use av_image_fill_arrays instead. Change

avpicture_fill((AVPicture *)pFrameYUV, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height);

to

av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer, AV_PIX_FMT_YUV420P, pCodecCtx->width, pCodecCtx->height, 1);
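av_image_fill_arrays does not copy pixels; it only points the data[] entries into out_buffer and fills in linesize[]. The sketch below shows the layout one would expect for YUV420P with an alignment of 1. `Yuv420pLayout` and `yuv420p_layout` are hypothetical names introduced for illustration, and the code is a hand-written model rather than FFmpeg's implementation.

```cpp
#include <cstddef>

// Hypothetical description of where each plane of a packed YUV420P frame
// starts inside one contiguous buffer, and how many bytes each row uses.
struct Yuv420pLayout {
    std::size_t offset[3];  // byte offset of the Y, U and V planes
    int linesize[3];        // bytes per row of the Y, U and V planes
};

Yuv420pLayout yuv420p_layout(int width, int height) {
    const int chroma_w = (width + 1) / 2;
    const int chroma_h = (height + 1) / 2;
    Yuv420pLayout l{};
    l.linesize[0] = width;
    l.linesize[1] = chroma_w;
    l.linesize[2] = chroma_w;
    l.offset[0] = 0;
    l.offset[1] = static_cast<std::size_t>(width) * height;
    l.offset[2] = l.offset[1] + static_cast<std::size_t>(chroma_w) * chroma_h;
    return l;
}
```

Because the three planes sit back to back in Y, U, V order, the program can hand pFrameYUV->data[0] and pFrameYUV->linesize[0] to SDL_UpdateTexture for an SDL_PIXELFORMAT_IYUV texture.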

6. 'avcodec_decode_video2': declared deprecated

/**

* Decode the video frame of size avpkt->size from avpkt->data into picture.

* Some decoders may support multiple frames in a single AVPacket, such

* decoders would then just decode the first frame.

*

* @warning The input buffer must be AV_INPUT_BUFFER_PADDING_SIZE larger than

* the actual read bytes because some optimized bitstream readers read 32 or 64

* bits at once and could read over the end.

*

* @warning The end of the input buffer buf should be set to 0 to ensure that

* no overreading happens for damaged MPEG streams.

*

* @note Codecs which have the AV_CODEC_CAP_DELAY capability set have a delay

* between input and output, these need to be fed with avpkt->data=NULL,

* avpkt->size=0 at the end to return the remaining frames.

*

* @note The AVCodecContext MUST have been opened with @ref avcodec_open2()

* before packets may be fed to the decoder.

*

* @param avctx the codec context

* @param[out] picture The AVFrame in which the decoded video frame will be stored.

* Use av_frame_alloc() to get an AVFrame. The codec will

* allocate memory for the actual bitmap by calling the

* AVCodecContext.get_buffer2() callback.

* When AVCodecContext.refcounted_frames is set to 1, the frame is

* reference counted and the returned reference belongs to the

* caller. The caller must release the frame using av_frame_unref()

* when the frame is no longer needed. The caller may safely write

* to the frame if av_frame_is_writable() returns 1.

* When AVCodecContext.refcounted_frames is set to 0, the returned

* reference belongs to the decoder and is valid only until the

* next call to this function or until closing or flushing the

* decoder. The caller may not write to it.

*

* @param[in] avpkt The input AVPacket containing the input buffer.

* You can create such packet with av_init_packet() and by then setting

* data and size, some decoders might in addition need other fields like

* flags&AV_PKT_FLAG_KEY. All decoders are designed to use the least

* fields possible.

* @param[in,out] got_picture_ptr Zero if no frame could be decompressed, otherwise, it is nonzero.

* @return On error a negative value is returned, otherwise the number of bytes

* used or zero if no frame could be decompressed.

*

* @deprecated Use avcodec_send_packet() and avcodec_receive_frame().

*/

attribute_deprecated

int avcodec_decode_video2(AVCodecContext *avctx, AVFrame *picture,

int *got_picture_ptr,

const AVPacket *avpkt);

avcodec_decode_video2 is deprecated. Change

ret = avcodec_decode_video2(pCodecCtx, pFrame, &got_picture, packet);

... ...

if (got_picture)

to

ret = avcodec_send_packet(pCodecCtx, packet);

got_picture = avcodec_receive_frame(pCodecCtx, pFrame);

... ...

if (got_picture == 0)
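The condition is inverted because avcodec_receive_frame returns 0 on success, whereas the old got_picture flag was nonzero on success. With the send/receive API a single packet may also yield no frame yet, or more than one, so the usual pattern is to keep receiving until the decoder reports that it needs more input. The sketch below models that loop with a toy decoder. `ToyDecoder`, `kOk`, `kAgain`, and `decode_packet` are all hypothetical and are not part of FFmpeg; `kAgain` plays the role of AVERROR(EAGAIN).

```cpp
#include <deque>
#include <vector>

// Toy stand-in for a decoder with a send/receive interface. It is NOT
// FFmpeg's API: packets and frames are plain ints.
constexpr int kOk = 0;
constexpr int kAgain = -1;  // "no frame ready, send more input"

class ToyDecoder {
public:
    // Accept one packet. This toy keeps one packet of delay, so the
    // first send produces no frame and later sends release older ones.
    int send(int packet) {
        pending_.push_back(packet);
        while (pending_.size() > 1) {
            ready_.push_back(pending_.front());
            pending_.pop_front();
        }
        return kOk;
    }

    // Hand out one decoded frame, or kAgain when none is ready.
    int receive(int& frame) {
        if (ready_.empty()) return kAgain;
        frame = ready_.front();
        ready_.pop_front();
        return kOk;
    }

private:
    std::deque<int> pending_;
    std::deque<int> ready_;
};

// Send one packet, then drain every frame that became available.
std::vector<int> decode_packet(ToyDecoder& dec, int packet) {
    std::vector<int> frames;
    if (dec.send(packet) != kOk) return frames;
    int frame = 0;
    while (dec.receive(frame) == kOk) {
        frames.push_back(frame);
    }
    return frames;
}
```

The program in section 2 calls avcodec_receive_frame only once per packet, which is enough for simple files but can drop frames held back by the decoder; a drain loop of this shape is one way to address that.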

7. 'av_free_packet': declared deprecated

/**

* Free a packet.

*

* @deprecated Use av_packet_unref

*

* @param pkt packet to free

*/

attribute_deprecated

void av_free_packet(AVPacket *pkt);

av_free_packet is deprecated; use av_packet_unref instead. Change

av_free_packet(packet);

to

av_packet_unref(packet);