With nothing much to do, I wrote a few small programs just for my own amusement.

Background:

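A classmate of mine works on text mining. On a whim I asked him to teach me a bit, so he gave me a small assignment: take a dataset that had already been word-segmented and process and consolidate it further.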

Task 1:

The initial data looks like this:

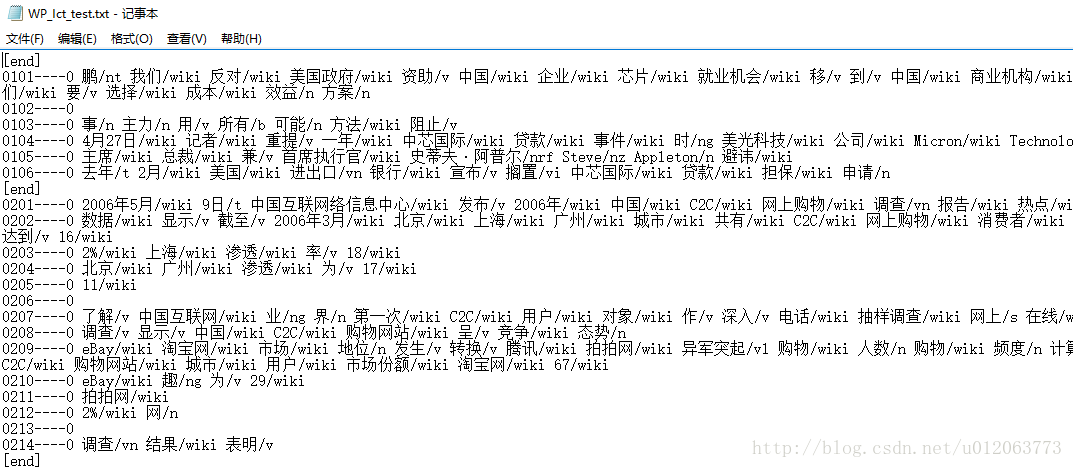

Both WP_Ict_test2.txt and WP_Ict_test.txt are in the format shown in the figure.

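Goal: find the words that the two files share and the words that appear in only one of them, keeping the suffix (the part-of-speech tag) as part of each word.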

import re
# import chardet

with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\WP_Ict_test.txt', 'r') as f:
    f1 = f.readlines()  # f1 is a list of lines
# print(f1)
print('1------------------------')
a1 = []
# Drop the first field of each line (the leading "end" marker / sequence number)
for line in f1:
    b1 = line.split()
    if b1:  # skip blank lines; del b1[0] would raise IndexError on them
        del b1[0]
        a1.extend(b1)
print(a1)
print('2------------------------')
mm1 = str(a1)
# Count the words tagged /wiki in this text
print(mm1.count('/wiki'))

with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\WP_Ict_test2.txt', 'r') as f:
    f2 = f.readlines()  # f2 is a list of lines
# print(f2)
print('1------------------------')
a2 = []
# Drop the first field of each line (the leading "end" marker / sequence number)
for line in f2:
    b2 = line.split()
    if b2:
        del b2[0]
        a2.extend(b2)
print(a2)
print('2------------------------')
mm2 = str(a2)
# Count the words tagged /wiki in this text
print(mm2.count('/wiki'))

# Compare the vocabularies; each word keeps its tag, so "x/n" and "x/v" differ
c1 = set(a1)
c2 = set(a2)
print(len(c1.difference(c2)))    # only in file 1
print(len(c1.intersection(c2)))  # in both files
print(len(c1 - c2))              # same as c1.difference(c2)
print(len(c2.difference(c1)))    # only in file 2
print(len(c2.intersection(c1)))  # same as c1.intersection(c2)
print(len(c2 - c1))              # same as c2.difference(c1)

# Write each set to its own file, words separated by spaces
with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\WP_Ict_test-WP_Ict_test2.txt', 'w') as fil:
    for i in c1.difference(c2):  # only in WP_Ict_test
        fil.write(i + ' ')
with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\WP_Ict_test2-WP_Ict_test.txt', 'w') as fil:
    for i in c2.difference(c1):  # only in WP_Ict_test2
        fil.write(i + ' ')
with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\WP_Ict_test_WP_Ict_test2.txt', 'w') as fil:
    for i in c1.intersection(c2):  # in both files
        fil.write(i + ' ')
with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\WP_Ict_test+WP_Ict_test2.txt', 'w') as fil:
    for i in c1.union(c2):  # in either file
        fil.write(i + ' ')
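Without the file handling, Task 1 comes down to a handful of set operations. The sketch below restates that logic as two small functions so it can be tried on in-memory data. `read_tokens`, `compare_vocab` and the sample lines are hypothetical. As above, it assumes that each line starts with one field to discard, followed by whitespace-separated `word/tag` tokens.

```python
def read_tokens(lines):
    """Collect all tokens, skipping the first field of each line.

    Hypothetical helper: assumes whitespace-separated 'word/tag' tokens
    after a leading sequence-number field.
    """
    tokens = []
    for line in lines:
        fields = line.split()
        tokens.extend(fields[1:])  # slicing is safe even for blank lines
    return tokens


def compare_vocab(tokens1, tokens2):
    """Split two vocabularies into the buckets Task 1 asks for.

    The tag stays part of each token, so 'x/n' and 'x/v' are different words.
    """
    s1, s2 = set(tokens1), set(tokens2)
    return {
        "only_in_1": s1 - s2,
        "only_in_2": s2 - s1,
        "common": s1 & s2,
        "union": s1 | s2,
    }


if __name__ == "__main__":
    # Made-up sample lines in the 'seq word/tag ...' layout
    file1 = ["1 苹果/n 好吃/a", "2 北京/wiki"]
    file2 = ["1 苹果/n 好吃/v"]
    result = compare_vocab(read_tokens(file1), read_tokens(file2))
    for name, words in result.items():
        print(name, sorted(words))
    # Number of /wiki-tagged tokens, as the script counts with str.count
    print(sum(tok.count("/wiki") for tok in read_tokens(file1)))
```

Because the tag stays attached to each token, 好吃/a and 好吃/v end up in different buckets, which matches the "suffix included" requirement. Slicing with `fields[1:]` also makes blank lines harmless without a separate check.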

Removing the part-of-speech tags from the file:

#coding:utf-8
import re

with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\WP_Ict_test.txt', 'r') as f:
    f1 = f.readlines()  # f1 is a list of lines
# print(f1)
print('1------------------------')
a1 = []
# Drop the first field of each line (the leading "end" marker / sequence number)
for line in f1:
    b1 = line.split()
    if b1:  # skip blank lines; del b1[0] would raise IndexError on them
        del b1[0]
        a1.extend(b1)
print(a1)
print('2------------------------')
# Delete everything from the first '/' onward, i.e. the POS tag
pat = '/.*'
for i in range(len(a1)):
    a1[i] = re.sub(pat, '', a1[i])
c = a1
print('3------------------------')
with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\nonecixing.txt', 'w') as fil:
    for i in c:
        fil.write(i + ' ')
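`re.sub('/.*', '', token)` cuts each token at its first `/` and discards the tag. If the tags are still needed (for instance to count `/wiki` words, as the first script does), one option is to split every token into a word and a tag. This is a sketch that assumes the tag always follows the last `/`. `split_tag` is a hypothetical helper; `str.rpartition` and `collections.Counter` are standard library.

```python
from collections import Counter


def split_tag(token):
    """Split 'word/tag' into (word, tag); tag is '' when there is no '/'.

    Assumption: the POS tag follows the LAST '/', so a word that itself
    contains '/' keeps it (re.sub('/.*', '', ...) would cut at the first '/').
    """
    word, sep, tag = token.rpartition("/")
    if not sep:  # no '/' at all: rpartition puts the whole token in `tag`
        return token, ""
    return word, tag


if __name__ == "__main__":
    tokens = ["北京/wiki", "苹果/n", "好吃/a", "1/2/m", "上海/wiki"]
    words = [split_tag(t)[0] for t in tokens]
    tag_counts = Counter(split_tag(t)[1] for t in tokens)
    print(" ".join(words))     # the text without tags
    print(tag_counts["wiki"])  # number of /wiki tokens
```

With `rpartition`, a token such as `1/2/m` keeps its word `1/2`, whereas the regex in the script reduces it to `1`. Which behavior is correct depends on how the segmenter writes such tokens.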

Task 2:

train is the original file. The task is to put all the content that belongs to the same document on a single line.

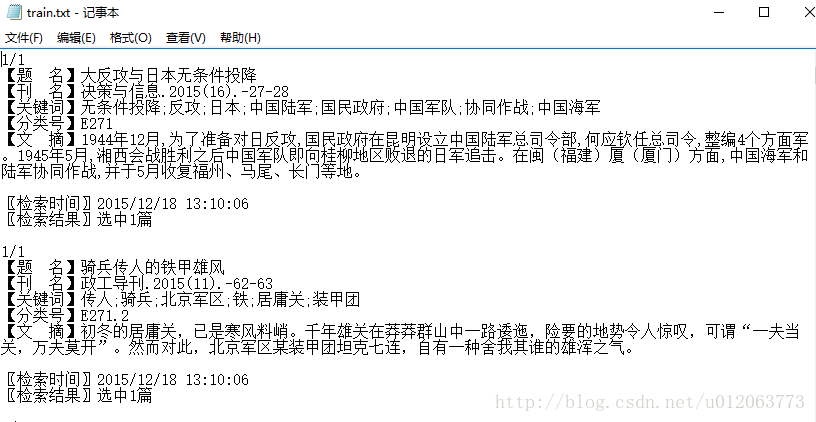

The data in train is formatted as shown in the figure.

Step 1: put the content of each document on one line

import re
# import chardet

with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\train.txt', 'r') as f:
    f1 = f.readlines()  # f1 is a list of lines
print('1------------------------')
# print(f1)
# Remove the newline at the end of every line
pat = r'\n'
for i in range(len(f1)):
    f1[i] = re.sub(pat, '', f1[i])
print('2------------------------')
print(f1)
# Glue all lines into one string...
sep = ''
fline = sep.join(f1)
print(fline)
# ...then cut it at every '1/1', the marker that begins each document
f2 = fline.split('1/1')
print('3------------------------')
del f2[0]  # drop whatever precedes the first '1/1'
print(f2)
# One document per line
sep2 = '\n'
f3 = sep2.join(f2)
print(len(f3))  # number of characters in the result
with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\mytest.txt', 'w') as fil:
    fil.write(f3)  # write the whole string at once instead of char by char

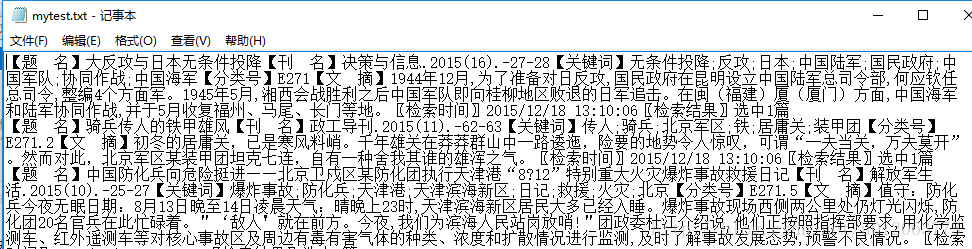

The result after processing is shown in the figure.
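The Step 1 script removes the newlines, glues all lines together with `''`, and splits at `'1/1'`. That approach has two side effects. First, unless each line already ends with a space, the last token of one line sticks to the first token of the next. Second, `split` also cuts inside longer tokens that merely contain `1/1` (for example `11/1`). Below is a token-based sketch that avoids both. It assumes the `1/1` marker always appears as its own whitespace-separated token at the start of each document; the function name `merge_documents` is hypothetical.

```python
def merge_documents(lines, marker="1/1"):
    """Put each document on one line.

    Assumes `marker` is a separate whitespace-delimited token that starts
    every document. The marker itself and anything before the first marker
    are dropped, like split('1/1') followed by del f2[0] in the script.
    """
    docs = []
    for line in lines:
        for token in line.split():
            if token == marker:
                docs.append([])  # a new document starts here
            elif docs:  # skip text that precedes the first marker
                docs[-1].append(token)
    # Rejoin the tokens of each document with single spaces
    return [" ".join(doc) for doc in docs]


if __name__ == "__main__":
    sample = [
        "1/1 第一篇/m 文章/n\n",
        "内容/n\n",
        "1/1 第二篇/m 11/1/m\n",
    ]
    print("\n".join(merge_documents(sample)))
```

Splitting on whitespace also normalizes runs of spaces to one. Writing `"\n".join(merge_documents(lines))` to a file then produces the same one-document-per-line layout as `mytest.txt`.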

Step 2: put a sequence number in front of each document

with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\yihangwenzhang.txt', 'r') as f:
    fil1 = f.readlines()
a = []
for i in range(len(fil1)):
    # a.append('[' + str(i) + ']' + fil1[i])
    # Number from 1; center(2) pads the number to two characters
    strr = str(i + 1).center(2)
    a.append('[' + strr + ']' + fil1[i])
with open(r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\xuhao.txt', 'w') as f:
    for i in a:
        f.write(i)

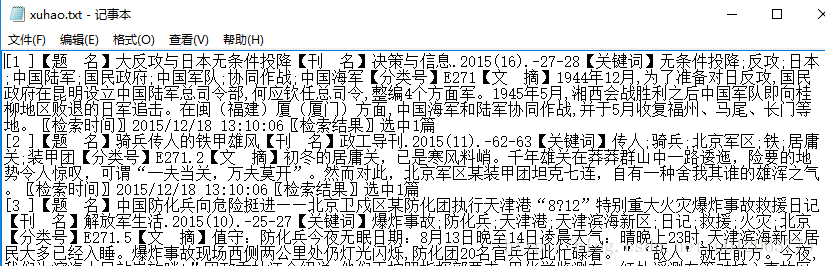

The result after processing is shown in the figure.
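The numbering loop can also be written with `enumerate(..., start=1)`, which yields the 1-based index directly. Here is a minimal sketch that reproduces the `'[' + str(i).center(2) + ']'` prefix. `number_lines` and its `width` parameter are hypothetical.

```python
def number_lines(lines, width=2):
    """Prefix each line with its 1-based index in brackets.

    The index is padded with str.center(width), as in the script above.
    """
    return ["[" + str(i).center(width) + "]" + line
            for i, line in enumerate(lines, start=1)]


if __name__ == "__main__":
    docs = ["first document\n", "second document\n"]
    print("".join(number_lines(docs)), end="")
```

With `width=2`, `str.center` pads a one-digit number on the right (`[1 ]`) and leaves two-digit numbers unchanged (`[12]`). With 100 or more documents, the prefixes no longer line up unless `width` is increased.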

Step 3: randomly pick N documents out of the whole collection and save them to a new txt file

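import random

# Given the input file file1, the output file file2 and the number of
# documents to draw, num
def suijichouqu(file1, file2, num):
    with open(file1, 'r') as f:
        f1 = f.readlines()
    # num distinct line indices, drawn without replacement
    r1 = random.sample(range(0, len(f1)), num)
    f2 = []
    for i in range(num):
        f2.append(f1[r1[i]])
    with open(file2, 'w') as f:
        for i in f2:
            # The last input line may lack a newline; add one so that
            # sampled documents never run into each other
            f.write(i.rstrip('\n') + '\n')

# Raw strings: in a normal literal, '\x' in '\xuhao.txt' starts an escape
# sequence and the path would not parse
f1 = r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\xuhao.txt'
f2 = r'C:\Users\Zhu Wen Jing\Desktop\PythonStudy\suiji.txt'

if __name__ == '__main__':
    suijichouqu(f1, f2, 300)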
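`random.sample` can draw from the list of lines directly, so the intermediate index list is optional. The sketch below does that and accepts an optional seed, so the same 300 documents can be drawn again. It also makes sure every returned line ends with exactly one newline. This matters because the last line of `xuhao.txt` may lack one: Step 1 joins documents with `'\n'` and adds none at the end. If that line were sampled into the middle, it would run into the following document. `sample_documents` and `seed` are hypothetical names.

```python
import random


def sample_documents(lines, num, seed=None):
    """Return `num` distinct lines drawn at random, without replacement.

    random.Random(seed) gives a reproducible draw when seed is fixed;
    sample() raises ValueError if num > len(lines).
    Every returned line ends with exactly one newline.
    """
    rng = random.Random(seed)
    picked = rng.sample(lines, num)
    return [line.rstrip("\n") + "\n" for line in picked]


if __name__ == "__main__":
    docs = ["[%d]document %d\n" % (i, i) for i in range(1, 6)] + ["[6]last document"]
    for line in sample_documents(docs, 3, seed=42):
        print(line, end="")
```

To use it on the real data, read `xuhao.txt` with `readlines()`, call `sample_documents(lines, 300)`, and write the result out with `writelines`.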

Result of running suijichouqu: