Unity UGUI Source Code Analysis: Event System (6) - Raycaster (Part 2)

Continuing from the previous article, this post goes on to cover the raycasters.

GraphicRaycaster

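GraphicRaycaster inherits from BaseRaycaster and is a concrete implementation of it. It is the raycaster for UGUI elements, and it requires a Canvas component on the same object.

It is worth noting that GraphicRaycaster lives in a different directory from PhysicsRaycaster and Physics2DRaycaster: the latter two are in the EventSystem directory, while GraphicRaycaster is in the UI directory. Perhaps the authors wanted to indicate that GraphicRaycaster is meant only for UI.

GraphicRaycaster performs its hit tests mainly against RectTransform rectangles and depends very little on the camera.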

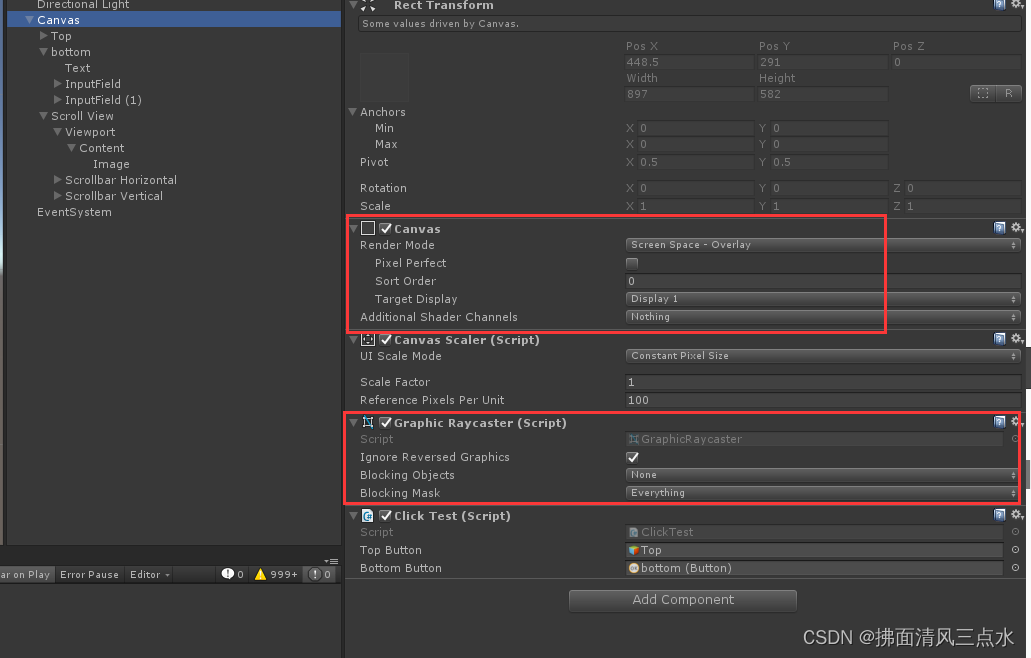

通常我们添加一个Canvas时, 同时默认会添加上此组件. 如图:

面板属性

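- Ignore Reversed Graphics: ignore the back side of graphics (checked by default). A dot product is used to decide whether the ray hits a graphic from behind; when checked, back-facing graphics are excluded from the raycast.

- Blocking Objects: the types of objects that block the ray. When the ray hits an object of the selected type it is blocked and does not reach what lies behind it.

  - None: nothing blocks (the default)

  - Two D: 2D objects block. 2D objects here means objects from the GameObject->2D Object menu, and the object also needs a 2D collider (2D Collider).

  - Three D: 3D objects block. 3D objects here means objects from the GameObject->3D Object menu, and the object also needs a 3D collider (3D Collider).

  - All: Two D + Three D

- Block Mask: the blocking mask. Only objects on the selected layers take part in blocking; by default all layers are selected.

- In every case above, the blocking object must carry the corresponding component that the ray can hit, that is, a 2D or 3D Collider.

The relevant code follows.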
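The dot-product test behind Ignore Reversed Graphics can be sketched outside Unity. The block below is a minimal standalone C++ model, not Unity code: `Vec3`, `Dot`, and `IsFacingViewer` are hypothetical names introduced for illustration, and the only thing taken from the article is the rule that a graphic is kept when the dot product of the viewing direction and the graphic's forward axis is positive.

```cpp
#include <cassert>

// Minimal 3D vector; a hypothetical stand-in for UnityEngine.Vector3.
struct Vec3 {
    float x, y, z;
};

// Standard dot product of two vectors.
inline float Dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Returns true when the graphic should be kept: its forward axis points
// the same general way as the viewing direction (dot product > 0).
// A graphic flipped around so that its forward axis points back at the
// viewer gives a negative dot product and is skipped.
inline bool IsFacingViewer(const Vec3& viewForward, const Vec3& graphicForward) {
    return Dot(viewForward, graphicForward) > 0.0f;
}
```

With no camera, the viewing direction is simply the fixed forward vector (0, 0, 1); with a camera, it is the camera's forward axis. A graphic seen exactly edge-on gives a dot product of 0 and is also skipped, because the comparison is strict.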

    [AddComponentMenu("Event/Graphic Raycaster")]
    [RequireComponent(typeof(Canvas))]
    public class GraphicRaycaster : BaseRaycaster
    {
        protected const int kNoEventMaskSet = -1;

        public enum BlockingObjects
        {
            None = 0,
            TwoD = 1,
            ThreeD = 2,
            All = 3,
        }

        [FormerlySerializedAs("ignoreReversedGraphics")]
        [SerializeField] private bool m_IgnoreReversedGraphics = true;

        [FormerlySerializedAs("blockingObjects")]
        [SerializeField] private BlockingObjects m_BlockingObjects = BlockingObjects.None;

        public bool ignoreReversedGraphics { get { return m_IgnoreReversedGraphics; } set { m_IgnoreReversedGraphics = value; } }
        public BlockingObjects blockingObjects { get { return m_BlockingObjects; } set { m_BlockingObjects = value; } }

        [SerializeField]
        protected LayerMask m_BlockingMask = kNoEventMaskSet;

        private Canvas m_Canvas;
    }

Properties, fields, and methods

    //---------------------------------------------------------
    // Overrides the sort-order properties of BaseRaycaster
    public override int sortOrderPriority
    {
        get
        {
            // When the Canvas render mode is ScreenSpaceOverlay (always drawn on top of the screen),
            // the canvas sorting order is used to order multiple canvases
            // We need to return the sorting order here as distance will all be 0 for overlay.
            if (canvas.renderMode == RenderMode.ScreenSpaceOverlay)
                return canvas.sortingOrder;

            return base.sortOrderPriority;
        }
    }

    public override int renderOrderPriority
    {
        get
        {
            // Same as above
            // We need to return the sorting order here as distance will all be 0 for overlay.
            if (canvas.renderMode == RenderMode.ScreenSpaceOverlay)
                return canvas.rootCanvas.renderOrder;

            return base.renderOrderPriority;
        }
    }

    //-----------------------------------------------------------------
    // GraphicRaycaster relies mainly on the Canvas for its operations
    private Canvas m_Canvas;
    private Canvas canvas
    {
        get
        {
            if (m_Canvas != null)
                return m_Canvas;

            m_Canvas = GetComponent<Canvas>();
            return m_Canvas;
        }
    }

    // The camera used to cast rays
    // Returns null (screen space is used) when the render mode is ScreenSpaceOverlay,
    // or when it is ScreenSpaceCamera and no camera has been assigned
    public override Camera eventCamera
    {
        get
        {
            if (canvas.renderMode == RenderMode.ScreenSpaceOverlay || (canvas.renderMode == RenderMode.ScreenSpaceCamera && canvas.worldCamera == null))
                return null;

            return canvas.worldCamera != null ? canvas.worldCamera : Camera.main;
        }
    }

Raycasting

Next comes the key part, which is also the most complex.
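The camera selection in `eventCamera` has three outcomes, which are easy to misread in a single conditional. The following is a small standalone C++ model of that decision, written only as an illustration: the `RenderMode` enum mirrors the Unity names used above, while `Camera` and `SelectEventCamera` are hypothetical stand-ins, and a null pointer plays the role of "no camera, use screen space".

```cpp
#include <cassert>

// Mirrors the three canvas render modes named in the article.
enum class RenderMode { ScreenSpaceOverlay, ScreenSpaceCamera, WorldSpace };

// Hypothetical stand-in for a camera; only its identity matters here.
struct Camera {
    int id;
};

// Models the eventCamera getter:
//  - overlay canvases never use a camera;
//  - a ScreenSpaceCamera canvas without an assigned camera behaves like overlay;
//  - otherwise the assigned camera wins, with the main camera as fallback.
inline const Camera* SelectEventCamera(RenderMode mode,
                                       const Camera* worldCamera,
                                       const Camera* mainCamera) {
    if (mode == RenderMode::ScreenSpaceOverlay ||
        (mode == RenderMode::ScreenSpaceCamera && worldCamera == nullptr)) {
        return nullptr;
    }
    return worldCamera != nullptr ? worldCamera : mainCamera;
}
```

In this model the fallback to the main camera can only happen for a world-space canvas with no camera assigned, since the other camera-less cases return early.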

    [NonSerialized] static readonly List<Graphic> s_SortedGraphics = new List<Graphic>();

    // Cast a ray at the given graphics and collect every graphic the ray passes through
    private static void Raycast(Canvas canvas, Camera eventCamera, Vector2 pointerPosition, IList<Graphic> foundGraphics, List<Graphic> results)
    {
        int totalCount = foundGraphics.Count;
        for (int i = 0; i < totalCount; ++i)
        {
            Graphic graphic = foundGraphics[i];

            // ----------------------------
            // -- Filters on the graphic itself
            // depth == -1 means the graphic is not processed (that is, not drawn) by this Canvas
            if (graphic.depth == -1 || !graphic.raycastTarget || graphic.canvasRenderer.cull)
                continue;

            if (!RectTransformUtility.RectangleContainsScreenPoint(graphic.rectTransform, pointerPosition, eventCamera))
                continue;

            // ----------------------------
            // Ignore graphics whose z value is beyond the camera range, so a graphic can opt out of raycasting through its z value
            if (eventCamera != null && eventCamera.WorldToScreenPoint(graphic.rectTransform.position).z > eventCamera.farClipPlane)
                continue;

            // Does the ray pass through the graphic?
            if (graphic.Raycast(pointerPosition, eventCamera))
            {
                s_SortedGraphics.Add(graphic);
            }
        }

        // Sort by depth, largest first
        s_SortedGraphics.Sort((g1, g2) => g2.depth.CompareTo(g1.depth));

        // StringBuilder cast = new StringBuilder();
        totalCount = s_SortedGraphics.Count;
        for (int i = 0; i < totalCount; ++i)
            results.Add(s_SortedGraphics[i]);

        // Debug.Log (cast.ToString());
        s_SortedGraphics.Clear();
    }
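The filter-then-sort shape of the static `Raycast` method can be modeled without Unity. The sketch below is a simplified standalone C++ illustration: `GraphicInfo` and `CollectHits` are hypothetical, and the rectangle test, the far-clip test, and `graphic.Raycast` are collapsed into a single precomputed `containsPointer` flag, which is an assumption made to keep the example short.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical, flattened stand-in for a Graphic and its test results.
struct GraphicInfo {
    int depth;             // -1 means "not drawn by this canvas"
    bool raycastTarget;    // graphic opted in to raycasting
    bool culled;           // canvasRenderer.cull in the article's code
    bool containsPointer;  // assumed result of the geometric hit tests
};

// Keeps only graphics that pass every filter, then orders them so the
// graphic drawn last (largest depth, visually on top) comes first.
inline std::vector<GraphicInfo> CollectHits(const std::vector<GraphicInfo>& found) {
    std::vector<GraphicInfo> hits;
    for (const GraphicInfo& g : found) {
        if (g.depth == -1 || !g.raycastTarget || g.culled)
            continue;
        if (!g.containsPointer)
            continue;
        hits.push_back(g);
    }
    std::sort(hits.begin(), hits.end(),
              [](const GraphicInfo& a, const GraphicInfo& b) { return a.depth > b.depth; });
    return hits;
}
```

Sorting by descending depth is what lets the event system treat the first result as the topmost element under the pointer.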

    // [public] Cast a ray at the canvas graphics and collect every graphic the ray passes through
    [NonSerialized] private List<Graphic> m_RaycastResults = new List<Graphic>();
    public override void Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList)
    {
        if (canvas == null)
            return;

        // Collect all graphics managed by the canvas
        var canvasGraphics = GraphicRegistry.GetGraphicsForCanvas(canvas);
        if (canvasGraphics == null || canvasGraphics.Count == 0)
            return;

        int displayIndex;
        var currentEventCamera = eventCamera; // Property can call Camera.main, so cache the reference

        // Choose the targetDisplay according to the canvas render mode
        if (canvas.renderMode == RenderMode.ScreenSpaceOverlay || currentEventCamera == null)
            displayIndex = canvas.targetDisplay;
        else
            displayIndex = currentEventCamera.targetDisplay;

        // Get the screen position; multi-display output is supported
        var eventPosition = Display.RelativeMouseAt(eventData.position);
        if (eventPosition != Vector3.zero)
        {
            // Derive the target display from the screen position
            // We support multiple display and display identification based on event position.
            int eventDisplayIndex = (int)eventPosition.z;

            // Discard events that do not belong to the current targetDisplay
            // Discard events that are not part of this display so the user does not interact with multiple displays at once.
            if (eventDisplayIndex != displayIndex)
                return;
        }
        else
        {
            // The multiple display system is not supported on all platforms, when it is not supported the returned position
            // will be all zeros so when the returned index is 0 we will default to the event data to be safe.
            eventPosition = eventData.position;

            // We dont really know in which display the event occured. We will process the event assuming it occured in our display.
        }

        // Convert to viewport coordinates
        // Convert to view space
        Vector2 pos;
        if (currentEventCamera == null)
        {
            // Multiple display support only when not the main display. For display 0 the reported
            // resolution is always the desktops resolution since its part of the display API,
            // so we use the standard none multiple display method. (case 741751)
            float w = Screen.width;
            float h = Screen.height;
            if (displayIndex > 0 && displayIndex < Display.displays.Length)
            {
                w = Display.displays[displayIndex].systemWidth;
                h = Display.displays[displayIndex].systemHeight;
            }
            pos = new Vector2(eventPosition.x / w, eventPosition.y / h);
        }
        else
            pos = currentEventCamera.ScreenToViewportPoint(eventPosition);

        // Discard positions outside the viewport
        // If it's outside the camera's viewport, do nothing
        if (pos.x < 0f || pos.x > 1f || pos.y < 0f || pos.y > 1f)
            return;

        float hitDistance = float.MaxValue;

        // Build the ray
        Ray ray = new Ray();

        // Build it from the camera when there is one
        if (currentEventCamera != null)
            ray = currentEventCamera.ScreenPointToRay(eventPosition);

        // Blocking by 2D and 3D objects: if a hit distance is recorded here, graphics at or beyond it are blocked
        if (canvas.renderMode != RenderMode.ScreenSpaceOverlay && blockingObjects != BlockingObjects.None)
        {
            float distanceToClipPlane = 100.0f;

            if (currentEventCamera != null)
            {
                float projectionDirection = ray.direction.z;
                distanceToClipPlane = Mathf.Approximately(0.0f, projectionDirection)
                    ? Mathf.Infinity
                    : Mathf.Abs((currentEventCamera.farClipPlane - currentEventCamera.nearClipPlane) / projectionDirection);
            }

            // Call the 3D physics module's raycast interface, obtained through reflection
            if (blockingObjects == BlockingObjects.ThreeD || blockingObjects == BlockingObjects.All)
            {
                if (ReflectionMethodsCache.Singleton.raycast3D != null)
                {
                    var hits = ReflectionMethodsCache.Singleton.raycast3DAll(ray, distanceToClipPlane, (int)m_BlockingMask);
                    if (hits.Length > 0)
                        hitDistance = hits[0].distance;
                }
            }

            // Call the 2D physics module's raycast interface, obtained through reflection
            if (blockingObjects == BlockingObjects.TwoD || blockingObjects == BlockingObjects.All)
            {
                if (ReflectionMethodsCache.Singleton.raycast2D != null)
                {
                    var hits = ReflectionMethodsCache.Singleton.getRayIntersectionAll(ray, distanceToClipPlane, (int)m_BlockingMask);
                    if (hits.Length > 0)
                        hitDistance = hits[0].distance;
                }
            }
        }

        // Collect every object the ray passes through
        m_RaycastResults.Clear();
        Raycast(canvas, currentEventCamera, eventPosition, canvasGraphics, m_RaycastResults);

        int totalCount = m_RaycastResults.Count;
        for (var index = 0; index < totalCount; index++)
        {
            var go = m_RaycastResults[index].gameObject;
            bool appendGraphic = true;

            // Use a dot product to decide whether a back-facing graphic takes part
            if (ignoreReversedGraphics)
            {
                if (currentEventCamera == null)
                {
                    // If we dont have a camera we know that we should always be facing forward
                    var dir = go.transform.rotation * Vector3.forward;
                    appendGraphic = Vector3.Dot(Vector3.forward, dir) > 0;
                }
                else
                {
                    // If we have a camera compare the direction against the cameras forward.
                    var cameraFoward = currentEventCamera.transform.rotation * Vector3.forward;
                    var dir = go.transform.rotation * Vector3.forward;
                    appendGraphic = Vector3.Dot(cameraFoward, dir) > 0;
                }
            }

            if (appendGraphic)
            {
                float distance = 0;

                if (currentEventCamera == null || canvas.renderMode == RenderMode.ScreenSpaceOverlay)
                    distance = 0;
                else
                {
                    // Discard objects that are behind the camera
                    Transform trans = go.transform;
                    Vector3 transForward = trans.forward;
                    // http://geomalgorithms.com/a06-_intersect-2.html
                    distance = (Vector3.Dot(transForward, trans.position - currentEventCamera.transform.position) / Vector3.Dot(transForward, ray.direction));

                    // Check to see if the go is behind the camera.
                    if (distance < 0)
                        continue;
                }

                // Discard the object if it lies at or beyond the blocking hit
                if (distance >= hitDistance)
                    continue;

                // Package the raycast result
                var castResult = new RaycastResult
                {
                    gameObject = go,
                    module = this,
                    distance = distance,
                    screenPosition = eventPosition,
                    index = resultAppendList.Count,
                    depth = m_RaycastResults[index].depth,
                    sortingLayer = canvas.sortingLayerID,
                    sortingOrder = canvas.sortingOrder
                };
                resultAppendList.Add(castResult);
            }
        }
    }

Graphic itself will be covered in a later article.
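The distance computed near the end of `Raycast` is a ray-plane intersection: with plane normal n (the graphic's forward axis), a point P on the plane (the graphic's position), ray origin O (the camera position) and ray direction d, the distance along the ray is dot(n, P - O) / dot(n, d). The standalone C++ sketch below models that formula and the two checks that follow it. `Vec3`, `DistanceToPlane`, and `KeepGraphic` are hypothetical names, and the guard against a ray parallel to the plane is an addition of this sketch that the quoted code does not have.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Minimal 3D vector; a hypothetical stand-in for UnityEngine.Vector3.
struct Vec3 {
    float x, y, z;
};

inline Vec3 Sub(const Vec3& a, const Vec3& b) {
    return Vec3{a.x - b.x, a.y - b.y, a.z - b.z};
}

inline float Dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Distance along the ray at which it meets the plane through planePoint
// with the given normal. A negative result means the plane is behind the
// ray origin. The parallel-ray guard is an assumption of this sketch.
inline float DistanceToPlane(const Vec3& planeNormal, const Vec3& planePoint,
                             const Vec3& rayOrigin, const Vec3& rayDirection) {
    const float denominator = Dot(planeNormal, rayDirection);
    if (std::fabs(denominator) < 1e-6f)
        return std::numeric_limits<float>::infinity();
    return Dot(planeNormal, Sub(planePoint, rayOrigin)) / denominator;
}

// Mirrors the two rejections in the article's loop: graphics behind the
// camera, and graphics at or beyond the nearest blocking collider.
inline bool KeepGraphic(float distance, float blockingHitDistance) {
    if (distance < 0.0f)
        return false;
    return distance < blockingHitDistance;
}
```

When no blocking collider was hit, the blocking distance stays at the largest float value, so every graphic in front of the camera passes the second check.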

PhysicsRaycaster

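When we want to add events to 3D objects, a PhysicsRaycaster component must exist in the scene.

PhysicsRaycaster relies on a camera for its raycasting. Apart from the raycasting itself, it is largely similar to GraphicRaycaster.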

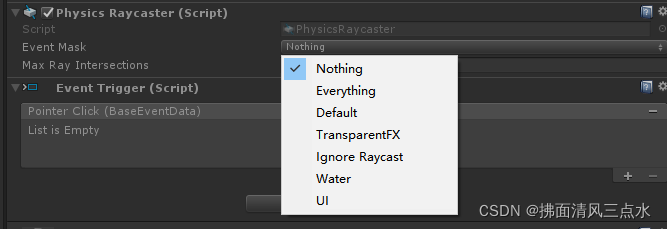

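Inspector properties

- Event Mask: an ordinary layer mask, like the culling mask on the camera. It determines which layers' objects are tested, and it is combined with the camera's culling mask by bitwise AND, so an object must also be "seen" by the camera to take part. 0 means "Nothing" and -1 means "Everything".

- Max Ray Intersections: the maximum number of ray hits, that is, how many objects a single ray can report as hit. The default is 0, which means unlimited; this variant has to allocate extra memory (on the unmanaged heap in the C++ layer, because the C# layer cannot know the count in advance), whereas other values need no extra allocation beyond the array on the managed heap. The value must not be negative, because it is used as an array size and a negative size throws an error.

Below is the property code; how the properties are used is shown in the raycasting section that follows.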
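The bitwise AND between the Event Mask and the camera's culling mask can be illustrated with plain integers. The following is a standalone C++ sketch under stated assumptions: masks are modeled as unsigned 32-bit values (so "Everything", which is -1 as a signed int, becomes 0xFFFFFFFF), and `LayerBit`, `FinalEventMask`, and `LayerIsTested` are hypothetical helper names.

```cpp
#include <cassert>
#include <cstdint>

// All 32 layer bits set; the same bit pattern as -1 in a signed int.
constexpr std::uint32_t kEverything = 0xFFFFFFFFu;

// Bit for a single layer index in the range 0..31.
constexpr std::uint32_t LayerBit(int layer) {
    return 1u << layer;
}

// Models finalEventMask: with a camera, a layer must be enabled in both
// masks; without a camera, the "everything" default is returned.
constexpr std::uint32_t FinalEventMask(bool hasCamera,
                                       std::uint32_t cameraCullingMask,
                                       std::uint32_t eventMask) {
    return hasCamera ? (cameraCullingMask & eventMask) : kEverything;
}

// True when objects on the given layer take part in the raycast.
constexpr bool LayerIsTested(std::uint32_t finalMask, int layer) {
    return (finalMask & LayerBit(layer)) != 0u;
}
```

For example, if the camera renders layers 0 and 5 while the Event Mask selects layers 5 and 8, only layer 5 survives the AND, so only objects on layer 5 can be hit.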

    [AddComponentMenu("Event/Physics Raycaster")]
    [RequireComponent(typeof(Camera))]
    public class PhysicsRaycaster : BaseRaycaster
    {
        /// Default value of the EventMask
        protected const int kNoEventMaskSet = -1;

        [SerializeField] protected LayerMask m_EventMask = kNoEventMaskSet;

        /// Maximum number of ray hits. 0 means unlimited and allocates on the unmanaged heap (C++);
        /// any other positive value allocates on the managed heap (C#)
        [SerializeField] protected int m_MaxRayIntersections = 0;
        protected int m_LastMaxRayIntersections = 0;

        /// Hit results
        RaycastHit[] m_Hits;

        /// The EventMask after a bitwise AND with the camera's culling mask
        public int finalEventMask
        {
            get { return (eventCamera != null) ? eventCamera.cullingMask & m_EventMask : kNoEventMaskSet; }
        }

        /// The EventMask property
        public LayerMask eventMask
        {
            get { return m_EventMask; }
            set { m_EventMask = value; }
        }

        /// The maxRayIntersections property
        public int maxRayIntersections
        {
            get { return m_MaxRayIntersections; }
            set { m_MaxRayIntersections = value; }
        }

        /// The camera. It supplies the mask that selects the objects to test, casts the ray,
        /// and is used to compute the distance to the clip plane
        protected Camera m_EventCamera;
        public override Camera eventCamera
        {
            get
            {
                if (m_EventCamera == null)
                    m_EventCamera = GetComponent<Camera>();
                return m_EventCamera ?? Camera.main;
            }
        }
    }

Raycast detection

The general idea is that the camera casts a ray and the distance to the clip plane is computed, and both are handed to the physics module (Physics) to perform the raycast.

Depending on the maximum number of ray hits, a different physics interface is called to return the hit results.

Below is the relevant code, starting with the C# part:

    // Build the ray and compute the distance. Note that the camera matters a great deal:
    // the hit result may differ from one camera's viewpoint to another
    protected void ComputeRayAndDistance(PointerEventData eventData, out Ray ray, out float distanceToClipPlane)
    {
        ray = eventCamera.ScreenPointToRay(eventData.position);
        // compensate far plane distance - see MouseEvents.cs
        float projectionDirection = ray.direction.z;

        // If the z component of the ray direction is approximately 0, the distance to the clip plane is treated as infinite
        distanceToClipPlane = Mathf.Approximately(0.0f, projectionDirection)
            ? Mathf.Infinity
            : Mathf.Abs((eventCamera.farClipPlane - eventCamera.nearClipPlane) / projectionDirection);
    }

    public override void Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList)
    {
        // Discard positions outside the camera's view rect
        // Cull ray casts that are outside of the view rect. (case 636595)
        if (eventCamera == null || !eventCamera.pixelRect.Contains(eventData.position))
            return;

        Ray ray;
        float distanceToClipPlane;
        ComputeRayAndDistance(eventData, out ray, out distanceToClipPlane);

        int hitCount = 0;

        // ====================================================
        // -- Call a different physics interface depending on the maximum number of ray hits
        if (m_MaxRayIntersections == 0)
        {   // 0 means an unlimited number of hit objects is accepted
            // Uses the physics module's interface that tests all objects
            // Backed by PhysicsManager.RaycastAll
            if (ReflectionMethodsCache.Singleton.raycast3DAll == null)
                return;

            // Returns the hit results
            m_Hits = ReflectionMethodsCache.Singleton.raycast3DAll(ray, distanceToClipPlane, finalEventMask);
            hitCount = m_Hits.Length;
        }
        else
        {   // Non-zero means a limited number of hit objects is accepted
            // Uses the physics module's non-allocating interface
            // Backed by PhysicsManager.Raycast
            if (ReflectionMethodsCache.Singleton.getRaycastNonAlloc == null)
                return;

            // Limited hits: the result array is allocated up front at the maximum size,
            // and is not reallocated when the size is unchanged between calls
            if (m_LastMaxRayIntersections != m_MaxRayIntersections)
            {
                m_Hits = new RaycastHit[m_MaxRayIntersections];
                m_LastMaxRayIntersections = m_MaxRayIntersections;
            }

            // Returns the actual number of hits
            hitCount = ReflectionMethodsCache.Singleton.getRaycastNonAlloc(ray, m_Hits, distanceToClipPlane, finalEventMask);
        }

        // ====================================================
        // Sort by distance, smallest first (nearest to farthest)
        if (hitCount > 1)
            System.Array.Sort(m_Hits, (r1, r2) => r1.distance.CompareTo(r2.distance));

        // Return the results
        if (hitCount != 0)
        {
            for (int b = 0, bmax = hitCount; b < bmax; ++b)
            {
                var result = new RaycastResult
                {
                    gameObject = m_Hits[b].collider.gameObject,
                    module = this,
                    distance = m_Hits[b].distance,
                    worldPosition = m_Hits[b].point,
                    worldNormal = m_Hits[b].normal,
                    screenPosition = eventData.position,
                    index = resultAppendList.Count,
                    sortingLayer = 0,
                    sortingOrder = 0
                };
                resultAppendList.Add(result);
            }
        }
    }

C++ part (for copyright reasons, only part of the code is shown):
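The clip-plane distance in `ComputeRayAndDistance` can be checked with a few numbers. The sketch below is a standalone C++ model of that one expression: `DistanceToClipPlane` is a hypothetical function name, and the fixed `1e-6f` threshold is an assumption standing in for `Mathf.Approximately`, whose exact tolerance is not reproduced here.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Length a ray must travel to cover the depth between the near and far
// clip planes, given the z component of its (unit) direction.
// A ray with almost no z component never gets there, so the distance is
// reported as infinite. The 1e-6f threshold is an assumption of this sketch.
inline float DistanceToClipPlane(float nearClipPlane, float farClipPlane,
                                 float rayDirectionZ) {
    if (std::fabs(rayDirectionZ) < 1e-6f)
        return std::numeric_limits<float>::infinity();
    return std::fabs((farClipPlane - nearClipPlane) / rayDirectionZ);
}
```

In the simple case of a camera looking straight along the z axis, the central ray has a z component of 1 and travels exactly `far - near`, while a ray toward the edge of the view has a smaller z component and therefore needs a longer distance to reach the same depth.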

    // Unlimited version
    const PhysicsManager::RaycastHits& PhysicsManager::RaycastAll (const Ray& ray, float distance, int mask)
    {
        // ....
        // A static array is created; it lives outside the managed heap and is never released
        static vector<RaycastHit> hits;
        // ....
        RaycastCollector collector;
        collector.hits = &hits;
        GetDynamicsScene ().raycastAllShapes ((NxRay&)ray, collector, NX_ALL_SHAPES, mask, distance);
        return hits;
    }

    // Limited version (I could not find the implementation; this is my own guess)
    // outHits points to a caller-provided buffer with room for outHitsSize entries
    int PhysicsManager::Raycast (const Ray& ray, RaycastHit* outHits, int outHitsSize, float distance, int mask)
    {
        // ....
        vector<RaycastHit> hits;
        RaycastCollector collector;
        collector.hits = &hits;
        GetDynamicsScene().raycastAllShapes((NxRay&)ray, collector, NX_ALL_SHAPES, mask, distance);

        int resultCount = hits.size();
        const int allowedResultCount = std::min(resultCount, outHitsSize);
        for (int index = 0; index < allowedResultCount; ++index)
            *(outHits++) = hits[index];

        return allowedResultCount;
    }
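The limited path combines two ideas: the managed side keeps one result buffer and only resizes it when the maximum changes, and the native side copies at most that many hits into it. The standalone C++ sketch below models both in one small class. It is an illustration only: `Hit`, `HitBuffer`, and its members are hypothetical, and the maximum is assumed to be positive, as the article requires.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a raycast hit; only the distance is modeled.
struct Hit {
    float distance;
};

// Reusable result buffer: resized only when the requested maximum
// changes, and filled with at most that many hits.
class HitBuffer {
public:
    // Copies up to maxHits entries from allHits and returns how many were
    // written. Assumes maxHits > 0.
    int Fill(const std::vector<Hit>& allHits, int maxHits) {
        if (maxHits != lastMaxHits_) {
            buffer_.assign(static_cast<std::size_t>(maxHits), Hit{});
            lastMaxHits_ = maxHits;
            ++resizeCount_;
        }
        const int count = std::min(static_cast<int>(allHits.size()), maxHits);
        std::copy_n(allHits.begin(), count, buffer_.begin());
        return count;
    }

    // Number of times the buffer was resized, for inspection.
    int resizeCount() const { return resizeCount_; }

    const std::vector<Hit>& hits() const { return buffer_; }

private:
    std::vector<Hit> buffer_;
    int lastMaxHits_ = 0;
    int resizeCount_ = 0;
};
```

Because the buffer can be larger than the number of hits actually written, a caller should read only the first `count` entries returned by `Fill`; the rest may be left over from an earlier call.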

Physics2DRaycaster

Physics2DRaycaster inherits from PhysicsRaycaster and is essentially the same; the only difference is that it uses the 2D physics module. Only the key code is shown here.

    [AddComponentMenu("Event/Physics 2D Raycaster")]
    [RequireComponent(typeof(Camera))]
    public class Physics2DRaycaster : PhysicsRaycaster
    {
        public override void Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList)
        {
            // ...
            if (maxRayIntersections == 0)
            {
                if (ReflectionMethodsCache.Singleton.getRayIntersectionAll == null)
                    return;

                // A different interface is used here
                m_Hits = ReflectionMethodsCache.Singleton.getRayIntersectionAll(ray, distanceToClipPlane, finalEventMask);
                hitCount = m_Hits.Length;
            }
            else
            {
                if (ReflectionMethodsCache.Singleton.getRayIntersectionAllNonAlloc == null)
                    return;

                if (m_LastMaxRayIntersections != m_MaxRayIntersections)
                {
                    m_Hits = new RaycastHit2D[maxRayIntersections];
                    m_LastMaxRayIntersections = m_MaxRayIntersections;
                }

                // A different interface is used here
                hitCount = ReflectionMethodsCache.Singleton.getRayIntersectionAllNonAlloc(ray, m_Hits, distanceToClipPlane, finalEventMask);
            }
            // ...
        }
    }

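Summary

Today we covered Unity's raycasters: GraphicRaycaster, PhysicsRaycaster, and Physics2DRaycaster.

It is worth noting that the two physics-related raycasters require the target object to carry the corresponding collider (Collider or Collider2D). No parent-child relationship is needed; as long as the object is in the scene and seen by the camera, it can be hit. GraphicRaycaster, by contrast, raycasts mainly through the Canvas rather than the camera, and the target object must be the Canvas or one of its children.

During the analysis I also found that Unity's 3D physics engine is "NVIDIA PhysX" and its 2D physics engine is "Box2D"; I had always assumed Unity wrote its own.

Perhaps my recent articles go fairly deep, because I have noticed that many readers do not much like reading them. I used to think that people who started out with Unity do not have a very strong drive to explore the lower levels, and it seems that impression was right.

Speaking for myself, I still recommend that, while happily building games with Unity, you set aside some time to study the lower levels. Understanding the principles often saves us detours and, in the end, lets us go further.

Next comes the last part of the event system, which is also its core: the input modules. I will cover them in detail over several articles.

In any case, take what you need. That is all for today; I hope it is of some help.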